The approach to managing persistent data has been a key design decision in every software project we’ve worked on. Given that persistent data isn’t a new or unusual requirement for Java applications, you’d expect to be able to make a simple choice among similar, well-established persistence solutions. Think of web application frameworks (Struts versus WebWork), GUI component frameworks (Swing versus SWT), or template engines (JSP versus Velocity). Each of the competing solutions has various advantages and disadvantages, but they all share the same scope and overall approach. Unfortunately, this isn’t yet the case with persistence technologies, where we see some wildly differing solutions to the same problem.

For several years, persistence has been a hot topic of debate in the Java community. Many developers don’t even agree on the scope of the problem. Is persistence a problem that is already solved by relational technology and extensions such as stored procedures, or is it a more pervasive problem that must be addressed by special Java component models, such as EJB entity beans? Should we hand-code even the most primitive CRUD (create, read, update, delete) operations in SQL and JDBC, or should this work be automated? How do we achieve portability if every database management system has its own SQL dialect? Should we abandon SQL completely and adopt a different database technology, such as object database systems? Debate continues, but a solution called object/relational mapping (ORM) now has wide acceptance. Hibernate is an open source ORM service implementation.

Hibernate is an ambitious project that aims to be a complete solution to the problem of managing persistent data in Java. It mediates the application’s interaction with a relational database, leaving the developer free to concentrate on the business problem at hand. Hibernate is a nonintrusive solution. You aren’t required to follow many Hibernate-specific rules and design patterns when writing your business logic and persistent classes; thus, Hibernate integrates smoothly with most new and existing applications and doesn’t require disruptive changes to the rest of the application.

This topic is about Hibernate. We’ll cover basic and advanced features and describe some ways to develop new applications using Hibernate. Often, these recommendations won’t even be specific to Hibernate. Sometimes they will be our ideas about the best ways to do things when working with persistent data, explained in the context of Hibernate. This topic is also about Java Persistence, a new standard for persistence that is part of the updated EJB 3.0 specification. Hibernate implements Java Persistence and supports all the standardized mappings, queries, and APIs. Before we can get started with Hibernate, however, you need to understand the core problems of object persistence and object/relational mapping. This topic explains why tools like Hibernate and specifications such as Java Persistence and EJB 3.0 are needed.

First, we define persistent data management in the context of object-oriented applications and discuss the relationship of SQL, JDBC, and Java, the underlying technologies and standards that Hibernate is built on. We then discuss the so-called object/relational paradigm mismatch and the generic problems we encounter in object-oriented software development with relational databases. These problems make it clear that we need tools and patterns to minimize the time we have to spend on the persistence-related code of our applications. After we look at alternative tools and persistence mechanisms, you’ll see that ORM is the best available solution for many scenarios. Our discussion of the advantages and drawbacks of ORM will give you the full background to make the best decision when picking a persistence solution for your own project.

We also take a look at the various Hibernate software modules, and how you can combine them to either work with Hibernate only, or with Java Persistence and EJB 3.0-compliant features.

What is persistence?

Almost all applications require persistent data. Persistence is one of the fundamental concepts in application development. If an information system didn’t preserve data when it was powered off, the system would be of little practical use. When we talk about persistence in Java, we’re normally talking about storing data in a relational database using SQL. We’ll start by taking a brief look at the technology and how we use it with Java. Armed with that information, we’ll then continue our discussion of persistence and how it’s implemented in object-oriented applications.

Relational databases

You, like most other developers, have probably worked with a relational database. Most of us use a relational database every day. Relational technology is a known quantity, and this alone is sufficient reason for many organizations to choose it.

But to say only this is to pay less respect than is due. Relational databases are entrenched because they’re an incredibly flexible and robust approach to data management. Due to the complete and consistent theoretical foundation of the relational data model, relational databases can effectively guarantee and protect the integrity of the data, among other desirable characteristics. Some people would even say that the last big invention in computing has been the relational concept for data management as first introduced by E.F. Codd (Codd, 1970) more than three decades ago.

Relational database management systems aren’t specific to Java, nor is a relational database specific to a particular application. This important principle is known as data independence. In other words, and we can’t stress this important fact enough, data lives longer than any application does. Relational technology provides a way of sharing data among different applications, or among different technologies that form parts of the same application (the transactional engine and the reporting engine, for example). Relational technology is a common denominator of many disparate systems and technology platforms. Hence, the relational data model is often the common enterprise-wide representation of business entities.

Relational database management systems have SQL-based application programming interfaces; hence, we call today’s relational database products SQL database management systems or, when we’re talking about particular systems, SQL databases.

Before we go into more detail about the practical aspects of SQL databases, we have to mention an important issue: Although marketed as relational, a database system providing only an SQL data language interface isn’t really relational and in many ways isn’t even close to the original concept. Naturally, this has led to confusion. SQL practitioners blame the relational data model for shortcomings in the SQL language, and relational data management experts blame the SQL standard for being a weak implementation of the relational model and ideals. Application developers are stuck somewhere in the middle, with the burden to deliver something that works. We’ll highlight some important and significant aspects of this issue throughout the topic, but generally we’ll focus on the practical aspects. If you’re interested in more background material, we highly recommend Practical Issues in Database Management: A Reference for the Thinking Practitioner by Fabian Pascal (Pascal, 2000).

Understanding SQL

To use Hibernate effectively, a solid understanding of the relational model and SQL is a prerequisite. You need to understand the relational model and topics such as normalization to guarantee the integrity of your data, and you’ll need to use your knowledge of SQL to tune the performance of your Hibernate application. Hibernate automates many repetitive coding tasks, but your knowledge of persistence technology must extend beyond Hibernate itself if you want to take advantage of the full power of modern SQL databases. Remember that the underlying goal is robust, efficient management of persistent data.

Let’s review some of the SQL terms used in this topic. You use SQL as a data definition language (DDL) to create a database schema with CREATE and ALTER statements. After creating tables (and indexes, sequences, and so on), you use SQL as a data manipulation language (DML) to manipulate and retrieve data. The manipulation operations include insertions, updates, and deletions. You retrieve data by executing queries with restrictions, projections, and join operations (including the Cartesian product). For efficient reporting, you use SQL to group, order, and aggregate data as necessary. You can even nest SQL statements inside each other; this technique uses subselects.

You’ve probably used SQL for many years and are familiar with the basic operations and statements written in this language. Still, we know from our own experience that SQL is sometimes hard to remember, and some terms vary in usage. To understand this topic, we must use the same terms and concepts, so consult an SQL reference if any of the terms we’ve mentioned are new or unclear.

If you need more details, especially about any performance aspects and how SQL is executed, get a copy of the excellent book SQL Tuning by Dan Tow (Tow, 2003). Also read An Introduction to Database Systems by Chris Date (Date, 2003) for the theory, concepts, and ideals of (relational) database systems. The latter book is an excellent reference (it’s big) for all questions you may possibly have about databases and data management.

Although the relational database is one part of ORM, the other part, of course, consists of the objects in your Java application that need to be persisted to and loaded from the database using SQL.

Using SQL in Java

When you work with an SQL database in a Java application, the Java code issues SQL statements to the database via the Java Database Connectivity (JDBC) API. Whether the SQL was written by hand and embedded in the Java code, or generated on the fly by Java code, you use the JDBC API to bind arguments to prepare query parameters, execute the query, scroll through the query result table, retrieve values from the result set, and so on. These are low-level data access tasks; as application developers, we’re more interested in the business problem that requires this data access. What we’d really like to write is code that saves and retrieves objects—the instances of our classes—to and from the database, relieving us of this low-level drudgery.
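The JDBC steps listed above (bind arguments, execute the query, scroll through the result, retrieve values) can be sketched as follows. This is a minimal illustration, not code from any particular application: the class name UserNameQuery and the NAME column are assumptions, and the caller is assumed to supply an open connection.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper; the NAME column is an assumption made for illustration.
class UserNameQuery {

    // The "?" is a placeholder that JDBC binds to an argument before execution.
    static final String FIND_NAMES_SQL =
        "select NAME from USERS where USERNAME like ?";

    // Runs the query on a connection supplied by the caller.
    static List<String> findNames(Connection connection, String pattern)
            throws SQLException {
        List<String> names = new ArrayList<>();
        try (PreparedStatement statement = connection.prepareStatement(FIND_NAMES_SQL)) {
            statement.setString(1, pattern);                  // bind the argument
            try (ResultSet rows = statement.executeQuery()) { // execute the query
                while (rows.next()) {                         // scroll through the result
                    names.add(rows.getString("NAME"));        // retrieve a value
                }
            }
        }
        return names;
    }
}
```

Even this small lookup needs a dozen lines of plumbing before any business logic appears, which is the drudgery the paragraph above refers to.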

Because the data access tasks are often so tedious, we have to ask: Are the relational data model and (especially) SQL the right choices for persistence in object-oriented applications? We answer this question immediately: Yes! There are many reasons why SQL databases dominate the computing industry—relational database management systems are the only proven data management technology, and they’re almost always a requirement in any Java project.

However, for the last 15 years, developers have spoken of a paradigm mismatch. This mismatch explains why so much effort is expended on persistence-related concerns in every enterprise project. The paradigms referred to are object modeling and relational modeling, or perhaps object-oriented programming and SQL.

Let’s begin our exploration of the mismatch problem by asking what persistence means in the context of object-oriented application development. First we’ll widen the simplistic definition of persistence stated at the beginning of this section to a broader, more mature understanding of what is involved in maintaining and using persistent data.

Persistence in object-oriented applications

In an object-oriented application, persistence allows an object to outlive the process that created it. The state of the object can be stored to disk, and an object with the same state can be re-created at some point in the future.

This isn’t limited to single objects—entire networks of interconnected objects can be made persistent and later re-created in a new process. Most objects aren’t persistent; a transient object has a limited lifetime that is bounded by the life of the process that instantiated it. Almost all Java applications contain a mix of persistent and transient objects; hence, we need a subsystem that manages our persistent data.

Modern relational databases provide a structured representation of persistent data, enabling the manipulating, sorting, searching, and aggregating of data. Database management systems are responsible for managing concurrency and data integrity; they’re responsible for sharing data between multiple users and multiple applications. They guarantee the integrity of the data through integrity rules that have been implemented with constraints. A database management system provides data-level security. When we discuss persistence in this topic, we’re thinking of all these things:

■ Storage, organization, and retrieval of structured data

■ Concurrency and data integrity

■ Data sharing

And, in particular, we’re thinking of these problems in the context of an object-oriented application that uses a domain model.

An application with a domain model doesn’t work directly with the tabular representation of the business entities; the application has its own object-oriented model of the business entities. If the database of an online auction system has ITEM and BID tables, for example, the Java application defines Item and Bid classes.

Then, instead of directly working with the rows and columns of an SQL result set, the business logic interacts with this object-oriented domain model and its runtime realization as a network of interconnected objects. Each instance of a Bid has a reference to an auction Item, and each Item may have a collection of references to Bid instances. The business logic isn’t executed in the database (as an SQL stored procedure); it’s implemented in Java in the application tier. This allows business logic to make use of sophisticated object-oriented concepts such as inheritance and polymorphism. For example, we could use well-known design patterns such as Strategy, Mediator, and Composite (Gamma and others, 1995), all of which depend on polymorphic method calls.

Now a caveat: Not all Java applications are designed this way, nor should they be. Simple applications may be much better off without a domain model. Complex applications may have to reuse existing stored procedures. SQL and the JDBC API are perfectly serviceable for dealing with pure tabular data, and the JDBC RowSet makes CRUD operations even easier. Working with a tabular representation of persistent data is straightforward and well understood.

However, in the case of applications with nontrivial business logic, the domain model approach helps to improve code reuse and maintainability significantly. In practice, both strategies are common and needed. Many applications need to execute procedures that modify large sets of data, close to the data. At the same time, other application modules could benefit from an object-oriented domain model that executes regular online transaction processing logic in the application tier. An efficient way to bring persistent data closer to the application code is required.

If we consider SQL and relational databases again, we finally observe the mismatch between the two paradigms. SQL operations such as projection and join always result in a tabular representation of the resulting data. (This property is known as closure: the result of an operation on relations is always a relation.) This is quite different from the network of interconnected objects used to execute the business logic in a Java application. These are fundamentally different models, not just different ways of visualizing the same model.

With this realization, you can begin to see the problems—some well understood and some less well understood—that must be solved by an application that combines both data representations: an object-oriented domain model and a persistent relational model. Let’s take a closer look at this so-called paradigm mismatch.

The paradigm mismatch

The object/relational paradigm mismatch can be broken into several parts, which we’ll examine one at a time. Let’s start our exploration with a simple example that is problem free. As we build on it, you’ll begin to see the mismatch appear.

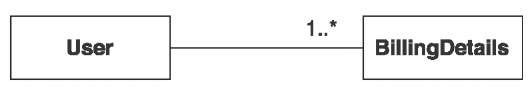

Suppose you have to design and implement an online e-commerce application. In this application, you need a class to represent information about a user of the system, and another class to represent information about the user’s billing details, as shown in figure 1.1.

In this diagram, you can see that a User has many BillingDetails. You can navigate the relationship between the classes in both directions. The classes representing these entities may be extremely simple:
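A minimal sketch of such classes follows. Apart from username, the address String, and accountNumber, which appear elsewhere in this text, the field names are assumptions made for illustration.

```java
import java.util.HashSet;
import java.util.Set;

// State only: property accessors and business methods are left out.
class User {
    private String username;
    private String name;      // assumed field
    private String address;   // a plain String for now
    // A User has many BillingDetails.
    private Set<BillingDetails> billingDetails = new HashSet<>();
}

class BillingDetails {
    private String accountNumber;
    private String accountName;   // assumed field
    private String accountType;   // assumed field
    // Back-reference, so the relationship can be navigated in both directions.
    private User user;
}
```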

Figure 1.1 A simple UML class diagram of the User and BillingDetails entities

Note that we’re only interested in the state of the entities with regard to persistence, so we’ve omitted the implementation of property accessors and business methods (such as getUsername() or billAuction()).

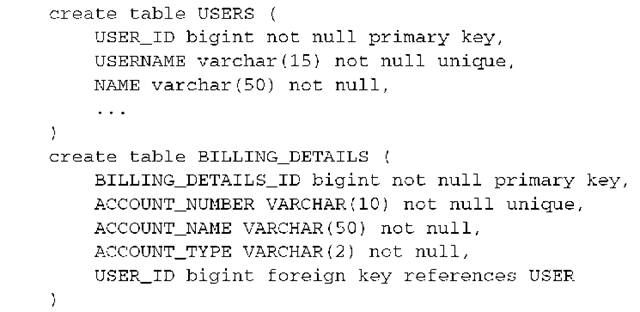

It’s easy to come up with a good SQL schema design for this case:
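One possible schema is sketched here, held in Java string constants so that it can be run through JDBC. Only the USERS and BILLING_DETAILS tables and the USERNAME key come from the text; the class name SimpleSchema, the remaining columns, and all column sizes are assumptions.

```java
import java.sql.Connection;
import java.sql.SQLException;
import java.sql.Statement;

// Hypothetical holder for the DDL; column names and sizes are illustrative.
class SimpleSchema {

    static final String CREATE_USERS =
        "create table USERS ("
        + " USERNAME varchar(15) not null primary key,"
        + " NAME varchar(50) not null,"
        + " ADDRESS varchar(100)"
        + ")";

    // USERNAME is the foreign key that points back to the owning user.
    static final String CREATE_BILLING_DETAILS =
        "create table BILLING_DETAILS ("
        + " ACCOUNT_NUMBER varchar(10) not null primary key,"
        + " ACCOUNT_NAME varchar(50) not null,"
        + " ACCOUNT_TYPE varchar(2) not null,"
        + " USERNAME varchar(15) references USERS (USERNAME)"
        + ")";

    // Executes both statements on a connection supplied by the caller.
    static void create(Connection connection) throws SQLException {
        try (Statement statement = connection.createStatement()) {
            statement.executeUpdate(CREATE_USERS);
            statement.executeUpdate(CREATE_BILLING_DETAILS);
        }
    }
}
```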

The relationship between the two entities is represented as the foreign key, USERNAME, in BILLING_DETAILS. For this simple domain model, the object/relational mismatch is barely in evidence; it’s straightforward to write JDBC code to insert, update, and delete information about users and billing details.

Now, let’s see what happens when we consider something a little more realistic. The paradigm mismatch will be visible when we add more entities and entity relationships to our application.

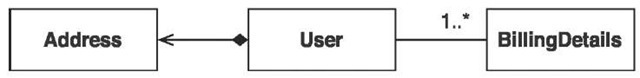

The most glaringly obvious problem with our current implementation is that we’ve designed an address as a simple String value. In most systems, it’s necessary to store street, city, state, country, and ZIP code information separately. Of course, we could add these properties directly to the User class, but because it’s highly likely that other classes in the system will also carry address information, it makes more sense to create a separate Address class. The updated model is shown in figure 1.2.

Should we also add an ADDRESS table? Not necessarily. It’s common to keep address information in the USERS table, in individual columns. This design is likely to perform better, because a table join isn’t needed if you want to retrieve the user and address in a single query. The nicest solution may even be to create a user-defined SQL datatype to represent addresses, and to use a single column of that new type in the USERS table instead of several new columns.

Basically, we have the choice of adding either several columns or a single column (of a new SQL datatype). This is clearly a problem of granularity.

Figure 1.2 The User has an Address

The problem of granularity

Granularity refers to the relative size of the types you’re working with.

Let’s return to our example. Adding a new datatype to our database catalog, to store Address Java instances in a single column, sounds like the best approach. A new Address type (class) in Java and a new ADDRESS SQL datatype should guarantee interoperability. However, you’ll find various problems if you check the support for user-defined datatypes (UDT) in today’s SQL database management systems.

UDT support is one of a number of so-called object-relational extensions to traditional SQL. This term alone is confusing, because it means that the database management system has (or is supposed to support) a sophisticated datatype system, something you take for granted if somebody sells you a system that can handle data in a relational fashion. Unfortunately, UDT support is a somewhat obscure feature of most SQL database management systems and certainly isn’t portable between different systems. Furthermore, the SQL standard supports user-defined datatypes, but poorly.

This limitation isn’t the fault of the relational data model. You can consider the failure to standardize such an important piece of functionality as fallout from the object-relational database wars between vendors in the mid-1990s. Today, most developers accept that SQL products have limited type systems—no questions asked. However, even with a sophisticated UDT system in our SQL database management system, we would likely still duplicate the type declarations, writing the new type in Java and again in SQL. Attempts to find a solution for the Java space, such as SQLJ, unfortunately, have not had much success.

For these and whatever other reasons, use of UDTs or Java types inside an SQL database isn’t common practice in the industry at this time, and it’s unlikely that you’ll encounter a legacy schema that makes extensive use of UDTs. We therefore can’t and won’t store instances of our new Address class in a single new column that has the same datatype as the Java layer.

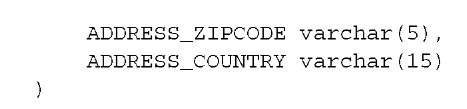

Our pragmatic solution for this problem has several columns of built-in vendor-defined SQL types (such as boolean, numeric, and string datatypes). The USERS table is usually defined as follows:

Classes in our domain model come in a range of different levels of granularity, from coarse-grained entity classes like User, to finer-grained classes like Address, down to simple String-valued properties such as zipcode. In contrast, just two levels of granularity are visible at the level of the SQL database: tables such as USERS, and columns such as ADDRESS_ZIPCODE.
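The difference in granularity can be made concrete with a small sketch: three levels in Java (User, Address, String) are flattened into the two levels of a single USERS row. The method name toUsersRow and the ADDRESS_STREET and ADDRESS_CITY column names are assumptions; only ADDRESS_ZIPCODE is named in the text.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Fine-grained value class: it has no table of its own.
class Address {
    final String street;
    final String city;
    final String zipcode;

    Address(String street, String city, String zipcode) {
        this.street = street;
        this.city = city;
        this.zipcode = zipcode;
    }
}

// Coarse-grained entity class: it corresponds to one row of USERS.
class User {
    final String username;
    final Address address;

    User(String username, Address address) {
        this.username = username;
        this.address = address;
    }

    // Flattens the nested Address into columns of the single USERS row.
    Map<String, Object> toUsersRow() {
        Map<String, Object> row = new LinkedHashMap<>();
        row.put("USERNAME", username);
        row.put("ADDRESS_STREET", address.street);
        row.put("ADDRESS_CITY", address.city);
        row.put("ADDRESS_ZIPCODE", address.zipcode);
        return row;
    }
}
```

The object model keeps Address as a reusable type, while the row carries only prefixed columns; the mapping code is the place where the two representations meet.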

Many simple persistence mechanisms fail to recognize this mismatch and so end up forcing the less flexible SQL representation upon the object model. We’ve seen countless User classes with properties named zipcode!

It turns out that the granularity problem isn’t especially difficult to solve. We probably wouldn’t even discuss it, were it not for the fact that it’s visible in so many existing systems.

A much more difficult and interesting problem arises when we consider domain models that rely on inheritance, a feature of object-oriented design we may use to bill the users of our e-commerce application in new and interesting ways.

The problem of subtypes

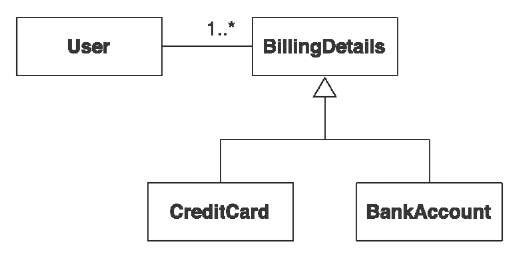

In Java, you implement type inheritance using superclasses and subclasses. To illustrate why this can present a mismatch problem, let’s add to our e-commerce application so that we now can accept not only bank account billing, but also credit and debit cards. The most natural way to reflect this change in the model is to use inheritance for the BillingDetails class.

We may have an abstract BillingDetails superclass, along with several concrete subclasses: CreditCard, BankAccount, and so on. Each of these subclasses defines slightly different data (and completely different functionality that acts on that data). The UML class diagram in figure 1.3 illustrates this model.

SQL should probably include standard support for supertables and subtables. This would effectively allow us to create a table that inherits certain columns from its parent. However, such a feature would be questionable, because it would introduce a new notion: virtual columns in base tables. Traditionally, we expect virtual columns only in virtual tables, which are called views. Furthermore, on a theoretical level, the inheritance we applied in Java is type inheritance. A table isn’t a type, so the notion of supertables and subtables is questionable. In any case, we can take the short route here and observe that SQL database products don’t generally implement type or table inheritance, and if they do implement it, they don’t follow a standard syntax and usually expose you to data integrity problems (limited integrity rules for updatable views).

Figure 1.3 Using inheritance for different billing strategies

But we aren’t finished with inheritance. As soon as we introduce inheritance into the model, we have the possibility of polymorphism.

The User class has an association to the BillingDetails superclass. This is a polymorphic association. At runtime, a User object may reference an instance of any of the subclasses of BillingDetails. Similarly, we want to be able to write polymorphic queries that refer to the BillingDetails class, and have the query return instances of its subclasses.
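A polymorphic association of this kind might look like the following sketch. The owner, number, and account fields and the describe() method are hypothetical; they exist only to show that the User code never needs to know which subclass it holds.

```java
import java.util.ArrayList;
import java.util.List;

// Abstract superclass: state shared by all billing strategies.
abstract class BillingDetails {
    private final String owner;

    BillingDetails(String owner) {
        this.owner = owner;
    }

    String getOwner() {
        return owner;
    }

    // Each subclass supplies its own behavior.
    abstract String describe();
}

class CreditCard extends BillingDetails {
    private final String number;

    CreditCard(String owner, String number) {
        super(owner);
        this.number = number;
    }

    @Override
    String describe() {
        return "credit card " + number;
    }
}

class BankAccount extends BillingDetails {
    private final String account;

    BankAccount(String owner, String account) {
        super(owner);
        this.account = account;
    }

    @Override
    String describe() {
        return "bank account " + account;
    }
}

class User {
    // Polymorphic association: elements may be any BillingDetails subclass.
    private final List<BillingDetails> billingDetails = new ArrayList<>();

    void addBillingDetails(BillingDetails details) {
        billingDetails.add(details);
    }

    // Polymorphic call: the runtime type of each element decides the result.
    List<String> describeBilling() {
        List<String> lines = new ArrayList<>();
        for (BillingDetails details : billingDetails) {
            lines.add(details.describe());
        }
        return lines;
    }
}
```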

SQL databases also lack an obvious way (or at least a standardized way) to represent a polymorphic association. A foreign key constraint refers to exactly one target table; it isn’t straightforward to define a foreign key that refers to multiple tables. We’d have to write a procedural constraint to enforce this kind of integrity rule.

The result of this mismatch of subtypes is that the inheritance structure in your model must be persisted in an SQL database that doesn’t offer an inheritance strategy.

The next aspect of the object/relational mismatch problem is the issue of object identity. You probably noticed that we defined USERNAME as the primary key of our USERS table. Was that a good choice? How do we handle identical objects in Java?

The problem of identity

Although the problem of object identity may not be obvious at first, we’ll encounter it often in our growing and expanding e-commerce system, such as when we need to check whether two objects are identical. There are three ways to tackle this problem: two in the Java world and one in our SQL database. As expected, they work together only with some help.

Java objects define two different notions of sameness:

■ Object identity (roughly equivalent to memory location, checked with a==b)

■ Equality as determined by the implementation of the equals() method (also called equality by value)

On the other hand, the identity of a database row is expressed as the primary key value. It’s common for several nonidentical objects to simultaneously represent the same row of the database, for example, in concurrently running application threads. Furthermore, some subtle difficulties are involved in implementing equals() correctly for a persistent class.
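The three notions can be seen side by side in a short sketch. It assumes, for illustration only, that equals() compares the username, which stands in for the primary key value; a production-quality equals() for a persistent class needs more care than this.

```java
// Two instances of this class may represent the same database row.
class User {
    private final String username; // stands in for the primary key value

    User(String username) {
        this.username = username;
    }

    // Equality by value: same key, same user.
    @Override
    public boolean equals(Object other) {
        if (this == other) {
            return true;
        }
        if (!(other instanceof User)) {
            return false;
        }
        return username.equals(((User) other).username);
    }

    // Kept consistent with equals(), as the Object contract requires.
    @Override
    public int hashCode() {
        return username.hashCode();
    }
}
```

With `User a = new User("johndoe")` and `User b = new User("johndoe")`, the comparison `a == b` is false (two objects in memory) while `a.equals(b)` is true (one row in the database).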

Let’s discuss another problem related to database identity with an example. In our table definition for USERS, we used USERNAME as a primary key. Unfortunately, this decision makes it difficult to change a username; we need to update not only the USERNAME column in USERS, but also the foreign key column in BILLING_DETAILS. To solve this problem, later in the topic we’ll recommend that you use surrogate keys whenever you can’t find a good natural key (we’ll also discuss what makes a key good). A surrogate key column is a primary key column with no meaning to the user; in other words, a key that isn’t presented to the user and is only used for identification of data inside the software system. For example, we may change our table definitions to look like this:
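A sketch of such definitions, again held in Java string constants, could read as follows. The USER_ID and BILLING_DETAILS_ID columns are the surrogate keys; the class name, the other columns, and the column types are assumptions.

```java
// Hypothetical holder for the revised DDL; types and sizes are illustrative.
class SurrogateKeySchema {

    // USERNAME is no longer the key, so it can change without touching other tables.
    static final String CREATE_USERS =
        "create table USERS ("
        + " USER_ID bigint not null primary key,"
        + " USERNAME varchar(15) not null unique,"
        + " NAME varchar(50) not null"
        + ")";

    // The foreign key now refers to the surrogate key of USERS.
    static final String CREATE_BILLING_DETAILS =
        "create table BILLING_DETAILS ("
        + " BILLING_DETAILS_ID bigint not null primary key,"
        + " ACCOUNT_NUMBER varchar(10) not null unique,"
        + " ACCOUNT_NAME varchar(50) not null,"
        + " ACCOUNT_TYPE varchar(2) not null,"
        + " USER_ID bigint references USERS (USER_ID)"
        + ")";
}
```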

The USER_ID and BILLING_DETAILS_ID columns contain system-generated values. These columns were introduced purely for the benefit of the data model, so how (if at all) should they be represented in the domain model?

In the context of persistence, identity is closely related to how the system handles caching and transactions. Different persistence solutions have chosen different strategies, and this has been an area of confusion.

So far, the skeleton e-commerce application we’ve designed has identified the mismatch problems with mapping granularity, subtypes, and object identity. We’re almost ready to move on to other parts of the application, but first we need to discuss the important concept of associations: how the relationships between our classes are mapped and handled. Is the foreign key in the database all you need?

Problems relating to associations

In our domain model, associations represent the relationships between entities. The User, Address, and BillingDetails classes are all associated; but unlike Address, BillingDetails stands on its own. BillingDetails instances are stored in their own table. Association mapping and the management of entity associations are central concepts in any object persistence solution.

Object-oriented languages represent associations using object references; but in the relational world, an association is represented as a foreign key column, with copies of key values (and a constraint to guarantee integrity). There are substantial differences between the two representations.

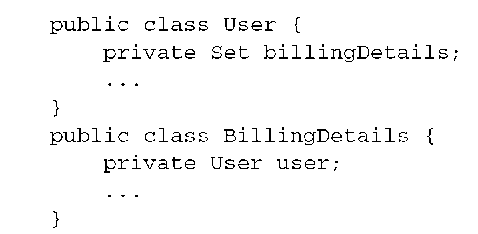

Object references are inherently directional; the association is from one object to the other. They’re pointers. If an association between objects should be navigable in both directions, you must define the association twice, once in each of the associated classes. You’ve already seen this in the domain model classes:
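In a sketch, the two halves of the association look like this. The addBillingDetails() helper is a hypothetical convenience method that keeps both references in step; nothing in the language does this automatically.

```java
import java.util.HashSet;
import java.util.Set;

class User {
    // One direction: from a User to its BillingDetails.
    private final Set<BillingDetails> billingDetails = new HashSet<>();

    Set<BillingDetails> getBillingDetails() {
        return billingDetails;
    }

    // Sets both references so the association can be navigated either way.
    void addBillingDetails(BillingDetails details) {
        billingDetails.add(details);
        details.setUser(this);
    }
}

class BillingDetails {
    // The other direction: from BillingDetails back to its User.
    private User user;

    User getUser() {
        return user;
    }

    void setUser(User user) {
        this.user = user;
    }
}
```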

On the other hand, foreign key associations aren’t by nature directional. Navigation has no meaning for a relational data model because you can create arbitrary data associations with table joins and projection. The challenge is to bridge a completely open data model, which is independent of the application that works with the data, to an application-dependent navigational model, a constrained view of the associations needed by this particular application.

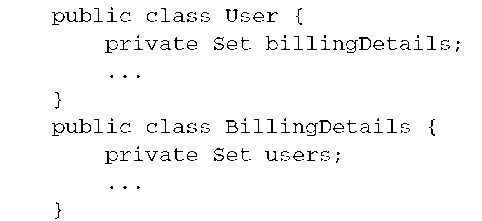

It isn’t possible to determine the multiplicity of a unidirectional association by looking only at the Java classes. Java associations can have many-to-many multiplicity. For example, the classes could look like this:
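A many-to-many variant might be sketched as follows, with a collection on each side. The share() helper is hypothetical and only keeps the two collections consistent.

```java
import java.util.HashSet;
import java.util.Set;

class User {
    private final Set<BillingDetails> billingDetails = new HashSet<>();

    Set<BillingDetails> getBillingDetails() {
        return billingDetails;
    }

    // Links both sides so that navigation works in either direction.
    void share(BillingDetails details) {
        billingDetails.add(details);
        details.getUsers().add(this);
    }
}

class BillingDetails {
    // A collection here too: one BillingDetails may belong to several users.
    private final Set<User> users = new HashSet<>();

    Set<User> getUsers() {
        return users;
    }
}
```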

When each class holds only a single reference to the other, the association looks one-to-one.

Table associations, on the other hand, are always one-to-many or one-to-one. You can see the multiplicity immediately by looking at the foreign key definition. The following is a foreign key declaration on the BILLING_DETAILS table for a one-to-many association (or, if read in the other direction, a many-to-one association):

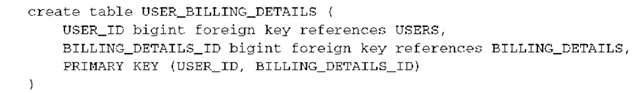

If you wish to represent a many-to-many association in a relational database, you must introduce a new table, called a link table. This table doesn’t appear anywhere in the domain model. For our example, if we consider the relationship between the user and the billing information to be many-to-many, the link table is defined as follows:
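A link table of this kind could be sketched as below. The table name USER_BILLING_DETAILS and the column types are assumptions; the two key columns follow the surrogate keys introduced earlier.

```java
// Hypothetical holder for the link table DDL.
class LinkTableSchema {

    // Each row pairs one user with one billing details record;
    // the composite primary key prevents duplicate pairs.
    static final String CREATE_USER_BILLING_DETAILS =
        "create table USER_BILLING_DETAILS ("
        + " USER_ID bigint not null references USERS (USER_ID),"
        + " BILLING_DETAILS_ID bigint not null"
        + " references BILLING_DETAILS (BILLING_DETAILS_ID),"
        + " primary key (USER_ID, BILLING_DETAILS_ID)"
        + ")";
}
```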

So far, the issues we’ve considered are mainly structural. We can see them by considering a purely static view of the system. Perhaps the most difficult problem in object persistence is a dynamic problem. It concerns associations, and we’ve already hinted at it when we drew a distinction between object network navigation and table joins in section 1.1.4, “Persistence in object-oriented applications.” Let’s explore this significant mismatch problem in more depth.

The problem of data navigation

There is a fundamental difference in the way you access data in Java and in a relational database. In Java, when you access a user’s billing information, you call aUser.getBillingDetails().getAccountNumber() or something similar. This is the most natural way to access object-oriented data, and it’s often described as walking the object network. You navigate from one object to another, following pointers between instances. Unfortunately, this isn’t an efficient way to retrieve data from an SQL database.

The single most important thing you can do to improve the performance of data access code is to minimize the number of requests to the database. The most obvious way to do this is to minimize the number of SQL queries. (Of course, there are other more sophisticated ways that follow as a second step.)

Therefore, efficient access to relational data with SQL usually requires joins between the tables of interest. The number of tables included in the join when retrieving data determines the depth of the object network you can navigate in memory. For example, if you need to retrieve a User and aren’t interested in the user’s billing information, you can write this simple query:

On the other hand, if you need to retrieve a User and then subsequently visit each of the associated BillingDetails instances (let’s say, to list all the user’s credit cards), you write a different query:
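The two cases, a user alone and a user together with billing details, might be expressed by queries like the ones below. They are sketches held in Java constants for use with a JDBC PreparedStatement; the class name and the aliases u and bd are assumptions.

```java
// Hypothetical holder for the two queries; "?" is bound to a USER_ID value.
class UserQueries {

    // Only the USERS table is read: no billing details can be navigated afterward.
    static final String USER_ONLY =
        "select * from USERS u where u.USER_ID = ?";

    // The join fetches the user and all associated billing details in one request.
    static final String USER_WITH_BILLING_DETAILS =
        "select * from USERS u"
        + " left outer join BILLING_DETAILS bd on bd.USER_ID = u.USER_ID"
        + " where u.USER_ID = ?";
}
```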

As you can see, to efficiently use joins you need to know what portion of the object network you plan to access when you retrieve the initial User—this is before you start navigating the object network!

On the other hand, any object persistence solution provides functionality for fetching the data of associated objects only when the object is first accessed. However, this piecemeal style of data access is fundamentally inefficient in the context of a relational database, because it requires executing one statement for each node or collection of the object network that is accessed. This is the dreaded n+1 selects problem.
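To make the cost of piecemeal access concrete, here is a small simulation. It doesn’t talk to a real database: CountingDatabase is a hypothetical in-memory stand-in that merely counts how many statements would have been executed, and the SQL in the comments is illustrative only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a database that counts the statements it executes.
class CountingDatabase {
    private final Map<Long, List<String>> cardsByUser = new HashMap<>();
    int statements = 0;

    void insert(long userId, List<String> cards) {
        cardsByUser.put(userId, cards);
    }

    // Think "select USER_ID from USERS": one statement.
    List<Long> selectUserIds() {
        statements++;
        return new ArrayList<>(cardsByUser.keySet());
    }

    // Think "select ... from BILLING_DETAILS where USER_ID = ?": one statement per call.
    List<String> selectCardsFor(long userId) {
        statements++;
        return cardsByUser.get(userId);
    }

    // Think "select ... from USERS left outer join BILLING_DETAILS ...": one statement.
    Map<Long, List<String>> selectUsersJoinCards() {
        statements++;
        return new HashMap<>(cardsByUser);
    }
}

class NPlusOneDemo {
    // Walks the object network lazily: 1 select for the users plus 1 per user.
    static int lazyWalk(CountingDatabase db) {
        db.statements = 0;
        for (long id : db.selectUserIds()) {
            db.selectCardsFor(id);
        }
        return db.statements;
    }

    // Fetches the same data with a single join.
    static int joinFetch(CountingDatabase db) {
        db.statements = 0;
        db.selectUsersJoinCards();
        return db.statements;
    }

    public static void main(String[] args) {
        CountingDatabase db = new CountingDatabase();
        db.insert(1L, Arrays.asList("visa"));
        db.insert(2L, Arrays.asList("visa", "amex"));
        db.insert(3L, Arrays.asList("mastercard"));
        System.out.println("lazy walk:  " + lazyWalk(db) + " statements"); // 1 + 3 users = 4
        System.out.println("join fetch: " + joinFetch(db) + " statements"); // 1
    }
}
```

With n users, the lazy walk issues n+1 statements, whereas the join issues one. The join, in turn, may fetch more than you need, which is the other side of the tension.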

This mismatch in the way you access objects in Java and in a relational database is perhaps the single most common source of performance problems in Java applications. There is a natural tension between too many selects and too big selects, which retrieve unnecessary information into memory. Yet, although we’ve been blessed with innumerable books and magazine articles advising us to use StringBuffer for string concatenation, it seems impossible to find any advice about strategies for avoiding the n+1 selects problem. Fortunately, Hibernate provides sophisticated features for efficiently and transparently fetching networks of objects from the database to the application accessing them.

The cost of the mismatch

We now have quite a list of object/relational mismatch problems, and it will be costly (in time and effort) to find solutions, as you may know from experience. This cost is often underestimated, and we think this is a major reason for many failed software projects. In our experience (regularly confirmed by developers we talk to), the main purpose of up to 30 percent of the Java application code written is to handle the tedious SQL/JDBC and manual bridging of the object/relational paradigm mismatch. Despite all this effort, the end result still doesn’t feel quite right. We’ve seen projects nearly sink due to the complexity and inflexibility of their database abstraction layers. We also see Java developers (and DBAs) quickly lose their confidence when design decisions about the persistence strategy for a project have to be made.

One of the major costs is in the area of modeling. The relational and domain models must both encompass the same business entities, but an object-oriented purist will model these entities in a different way than an experienced relational data modeler would. The usual solution to this problem is to bend and twist the domain model and the implemented classes until they match the SQL database schema. (Which, following the principle of data independence, is certainly a safe long-term choice.)

This can be done successfully, but only at the cost of losing some of the advantages of object orientation. Keep in mind that relational modeling is underpinned by relational theory. Object orientation has no such rigorous mathematical definition or body of theoretical work, so we can’t look to mathematics to explain how we should bridge the gap between the two paradigms—there is no elegant transformation waiting to be discovered. (Doing away with Java and SQL, and starting from scratch isn’t considered elegant.)

The domain modeling mismatch isn’t the only source of the inflexibility and the lost productivity that lead to higher costs. A further cause is the JDBC API itself. JDBC and SQL provide a statement-oriented (that is, command-oriented) approach to moving data to and from an SQL database. If you want to query or manipulate data, the tables and columns involved must be specified at least three times (insert, update, select), adding to the time required for design and implementation. The distinct dialects for every SQL database management system don’t improve the situation.

To round out your understanding of object persistence, and before we approach possible solutions, we need to discuss application architecture and the role of a persistence layer in typical application design.

Persistence layers and alternatives

In a medium- or large-sized application, it usually makes sense to organize classes by concern. Persistence is one concern; others include presentation, workflow, and business logic. A typical object-oriented architecture includes layers of code that represent the concerns. It’s normal and certainly best practice to group all classes and components responsible for persistence into a separate persistence layer in a layered system architecture.

In this section, we first look at the layers of this type of architecture and why we use them. After that, we focus on the layer we’re most interested in—the persistence layer—and some of the ways it can be implemented.

Layered architecture

A layered architecture defines interfaces between code that implements the various concerns, allowing changes to be made to the way one concern is implemented without significant disruption to code in the other layers. Layering also determines the kinds of interlayer dependencies that occur. The rules are as follows:

■ Layers communicate from top to bottom. A layer is dependent only on the layer directly below it.

■ Each layer is unaware of any other layers except for the layer just below it.

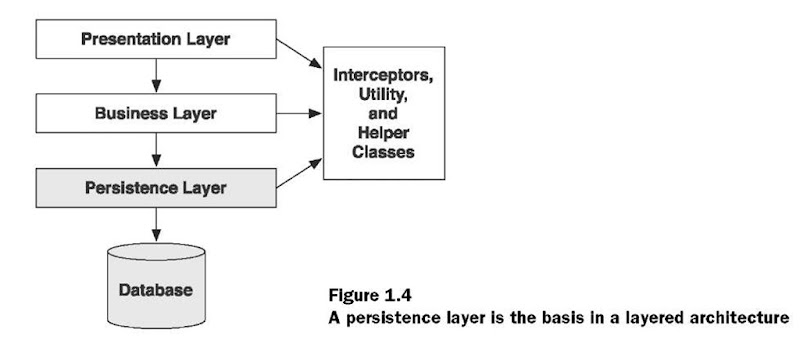

Different systems group concerns differently, so they define different layers. A typical, proven, high-level application architecture uses three layers: one each for presentation, business logic, and persistence, as shown in figure 1.4.

Let’s take a closer look at the layers and elements in the diagram:

■ Presentation layer—The user interface logic is topmost. Code responsible for the presentation and control of page and screen navigation is in the presentation layer.

■ Business layer—The exact form of the next layer varies widely between applications. It’s generally agreed, however, that the business layer is responsible for implementing any business rules or system requirements that would be understood by users as part of the problem domain. This layer usually includes some kind of controlling component—code that knows when to invoke which business rule. In some systems, this layer has its own internal representation of the business domain entities, and in others it reuses the model defined by the persistence layer.

■ Persistence layer—The persistence layer is a group of classes and components responsible for storing data to, and retrieving it from, one or more data stores. This layer necessarily includes a model of the business domain entities (even if it’s only a metadata model).

■ Database—The database exists outside the Java application itself. It’s the actual, persistent representation of the system state. If an SQL database is used, the database includes the relational schema and possibly stored procedures.

■ Helper and utility classes—Every application has a set of infrastructural helper or utility classes that are used in every layer of the application (such as Exception classes for error handling). These infrastructural elements don’t form a layer, because they don’t obey the rules for interlayer dependency in a layered architecture.
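The dependency rules for these layers can be sketched in a few lines of Java. All type names here (AccountStore, GreetingService, ConsoleView) are invented for illustration; the point is only that each layer holds a reference to the layer directly below it and to nothing else.

```java
// Hypothetical three-layer sketch; every type name is invented for illustration.

// Persistence layer: the only code that knows how data is stored.
interface AccountStore {
    String loadOwnerName(long accountId);
}

class InMemoryAccountStore implements AccountStore {
    public String loadOwnerName(long accountId) {
        return accountId == 1L ? "john" : null;
    }
}

// Business layer: depends only on the persistence layer's interface.
class GreetingService {
    private final AccountStore store;

    GreetingService(AccountStore store) {
        this.store = store;
    }

    String greetingFor(long accountId) {
        String name = store.loadOwnerName(accountId);
        return name == null ? "Unknown account" : "Welcome back, " + name;
    }
}

// Presentation layer: depends only on the business layer.
class ConsoleView {
    private final GreetingService service;

    ConsoleView(GreetingService service) {
        this.service = service;
    }

    String render(long accountId) {
        return "[ " + service.greetingFor(accountId) + " ]";
    }
}

class LayersDemo {
    public static void main(String[] args) {
        ConsoleView view = new ConsoleView(new GreetingService(new InMemoryAccountStore()));
        System.out.println(view.render(1L));
    }
}
```

Because ConsoleView never sees AccountStore, the way data is stored can change without touching presentation code.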

Let’s now take a brief look at the various ways the persistence layer can be implemented by Java applications. Don’t worry—we’ll get to ORM and Hibernate soon. There is much to be learned by looking at other approaches.

Hand-coding a persistence layer with SQL/JDBC

The most common approach to Java persistence is for application programmers to work directly with SQL and JDBC. After all, developers are familiar with relational database management systems, they understand SQL, and they know how to work with tables and foreign keys. Moreover, they can always use the well-known and widely used data access object (DAO) pattern to hide complex JDBC code and nonportable SQL from the business logic.

The DAO pattern is a good one—so good that we often recommend its use even with ORM. However, the work involved in manually coding persistence for each domain class is considerable, particularly when multiple SQL dialects are supported. This work usually ends up consuming a large portion of the development effort. Furthermore, when requirements change, a hand-coded solution always requires more attention and maintenance effort.
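As a rough illustration of the DAO pattern, consider the following sketch. The User fields and the UserDao method names are assumptions, and the implementation shown keeps data in a map so the example runs on its own; a JDBC-based class would implement the same interface and keep its SQL strings private.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical domain class; field names are assumptions.
class User {
    final long id;
    final String username;

    User(long id, String username) {
        this.id = id;
        this.username = username;
    }
}

// The DAO interface is all the business logic sees; no SQL or JDBC types leak through it.
interface UserDao {
    void save(User user);
    Optional<User> findById(long id);
}

// Stand-in implementation. A JDBC-based class would implement the same interface
// and hide its statements and dialect-specific details behind it.
class InMemoryUserDao implements UserDao {
    private final Map<Long, User> table = new HashMap<>();

    public void save(User user) {
        table.put(user.id, user);
    }

    public Optional<User> findById(long id) {
        return Optional.ofNullable(table.get(id));
    }
}

class DaoDemo {
    public static void main(String[] args) {
        UserDao dao = new InMemoryUserDao();
        dao.save(new User(1L, "johndoe"));
        System.out.println(dao.findById(1L).map(u -> u.username).orElse("not found"));
    }
}
```

Every persistent class needs an interface and implementation like this, which is where the manual effort accumulates.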

Why not implement a simple mapping framework to fit the specific requirements of your project? The result of such an effort could even be reused in future projects. Many developers have taken this approach; numerous homegrown object/relational persistence layers are in production systems today. However, we don’t recommend this approach. Excellent solutions already exist: not only the (mostly expensive) tools sold by commercial vendors, but also open source projects with free licenses. We’re certain you’ll be able to find a solution that meets your requirements, both business and technical. It’s likely that such a solution will do a great deal more, and do it better, than a solution you could build in a limited time.

Developing a reasonably full-featured ORM may take many developers months. For example, Hibernate is about 80,000 lines of code, some of which is much more difficult than typical application code, along with 25,000 lines of unit test code. This may be more code than is in your application. A great many details can easily be overlooked in such a large project—as both the authors know from experience! Even if an existing tool doesn’t fully implement two or three of your more exotic requirements, it’s still probably not worth creating your own tool. Any ORM software will handle the tedious common cases—the ones that kill productivity. It’s OK if you need to hand-code certain special cases; few applications are composed primarily of special cases.

Using serialization

Java has a built-in persistence mechanism: Serialization provides the ability to write a snapshot of a network of objects (the state of the application) to a byte stream, which may then be persisted to a file or database. Serialization is also used by Java’s Remote Method Invocation (RMI) to achieve pass-by-value semantics for complex objects. Another use of serialization is to replicate application state across nodes in a cluster of machines.

Why not use serialization for the persistence layer? Unfortunately, a serialized network of interconnected objects can only be accessed as a whole; it’s impossible to retrieve any data from the stream without deserializing the entire stream. Thus, the resulting byte stream must be considered unsuitable for arbitrary search or aggregation of large datasets. It isn’t even possible to access or update a single object or subset of objects independently. Loading and overwriting an entire object network in each transaction is no option for systems designed to support high concurrency.
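The following sketch shows the limitation using the standard java.io serialization classes. The Customer class and the helper methods are hypothetical. Note what findByName has to do: to locate a single object, it first reads the entire graph back into memory.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

// Hypothetical class; any Serializable object graph would behave the same way.
class Customer implements Serializable {
    private static final long serialVersionUID = 1L;
    final String name;
    final List<String> cardNumbers = new ArrayList<>();

    Customer(String name) {
        this.name = name;
    }
}

class SerializationSnapshot {

    // Writes the whole object network to one byte stream.
    static byte[] write(List<Customer> all) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(new ArrayList<>(all));
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return bytes.toByteArray();
    }

    // Reads the whole object network back; there is no way to read only part of it.
    @SuppressWarnings("unchecked")
    static List<Customer> readAll(byte[] snapshot) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(snapshot))) {
            return (List<Customer>) in.readObject();
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    // Finding one customer still deserializes every customer first.
    static Customer findByName(byte[] snapshot, String name) {
        for (Customer c : readAll(snapshot)) {
            if (c.name.equals(name)) {
                return c;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        List<Customer> all = new ArrayList<>();
        all.add(new Customer("john"));
        all.add(new Customer("jane"));
        byte[] snapshot = write(all);
        System.out.println(findByName(snapshot, "jane").name);
    }
}
```

Updating one customer has the same shape: read everything, change one object, write everything back.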

Given current technology, serialization is inadequate as a persistence mechanism for high-concurrency web and enterprise applications. It has a particular niche as a suitable persistence mechanism for desktop applications.

Object-oriented database systems

Because we work with objects in Java, it would be ideal if there were a way to store those objects in a database without having to bend and twist the object model at all. In the mid-1990s, object-oriented database systems gained attention. They’re based on a network data model, which was common before the advent of the relational data model decades ago. The basic idea is to store a network of objects, with all its pointers and nodes, and to re-create the same in-memory graph later on. This can be optimized with various metadata and configuration settings.

An object-oriented database management system (OODBMS) is more like an extension to the application environment than an external data store. An OODBMS usually features a multitiered implementation, with the backend data store, object cache, and client application coupled tightly together and interacting via a proprietary network protocol. Object nodes are kept on pages of memory, which are transported from and to the data store.

Object-oriented database development begins with the top-down definition of host language bindings that add persistence capabilities to the programming language. Hence, object databases offer seamless integration into the object-oriented application environment. This is different from the model used by today’s relational databases, where interaction with the database occurs via an intermediate language (SQL) and data independence from a particular application is the major concern.

For background information on object-oriented databases, we recommend the respective topic in An Introduction to Database Systems (Date, 2003).

We won’t bother looking too closely into why object-oriented database technology hasn’t been more popular; we’ll observe that object databases haven’t been widely adopted and that it doesn’t appear likely that they will be in the near future. We’re confident that the overwhelming majority of developers will have far more opportunity to work with relational technology, given the current political realities (predefined deployment environments) and the common requirement for data independence.

Other options

Of course, there are other kinds of persistence layers. XML persistence is a variation on the serialization theme; this approach addresses some of the limitations of byte-stream serialization by allowing easy access to the data through a standardized tool interface. However, managing data in XML would expose you to an object/hierarchical mismatch. Furthermore, there is no additional benefit from the XML itself, because it’s just another text file format and has no inherent capabilities for data management. You can use stored procedures (even writing them in Java, sometimes) and move the problem into the database tier. So-called object-relational databases have been marketed as a solution, but they offer only a more sophisticated datatype system providing only half the solution to our problems (and further muddling terminology). We’re sure there are plenty of other examples, but none of them are likely to become popular in the immediate future.

Political and economic constraints (long-term investments in SQL databases), data independence, and the requirement for access to valuable legacy data call for a different approach. ORM may be the most practical solution to our problems.

Object/relational mapping

Now that we’ve looked at the alternative techniques for object persistence, it’s time to introduce the solution we feel is the best, and the one we use with Hibernate: ORM. Despite its long history (the first research papers were published in the late 1980s), the terms for ORM used by developers vary. Some call it object relational mapping, others prefer the simple object mapping; we exclusively use the term object/relational mapping and its acronym, ORM. The slash stresses the mismatch problem that occurs when the two worlds collide.

In this section, we first look at what ORM is. Then we enumerate the problems that a good ORM solution needs to solve. Finally, we discuss the general benefits that ORM provides and why we recommend this solution.

What is ORM?

In a nutshell, object/relational mapping is the automated (and transparent) persistence of objects in a Java application to the tables in a relational database, using metadata that describes the mapping between the objects and the database.

ORM, in essence, works by (reversibly) transforming data from one representation to another. This implies certain performance penalties. However, if ORM is implemented as middleware, there are many opportunities for optimization that wouldn’t exist for a hand-coded persistence layer. The provision and management of metadata that governs the transformation adds to the overhead at development time, but the cost is less than equivalent costs involved in maintaining a hand-coded solution. (And even object databases require significant amounts of metadata.)

FAQ Isn’t ORM a Visio plug-in? The acronym ORM can also mean object role modeling, and this term was invented before object/relational mapping became relevant. It describes a method for information analysis, used in database modeling, and is primarily supported by Microsoft Visio, a graphical modeling tool. Database specialists use it as a replacement or as an addition to the more popular entity-relationship modeling. However, if you talk to Java developers about ORM, it’s usually in the context of object/relational mapping.

An ORM solution consists of the following four pieces:

■ An API for performing basic CRUD operations on objects of persistent classes

■ A language or API for specifying queries that refer to classes and properties of classes

■ A facility for specifying mapping metadata

■ A technique for the ORM implementation to interact with transactional objects to perform dirty checking, lazy association fetching, and other optimization functions

We’re using the term full ORM to include any persistence layer where SQL is automatically generated from a metadata-based description. We aren’t including persistence layers where the object/relational mapping problem is solved manually by developers hand-coding SQL with JDBC. With ORM, the application interacts with the ORM APIs and the domain model classes and is abstracted from the underlying SQL/JDBC. Depending on the features or the particular implementation, the ORM engine may also take on responsibility for issues such as optimistic locking and caching, relieving the application of these concerns entirely.
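To give a feel for what generating SQL from a metadata-based description means, here is a deliberately tiny sketch. ClassMapping and SqlGenerator are hypothetical names, not Hibernate classes, and a real ORM engine handles far more (types, associations, inheritance, dialects). The idea is only that the SQL strings are derived from mapping metadata rather than written by hand.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical mapping metadata: one class mapped to one table, properties to columns.
class ClassMapping {
    final String tableName;
    final Map<String, String> columnByProperty = new LinkedHashMap<>();

    ClassMapping(String tableName) {
        this.tableName = tableName;
    }

    ClassMapping property(String property, String column) {
        columnByProperty.put(property, column);
        return this;
    }
}

// Derives parameterized SQL statements from the metadata alone.
class SqlGenerator {

    static String insertSql(ClassMapping mapping) {
        StringJoiner columns = new StringJoiner(", ");
        StringJoiner params = new StringJoiner(", ");
        for (String column : mapping.columnByProperty.values()) {
            columns.add(column);
            params.add("?");
        }
        return "insert into " + mapping.tableName
            + " (" + columns + ") values (" + params + ")";
    }

    static String selectByIdSql(ClassMapping mapping, String idColumn) {
        return "select " + String.join(", ", mapping.columnByProperty.values())
            + " from " + mapping.tableName + " where " + idColumn + " = ?";
    }

    public static void main(String[] args) {
        ClassMapping user = new ClassMapping("USERS")
            .property("id", "USER_ID")
            .property("username", "USERNAME");
        System.out.println(insertSql(user));
        System.out.println(selectByIdSql(user, "USER_ID"));
    }
}
```

If a column is renamed, only the metadata changes; every generated statement follows automatically.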

Let’s look at the various ways ORM can be implemented. Mark Fussel (Fussel, 1997), a developer in the field of ORM, defined the following four levels of ORM quality. We have slightly rewritten his descriptions and put them in the context of today’s Java application development.

Pure relational

The whole application, including the user interface, is designed around the relational model and SQL-based relational operations. This approach, despite its deficiencies for large systems, can be an excellent solution for simple applications where a low level of code reuse is tolerable. Direct SQL can be fine-tuned in every aspect, but the drawbacks, such as lack of portability and maintainability, are significant, especially in the long run. Applications in this category often make heavy use of stored procedures, shifting some of the work out of the business layer and into the database.

Light object mapping

Entities are represented as classes that are mapped manually to the relational tables. Hand-coded SQL/JDBC is hidden from the business logic using well-known design patterns. This approach is extremely widespread and is successful for applications with a small number of entities, or applications with generic, metadata-driven data models. Stored procedures may have a place in this kind of application.

Medium object mapping

The application is designed around an object model. SQL is generated at build time using a code-generation tool, or at runtime by framework code. Associations between objects are supported by the persistence mechanism, and queries may be specified using an object-oriented expression language. Objects are cached by the persistence layer. A great many ORM products and homegrown persistence layers support at least this level of functionality. It’s well suited to medium-sized applications with some complex transactions, particularly when portability between different database products is important. These applications usually don’t use stored procedures.

Full object mapping

Full object mapping supports sophisticated object modeling: composition, inheritance, polymorphism, and persistence by reachability. The persistence layer implements transparent persistence; persistent classes do not inherit from any special base class or have to implement a special interface. Efficient fetching strategies (lazy, eager, and prefetching) and caching strategies are implemented transparently to the application. This level of functionality can hardly be achieved by a homegrown persistence layer—it’s equivalent to years of development time. A number of commercial and open source Java ORM tools have achieved this level of quality.

This level meets the definition of ORM we’re using in this topic. Let’s look at the problems we expect to be solved by a tool that achieves full object mapping.

Generic ORM problems

The following list of issues, which we’ll call the ORM problems, identifies the fundamental questions resolved by a full object/relational mapping tool in a Java environment. Particular ORM tools may provide extra functionality (for example, aggressive caching), but this is a reasonably exhaustive list of the conceptual issues and questions that are specific to object/relational mapping.

1 What do persistent classes look like? How transparent is the persistence tool? Do we have to adopt a programming model and conventions for classes of the business domain?

2 How is mapping metadata defined? Because the object/relational transformation is governed entirely by metadata, the format and definition of this metadata is important. Should an ORM tool provide a GUI to manipulate the metadata graphically? Or are there better approaches to metadata definition?

3 How do object identity and equality relate to database (primary key) identity? How do we map instances of particular classes to particular table rows?

4 How should we map class inheritance hierarchies? There are several standard strategies. What about polymorphic associations, abstract classes, and interfaces?

5 How does the persistence logic interact at runtime with the objects of the business domain? This is a problem of generic programming, and there are a number of solutions including source generation, runtime reflection, runtime bytecode generation, and build-time bytecode enhancement. The solution to this problem may affect your build process (but, preferably, shouldn’t otherwise affect you as a user).

6 What is the lifecycle of a persistent object? Does the lifecycle of some objects depend upon the lifecycle of other associated objects? How do we translate the lifecycle of an object to the lifecycle of a database row?

7 What facilities are provided for sorting, searching, and aggregating? The application could do some of these things in memory, but efficient use of relational technology requires that this work often be performed by the database.

8 How do we efficiently retrieve data with associations? Efficient access to relational data is usually accomplished via table joins. Object-oriented applications usually access data by navigating an object network. Two data access patterns should be avoided when possible: the n+1 selects problem, and its complement, the Cartesian product problem (fetching too much data in a single select).
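Question 3 in this list is easy to underestimate, so a small illustration may help. In the sketch below, the Account class and its id field (standing for a primary key value) are hypothetical, and basing equals() on the identifier is only one possible choice, used here to keep the example short. It has known drawbacks, for instance with instances that haven’t been saved yet.

```java
import java.util.Objects;

// Hypothetical persistent class; the id field stands for the primary key value.
class Account {
    final Long id;
    final String owner;

    Account(Long id, String owner) {
        this.id = id;
        this.owner = owner;
    }

    // Equality based on database identity: an assumption made for this sketch only.
    @Override
    public boolean equals(Object o) {
        if (this == o) {
            return true;
        }
        if (!(o instanceof Account)) {
            return false;
        }
        Account other = (Account) o;
        return id != null && id.equals(other.id);
    }

    @Override
    public int hashCode() {
        return Objects.hashCode(id);
    }
}

class IdentityDemo {
    public static void main(String[] args) {
        // Imagine the same row being loaded twice, producing two instances.
        Account a = new Account(42L, "john");
        Account b = new Account(42L, "john");
        System.out.println(a == b);      // false: two distinct instances in memory
        System.out.println(a.equals(b)); // true: same primary key value
    }
}
```

Java thus has two notions of sameness (== and equals()), and the database adds a third (the primary key). An ORM tool has to define how the three relate.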

Two additional issues that impose fundamental constraints on the design and architecture of an ORM tool are common to any data access technology:

■ Transactions and concurrency

■ Cache management (and concurrency)

As you can see, a full object/relational mapping tool needs to address quite a long list of issues. By now, you should be starting to see the value of ORM. In the next section, we look at some of the other benefits you gain when you use an ORM solution.

Why ORM?

An ORM implementation is a complex beast—less complex than an application server, but more complex than a web application framework like Struts or Tapestry. Why should we introduce another complex infrastructural element into our system? Will it be worth it?

It will take us most of this topic to provide a complete answer to those questions, but this section provides a quick summary of the most compelling benefits. First, though, let’s quickly dispose of a nonbenefit.

A supposed advantage of ORM is that it shields developers from messy SQL. This view holds that object-oriented developers can’t be expected to understand SQL or relational databases well, and that they find SQL somehow offensive. On the contrary, we believe that Java developers must have a sufficient level of familiarity with—and appreciation of—relational modeling and SQL in order to work with ORM. ORM is an advanced technique to be used by developers who have already done it the hard way. To use Hibernate effectively, you must be able to view and interpret the SQL statements it issues and understand the implications for performance.

Now, let’s look at some of the benefits of ORM and Hibernate.

Productivity

Persistence-related code can be perhaps the most tedious code in a Java application. Hibernate eliminates much of the grunt work (more than you’d expect) and lets you concentrate on the business problem.

No matter which application-development strategy you prefer—top-down, starting with a domain model, or bottom-up, starting with an existing database schema—Hibernate, used together with the appropriate tools, will significantly reduce development time.

Maintainability

Fewer lines of code (LOC) make the system more understandable, because it emphasizes business logic rather than plumbing. Most important, a system with less code is easier to refactor. Automated object/relational persistence substantially reduces LOC. Of course, counting lines of code is a debatable way of measuring application complexity.

However, there are other reasons that a Hibernate application is more maintainable. In systems with hand-coded persistence, an inevitable tension exists between the relational representation and the object model implementing the domain. Changes to one almost always involve changes to the other, and often the design of one representation is compromised to accommodate the existence of the other. (What almost always happens in practice is that the object model of the domain is compromised.) ORM provides a buffer between the two models, allowing more elegant use of object orientation on the Java side, and insulating each model from minor changes to the other.

Performance

A common claim is that hand-coded persistence can always be at least as fast as, and can often be faster than, automated persistence. This is true in the same sense that it’s true that assembly code can always be at least as fast as Java code, or a handwritten parser can always be at least as fast as a parser generated by YACC or ANTLR. In other words, it’s beside the point. The unspoken implication of the claim is that hand-coded persistence will perform at least as well in an actual application. But this implication will be true only if the effort required to implement at-least-as-fast hand-coded persistence is similar to the amount of effort involved in utilizing an automated solution. The really interesting question is, what happens when we consider time and budget constraints?

Given a persistence task, many optimizations are possible. Some (such as query hints) are much easier to achieve with hand-coded SQL/JDBC. Most optimizations, however, are much easier to achieve with automated ORM. In a project with time constraints, hand-coded persistence usually allows you to make some optimizations. Hibernate allows many more optimizations to be used all the time. Furthermore, automated persistence improves developer productivity so much that you can spend more time hand-optimizing the few remaining bottlenecks.

Finally, the people who implemented your ORM software probably had much more time to investigate performance optimizations than you have. Did you know, for instance, that pooling PreparedStatement instances results in a significant performance increase for the DB2 JDBC driver but breaks the InterBase JDBC driver? Did you realize that updating only the changed columns of a table can be significantly faster for some databases but potentially slower for others? In your handcrafted solution, how easy is it to experiment with the impact of these various strategies?
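One of the strategies mentioned above, updating only the changed columns, can be sketched as follows. This is a simplified illustration, not Hibernate’s implementation: the DirtyChecker class is hypothetical, and object state is represented as a plain map from column name to value.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.StringJoiner;

// Hypothetical sketch of snapshot-based dirty checking.
class DirtyChecker {

    // Returns the columns whose current value differs from the snapshot taken at load time.
    static List<String> dirtyColumns(Map<String, Object> snapshot, Map<String, Object> current) {
        List<String> dirty = new ArrayList<>();
        for (Map.Entry<String, Object> entry : current.entrySet()) {
            if (!Objects.equals(entry.getValue(), snapshot.get(entry.getKey()))) {
                dirty.add(entry.getKey());
            }
        }
        return dirty;
    }

    // Builds an UPDATE that sets only the changed columns; returns null if nothing changed.
    static String updateSql(String table, String idColumn, List<String> dirty) {
        if (dirty.isEmpty()) {
            return null;
        }
        StringJoiner assignments = new StringJoiner(", ");
        for (String column : dirty) {
            assignments.add(column + " = ?");
        }
        return "update " + table + " set " + assignments + " where " + idColumn + " = ?";
    }

    public static void main(String[] args) {
        Map<String, Object> snapshot = new LinkedHashMap<>();
        snapshot.put("USERNAME", "john");
        snapshot.put("EMAIL", "j@example.com");

        Map<String, Object> current = new LinkedHashMap<>(snapshot);
        current.put("EMAIL", "john@example.com");

        System.out.println(updateSql("USERS", "USER_ID", dirtyColumns(snapshot, current)));
    }
}
```

With hand-coded persistence, switching between this strategy and a fixed full-row UPDATE means rewriting statements; when the SQL is generated, it can be a configuration choice.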

Vendor independence

An ORM abstracts your application away from the underlying SQL database and SQL dialect. If the tool supports a number of different databases (and most do), this confers a certain level of portability on your application. You shouldn’t necessarily expect write-once/run-anywhere, because the capabilities of databases differ, and achieving full portability would require sacrificing some of the strength of the more powerful platforms. Nevertheless, it’s usually much easier to develop a cross-platform application using ORM. Even if you don’t require cross-platform operation, an ORM can still help mitigate some of the risks associated with vendor lock-in.
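The idea of abstracting the SQL dialect can be illustrated with one small difference between products: how to limit the number of rows returned. The SqlDialect interface below is hypothetical and far simpler than what a real ORM uses. The two syntaxes are real, though: a LIMIT clause (as in MySQL or PostgreSQL) and FETCH FIRST n ROWS ONLY (as in DB2). The table and column names are assumptions.

```java
// Hypothetical dialect abstraction; not the API of any real ORM.
interface SqlDialect {
    String limit(String select, int maxRows);
}

// Dialect for databases with a LIMIT clause, such as MySQL or PostgreSQL.
class LimitClauseDialect implements SqlDialect {
    public String limit(String select, int maxRows) {
        return select + " limit " + maxRows;
    }
}

// Dialect for databases with FETCH FIRST n ROWS ONLY, such as DB2.
class FetchFirstDialect implements SqlDialect {
    public String limit(String select, int maxRows) {
        return select + " fetch first " + maxRows + " rows only";
    }
}

// Application-side code states what it wants; the dialect decides how to say it.
class ReportQueries {
    private final SqlDialect dialect;

    ReportQueries(SqlDialect dialect) {
        this.dialect = dialect;
    }

    String newestUsers(int maxRows) {
        return dialect.limit("select * from USERS order by USER_ID desc", maxRows);
    }

    public static void main(String[] args) {
        System.out.println(new ReportQueries(new LimitClauseDialect()).newestUsers(10));
        System.out.println(new ReportQueries(new FetchFirstDialect()).newestUsers(10));
    }
}
```

Switching databases then means choosing a different dialect object rather than editing every query.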

In addition, database independence helps in development scenarios where developers use a lightweight local database but deploy for production on a different database.

You need to select an ORM product at some point. To make an educated decision, you need a list of the software modules and standards that are available.

Introducing Hibernate, EJB3, and JPA

Hibernate is a full object/relational mapping tool that provides all the previously listed ORM benefits. The API you’re working with in Hibernate is native and designed by the Hibernate developers. The same is true for the query interfaces and query languages, and for how object/relational mapping metadata is defined.

Before you start your first project with Hibernate, you should consider the EJB 3.0 standard and its subspecification, Java Persistence. Let’s go back in history and see how this new standard came into existence.

Many Java developers considered EJB 2.1 entity beans to be one of the technologies for the implementation of a persistence layer. The whole EJB programming and persistence model has been widely adopted in the industry, and it has been an important factor in the success of J2EE (or Java EE, as it’s now called).

However, over the last few years, critics of EJB in the developer community became more vocal (especially with regard to entity beans and persistence), and companies realized that the EJB standard should be improved. Sun, as the steering party of J2EE, knew that an overhaul was in order and, in early 2003, started a new Java specification request (JSR) with the goal of simplifying EJB. This new JSR, Enterprise JavaBeans 3.0 (JSR 220), attracted significant interest. Developers from the Hibernate team joined the expert group early on and helped shape the new specification. Other vendors, including all major and many smaller companies in the Java industry, also contributed to the effort. An important decision made for the new standard was to specify and standardize things that work in practice, taking ideas and concepts from existing successful products and projects. Hibernate, therefore, being a successful data persistence solution, played an important role for the persistence part of the new standard. But what exactly is the relationship between Hibernate and EJB3, and what is Java Persistence?

Understanding the standards

First, it’s difficult (if not impossible) to compare a specification and a product. The questions that should be asked are, “Does Hibernate implement the EJB 3.0 specification, and what is the impact on my project? Do I have to use one or the other?”

The new EJB 3.0 specification comes in several parts: The first part defines the new EJB programming model for session beans and message-driven beans, the deployment rules, and so on. The second part of the specification deals with persistence exclusively: entities, object/relational mapping metadata, persistence manager interfaces, and the query language. This second part is called Java Persistence API (JPA), probably because its interfaces are in the package javax.persistence. We’ll use this acronym throughout the topic.

This separation also exists in EJB 3.0 products; some implement a full EJB 3.0 container that supports all parts of the specification, and other products may implement only the Java Persistence part. Two important principles were designed into the new standard:

■ JPA engines should be pluggable, which means you should be able to take out one product and replace it with another if you aren’t satisfied—even if you want to stay with the same EJB 3.0 container or Java EE 5.0 application server.

■ JPA engines should be able to run outside of an EJB 3.0 (or any other) runtime environment, without a container in plain standard Java.

The consequences of this design are that there are more options for developers and architects, which drives competition and therefore improves overall quality of products. Of course, actual products also offer features that go beyond the specification as vendor-specific extensions (such as for performance tuning, or because the vendor has a focus on a particular vertical problem space).

Hibernate implements Java Persistence, and because a JPA engine must be pluggable, new and interesting combinations of software are possible. You can select from various Hibernate software modules and combine them depending on your project’s technical and business requirements.

Hibernate Core

The Hibernate Core is also known as Hibernate 3.2.x, or Hibernate. It’s the base service for persistence, with its native API and its mapping metadata stored in XML files. It has a query language called HQL (almost the same as SQL), as well as programmatic query interfaces for Criteria and Example queries. There are hundreds of options and features available for everything, as Hibernate Core is really the foundation and the platform all other modules are built on.

You can use Hibernate Core on its own, independent from any framework or any particular runtime environment with all JDKs. It works in every Java EE/J2EE application server, in Swing applications, in a simple servlet container, and so on. As long as you can configure a data source for Hibernate, it works. Your application code (in your persistence layer) will use Hibernate APIs and queries, and your mapping metadata is written in native Hibernate XML files.

Native Hibernate APIs, queries, and XML mapping files are the primary focus of this topic, and they’re explained first in all code examples. The reason for that is that Hibernate functionality is a superset of all other available options.

Hibernate Annotations

A new way to define application metadata became available with JDK 5.0: type-safe annotations embedded directly in the Java source code. Many Hibernate users are already familiar with this concept, as the XDoclet software supports Javadoc metadata attributes and a preprocessor at compile time (which, for Hibernate, generates XML mapping files).

With the Hibernate Annotations package on top of Hibernate Core, you can now use type-safe JDK 5.0 metadata as a replacement for, or in addition to, native Hibernate XML mapping files. You’ll find the syntax and semantics of the mapping annotations familiar once you’ve seen them side-by-side with Hibernate XML mapping files. However, the basic annotations aren’t proprietary.

The JPA specification defines object/relational mapping metadata syntax and semantics, with the primary mechanism being JDK 5.0 annotations. (Yes, JDK 5.0 is required for Java EE 5.0 and EJB 3.0.) Naturally, the Hibernate Annotations are a set of basic annotations that implement the JPA standard, and they’re also a set of extension annotations you need for more advanced and exotic Hibernate mappings and tuning.

You can use Hibernate Core and Hibernate Annotations to reduce your lines of code for mapping metadata, compared to the native XML files, and you may like the better refactoring capabilities of annotations. You can use only JPA annotations, or you can add a Hibernate extension annotation if complete portability isn’t your primary concern. (In practice, you should embrace the product you’ve chosen instead of denying its existence at all times.)

We’ll discuss the impact of annotations on your development process, and how to use them in mappings, throughout this topic, along with native Hibernate XML mapping examples.

Hibernate EntityManager

The JPA specification also defines programming interfaces, lifecycle rules for persistent objects, and query features. The Hibernate implementation for this part of JPA is available as Hibernate EntityManager, another optional module you can stack on top of Hibernate Core. You can fall back to a plain Hibernate interface, or even a JDBC Connection, when needed. Hibernate’s native features are a superset of the JPA persistence features in every respect. (The simple fact is that Hibernate EntityManager is a small wrapper around Hibernate Core that provides JPA compatibility.)

Working with standardized interfaces and using a standardized query language has the benefit that you can execute your JPA-compatible persistence layer with any EJB 3.0 compliant application server. Or, you can use JPA outside of any particular standardized runtime environment in plain Java (which really means everywhere Hibernate Core can be used).

Hibernate Annotations should be considered in combination with Hibernate EntityManager. It’s unusual that you’d write your application code against JPA interfaces and with JPA queries, and not create most of your mappings with JPA annotations.

Java EE 5.0 application servers

We don’t cover all of EJB 3.0 in this topic; our focus is naturally on persistence, and therefore on the JPA part of the specification. (We will, of course, show you many techniques with managed EJB components when we talk about application architecture and design.)

Hibernate is also part of the JBoss Application Server (JBoss AS), an implementation of J2EE 1.4 and (soon) Java EE 5.0. A combination of Hibernate Core, Hibernate Annotations, and Hibernate EntityManager forms the persistence engine of this application server. Hence, everything you can use stand-alone, you can also use inside the application server with all the EJB 3.0 benefits, such as session beans, message-driven beans, and other Java EE services.

To complete the picture, you also have to understand that Java EE 5.0 application servers are no longer the monolithic beasts of the J2EE 1.4 era. In fact, the JBoss EJB 3.0 container also comes in an embeddable version, which runs inside other application servers, and even in Tomcat, or in a unit test, or a Swing application. In the next topic, you’ll prepare a project that utilizes EJB 3.0 components, and you’ll install the JBoss server for easy integration testing.

As you can see, native Hibernate features implement significant parts of the specification or are natural vendor extensions, offering additional functionality if required.

Here is a simple trick to see immediately what code you’re looking at, whether JPA or native Hibernate. If only the javax.persistence.* import is visible, you’re working inside the specification; if you also import org.hibernate.*, you’re using native Hibernate functionality. We’ll later show you a few more tricks that will help you cleanly separate portable from vendor-specific code.
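The import check described above can be sketched in a few lines of plain Java. This is only an illustration, not part of Hibernate or JPA: the class name ImportCheck, the Kind enum, and the classify method are hypothetical names we made up for this example, and the sketch assumes that imports appear one per line and that fully qualified names used inline (without an import) are ignored.

```java
// Hypothetical helper (not a Hibernate or JPA API): classifies Java source text
// by the persistence-related packages named in its import statements.
class ImportCheck {

    enum Kind { JPA_ONLY, NATIVE_HIBERNATE, NO_PERSISTENCE_IMPORTS }

    static Kind classify(String source) {
        boolean jpa = false;
        boolean hibernate = false;
        // Assumption: one import statement per line.
        for (String line : source.split("\\R")) {
            String trimmed = line.trim();
            if (!trimmed.startsWith("import ")) {
                continue;
            }
            String name = trimmed.substring("import ".length()).trim();
            // Treat "import static ..." like a regular import.
            if (name.startsWith("static ")) {
                name = name.substring("static ".length()).trim();
            }
            if (name.startsWith("javax.persistence.")) {
                jpa = true;
            }
            if (name.startsWith("org.hibernate.")) {
                hibernate = true;
            }
        }
        // Any org.hibernate import means vendor-specific functionality is in use.
        if (hibernate) {
            return Kind.NATIVE_HIBERNATE;
        }
        return jpa ? Kind.JPA_ONLY : Kind.NO_PERSISTENCE_IMPORTS;
    }

    public static void main(String[] args) {
        String portable = "import javax.persistence.Entity;\nimport javax.persistence.Id;\n";
        String vendor = portable + "import org.hibernate.annotations.BatchSize;\n";
        System.out.println(classify(portable)); // prints JPA_ONLY
        System.out.println(classify(vendor));   // prints NATIVE_HIBERNATE
    }
}
```

A check like this could run over a persistence layer's source files to flag classes that have left the portable subset; whether that is worth enforcing depends on how much portability matters for your project.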

FAQ What is the future of Hibernate? Hibernate Core will be developed independently from and faster than the EJB 3.0 or Java Persistence specifications. It will be the testing ground for new ideas, as it has always been. Any new feature developed for Hibernate Core is immediately and automatically available as an extension for all users of Java Persistence with Hibernate Annotations and Hibernate EntityManager. Over time, if a particular concept has proven its usefulness, Hibernate developers will work with other expert group members on future standardization in an updated EJB or Java Persistence specification. Hence, if you’re interested in a quickly evolving standard, we encourage you to use native Hibernate functionality, and to send feedback to the respective expert group. The desire for total portability and the rejection of vendor extensions were major reasons for the stagnation we saw in EJB 1.x and 2.x.

After so much praise of ORM and Hibernate, it’s time to wrap up the theory, look at some actual code, and set up a first project.