In this topic, we’ll show you how to retrieve objects from the database and how you can optimize the loading of object networks when you navigate from object to object in your application.

We then enable caching; you’ll learn how to speed up data retrieval in local and distributed applications.

Defining the global fetch plan

Retrieving persistent objects from the database is one of the most interesting parts of working with Hibernate.

The object-retrieval options

Hibernate provides the following ways to get objects out of the database:

■ Navigating the object graph, starting from an already loaded object, by accessing the associated objects through property accessor methods such as aUser.getAddress().getCity(), and so on. Hibernate automatically loads (and preloads) nodes of the graph while you call accessor methods, if the persistence context is still open.

■ Retrieval by identifier, the most convenient method when the unique identifier value of an object is known.

■ The Hibernate Query Language (HQL), which is a full object-oriented query language. The Java Persistence query language (JPA QL) is a standardized subset of the Hibernate query language.

■ The Hibernate Criteria interface, which provides a type-safe and object-oriented way to perform queries without the need for string manipulation. This facility includes queries based on example objects.

■ Native SQL queries, including stored procedure calls, where Hibernate still takes care of mapping the JDBC result sets to graphs of persistent objects.

In your Hibernate or JPA application, you use a combination of these techniques.

We won’t discuss each retrieval method in much detail in this topic. We’re more interested in the so-called default fetch plan and fetching strategies. The default fetch plan and fetching strategy is the plan and strategy that applies to a particular entity association or collection. In other words, it defines if and how an associated object or a collection should be loaded, when the owning entity object is loaded, and when you access an associated object or collection. Each retrieval method may use a different plan and strategy—that is, a plan that defines what part of the persistent object network should be retrieved and how it should be retrieved. Your goal is to find the best retrieval method and fetching strategy for every use case in your application; at the same time, you also want to minimize the number of SQL queries for best performance.

Before we look at the fetch plan options and fetching strategies, we’ll give you an overview of the retrieval methods. (We also mention the Hibernate caching system sometimes, but we fully explore it later in this topic.)

You saw how objects are retrieved by identifier in the previous topic, so we won’t repeat it here. Let’s go straight to the more flexible query options, HQL (equivalent to JPA QL) and Criteria. Both allow you to create arbitrary queries.

The Hibernate Query Language and JPA QL

The Hibernate Query Language is an object-oriented dialect of the familiar database query language SQL. HQL bears some close resemblance to ODMG OQL, but unlike OQL, it’s adapted for use with SQL databases and is easier to learn (thanks to its close resemblance to SQL) and fully implemented (we don’t know of any OQL implementation that is complete).

The EJB 3.0 standard defines the Java Persistence query language. This new JPA QL and the HQL have been aligned so that JPA QL is a subset of HQL. A valid JPA QL query is always also a valid HQL query; HQL has more options that should be considered vendor extensions of the standardized subset.

HQL is commonly used for object retrieval, not for updating, inserting, or deleting data. Object state synchronization is the job of the persistence manager, not the developer. But, as we’ve shown in the previous topic, HQL and JPA QL support direct bulk operations for updating, deleting, and inserting, if required by the use case (mass data operations).

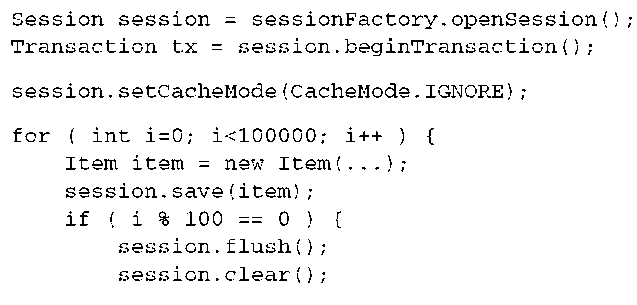

Most of the time, you only need to retrieve objects of a particular class and restrict by the properties of that class. For example, the following query retrieves a user by first name.

After preparing query q, you bind a value to the named parameter :fname. The result is returned as a List of User objects.

HQL is powerful, and even though you may not use the more advanced features all the time, they’re needed for more difficult problems. For example, HQL supports

■ The ability to apply restrictions to properties of associated objects related by reference or held in collections (to navigate the object graph using query language).

■ The ability to retrieve only properties of an entity or entities, without the overhead of loading the entity itself into the persistence context. This is sometimes called a report query; it is more correctly called projection.

■ The ability to order the results of the query.

■ The ability to paginate the results.

■ Aggregation with group by, having, and aggregate functions such as sum, min, and max.

■ Outer joins when retrieving multiple objects per row.

■ The ability to call standard and user-defined SQL functions.

■ Subqueries (nested queries).

Querying with a criteria

The Hibernate query by criteria (QBC) API allows a query to be built by manipulation of criteria objects at runtime. This lets you specify constraints dynamically without direct string manipulations, but you don’t lose much of the flexibility or power of HQL. On the other hand, queries expressed as criteria are often much less readable than queries expressed in HQL.

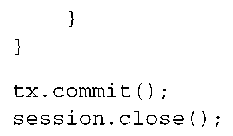

Retrieving a user by first name is easy with a Criteria object:

A Criteria is a tree of Criterion instances. The Restrictions class provides static factory methods that return Criterion instances. Once the desired criteria tree is built, it’s executed against the database.

Many developers prefer query by criteria, considering it a more object-oriented approach. They also like the fact that the query syntax may be parsed and validated at compile time, whereas HQL expressions aren’t parsed until runtime (or startup, if externalized named queries are used).

The nice thing about the Hibernate Criteria API is the Criterion framework. This framework allows extension by the user, which is more difficult in the case of a query language like HQL.

Note that the Criteria API is native to Hibernate; it isn’t part of the Java Persistence standard. In practice, Criteria will be the most common Hibernate extension you utilize in your JPA application. We expect that a future version of the JPA or EJB standard will include a similar programmatic query interface.

Querying by example

As part of the Criteria facility, Hibernate supports query by example (QBE). The idea behind query by example is that the application supplies an instance of the queried class, with certain property values set (to nondefault values). The query returns all persistent instances with matching property values. Query by example isn’t a particularly powerful approach. However, it can be convenient for some applications, especially if it’s used in combination with Criteria:

This example first creates a new Criteria that queries for User objects. Then you add an Example object, a User instance with only the firstname property set. Finally, a Restriction criterion is added before executing the query.

A typical use case for query by example is a search screen that allows users to specify a range of different property values to be matched by the returned result set. This kind of functionality can be difficult to express cleanly in a query language; string manipulations are required to specify a dynamic set of constraints.

You now know the basic retrieval options in Hibernate. We focus on the object-fetching plans and strategies for the rest of this section.

Let’s start with the definition of what should be loaded into memory.

The lazy default fetch plan

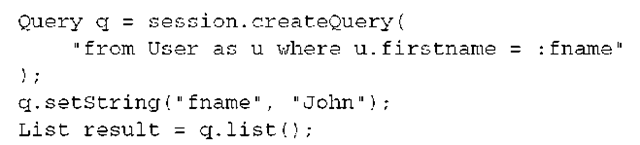

Hibernate defaults to a lazy fetching strategy for all entities and collections. This means that Hibernate by default loads only the objects you’re querying for. Let’s explore this with a few examples.

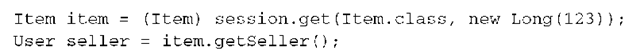

If you query for an Item object (let’s say you load it by its identifier), exactly this Item and nothing else is loaded into memory:

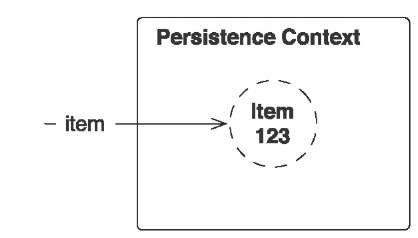

This retrieval by identifier results in a single (or possibly several, if inheritance or secondary tables are mapped) SQL statement that retrieves an Item instance. In the persistence context, in memory, you now have this item object available in persistent state, as shown in figure 13.1.

Figure 13.1

An uninitialized placeholder for an Item instance

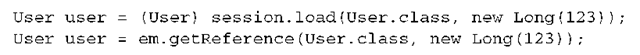

We’ve lied to you. What is available in memory after the load() operation isn’t a persistent item object. Even the SQL that loads an Item isn’t executed. Hibernate created a proxy that looks like the real thing.

Understanding proxies

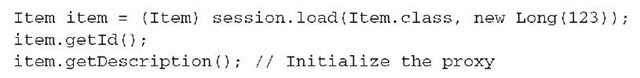

Proxies are placeholders that are generated at runtime. Whenever Hibernate returns an instance of an entity class, it checks whether it can return a proxy instead and avoid a database hit. A proxy is a placeholder that triggers the loading of the real object when it’s accessed for the first time:

The third line in this example triggers the execution of the SQL that retrieves an Item into memory. As long as you access only the database identifier property, no initialization of the proxy is necessary. (Note that this isn’t true if you map the identifier property with direct field access; Hibernate then doesn’t even know that the getId() method exists. If you call it, the proxy has to be initialized.)
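The proxy behavior described above can be imitated in plain Java. The following is a minimal sketch, not Hibernate's implementation: the Item class, the hand-written ItemProxy subclass, and the loader function are hypothetical stand-ins, and the "database hit" is only a counter.

```java
import java.util.function.LongFunction;

// Hypothetical entity class, standing in for a mapped Item.
class Item {
    private final long id;
    private final String description;

    Item(long id, String description) {
        this.id = id;
        this.description = description;
    }

    public long getId() { return id; }
    public String getDescription() { return description; }
}

// Hand-written stand-in for a runtime-generated proxy subclass.
class ItemProxy extends Item {
    private final LongFunction<Item> loader; // simulates the SQL SELECT
    private Item target;                     // null until the proxy is initialized
    int loads = 0;                           // counts simulated database hits

    ItemProxy(long id, LongFunction<Item> loader) {
        super(id, null); // the proxy carries only the identifier value
        this.loader = loader;
    }

    // getId() is inherited: reading the identifier never triggers a load.

    @Override
    public String getDescription() {
        if (target == null) { // first access to a non-identifier property
            target = loader.apply(getId());
            loads++;
        }
        return target.getDescription();
    }
}

class ProxyDemo {
    public static void main(String[] args) {
        ItemProxy proxy = new ItemProxy(123L, id -> new Item(id, "Item Nr. One"));
        Item item = proxy; // application code sees the entity type
        System.out.println(item.getId() + ", loads: " + proxy.loads);
        System.out.println(item.getDescription() + ", loads: " + proxy.loads);
        System.out.println(item.getClass() == Item.class); // false
    }
}
```

The last line of the demo hints at why the runtime class of a proxied object differs from the entity class: the reference points to an instance of a subclass.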

You first load two objects, an Item and a User. Hibernate doesn’t hit the database to do this: It returns two proxies. This is all you need, because you only require the Item and User to create a new Bid. The save(newBid) call executes an INSERT statement to save the row in the BID table with the foreign key value of an Item and a User—this is all the proxies can and have to provide. The previous code snippet doesn’t execute any SELECT!

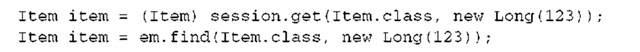

If you call get() instead of load() you trigger a database hit and no proxy is returned. The get() operation always hits the database (if the instance isn’t already in the persistence context and if no transparent second-level cache is active) and returns null if the object can’t be found.

A JPA provider can implement lazy loading with proxies. On the EntityManager API, the operations equivalent to get() and load() are find() and getReference(), respectively:

The first call, find(), has to hit the database to initialize an Item instance. No proxies are allowed—it’s the equivalent of the Hibernate get() operation. The second call, getReference(), may return a proxy, but it doesn’t have to—which translates to load() in Hibernate.

Because Hibernate proxies are instances of runtime-generated subclasses of your entity classes, you can’t get the class of an object with the usual operators. This is where the helper method HibernateProxyHelper.getClassWithoutInitializingProxy(o) is useful.

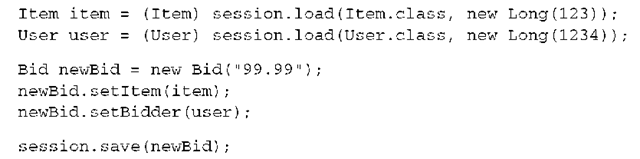

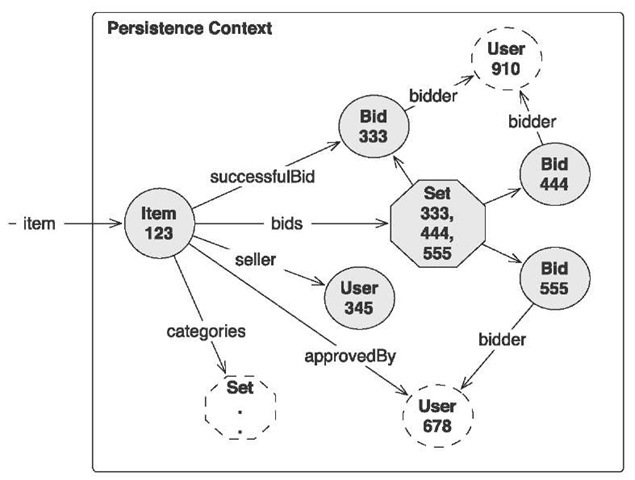

Let’s assume you have an Item instance in memory, either by getting it explicitly or by calling one of its property accessors and forcing initialization of a proxy. Your persistence context now contains a fully loaded object, as shown in figure 13.2.

Again, you can see proxies in the picture. This time, these are proxies that have been generated for all *-to-one associations. Associated entity objects are not loaded right away; the proxies carry the identifier values only. From a different perspective: the identifier values are all foreign key columns in the item’s row. Collections also aren’t loaded right away, but we use the term collection wrapper to describe this kind of placeholder. Internally, Hibernate has a set of smart collections that can initialize themselves on demand. Hibernate replaces your collections with these; that is why you should use collection interfaces only in your domain model. By default, Hibernate creates placeholders for all associations and collections, and only retrieves value-typed properties and components right away. (This is unfortunately not the default fetch plan standardized by Java Persistence; we’ll get back to the differences later.)

A proxy is useful if you need the Item only to create a reference, for example when you link a new Bid to it.

Figure 13.2

Proxies and collection wrappers represent the boundary of the loaded graph.

FAQ Does lazy loading of one-to-one associations work? Lazy loading for one-to-one associations is sometimes confusing for new Hibernate users. If you consider one-to-one associations based on shared primary keys, an association can be proxied only if it’s constrained="true". For example, an Address always has a reference to a User. If this association is nullable and optional, Hibernate first would have to hit the database to find out whether a proxy or a null should be applied, which defeats the purpose of lazy loading: not hitting the database at all. You can enable lazy loading through bytecode instrumentation and interception, which we’ll discuss later.

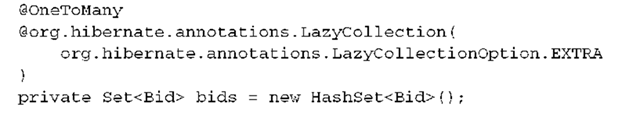

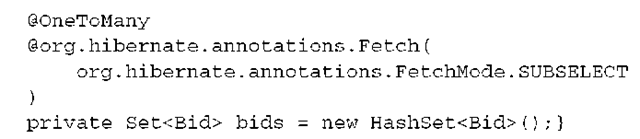

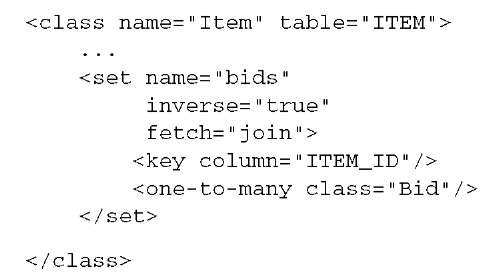

A proxy is initialized if you call any method that is not the identifier getter method. A collection is initialized if you start iterating through its elements or if you call any of the collection-management operations, such as size() and contains(). Hibernate provides an additional setting that is mostly useful for large collections; they can be mapped as extra lazy. For example, consider the collection of bids of an Item:

The collection wrapper is now smarter than before. The collection is no longer initialized if you call size(), contains(), or isEmpty(); instead, the database is queried to retrieve the necessary information. If it’s a Map or a List, the operations containsKey() and get() also query the database directly. A Hibernate extension annotation enables the same optimization:

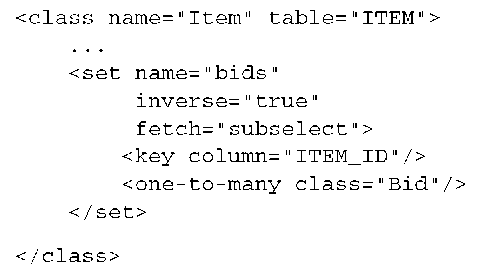
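The difference between a lazy and an extra lazy collection can be sketched in plain Java. This is an illustrative model only, not Hibernate's collection wrapper: the BidsWrapper class is hypothetical, and the two suppliers stand in for a full SELECT of the elements and a count query.

```java
import java.util.List;
import java.util.function.IntSupplier;
import java.util.function.Supplier;

// Hypothetical collection wrapper; the suppliers stand in for SQL statements.
class BidsWrapper {
    private final boolean extraLazy;
    private final Supplier<List<String>> selectAll; // simulates SELECT of all elements
    private final IntSupplier selectCount;          // simulates a count query
    private List<String> elements;                  // null until initialized
    int fullLoads = 0;
    int countQueries = 0;

    BidsWrapper(boolean extraLazy, Supplier<List<String>> selectAll, IntSupplier selectCount) {
        this.extraLazy = extraLazy;
        this.selectAll = selectAll;
        this.selectCount = selectCount;
    }

    boolean isInitialized() { return elements != null; }

    int size() {
        if (elements == null && extraLazy) {
            countQueries++;
            return selectCount.getAsInt(); // ask the database, stay uninitialized
        }
        return elements().size();          // plain lazy: initialize, then count in memory
    }

    List<String> elements() {
        if (elements == null) {
            elements = selectAll.get();
            fullLoads++;
        }
        return elements;
    }
}
```

With extraLazy set to true, size() leaves the wrapper uninitialized and issues only the count query; with false, the same call loads every element first.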

Let’s define a fetch plan that isn’t completely lazy. First, you can disable proxy generation for entity classes.

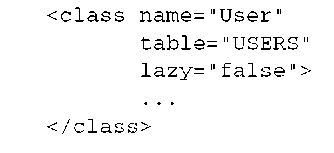

Disabling proxy generation

Proxies are a good thing: They allow you to load only the data that is really needed. They even let you create associations between objects without hitting the database unnecessarily. Sometimes you need a different plan—for example, you want to express that a User object should always be loaded into memory and no placeholder should be returned instead.

You can disable proxy generation for a particular entity class with the lazy="false" attribute in XML mapping metadata:

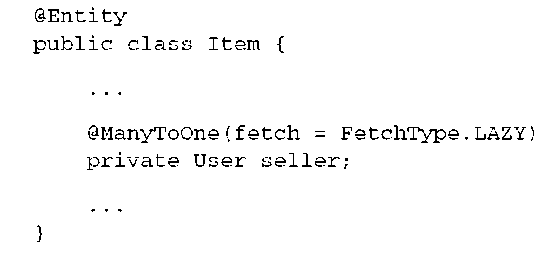

The JPA standard doesn’t require an implementation with proxies; the word proxy doesn’t even appear in the specification. Hibernate is a JPA provider that relies on proxies by default, so the switch that disables Hibernate proxies is available as a vendor extension:

A load() of a User object can’t return a proxy. The JPA operation getReference() can no longer return a proxy reference. This may be what you desired to achieve. However, disabling proxies also has consequences for all associations that reference the entity. For example, the Item entity has a seller association to a User. Consider the following operations that retrieve an Item:

In addition to retrieving the Item instance, the get() operation now also loads the linked seller of the Item; no User proxy is returned for this association. The same is true for JPA: The Item that has been loaded with find() doesn’t reference a seller proxy. The User who is selling the Item must be loaded right away. (We’ll answer the question of how it is fetched later.)

Disabling proxy generation on a global level is often too coarse-grained. Usually, you only want to disable the lazy loading behavior of a particular entity association or collection to define a fine-grained fetch plan. You want the opposite: eager loading of a particular association or collection.

Eager loading of associations and collections

You’ve seen that Hibernate is lazy by default. No associated entities or collections are initialized when you load an entity object. Naturally, you often want the opposite: to specify that a particular entity association or collection should always be loaded. You want the guarantee that this data is available in memory without an additional database hit. More important, you want a guarantee that, for example, you can access the seller of an Item if the Item instance is in detached state. You have to define this fetch plan, the part of your object network that you want to always load into memory.

Disabling proxy generation for an entity has serious consequences. All of these operations require a database hit:

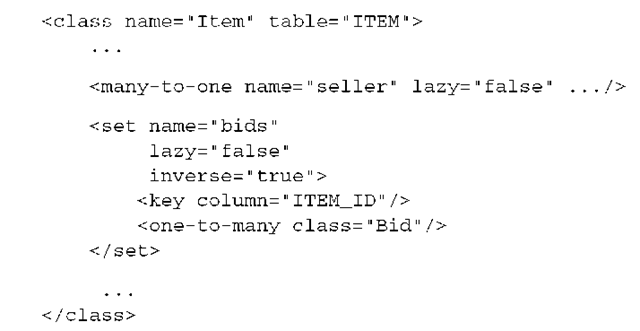

Let’s assume that you always require the seller of an Item. In Hibernate XML mapping metadata you’d map the association from Item to User as lazy="false":

The same “always load” guarantee can be applied to collections—for example, all bids of an Item:

If you now get() an Item (or force the initialization of a proxied Item), both the seller object and all the bids are loaded as persistent instances into your persistence context:

The persistence context after this call is shown graphically in figure 13.3.

Other lazy mapped associations and collections (the bidder of each Bid instance, for example) are again uninitialized and are loaded as soon as you access them. Imagine that you close the persistence context after loading an Item. You can now navigate, in detached state, to the seller of the Item and iterate through all the bids for that Item. If you navigate to the categories this Item is assigned to, you get a LazyInitializationException! Obviously, this collection wasn’t part of your fetch plan and wasn’t initialized before the persistence context was closed. This also happens if you try to access a proxy—for example, the User that approved the item. (Note that you can access this proxy two ways: through the approvedBy and bidder references.)

Figure 13.3 A larger graph fetched eagerly through disabled lazy associations and collections

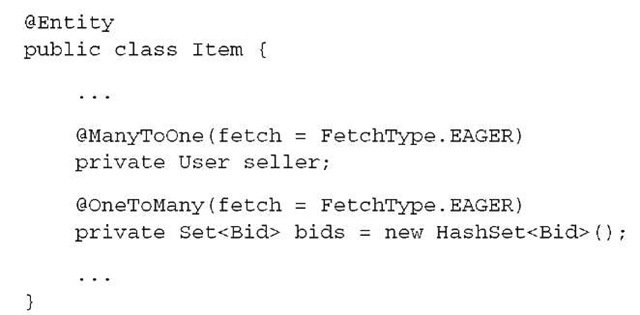

With annotations, you switch the FetchType of an entity association or a collection to get the same result:

The FetchType.EAGER provides the same guarantees as lazy="false" in Hibernate: the associated entity instance must be fetched eagerly, not lazily. We already mentioned that Java Persistence has a different default fetch plan than Hibernate. Although all associations in Hibernate are lazy by default, all @ManyToOne and @OneToOne associations default to FetchType.EAGER in Java Persistence! This default was standardized to allow Java Persistence provider implementations without lazy loading (in practice, such a persistence provider wouldn’t be very useful). We recommend that you default to the Hibernate lazy loading fetch plan by setting FetchType.LAZY in your to-one association mappings and only override it when necessary:

You now know how to create a fetch plan; that is, how you define what part of the persistent object network should be retrieved into memory. Before we show you how to define how these objects should be loaded and how you can optimize the SQL that will be executed, we’d like to demonstrate an alternative lazy loading strategy that doesn’t rely on proxies.

Lazy loading with interception

Runtime proxy generation as provided by Hibernate is an excellent choice for transparent lazy loading. The only requirements that this implementation imposes are a no-argument constructor with package or public visibility in classes that must be proxied, and nonfinal methods and class declarations. At runtime, Hibernate generates a subclass that acts as the proxy class; this isn’t possible with a private constructor or a final entity class.

On the other hand, many other persistence tools don’t use runtime proxies: They use interception. We don’t know of many good reasons why you’d use interception instead of runtime proxy generation in Hibernate. The nonprivate constructor requirement certainly isn’t a big deal. However, in two cases, you may not want to work with proxies:

■ The only cases where runtime proxies aren’t completely transparent are polymorphic associations that are tested with instanceof. Or, you may want to typecast an object but can’t, because the proxy is an instance of a runtime-generated subclass.

■ Proxies and collection wrappers can only be used to lazy load entity associations and collections. They can’t be used to lazy load individual scalar properties or components. We consider this kind of optimization to be rarely useful. For example, you usually don’t want to lazy load the initialPrice of an Item. Optimizing at the level of individual columns that are selected in SQL is unnecessary if you aren’t working with (a) a significant number of optional columns or (b) with optional columns containing large values that have to be retrieved on-demand. Large values are best represented with locator objects (LOBs); they provide lazy loading by definition without the need for interception. However, interception (in addition to proxies, usually) can help you to optimize column reads.

Let’s discuss interception for lazy loading with a few examples.

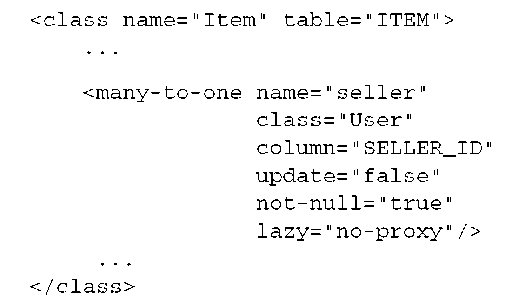

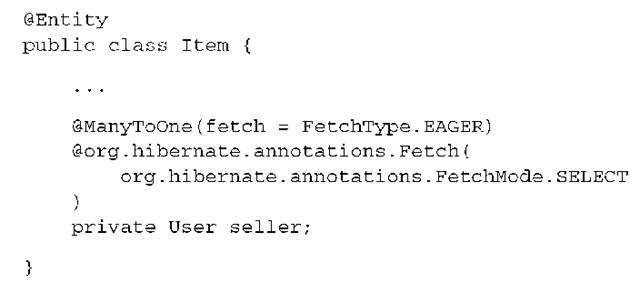

Imagine that you don’t want to utilize a proxy of the User entity class, but you still want the benefit of lazy loading an association to User, for example as the seller of an Item. You map the association with no-proxy:

The default of the lazy attribute is proxy. By setting no-proxy, you’re telling Hibernate to apply interception to this association:

The first line retrieves an Item object into persistent state. The second line accesses the seller of that Item. This call to getSeller() is intercepted by Hibernate and triggers the loading of the User in question. Note how proxies are more lazy than interception: You can call item.getSeller().getId() without forcing initialization of the proxy. This makes interception less useful if you only want to set references, as we discussed earlier.

You can also lazy load properties that are mapped with <property> or <component>; here the attribute that enables interception is lazy="true" in Hibernate XML mappings. With annotations, @Basic(fetch = FetchType.LAZY) is a hint for Hibernate that a property or component should be lazy loaded through interception.

To disable proxies and enable interception for associations with annotations, you have to rely on a Hibernate extension:

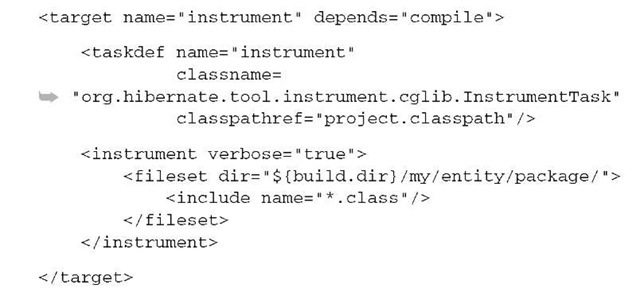

To enable interception, the bytecode of your classes must be instrumented after compilation, before runtime. Hibernate provides an Ant task for that purpose:

We leave it up to you if you want to utilize interception for lazy loading—in our experience, good use cases are rare.

Naturally, you not only want to define what part of your persistent object network must be loaded, but also how these objects are retrieved. In addition to creating a fetch plan, you want to optimize it with the right fetching strategies.

Selecting a fetch strategy

Hibernate executes SQL SELECT statements to load objects into memory. If you load an object, one or several SELECTs are executed, depending on the number of tables that are involved and the fetching strategy you’ve applied.

Your goal is to minimize the number of SQL statements and to simplify the SQL statements, so that querying can be as efficient as possible. You do this by applying the best fetching strategy for each collection or association. Let’s walk through the different options step by step.

By default, Hibernate fetches associated objects and collections lazily whenever you access them (we assume that you map all to-one associations as FetchType.LAZY if you use Java Persistence). Look at the following trivial code example:

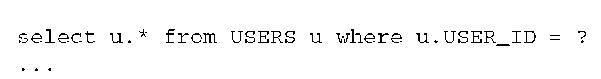

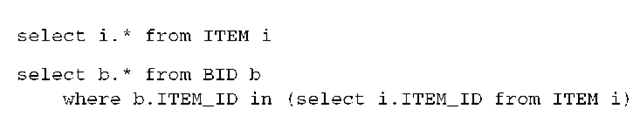

Assume that you didn’t configure any association or collection to be nonlazy and that proxies can be generated for all associations. Hence, this operation results in the following SQL SELECT:

(Note that the real SQL Hibernate produces contains automatically generated aliases; we’ve removed them for readability reasons in all the following examples.) You can see that the SELECT queries only the ITEM table and retrieves a particular row. No entity associations or collections are retrieved. If you access any proxied association or uninitialized collection, a second SELECT is executed to retrieve the data on demand.

Your first optimization step is to reduce the number of additional on-demand SELECTs you necessarily see with the default lazy behavior—for example, by prefetching data.

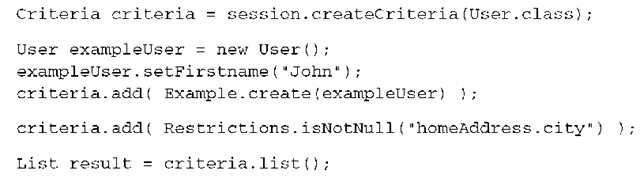

Prefetching data in batches

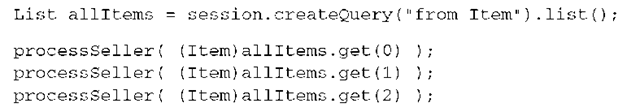

If every entity association and collection is fetched only on demand, many additional SQL SELECT statements may be necessary to complete a particular procedure. For example, consider the following query that retrieves all Item objects and accesses the data of each item’s seller:

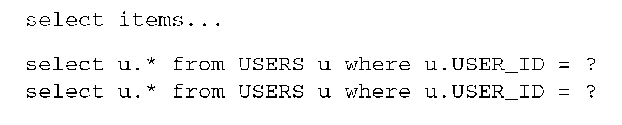

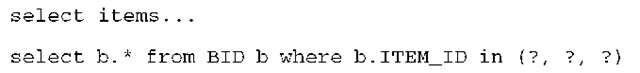

Naturally, you use a loop here and iterate through the results, but the problem this code exposes is the same. You see one SQL SELECT to retrieve all the Item objects, and an additional SELECT for every seller of an Item as soon as you process it. All associated User objects are proxies. This is one of the worst-case scenarios we’ll describe later in more detail: the n+1 selects problem. This is what the SQL looks like:

Hibernate offers some algorithms that can prefetch User objects. The first optimization we now discuss is called batch fetching, and it works as follows: If one proxy of a User must be initialized, go ahead and initialize several in the same SELECT. In other words, if you already know that there are three Item instances in the persistence context, and that they all have a proxy applied to their seller association, you may as well initialize all the proxies instead of just one.

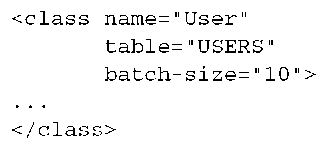

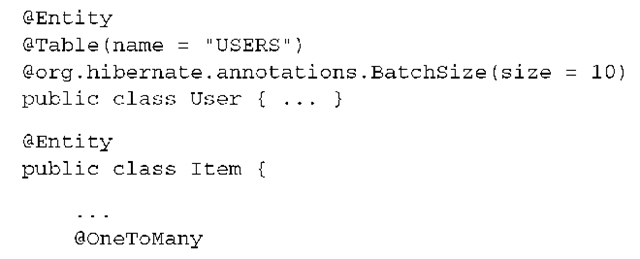

Batch fetching is often called a blind-guess optimization, because you don’t know how many uninitialized User proxies may be in a particular persistence context. In the previous example, this number depends on the number of Item objects returned. You make a guess and apply a batch-size fetching strategy to your User class mapping:

You’re telling Hibernate to prefetch up to 10 uninitialized proxies in a single SQL SELECT, if one proxy must be initialized. The resulting SQL for the earlier query and procedure may now look as follows:

The first statement that retrieves all Item objects is executed when you list() the query. The next statement, retrieving three User objects, is triggered as soon as you initialize the first proxy returned by allItems.get(0).getSeller(). This query loads three sellers at once, because this is how many items the initial query returned and how many proxies are uninitialized in the current persistence context. You defined the batch size as “up to 10.” If more than 10 items are returned, you see how the second query retrieves 10 sellers in one batch. If the application hits another proxy that hasn’t been initialized, a batch of another 10 is retrieved, and so on, until no more uninitialized proxies are left in the persistence context or the application stops accessing proxied objects.
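The effect of the batch size on the number of SELECTs can be sketched with a small simulation. This is a simplified model of the behavior as explained here, not Hibernate's loader: the BatchFetchModel class is hypothetical, every item is assumed to have a distinct seller, and a batch size of 1 reproduces the n+1 selects behavior of plain lazy loading.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

// Simplified model of batch fetching; not Hibernate's actual loader.
class BatchFetchModel {
    private final int batchSize;
    private final Set<Long> uninitialized = new LinkedHashSet<>(); // proxies in the context
    final List<List<Long>> selects = new ArrayList<>();            // ids loaded per SELECT

    BatchFetchModel(int batchSize, List<Long> sellerIds) {
        this.batchSize = batchSize;
        uninitialized.addAll(sellerIds);
    }

    // Called when the application touches one seller proxy.
    void initialize(long sellerId) {
        if (!uninitialized.remove(sellerId)) {
            return; // already loaded, no SQL needed
        }
        List<Long> batch = new ArrayList<>();
        batch.add(sellerId);
        // Fill the batch with other proxies that are still uninitialized.
        Iterator<Long> it = uninitialized.iterator();
        while (batch.size() < batchSize && it.hasNext()) {
            batch.add(it.next());
            it.remove();
        }
        selects.add(batch); // one simulated SELECT for the whole batch
    }

    // Touches the seller of every item in order and reports how many SELECTs ran.
    static int sellerSelects(int batchSize, int items) {
        List<Long> ids = new ArrayList<>();
        for (long id = 1; id <= items; id++) {
            ids.add(id);
        }
        BatchFetchModel model = new BatchFetchModel(batchSize, ids);
        for (long id : ids) {
            model.initialize(id);
        }
        return model.selects.size();
    }
}
```

In this model, sellerSelects(1, 3) needs three seller SELECTs, sellerSelects(10, 3) needs one, and sellerSelects(10, 25) needs three (batches of 10, 10, and 5). The initial SELECT for the items comes on top in each case.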

FAQ What is the real batch-fetching algorithm? You can think about batch fetching as explained earlier, but you may see a slightly different algorithm if you experiment with it in practice. It’s up to you if you want to know and understand this algorithm, or if you trust Hibernate to do the right thing. As an example, imagine a batch size of 20 and a total number of 119 uninitialized proxies that have to be loaded in batches. At startup time, Hibernate reads the mapping metadata and creates 11 batch loaders internally. Each loader knows how many proxies it can initialize: 20, 10, 9, 8, 7, 6, 5, 4, 3, 2, 1. The goal is to minimize the memory consumption for loader creation and to create enough loaders that every possible batch fetch can be produced. Another goal is to minimize the number of SQL SELECTs, obviously. To initialize 119 proxies Hibernate executes seven batches (you probably expected six, because 6 x 20 > 119). The batch loaders that are applied are five times 20, one time 10, and one time 9, automatically selected by Hibernate.
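The arithmetic in this FAQ can be checked with a few lines of Java. The sketch below takes the loader sizes from the text as given and assumes a greedy rule (always pick the largest loader that does not exceed the number of remaining proxies). That rule is an assumption that happens to reproduce the numbers above; it isn't taken from Hibernate's source, and the BatchLoaderPlan class is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class BatchLoaderPlan {
    // Greedily picks the largest loader that does not exceed the remaining count.
    static List<Integer> plan(int[] loaderSizes, int proxies) {
        List<Integer> batches = new ArrayList<>();
        int remaining = proxies;
        while (remaining > 0) {
            int chosen = 0;
            for (int size : loaderSizes) {
                if (size <= remaining && size > chosen) {
                    chosen = size;
                }
            }
            if (chosen == 0) {
                throw new IllegalArgumentException("no loader fits " + remaining);
            }
            batches.add(chosen);
            remaining -= chosen;
        }
        return batches;
    }

    public static void main(String[] args) {
        int[] loaders = {20, 10, 9, 8, 7, 6, 5, 4, 3, 2, 1};
        System.out.println(plan(loaders, 119)); // [20, 20, 20, 20, 20, 10, 9]
    }
}
```

Five batches of 20 cover 100 proxies; the remaining 19 are too few for the 20-proxy loader, so they're split into 10 and 9, which gives seven batches in total.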

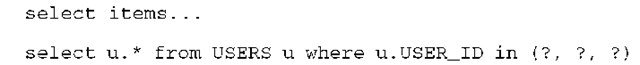

Batch fetching is also available for collections:

If you now force the initialization of one bids collection, up to 10 more collections of the same type, if they’re uninitialized in the current persistence context, are loaded right away:

In this case, you again have three Item objects in persistent state, and you touch one of the unloaded bids collections. Now all three Item objects have their bids loaded in a single SELECT.

Batch-size settings for entity proxies and collections are also available with annotations, but only as Hibernate extensions:

Prefetching proxies and collections with a batch strategy is really a blind guess. It’s a smart optimization that can significantly reduce the number of SQL statements that are otherwise necessary to initialize all the objects you’re working with. The only downside of prefetching is, of course, that you may prefetch data you won’t need in the end. The trade-off is possibly higher memory consumption, with fewer SQL statements. The latter is often much more important: Memory is cheap, but scaling database servers isn’t.

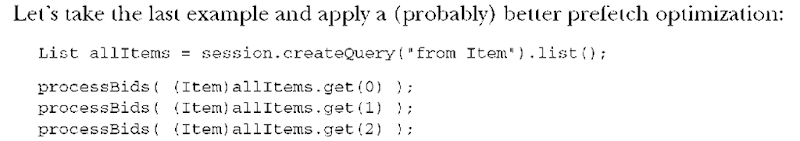

Another prefetching algorithm that isn’t a blind guess uses subselects to initialize many collections with a single statement.

Prefetching collections with subselects

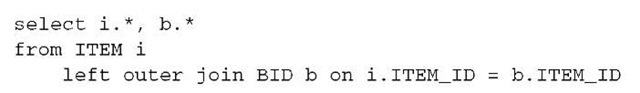

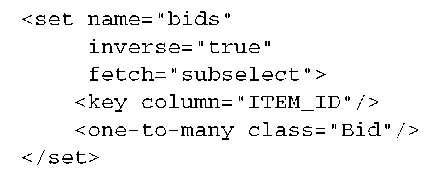

You get one initial SQL SELECT to retrieve all Item objects, and one additional SELECT for each bids collection, when it’s accessed. One possibility to improve this would be batch fetching; however, you’d need to figure out an optimum batch size by trial. A much better optimization is subselect fetching for this collection mapping:

Hibernate now initializes all bids collections for all loaded Item objects, as soon as you force the initialization of one bids collection. It does that by rerunning the first initial query (slightly modified) in a subselect:

Prefetching using a subselect is a powerful optimization; we’ll show you a few more details about it later, when we walk through a typical scenario. Subselect fetching is, at the time of writing, available only for collections, not for entity proxies. Also note that the original query that is rerun as a subselect is only remembered by Hibernate for a particular Session. If you detach an Item instance without initializing the collection of bids, and then reattach it and start iterating through the collection, no prefetching of other collections occurs.

All the previous fetching strategies are helpful if you try to reduce the number of additional SELECTs that are natural if you work with lazy loading and retrieve objects and collections on demand. The final fetching strategy is the opposite of on-demand retrieval. Often you want to retrieve associated objects or collections in the same initial SELECT with a JOIN.

Eager fetching with joins

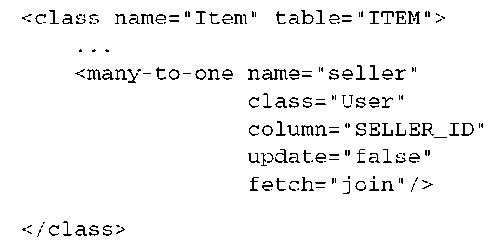

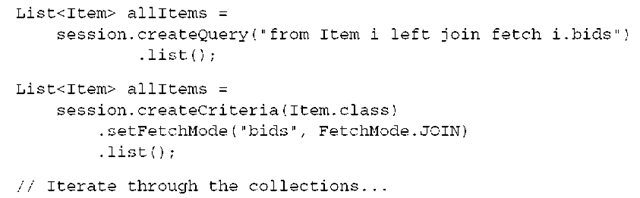

Lazy loading is an excellent default strategy. On the other hand, you can often look at your domain and data model and say, “Every time I need an Item, I also need the seller of that Item.” If you can make that statement, you should go into your mapping metadata, enable eager fetching for the seller association, and utilize SQL joins:

In annotations, you again have to use a Hibernate extension to enable this optimization:

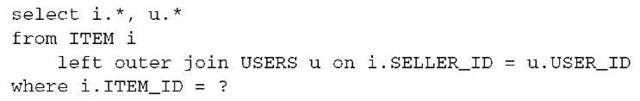

Hibernate now loads both an Item and its seller in a single SQL statement. For example:

Item item = (Item) session.get(Item.class, new Long(123));

This operation triggers a single SQL SELECT that joins the ITEM row with the row of its seller.

Obviously, the seller is no longer lazily loaded on demand, but immediately. Hence, a fetch="join" disables lazy loading. If you only enable eager fetching with lazy="false", you see an immediate second SELECT. With fetch="join", you get the seller loaded in the same single SELECT. Look at the resultset from this query shown in figure 13.4.

| ITEM_ID | DESCRIPTION | SELLER_ID | USER_ID | USERNAME |
|---|---|---|---|---|
| 1 | Item Nr. One | 2 | 2 | johndoe |

Figure 13.4 Two tables are joined to eagerly fetch associated rows.

Hibernate reads this row and marshals two objects from the result. It connects them with a reference from Item to User, the seller association. If an Item doesn’t have a seller, all u.* columns (the seller’s columns) are filled with NULL. This is why Hibernate uses an outer join, so it can retrieve not only Item objects with sellers, but all of them. But you know that an Item has to have a seller in CaveatEmptor. If you enable <many-to-one not-null="true"/>, Hibernate executes an inner join instead of an outer join.

You can also set the eager join fetching strategy on a collection:

If you now load many Item objects, for example with createCriteria(Item.class).list(), this is how the resulting SQL statement looks:

The resultset now contains many rows, with duplicate data for each Item that has many bids, and NULL fillers for all Item objects that don’t have bids. Look at the resultset in figure 13.5.

Hibernate creates three persistent Item instances, as well as four Bid instances, and links them all together in the persistence context so that you can navigate this graph and iterate through collections—even when the persistence context is closed and all objects are detached.
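The way duplicate item data collapses during marshaling can be pictured with a small sketch in plain Java. This is not Hibernate’s implementation; the Row class and the marshal() method are hypothetical names chosen for illustration, and the rows are copied from figure 13.5 (reduced to the two identifier columns).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class JoinResultSketch {

    /** One row of the outer-joined result; bidId is null for an item without bids. */
    static final class Row {
        final long itemId;
        final Long bidId;

        Row(long itemId, Long bidId) {
            this.itemId = itemId;
            this.bidId = bidId;
        }
    }

    /** The five rows of figure 13.5, reduced to ITEM_ID and BID_ID. */
    static List<Row> figureRows() {
        return Arrays.asList(
            new Row(1, 1L), new Row(1, 2L), new Row(1, 3L),
            new Row(2, 4L),
            new Row(3, null));
    }

    /** Groups rows by item identifier; repeated item data collapses into one entry. */
    static Map<Long, List<Long>> marshal(List<Row> rows) {
        Map<Long, List<Long>> bidsByItem = new LinkedHashMap<>();
        for (Row row : rows) {
            List<Long> bids = bidsByItem.computeIfAbsent(row.itemId, id -> new ArrayList<>());
            if (row.bidId != null) { // NULL filler: the item exists but has no bids
                bids.add(row.bidId);
            }
        }
        return bidsByItem;
    }

    public static void main(String[] args) {
        System.out.println(marshal(figureRows()));
    }
}
```

Five result rows become three items and four bids; the third item ends up with an empty collection rather than being dropped, which is what the outer join buys you.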

Eager-fetching collections using inner joins is conceptually possible, and we’ll do this later in HQL queries. However, it wouldn’t make sense to cut off all the Item objects without bids in a global fetching strategy in mapping metadata, so there is no option for global inner join eager fetching of collections.

With Java Persistence annotations, you enable eager fetching with a FetchType annotation attribute:

This mapping example should look familiar: You used it to disable lazy loading of an association and a collection earlier. Hibernate by default interprets this as an eager fetch that shouldn’t be executed with an immediate second SELECT, but with a JOIN in the initial query.

| ITEM_ID | DESCRIPTION | BID_ID | ITEM_ID | AMOUNT |
|---|---|---|---|---|
| 1 | Item Nr. One | 1 | 1 | 99.00 |
| 1 | Item Nr. One | 2 | 1 | 100.00 |
| 1 | Item Nr. One | 3 | 1 | 101.00 |
| 2 | Item Nr. Two | 4 | 2 | 4.99 |
| 3 | Item Nr. Three | NULL | NULL | NULL |

Figure 13.5 Outer join fetching of associated collection elements

You can keep the FetchType.EAGER Java Persistence annotation but switch from join fetching to an immediate second select explicitly by adding a Hibernate extension annotation:

If an Item instance is loaded, Hibernate will eagerly load the seller of this item with an immediate second SELECT.

Finally, we have to introduce a global Hibernate configuration setting that you can use to control the maximum number of joined entity associations (not collections). Consider all many-to-one and one-to-one association mappings you’ve set to fetch="join" (or FetchType.EAGER) in your mapping metadata. Let’s assume that Item has a successfulBid association, that Bid has a bidder, and that User has a shippingAddress. If all these associations are mapped with fetch="join", how many tables are joined and how much data is retrieved when you load an Item?

The number of tables joined in this case depends on the global hibernate.max_fetch_depth configuration property. By default, no limit is set, so loading an Item also retrieves a Bid, a User, and an Address in a single select. Reasonable settings are small, usually between 1 and 5. You may even disable join fetching for many-to-one and one-to-one associations by setting the property to 0! (Note that some database dialects may preset this property: For example, MySQLDialect sets it to 2.)
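As a rough mental model, the effect of the depth limit on the Item example can be sketched in plain Java. The class below is hypothetical and deliberately simplified: it assumes a single chain of join-fetched associations and treats the limit as “follow at most this many links”; it doesn’t call Hibernate at all.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class FetchDepthSketch {

    /** The chain from the text: Item -> successfulBid -> bidder -> shippingAddress. */
    static final List<String> CHAIN =
        Arrays.asList("successfulBid", "bidder", "shippingAddress");

    /**
     * Returns the associations that would be joined when an Item is loaded.
     * A null limit models "no limit set"; 0 models join fetching disabled.
     */
    static List<String> joinedAssociations(Integer maxFetchDepth) {
        int limit = (maxFetchDepth == null)
            ? CHAIN.size()
            : Math.max(0, Math.min(maxFetchDepth, CHAIN.size()));
        return new ArrayList<>(CHAIN.subList(0, limit));
    }

    public static void main(String[] args) {
        System.out.println(joinedAssociations(null));
        System.out.println(joinedAssociations(2));
        System.out.println(joinedAssociations(0));
    }
}
```

With no limit, all three associations are joined; with a limit of 2, the shippingAddress would be left to another fetching strategy; with 0, nothing is joined.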

SQL queries also get more complex if inheritance or joined mappings are involved. You need to consider a few extra optimization options whenever secondary tables are mapped for a particular entity class.

Optimizing fetching for secondary tables

If you query for objects that are of a class which is part of an inheritance hierarchy, the SQL statements get more complex:

This operation retrieves all BillingDetails instances. The SQL SELECT now depends on the inheritance mapping strategy you’ve chosen for BillingDetails and its subclasses CreditCard and BankAccount. Assuming that you’ve mapped them all to one table (a table-per-hierarchy), the query isn’t any different than the one shown in the previous section. However, if you’ve mapped them with implicit polymorphism, this single HQL operation may result in several SQL SELECTs against each table of each subclass.

Outer joins for a table-per-subclass hierarchy

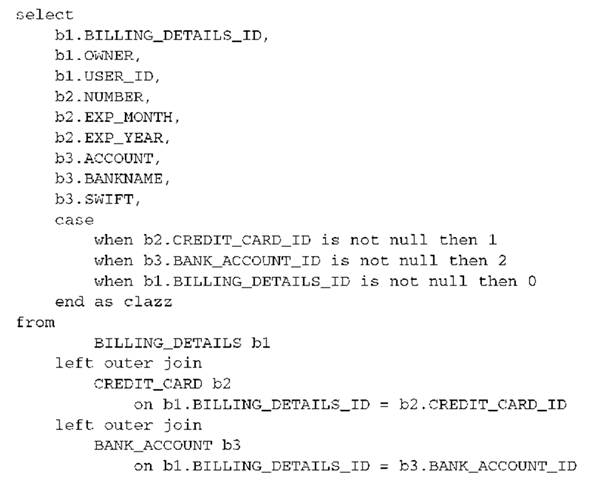

If you map the hierarchy in a normalized fashion, all subclass tables are OUTER JOINed in the initial statement:

This is already an interesting query. It joins three tables and utilizes a CASE … WHEN … END expression to fill in the clazz column with a number between 0 and 2. Hibernate can then read the resultset and decide on the basis of this number what class each of the returned rows represents an instance of.

Many database-management systems limit the maximum number of tables that can be combined with an OUTER JOIN. You’ll possibly hit that limit if you have a wide and deep inheritance hierarchy mapped with a normalized strategy (we’re talking about inheritance hierarchies that should be reconsidered to accommodate the fact that after all, you’re working with an SQL database).

Switching to additional selects

In mapping metadata, you can then tell Hibernate to switch to a different fetching strategy. You want some parts of your inheritance hierarchy to be fetched with immediate additional SELECT statements, not with an OUTER JOIN in the initial query.

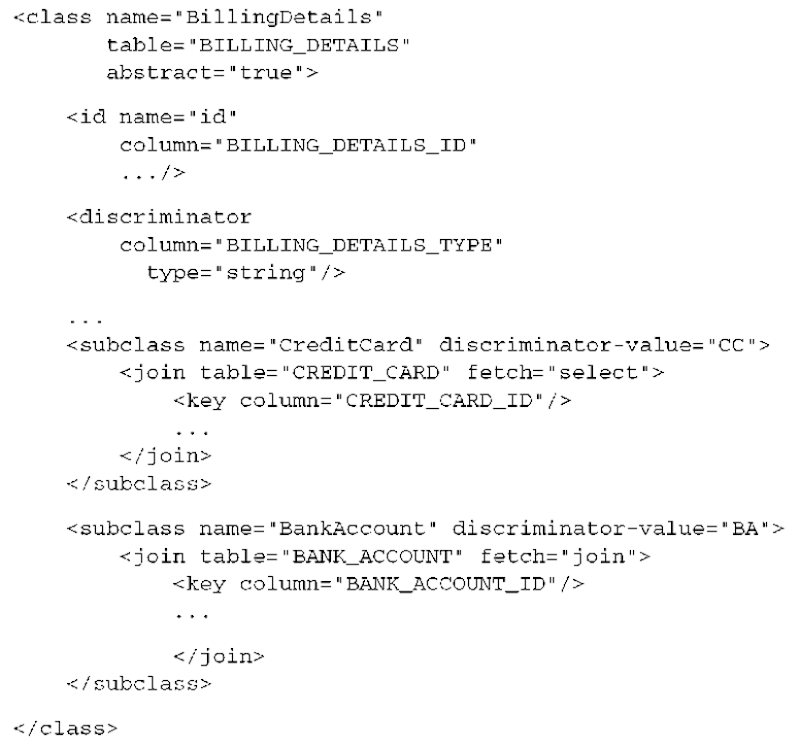

The only way to enable this fetching strategy is to refactor the mapping slightly, as a mix of table-per-hierarchy (with a discriminator column) and table-per-subclass with the <join> mapping:

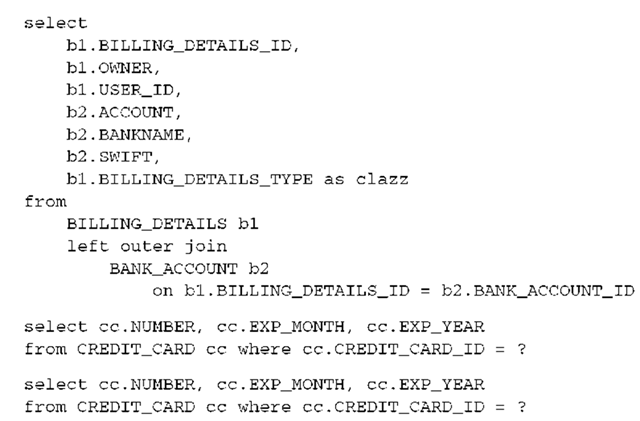

This mapping breaks out the CreditCard and BankAccount classes each into its own table but preserves the discriminator column in the superclass table. The fetching strategy for CreditCard objects is select, whereas the strategy for BankAccount is the default, join. Now, if you query for all BillingDetails, the following SQL is produced:

The first SQL SELECT retrieves all rows from the superclass table and all rows from the BANK_ACCOUNT table. It also returns discriminator values for each row as the clazz column. Hibernate now executes an additional select against the CREDIT_CARD table for each row of the first result that had the right discriminator for a CreditCard. In other words, two queries mean that two rows in the BILLING_DETAILS superclass table represent (part of) a CreditCard object.

This kind of optimization is rarely necessary, but you now also know that you can switch from a default join fetching strategy to an additional immediate select whenever you deal with a <join> mapping.

We’ve now completed our journey through all options you can set in mapping metadata to influence the default fetch plan and fetching strategy. You learned how to define what should be loaded by manipulating the lazy attribute, and how it should be loaded by setting the fetch attribute. In annotations, you use FetchType.LAZY and FetchType.EAGER, and you use Hibernate extensions for more fine-grained control of the fetch plan and strategy.

Knowing all the available options is only one step toward an optimized and efficient Hibernate or Java Persistence application. You also need to know when and when not to apply a particular strategy.

Optimization guidelines

By default, Hibernate never loads data that you didn’t ask for, which reduces the memory consumption of your persistence context. However, it also exposes you to the so-called n+1 selects problem. If every association and collection is initialized only on demand, and you have no other strategy configured, a particular procedure may well execute dozens or even hundreds of queries to get all the data you require. You need the right strategy to avoid executing too many SQL statements.

If you switch from the default strategy to queries that eagerly fetch data with joins, you may run into another problem, the Cartesian product issue. Instead of executing too many SQL statements, you may now (often as a side effect) create statements that retrieve too much data.

You need to find the middle ground between the two extremes: the correct fetching strategy for each procedure and use case in your application. You need to know which global fetch plan and strategy you should set in your mapping metadata, and which fetching strategy you apply only for a particular query (with HQL or Criteria).

We now introduce the basic problems of too many selects and Cartesian products and then walk you through optimization step by step.

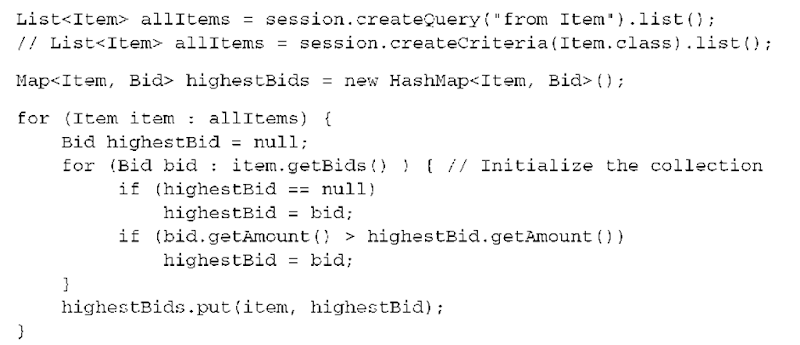

The n+1 selects problem is easy to understand with some example code. Let’s assume that you don’t configure any fetch plan or fetching strategy in your mapping metadata: Everything is lazy and loaded on demand. The following example code tries to find the highest Bids for all Items (there are many other ways to do this more easily, of course):

First you retrieve all Item instances; there is no difference between HQL and Criteria queries. This query triggers one SQL SELECT that retrieves all rows of the ITEM table and returns n persistent objects. Next, you iterate through this result and access each Item object.

What you access is the bids collection of each Item. This collection isn’t initialized so far, so the Bid objects for each item have to be loaded with an additional query. This whole code snippet therefore produces n+1 selects.

You always want to avoid n+1 selects.
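The counting can be reproduced without a database. The following sketch is an assumption-laden toy: CountingItemStore is a hypothetical stand-in for the database that increments a counter on every simulated SELECT, and the loop mirrors the “find the highest bid” procedure described above with everything loaded on demand.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy stand-in for a database that counts how many SELECTs it receives. */
class CountingItemStore {
    private final Map<Long, List<Integer>> bidsByItem = new LinkedHashMap<>();
    int selectCount = 0;

    CountingItemStore(int items) {
        for (long id = 1; id <= items; id++) {
            List<Integer> bids = new ArrayList<>();
            bids.add((int) (id * 10));      // two made-up bid amounts per item
            bids.add((int) (id * 10 + 5));
            bidsByItem.put(id, bids);
        }
    }

    /** Simulates "select all items": one SELECT. */
    List<Long> selectAllItemIds() {
        selectCount++;
        return new ArrayList<>(bidsByItem.keySet());
    }

    /** Simulates lazy initialization of one bids collection: one SELECT. */
    List<Integer> selectBidsOf(long itemId) {
        selectCount++;
        return bidsByItem.get(itemId);
    }
}

class NPlusOneSketch {
    static int highestBid(CountingItemStore store) {
        int highest = 0;
        for (long id : store.selectAllItemIds()) {       // 1 select for n items
            for (int amount : store.selectBidsOf(id)) {  // +1 select per item
                highest = Math.max(highest, amount);
            }
        }
        return highest;
    }

    public static void main(String[] args) {
        CountingItemStore store = new CountingItemStore(100);
        highestBid(store);
        System.out.println(store.selectCount + " selects for 100 items");
    }
}
```

The cost grows linearly with the number of items returned by the first query, which is why the problem often stays invisible on small test data.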

A first solution could be a change of your global mapping metadata for the collection, enabling prefetching in batches:

Instead of n+1 selects, you now see n/10+1 selects to retrieve the required collections into memory. This optimization seems reasonable for an auction application: “Only load the bids for an item when they’re needed, on demand. But if one collection of bids must be loaded for a particular item, assume that other item objects in the persistence context also need their bids collections initialized. Do this in batches, because it’s somewhat likely that not all item objects need their bids.”

With a subselect-based prefetch, you can reduce the number of selects to exactly two:

The first query in the procedure now executes a single SQL SELECT to retrieve all Item instances. Hibernate remembers this statement and applies it again when you hit the first uninitialized collection. All collections are initialized with the second query. The reasoning for this optimization is slightly different: “Only load the bids for an item when they’re needed, on demand. But if one collection of bids must be loaded, for a particular item, assume that all other item objects in the persistence context also need their bids collection initialized.”
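To compare the select counts of on-demand, batch, and subselect loading, here is a minimal simulation. It is a sketch under stated assumptions: collections are touched in iteration order, a batch always consists of the next uninitialized collections in that order (Hibernate’s actual choice of batch members may differ), and a subselect is modeled as a batch large enough to cover everything.

```java
class PrefetchSketch {

    /**
     * Counts simulated SELECTs needed to touch the bids of every item.
     * batchSize 1 models plain lazy loading, a larger value models batch
     * fetching, and Integer.MAX_VALUE models subselect fetching.
     */
    static int countSelects(int items, int batchSize) {
        int selects = 1;                          // initial query for all items
        boolean[] initialized = new boolean[items];
        for (int item = 0; item < items; item++) {
            if (initialized[item]) {
                continue;                         // already prefetched
            }
            selects++;                            // one SELECT loads a whole batch
            for (int other = item; other < items && other - item < batchSize; other++) {
                initialized[other] = true;
            }
        }
        return selects;
    }

    public static void main(String[] args) {
        System.out.println("lazy:      " + countSelects(100, 1));
        System.out.println("batch 10:  " + countSelects(100, 10));
        System.out.println("subselect: " + countSelects(100, Integer.MAX_VALUE));
    }
}
```

For 100 items this gives n+1, n/10+1, and two selects respectively, matching the figures quoted in the text.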

Finally, you can effectively turn off lazy loading of the bids collection and switch to an eager fetching strategy that results in only a single SQL SELECT:

This seems to be an optimization you shouldn’t make. Can you really say that “whenever an item is needed, all its bids are needed as well”? Fetching strategies in mapping metadata work on a global level. We don’t consider fetch="join" a common optimization for collection mappings; you rarely need a fully initialized collection all the time. In addition to resulting in higher memory consumption, every OUTER JOINed collection is a step toward a more serious Cartesian product problem, which we’ll explore in more detail soon.

In practice, you’ll most likely enable a batch or subselect strategy in your mapping metadata for the bids collection. If a particular procedure, such as this, requires all the bids for each Item in-memory, you modify the initial HQL or Criteria query and apply a dynamic fetching strategy:

Both queries result in a single SELECT that retrieves the bids for all Item instances with an OUTER JOIN (as it would if you had mapped the collection with fetch="join").

This is likely the first time you’ve seen how to define a fetching strategy that isn’t global. The global fetch plan and fetching strategy settings you put in your mapping metadata are just that: global defaults that always apply. Any optimization process also needs more fine-grained rules, fetching strategies and fetch plans that are applicable for only a particular procedure or use case. We’ll have much more to say about fetching with HQL and Criteria in the next topic. All you need to know now is that these options exist.

The n+1 selects problem appears in more situations than just when you work with lazy collections. Uninitialized proxies expose the same behavior: You may need many SELECTs to initialize all the objects you’re working with in a particular procedure. The optimization guidelines we’ve shown are the same, but there is one exception: The fetch="join" setting on <many-to-one> or <one-to-one> associations is a common optimization, as is a @ManyToOne(fetch = FetchType.EAGER) annotation (which is the default in Java Persistence). Eager join fetching of single-ended associations, unlike eager outer-join fetching of collections, doesn’t create a Cartesian product problem.

The Cartesian product problem

The opposite of the n+1 selects problem is a SELECT statement that fetches too much data. This Cartesian product problem always appears if you try to fetch several “parallel” collections.

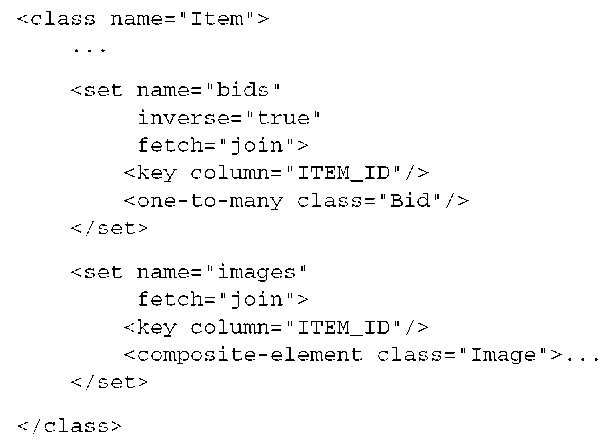

Let’s assume you’ve made the decision to apply a global fetch="join" setting to the bids collection of an Item (despite our recommendation to use global prefetching and a dynamic join-fetching strategy only when necessary). The Item class has other collections: for example, the images. Let’s also assume that you decide that all images for each item have to be loaded all the time, eagerly with a fetch="join" strategy:

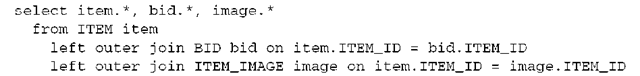

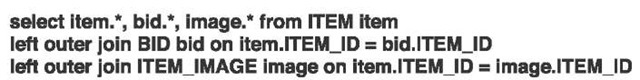

If you map two parallel collections (their owning entity is the same) with an eager outer-join fetching strategy, and load all Item objects, Hibernate executes an SQL SELECT that creates a product of the two collections:

Look at the resultset of that query, shown in figure 13.6.

This resultset contains lots of redundant data. Item 1 has three bids and two images, item 2 has one bid and one image, and item 3 has no bids and no images. The size of the product depends on the size of the collections you’re retrieving: 3 times 2, 1 times 1, plus 1, total 8 result rows. Now imagine that you have 1,000 items in the database, and each item has 20 bids and 5 images: you’ll see a resultset with possibly 100,000 rows! The size of this result may well be several megabytes. Considerable processing time and memory are required on the database server to create this resultset. All the data must be transferred across the network. Hibernate immediately removes all the duplicates when it marshals the resultset into persistent objects and collections; redundant information is skipped. Three queries are certainly faster!

| ITEM_ID | DESCRIPTION | BID_ID | ITEM_ID | AMOUNT | IMAGE_NAME |
|---|---|---|---|---|---|
| 1 | Item Nr. One | 1 | 1 | 99.00 | foo.jpg |
| 1 | Item Nr. One | 1 | 1 | 99.00 | bar.jpg |
| 1 | Item Nr. One | 2 | 1 | 100.00 | foo.jpg |
| 1 | Item Nr. One | 2 | 1 | 100.00 | bar.jpg |
| 1 | Item Nr. One | 3 | 1 | 101.00 | foo.jpg |
| 1 | Item Nr. One | 3 | 1 | 101.00 | bar.jpg |
| 2 | Item Nr. Two | 4 | 2 | 4.99 | baz.jpg |
| 3 | Item Nr. Three | NULL | NULL | NULL | NULL |

Figure 13.6 A product is the result of two outer joins with many rows.
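The row counts quoted for figure 13.6 are easy to verify with a few lines of plain Java. The sketch below only does the arithmetic; the class and method names are hypothetical. An outer join produces at least one row per item, so an empty collection counts as a factor of 1.

```java
class ProductSizeSketch {

    /** Rows produced by outer-joining both collections; each entry is {bids, images}. */
    static long productRows(int[][] bidsAndImagesPerItem) {
        long rows = 0;
        for (int[] item : bidsAndImagesPerItem) {
            rows += (long) Math.max(item[0], 1) * Math.max(item[1], 1);
        }
        return rows;
    }

    /** Rows returned in total by three separate selects: items, bids, images. */
    static long separateSelectRows(int[][] bidsAndImagesPerItem) {
        long rows = bidsAndImagesPerItem.length;
        for (int[] item : bidsAndImagesPerItem) {
            rows += item[0] + item[1];
        }
        return rows;
    }

    /** Builds a data set in which every item has the same collection sizes. */
    static int[][] uniform(int items, int bids, int images) {
        int[][] data = new int[items][];
        for (int i = 0; i < items; i++) {
            data[i] = new int[] {bids, images};
        }
        return data;
    }

    public static void main(String[] args) {
        int[][] figure = {{3, 2}, {1, 1}, {0, 0}};
        System.out.println("figure 13.6 rows: " + productRows(figure));
        System.out.println("1,000 items, product: " + productRows(uniform(1000, 20, 5)));
        System.out.println("1,000 items, three selects: " + separateSelectRows(uniform(1000, 20, 5)));
    }
}
```

For the larger data set, the product has 100,000 rows, whereas three separate selects return 1,000 + 20,000 + 5,000 = 26,000 rows in total, and none of them repeats item data.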

You get three queries if you map the parallel collections with fetch="subselect"; this is the recommended optimization for parallel collections. However, for every rule there is an exception. As long as the collections are small, a product may be an acceptable fetching strategy. Note that parallel single-valued associations that are eagerly fetched with outer-join SELECTs don’t create a product, by nature.

Finally, although Hibernate lets you create Cartesian products with fetch="join" on two (or even more) parallel collections, it throws an exception if you try to enable fetch="join" on parallel <bag> collections. The resultset of a product can’t be converted into bag collections, because Hibernate can’t know which rows contain duplicates that are valid (bags allow duplicates) and which aren’t. If you use bag collections (they are the default @OneToMany collection in Java Persistence), don’t enable a fetching strategy that results in products. Use subselects or immediate secondary-select fetching for parallel eager fetching of bag collections.
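Why a product can’t be turned into a bag is easiest to see with plain collections. In the following sketch (an illustration only, not Hibernate code), the array holds the BID_ID values of the six product rows for item 1 in figure 13.6. Collected into a set, the duplicates vanish; collected naively into a list, which is what a bag amounts to, every bid shows up twice, and nothing in the rows says whether that is intended.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class BagProductSketch {

    /** BID_ID column of the six product rows for item 1 in figure 13.6. */
    static final long[] BID_IDS = {1, 1, 2, 2, 3, 3};

    /** Set semantics: duplicates created by the product are dropped. */
    static Set<Long> collectIntoSet() {
        Set<Long> bids = new LinkedHashSet<>();
        for (long id : BID_IDS) {
            bids.add(id);
        }
        return bids;
    }

    /** Bag semantics: duplicates are legal, so every row is kept. */
    static List<Long> collectIntoBag() {
        List<Long> bids = new ArrayList<>();
        for (long id : BID_IDS) {
            bids.add(id);
        }
        return bids;
    }

    public static void main(String[] args) {
        System.out.println("set size: " + collectIntoSet().size());
        System.out.println("bag size: " + collectIntoBag().size());
    }
}
```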

Global and dynamic fetching strategies help you to solve the n+1 selects and Cartesian product problems. Hibernate offers another option to initialize a proxy or a collection that is sometimes useful.

Forcing proxy and collection initialization

A proxy or collection wrapper is automatically initialized whenever any of its methods are invoked (except for the identifier property getter, which may return the identifier value without fetching the underlying persistent object). Prefetching and eager join fetching are possible solutions to retrieve all the data you’d need.

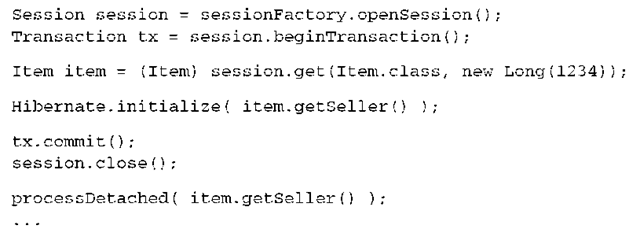

You sometimes want to work with a network of objects in detached state. You retrieve all objects and collections that should be detached and then close the persistence context.

In this scenario, it’s sometimes useful to explicitly initialize an object before closing the persistence context, without resorting to a change in the global fetching strategy or a different query (which we consider the solution you should always prefer).

You can use the static method Hibernate.initialize() for manual initialization of a proxy:

Hibernate.initialize() may be passed a collection wrapper or a proxy. Note that if you pass a collection wrapper to initialize(), it doesn’t initialize the target entity objects that are referenced by this collection. In the previous example, Hibernate.initialize(item.getBids()) wouldn’t load all the Bid objects inside that collection. It initializes the collection with proxies of Bid objects!

Explicit initialization with this static helper method is rarely necessary; you should always prefer a dynamic fetch with HQL or Criteria.

Now that you know all the options, problems, and possibilities, let’s walk through a typical application optimization procedure.

Optimization step by step

First, enable the Hibernate SQL log. You should also be prepared to read, understand, and evaluate SQL queries and their performance characteristics for your specific database schema: Will a single outer-join operation be faster than two selects? Are all the indexes used properly, and what is the cache hit-ratio inside the database? Get your DBA to help you with that performance evaluation; only a DBA has the knowledge to decide which SQL execution plan is the best. (If you want to become an expert in this area, we recommend the book SQL Tuning by Dan Tow, [Tow, 2003].)

The two configuration properties hibernate.format_sql and hibernate.use_sql_comments make it a lot easier to read and categorize SQL statements in your log files. Enable both during optimization.
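As a sketch, the logging-related settings could be collected in a java.util.Properties object like this. The helper class is hypothetical; hibernate.format_sql and hibernate.use_sql_comments are the properties named above, and hibernate.show_sql is added on the assumption that you want the SQL echoed to the console (the text doesn’t say how you enable the SQL log). The same three entries could equally well live in a hibernate.properties file.

```java
import java.util.Properties;

class SqlLoggingSettings {

    /** Settings that are useful while optimizing; turn them off again afterward. */
    static Properties forOptimization() {
        Properties settings = new Properties();
        settings.setProperty("hibernate.show_sql", "true");         // assumption: console output is wanted
        settings.setProperty("hibernate.format_sql", "true");       // pretty-print the statements
        settings.setProperty("hibernate.use_sql_comments", "true"); // add comments that help categorize them
        return settings;
    }

    public static void main(String[] args) {
        forOptimization().list(System.out);
    }
}
```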

Next, execute use case by use case of your application and note how many and what SQL statements are executed by Hibernate. A use case can be a single screen in your web application or a sequence of user dialogs. This step also involves collecting the object retrieval methods you use in each use case: walking the object links, retrieval by identifier, HQL, and Criteria queries. Your goal is to bring down the number (and complexity) of SQL statements for each use case by tuning the default fetch plan and fetching strategy in metadata.

It’s time to define your fetch plan. Everything is lazy loaded by default. Consider switching to lazy="false" (or FetchType.EAGER) on many-to-one, one-to-one, and (sometimes) collection mappings. The global fetch plan defines the objects that are always eagerly loaded. Optimize your queries and enable eager fetching if you need eagerly loaded objects not globally, but only in a particular procedure or use case.

Once the fetch plan is defined and the amount of data required by a particular use case is known, optimize how this data is retrieved. You may encounter two common issues:

■ The SQL statements use join operations that are too complex and slow. First optimize the SQL execution plan with your DBA. If this doesn’t solve the problem, remove fetch="join" on collection mappings (or don’t set it in the first place). Optimize all your many-to-one and one-to-one associations by considering if they really need a fetch="join" strategy or if the associated object should be loaded with a secondary select. Also try to tune with the global hibernate.max_fetch_depth configuration option, but keep in mind that this is best left at a value between 1 and 5.

■ Too many SQL statements may be executed. Set fetch="join" on many-to-one and one-to-one association mappings. In rare cases, if you’re absolutely sure, enable fetch="join" to disable lazy loading for particular collections. Keep in mind that more than one eagerly fetched collection per persistent class creates a product. Evaluate whether your use case can benefit from prefetching of collections, with batches or subselects. Use batch sizes between 3 and 15.

After setting a new fetching strategy, rerun the use case and check the generated SQL again. Note the SQL statements and go to the next use case. After optimizing all use cases, check every one again and see whether any global optimization had side effects for others. With some experience, you’ll easily be able to avoid any negative effects and get it right the first time.

This optimization technique is practical for more than the default fetching strategies; you may also use it to tune HQL and Criteria queries, which can define the fetch plan and the fetching strategy dynamically. You often can replace a global fetch setting with a new dynamic query or a change of an existing query; we’ll have much more to say about these options in the next topic.

In the next section, we introduce the Hibernate caching system. Caching data on the application tier is a complementary optimization that you can utilize in any sophisticated multiuser application.

Caching fundamentals

A major justification for our claim that applications using an object/relational persistence layer are expected to outperform applications built using direct JDBC is the potential for caching. Although we’ll argue passionately that most applications should be designed so that it’s possible to achieve acceptable performance without the use of a cache, there is no doubt that for some kinds of applications, especially read-mostly applications or applications that keep significant metadata in the database, caching can have an enormous impact on performance. Furthermore, scaling a highly concurrent application to thousands of online transactions usually requires some caching to reduce the load on the database server(s).

We start our exploration of caching with some background information. This includes an explanation of the different caching and identity scopes and the impact of caching on transaction isolation. This information and these rules can be applied to caching in general and are valid for more than just Hibernate applications. This discussion gives you the background to understand why the Hibernate caching system is the way it is. We then introduce the Hibernate caching system and show you how to enable, tune, and manage the first- and second-level Hibernate cache. We recommend that you carefully study the fundamentals laid out in this section before you start using the cache. Without the basics, you may quickly run into hard-to-debug concurrency problems and risk the integrity of your data.

Caching is all about performance optimization, so naturally it isn’t part of the Java Persistence or EJB 3.0 specification. Every vendor provides different solutions for optimization, in particular any second-level caching. All strategies and options we present in this section work for a native Hibernate application or an application that depends on Java Persistence interfaces and uses Hibernate as a persistence provider.

A cache keeps a representation of current database state close to the application, either in memory or on disk of the application server machine. The cache is a local copy of the data. The cache sits between your application and the database. The cache may be used to avoid a database hit whenever

■ The application performs a lookup by identifier (primary key).

■ The persistence layer resolves an association or collection lazily.

It’s also possible to cache the results of queries. The performance gain of caching query results is minimal in many cases, so this functionality is used much less often.

Before we look at how Hibernate’s cache works, let’s walk through the different caching options and see how they’re related to identity and concurrency.

Caching strategies and scopes

Caching is such a fundamental concept in object/relational persistence that you can’t understand the performance, scalability, or transactional semantics of an ORM implementation without first knowing what kind of caching strategy (or strategies) it uses. There are three main types of cache:

■ Transaction scope cache—Attached to the current unit of work, which may be a database transaction or even a conversation. It’s valid and used only as long as the unit of work runs. Every unit of work has its own cache. Data in this cache isn’t accessed concurrently.

■ Process scope cache—Shared between many (possibly concurrent) units of work or transactions. This means that data in the process scope cache is accessed by concurrently running threads, obviously with implications on transaction isolation.

■ Cluster scope cache—Shared between multiple processes on the same machine or between multiple machines in a cluster. Here, network communication is an important point worth consideration.

A process scope cache may store the persistent instances themselves in the cache, or it may store just their persistent state in a disassembled format. Every unit of work that accesses the shared cache then reassembles a persistent instance from the cached data.

A cluster scope cache requires some kind of remote process communication to maintain consistency. Caching information must be replicated to all nodes in the cluster. For many (not all) applications, cluster scope caching is of dubious value, because reading and updating the cache may be only marginally faster than going straight to the database.

Persistence layers may provide multiple levels of caching. For example, a cache miss (a cache lookup for an item that isn’t contained in the cache) at the transaction scope may be followed by a lookup at the process scope. A database request is the last resort.
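The lookup order across cache levels can be sketched in a few lines of plain Java. Everything here is hypothetical and heavily simplified: state is a plain String, the “database” is a map, and the shared cache is not synchronized, so the sketch is only meaningful when run single-threaded.

```java
import java.util.HashMap;
import java.util.Map;

class TwoLevelLookupSketch {
    private final Map<Long, String> database = new HashMap<>();     // stand-in for the real database
    private final Map<Long, String> processCache = new HashMap<>(); // shared by all units of work
    int databaseHits = 0;

    void store(long id, String state) {
        database.put(id, state);
    }

    UnitOfWork beginUnitOfWork() {
        return new UnitOfWork();
    }

    /** Each unit of work owns a private transaction scope cache. */
    final class UnitOfWork {
        private final Map<Long, String> transactionCache = new HashMap<>();

        String load(long id) {
            String state = transactionCache.get(id);  // first level
            if (state == null) {
                state = processCache.get(id);         // second level
                if (state == null) {
                    databaseHits++;                   // last resort
                    state = database.get(id);
                    processCache.put(id, state);
                }
                transactionCache.put(id, state);
            }
            return state;
        }
    }

    public static void main(String[] args) {
        TwoLevelLookupSketch caches = new TwoLevelLookupSketch();
        caches.store(1L, "johndoe");
        caches.beginUnitOfWork().load(1L);
        caches.beginUnitOfWork().load(1L);
        System.out.println("database hits: " + caches.databaseHits);
    }
}
```

A second unit of work misses its own (empty) transaction scope cache but finds the data at the process scope, so the database is hit only once.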

The type of cache used by a persistence layer affects the scope of object identity (the relationship between Java object identity and database identity).

Caching and object identity

Consider a transaction-scoped cache. It seems natural that this cache is also used as the identity scope of objects. This means the cache implements identity handling: Two lookups for objects using the same database identifier return the same actual Java instance. A transaction scope cache is therefore ideal if a persistence mechanism also provides unit of work-scoped object identity.

Persistence mechanisms with a process scope cache may choose to implement process-scoped identity. In this case, object identity is equivalent to database identity for the whole process. Two lookups using the same database identifier in two concurrently running units of work result in the same Java instance. Alternatively, objects retrieved from the process scope cache may be returned by value. In this case, each unit of work retrieves its own copy of the state (think about raw data), and resulting persistent instances aren’t identical. The scope of the cache and the scope of object identity are no longer the same.
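The difference between the two scopes shows up directly in Java’s == operator. The sketch below is a hypothetical model, not Hibernate code: the process scope cache stores only disassembled state (a username string), and every unit of work assembles its own User instance from it while guaranteeing identity within its own scope.

```java
import java.util.HashMap;
import java.util.Map;

class IdentityScopeSketch {

    static final class User {
        final long id;
        final String username;

        User(long id, String username) {
            this.id = id;
            this.username = username;
        }
    }

    /** Process scope cache that returns data by value: identifier -> disassembled state. */
    static final class ProcessCache {
        final Map<Long, String> stateById = new HashMap<>();
    }

    /** Unit of work with its own identity map (the transaction scope cache). */
    static final class UnitOfWork {
        private final ProcessCache shared;
        private final Map<Long, User> identityMap = new HashMap<>();

        UnitOfWork(ProcessCache shared) {
            this.shared = shared;
        }

        User load(long id) {
            User user = identityMap.get(id);
            if (user == null) {
                // Assemble a fresh instance from the shared state.
                user = new User(id, shared.stateById.get(id));
                identityMap.put(id, user);
            }
            return user;
        }
    }

    public static void main(String[] args) {
        ProcessCache cache = new ProcessCache();
        cache.stateById.put(2L, "johndoe");
        UnitOfWork one = new UnitOfWork(cache);
        UnitOfWork two = new UnitOfWork(cache);
        System.out.println("same unit of work:       " + (one.load(2L) == one.load(2L)));
        System.out.println("different units of work: " + (one.load(2L) == two.load(2L)));
    }
}
```

Within one unit of work, two lookups return the identical instance; across units of work, the instances differ even though they carry the same state.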

A cluster scope cache always needs remote communication, and in the case of POJO-oriented persistence solutions like Hibernate, objects are always passed remotely by value. A cluster scope cache therefore can’t guarantee identity across a cluster.

For typical web or enterprise application architectures, it’s most convenient that the scope of object identity be limited to a single unit of work. In other words, it’s neither necessary nor desirable to have identical objects in two concurrent threads. In other kinds of applications (including some desktop or fat-client architectures), it may be appropriate to use process scoped object identity. This is particularly true where memory is extremely limited—the memory consumption of a unit of work scoped cache is proportional to the number of concurrent threads.

However, the real downside to process-scoped identity is the need to synchronize access to persistent instances in the cache, which results in a high likelihood of deadlocks and reduced scalability due to lock contention.

Caching and concurrency

Any ORM implementation that allows multiple units of work to share the same persistent instances must provide some form of object-level locking to ensure synchronization of concurrent access. Usually this is implemented using read and write locks (held in memory) together with deadlock detection. Implementations like Hibernate that maintain a distinct set of instances for each unit of work (unit of work-scoped identity) avoid these issues to a great extent.

It’s our opinion that locks held in memory should be avoided, at least for web and enterprise applications where multiuser scalability is an overriding concern. In these applications, it usually isn’t required to compare object identity across concurrent units of work; each user should be completely isolated from other users.

There is a particularly strong case for this view when the underlying relational database implements a multiversion concurrency model (Oracle or PostgreSQL, for example). It’s somewhat undesirable for the object/relational persistence cache to redefine the transactional semantics or concurrency model of the underlying database.

Let’s consider the options again. A transaction/unit of work-scoped cache is preferred if you also use unit of work-scoped object identity and if it’s the best strategy for highly concurrent multiuser systems. This first-level cache is mandatory, because it also guarantees identical objects. However, this isn’t the only cache you can use. For some data, a second-level cache scoped to the process (or cluster) that returns data by value can be useful. This scenario therefore has two cache layers; you’ll later see that Hibernate uses this approach.

Let’s discuss which data benefits from second-level caching—in other words, when to turn on the process (or cluster) scope second-level cache in addition to the mandatory first-level transaction scope cache.

Caching and transaction isolation

A process or cluster scope cache makes data retrieved from the database in one unit of work visible to another unit of work. This may have some nasty side effects on transaction isolation.

First, if an application has nonexclusive access to the database, process scope caching shouldn’t be used, except for data which changes rarely and may be safely refreshed by a cache expiry. This type of data occurs frequently in content management-type applications but rarely in EIS or financial applications.

There are two main scenarios for nonexclusive access to look out for:

■ Clustered applications

■ Shared legacy data

Any application that is designed to scale must support clustered operation. A process scope cache doesn’t maintain consistency between the different caches on different machines in the cluster. In this case, a cluster scope (distributed) second-level cache should be used instead of the process scope cache.

Many Java applications share access to their database with other applications. In this case, you shouldn’t use any kind of cache beyond a unit of work scoped first-level cache. There is no way for a cache system to know when the legacy application updated the shared data. Actually, it’s possible to implement application-level functionality to trigger an invalidation of the process (or cluster) scope cache when changes are made to the database, but we don’t know of any standard or best way to achieve this. Certainly, it will never be a built-in feature of Hibernate. If you implement such a solution, you’ll most likely be on your own, because it’s specific to the environment and products used.

After considering nonexclusive data access, you should establish what isolation level is required for the application data. Not every cache implementation respects all transaction isolation levels, and it’s critical to find out what is required.

Let’s look at data that benefits most from a process- (or cluster-) scoped cache. In practice, we find it useful to rely on a data model diagram (or class diagram) when we make this evaluation. Take notes on the diagram that express whether a particular entity (or class) is a good or bad candidate for second-level caching.

A full ORM solution lets you configure second-level caching separately for each class. Good candidate classes for caching are classes that represent

■ Data that changes rarely

■ Noncritical data (for example, content-management data)

■ Data that is local to the application and not shared

Bad candidates for second-level caching are

■ Data that is updated often

■ Financial data

■ Data that is shared with a legacy application

These aren’t the only rules we usually apply. Many applications have a number of classes with the following properties:

■ A small number of instances

■ Each instance referenced by many instances of another class or classes

■ Instances that are rarely (or never) updated

This kind of data is sometimes called reference data. Examples of reference data are ZIP codes, reference addresses, office locations, static text messages, and so on. Reference data is an excellent candidate for caching with a process or cluster scope, and any application that uses reference data heavily will benefit greatly if that data is cached. You allow the data to be refreshed when the cache timeout period expires.
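To make the idea of refreshing reference data on a timeout concrete, here is a minimal sketch in plain Java. It isn’t part of Hibernate; the class name ReferenceDataCache, the loader function (which stands in for a database read), and the injectable clock are all assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;
import java.util.function.LongSupplier;

/** Hypothetical timeout-based cache for reference data; not a Hibernate class. */
final class ReferenceDataCache<K, V> {

    /** A cached value together with the time it was loaded. */
    private static final class Entry<V> {
        final V value;
        final long loadedAtMillis;

        Entry(V value, long loadedAtMillis) {
            this.value = value;
            this.loadedAtMillis = loadedAtMillis;
        }
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final Function<K, V> loader;   // stands in for a database read
    private final long timeoutMillis;      // how long an entry stays valid
    private final LongSupplier clock;      // injectable so expiry can be tested

    ReferenceDataCache(Function<K, V> loader, long timeoutMillis, LongSupplier clock) {
        this.loader = loader;
        this.timeoutMillis = timeoutMillis;
        this.clock = clock;
    }

    /** Returns the cached value, reloading it once the timeout has elapsed. */
    synchronized V get(K key) {
        long now = clock.getAsLong();
        Entry<V> entry = entries.get(key);
        if (entry == null || now - entry.loadedAtMillis >= timeoutMillis) {
            entry = new Entry<>(loader.apply(key), now);
            entries.put(key, entry);
        }
        return entry.value;
    }

    public static void main(String[] args) {
        ReferenceDataCache<String, String> zipCodes = new ReferenceDataCache<>(
                zip -> "city for " + zip, 60_000L, System::currentTimeMillis);
        // The first call invokes the loader; later calls within a minute don't.
        System.out.println(zipCodes.get("10115"));
        System.out.println(zipCodes.get("10115"));
    }
}
```

Between two refreshes, readers may see a value that is up to one timeout period old. That is acceptable for ZIP codes or office locations, and it’s the reason the same approach is a poor fit for frequently updated or financial data.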

We shaped a picture of a dual-layer caching system in the previous sections, with a unit of work-scoped first-level cache and an optional second-level cache with process or cluster scope. This is close to the Hibernate caching system.

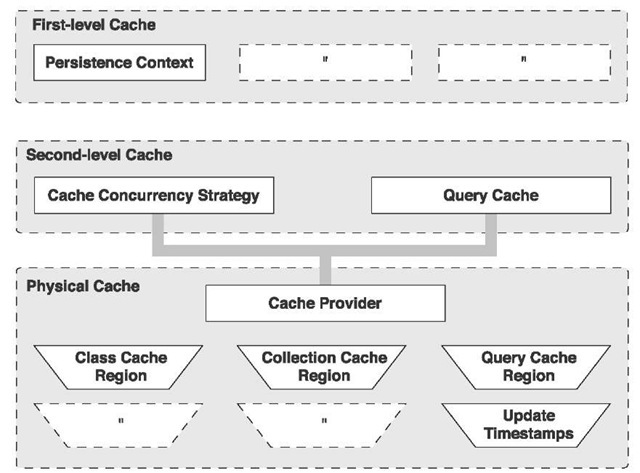

The Hibernate cache architecture

As we hinted earlier, Hibernate has a two-level cache architecture. The various elements of this system can be seen in figure 13.7:

■ The first-level cache is the persistence context cache. A Hibernate Session lifespan corresponds to either a single request (usually implemented with one database transaction) or a conversation. This is a mandatory first-level cache that also guarantees the scope of object and database identity (the exception being the StatelessSession, which doesn’t have a persistence context).

■ The second-level cache in Hibernate is pluggable and may be scoped to the process or cluster. This is a cache of state (returned by value), not of actual persistent instances. A cache concurrency strategy defines the transaction isolation details for a particular item of data, whereas the cache provider represents the physical cache implementation. Use of the second-level cache is optional and can be configured on a per-class and per-collection basis—each such cache utilizes its own physical cache region.

■ Hibernate also implements a cache for query result sets that integrates closely with the second-level cache. This is an optional feature; it requires two additional physical cache regions that hold the cached query results and the timestamps when a table was last updated. We discuss the query cache in the next topics because its usage is closely tied to the query being executed.

We’ve already discussed the first-level cache, the persistence context, in detail.

Let’s go straight to the optional second-level cache.

The Hibernate second-level cache

The Hibernate second-level cache has process or cluster scope: All persistence contexts that have been started from a particular SessionFactory (or are associated with EntityManagers of a particular persistence unit) share the same second-level cache.

Figure 13.7 Hibernate’s two-level cache architecture

Persistent instances are stored in the second-level cache in a disassembled form. Think of disassembly as a process a bit like serialization (the algorithm is much, much faster than Java serialization, however).
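The interplay of the two layers can be illustrated with a small sketch in plain Java. It is a deliberately simplified model, not Hibernate’s implementation: the names TwoLevelCacheSketch, SecondLevelCache, and UnitOfWork are hypothetical, the "disassembled form" is reduced to an array of property values, and the database is simulated by a counter.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Simplified illustration of two cache layers; every name here is hypothetical. */
final class TwoLevelCacheSketch {

    /** A minimal persistent class. */
    static final class Category {
        final long id;
        final String name;

        Category(long id, String name) {
            this.id = id;
            this.name = name;
        }
    }

    /** Process-scoped cache: stores disassembled state (property values), never instances. */
    static final class SecondLevelCache {
        private final Map<Long, Object[]> region = new ConcurrentHashMap<>();
        final AtomicInteger databaseHits = new AtomicInteger();

        Object[] load(long id) {
            Object[] state = region.computeIfAbsent(id, key -> {
                databaseHits.incrementAndGet();           // simulated SELECT
                return new Object[] { "Category " + key };
            });
            return state.clone();                         // hand out a copy, not the cached array
        }
    }

    /** One unit of work with its own first-level cache (an identity map). */
    static final class UnitOfWork {
        private final Map<Long, Category> firstLevel = new HashMap<>();
        private final SecondLevelCache secondLevel;

        UnitOfWork(SecondLevelCache secondLevel) {
            this.secondLevel = secondLevel;
        }

        Category get(long id) {
            Category cached = firstLevel.get(id);
            if (cached != null) {
                return cached;                            // same instance within this unit of work
            }
            Object[] state = secondLevel.load(id);
            Category assembled = new Category(id, (String) state[0]);  // assemble a fresh instance
            firstLevel.put(id, assembled);
            return assembled;
        }
    }

    public static void main(String[] args) {
        SecondLevelCache shared = new SecondLevelCache();
        UnitOfWork first = new UnitOfWork(shared);
        UnitOfWork second = new UnitOfWork(shared);
        System.out.println(first.get(1L) == first.get(1L));   // true: identity inside one unit of work
        System.out.println(first.get(1L) == second.get(1L));  // false: each unit of work has its own instance
        System.out.println(shared.databaseHits.get());        // 1: the second unit of work was served from the cache
    }
}
```

Because the shared layer hands out state by value, no unit of work ever holds a reference to an instance that another unit of work can modify, so no in-memory object locking is needed.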

The internal implementation of this process/cluster scope cache isn’t of much interest. More important is the correct usage of the cache policies—caching strategies and physical cache providers.

Different kinds of data require different cache policies: The ratio of reads to writes varies, the size of the database tables varies, and some tables are shared with other external applications. The second-level cache is configurable at the granularity of an individual class or collection role. This lets you, for example, enable the second-level cache for reference data classes and disable it for classes that represent financial records. The cache policy involves setting the following:

■ Whether the second-level cache is enabled

■ The Hibernate concurrency strategy

■ The cache expiration policies (such as timeout, LRU, and memory-sensitive)

■ The physical format of the cache (memory, indexed files, cluster-replicated)

Not all classes benefit from caching, so it’s important to be able to disable the second-level cache. To repeat, the cache is usually useful only for read-mostly classes. If you have data that is updated much more often than it’s read, don’t enable the second-level cache, even if all other conditions for caching are true! The price of maintaining the cache during updates can possibly outweigh the performance benefit of faster reads. Furthermore, the second-level cache can be dangerous in systems that share the database with other writing applications. As explained in earlier sections, you must exercise careful judgment here for each class and collection you want to enable caching for.

The Hibernate second-level cache is set up in two steps. First, you have to decide which concurrency strategy to use. After that, you configure cache expiration and physical cache attributes using the cache provider.

Built-in concurrency strategies

A concurrency strategy is a mediator: It’s responsible for storing items of data in the cache and retrieving them from the cache. This is an important role, because it also defines the transaction isolation semantics for that particular item. You’ll have to decide, for each persistent class and collection, which cache concurrency strategy to use if you want to enable the second-level cache.

The four built-in concurrency strategies represent decreasing levels of strictness in terms of transaction isolation:

■ Transactional—Available in a managed environment only, it guarantees full transactional isolation up to repeatable read, if required. Use this strategy for read-mostly data where it’s critical to prevent stale data in concurrent transactions, in the rare case of an update.

■ Read-write—This strategy maintains read committed isolation, using a timestamping mechanism, and is available only in nonclustered environments. Again, use this strategy for read-mostly data where it’s critical to prevent stale data in concurrent transactions, in the rare case of an update.

■ Nonstrict-read-write—Makes no guarantee of consistency between the cache and the database. If there is a possibility of concurrent access to the same entity, you should configure a sufficiently short expiry timeout. Otherwise, you may read stale data from the cache. Use this strategy if data hardly ever changes (it stays unchanged for many hours, days, or even a week) and a small likelihood of stale data isn’t of critical concern.

■ Read-only—A concurrency strategy suitable for data which never changes. Use it for reference data only.

Note that with decreasing strictness comes increasing performance. You have to carefully evaluate the performance of a clustered cache with full transaction isolation before using it in production. In many cases, you may be better off disabling the second-level cache for a particular class if stale data isn’t an option! First benchmark your application with the second-level cache disabled. Enable it for good candidate classes, one at a time, while continuously testing the scalability of your system and evaluating concurrency strategies.
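The difference in strictness is easiest to see at the two relaxed ends of the list. The following toy regions, written in plain Java, contrast a read-only strategy with a nonstrict read-write strategy. This is a sketch under simplifying assumptions, not Hibernate’s code: the Region interface and its method names are invented for illustration, and the transactional and read-write strategies are left out because they need locking or timestamps.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Toy cache regions contrasting two strategies; not Hibernate's implementation. */
final class StrategySketch {

    /** Common shape of a cache region in this sketch (hypothetical interface). */
    interface Region {
        Object get(long id);
        void putFromLoad(long id, Object state);     // called after a database read
        void afterUpdate(long id, Object newState);  // called after a database write
    }

    /** Read-only: the data never changes, so an update is treated as a programming error. */
    static final class ReadOnlyRegion implements Region {
        private final Map<Long, Object> items = new ConcurrentHashMap<>();

        public Object get(long id) { return items.get(id); }

        public void putFromLoad(long id, Object state) { items.put(id, state); }

        public void afterUpdate(long id, Object newState) {
            throw new UnsupportedOperationException("read-only data must not be updated");
        }
    }

    /** Nonstrict read-write: no locks; an update merely evicts the cached entry. */
    static final class NonstrictReadWriteRegion implements Region {
        private final Map<Long, Object> items = new ConcurrentHashMap<>();

        public Object get(long id) { return items.get(id); }

        public void putFromLoad(long id, Object state) { items.put(id, state); }

        public void afterUpdate(long id, Object newState) {
            items.remove(id);   // the next reader reloads from the database
        }
    }

    public static void main(String[] args) {
        Region region = new NonstrictReadWriteRegion();
        region.putFromLoad(1L, "old name");
        region.afterUpdate(1L, "new name");
        System.out.println(region.get(1L));   // null: the entry was evicted
    }
}
```

In the nonstrict variant, nothing stops a concurrent reader from putting an old value back into the region around the time of the update, which is why a short expiry timeout is the only safety net. The stricter strategies pay for closing that window with extra bookkeeping on every write.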

It’s possible to define your own concurrency strategy by implementing org.hibernate.cache.CacheConcurrencyStrategy, but this is a relatively difficult task and appropriate only for rare cases of optimization.

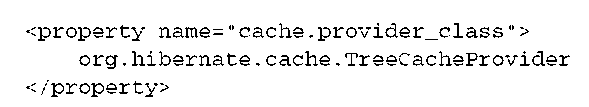

Your next step after considering the concurrency strategies you’ll use for your cache candidate classes is to pick a cache provider. The provider is a plug-in, the physical implementation of a cache system.

Choosing a cache provider

For now, Hibernate forces you to choose a single cache provider for the whole application. Providers for the following open source products are built into Hibernate:

■ EHCache is a cache provider intended for a simple process scope cache in a single JVM. It can cache in memory or on disk, and it supports the optional Hibernate query result cache. (The latest version of EHCache now supports clustered caching, but we haven’t tested this yet.)

■ OpenSymphony OSCache is a service that supports caching to memory and disk in a single JVM, with a rich set of expiration policies and query cache support.

■ SwarmCache is a cluster cache based on JGroups. It uses clustered invalidation but doesn’t support the Hibernate query cache.

■ JBoss Cache is a fully transactional replicated clustered cache also based on the JGroups multicast library. It supports replication or invalidation, synchronous or asynchronous communication, and optimistic and pessimistic locking. The Hibernate query cache is supported, assuming that clocks are synchronized in the cluster.

It’s easy to write an adaptor for other products by implementing org.hibernate.cache.CacheProvider. Many commercial caching systems are pluggable into Hibernate with this interface.

Not every cache provider is compatible with every concurrency strategy! The compatibility matrix in table 13.1 will help you choose an appropriate combination.

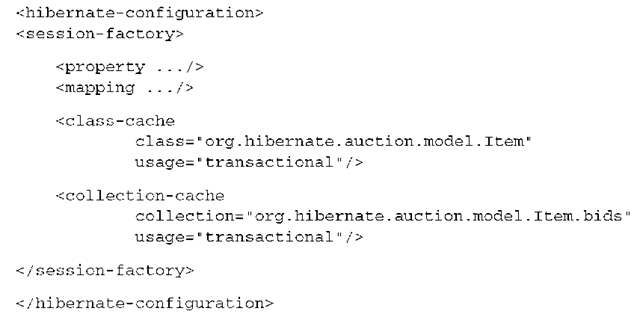

Setting up caching involves two steps: First, you look at the mapping metadata for your persistent classes and collections and decide which cache concurrency strategy you’d like to use for each class and each collection. In the second step, you enable your preferred cache provider in the global Hibernate configuration and customize the provider-specific settings and physical cache regions. For example, if you’re using OSCache, you edit oscache.properties, or for EHCache, ehcache.xml in your classpath.

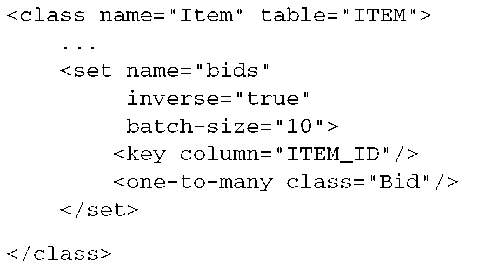

Table 13.1 Cache concurrency strategy support

| Cache provider | Read-only | Nonstrict-read-write | Read-write | Transactional |
|---|---|---|---|---|
| EHCache | X | X | X | |
| OSCache | X | X | X | |
| SwarmCache | X | X | | |
| JBoss Cache | X | | | X |
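If you want to guard against an unsupported combination in your own tooling, the matrix in table 13.1 can be encoded as a simple lookup. The following plain Java sketch does that; the class CacheCompatibility and its enum names are hypothetical and exist only for this example, and the data reflects the table as printed here, not necessarily later provider versions.

```java
import java.util.EnumMap;
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

/** Encodes table 13.1 as a lookup; a hypothetical helper, not part of Hibernate. */
final class CacheCompatibility {

    enum Strategy { READ_ONLY, NONSTRICT_READ_WRITE, READ_WRITE, TRANSACTIONAL }

    enum Provider { EHCACHE, OSCACHE, SWARMCACHE, JBOSS_CACHE }

    private static final Map<Provider, Set<Strategy>> SUPPORTED = new EnumMap<>(Provider.class);

    static {
        SUPPORTED.put(Provider.EHCACHE,
                EnumSet.of(Strategy.READ_ONLY, Strategy.NONSTRICT_READ_WRITE, Strategy.READ_WRITE));
        SUPPORTED.put(Provider.OSCACHE,
                EnumSet.of(Strategy.READ_ONLY, Strategy.NONSTRICT_READ_WRITE, Strategy.READ_WRITE));
        SUPPORTED.put(Provider.SWARMCACHE,
                EnumSet.of(Strategy.READ_ONLY, Strategy.NONSTRICT_READ_WRITE));
        SUPPORTED.put(Provider.JBOSS_CACHE,
                EnumSet.of(Strategy.READ_ONLY, Strategy.TRANSACTIONAL));
    }

    /** Returns true if the table marks the combination with an X. */
    static boolean supports(Provider provider, Strategy strategy) {
        return SUPPORTED.get(provider).contains(strategy);
    }

    public static void main(String[] args) {
        System.out.println(supports(Provider.EHCACHE, Strategy.READ_WRITE));      // true
        System.out.println(supports(Provider.SWARMCACHE, Strategy.READ_WRITE));   // false
    }
}
```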

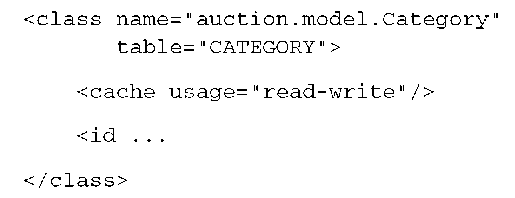

Let’s enable caching for the CaveatEmptor Category, Item, and Bid classes.

Caching in practice

First we’ll consider each entity class and collection and find out what cache concurrency strategy may be appropriate. After we select a cache provider for local and clustered caching, we’ll write their configuration file(s).

Selecting a concurrency control strategy

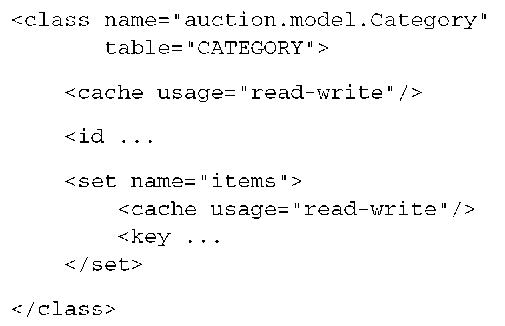

The Category has a small number of instances and is updated rarely, and instances are shared between many users. It’s a great candidate for use of the second-level cache.

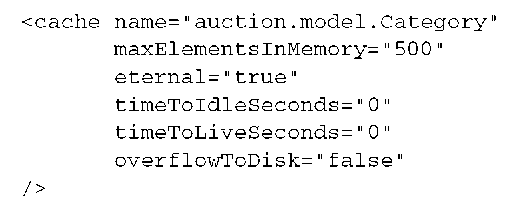

Start by adding the mapping element required to tell Hibernate to cache Category instances.

The usage="read-write" attribute tells Hibernate to use a read-write concurrency strategy for the auction.model.Category cache. Hibernate now hits the second-level cache whenever you navigate to a Category or when you load a Category by identifier.

If you use annotations, you need a Hibernate extension:

You use read-write instead of nonstrict-read-write because Category is a highly concurrent class, shared between many concurrent transactions. (It’s clear that a read committed isolation level is good enough.) A nonstrict-read-write strategy would rely only on cache expiration (timeout), but you prefer changes to categories to be visible immediately.

The class caches are always enabled for a whole hierarchy of persistent classes. You can’t cache only the instances of a particular subclass.

This mapping is enough to tell Hibernate to cache all simple Category property values, but not the state of associated entities or collections. Collections require their own <cache> region. For the items collection you use a read-write concurrency strategy:

The region name of the collection cache is the fully qualified class name plus the collection property name, auction.model.Category.items. The @org.hibernate.annotations.Cache annotation can also be declared on a collection field or getter method.
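With annotations, the class and collection cache settings discussed above might look like the following mapping fragment. Treat it as a sketch: the table name, the identifier property, and the use of @ManyToMany for the items collection are assumptions made for illustration, Item is assumed to be another mapped entity in the same package, and the fragment compiles only with the JPA and Hibernate Annotations libraries on the classpath.

```java
package auction.model;

import java.util.HashSet;
import java.util.Set;

import javax.persistence.Entity;
import javax.persistence.Id;
import javax.persistence.ManyToMany;
import javax.persistence.Table;

import org.hibernate.annotations.Cache;
import org.hibernate.annotations.CacheConcurrencyStrategy;

@Entity
@Table(name = "CATEGORY")                              // table name is an assumption
@Cache(usage = CacheConcurrencyStrategy.READ_WRITE)    // class cache region: auction.model.Category
public class Category {

    @Id
    private Long id;                                   // identifier mapping is an assumption

    private String name;

    @ManyToMany                                        // association type is an assumption
    @Cache(usage = CacheConcurrencyStrategy.READ_WRITE) // collection region: auction.model.Category.items
    private Set<Item> items = new HashSet<Item>();

    public Set<Item> getItems() {
        return items;
    }
}
```

The class-level annotation and the collection-level annotation are independent. Removing either one disables caching only for the region it names.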

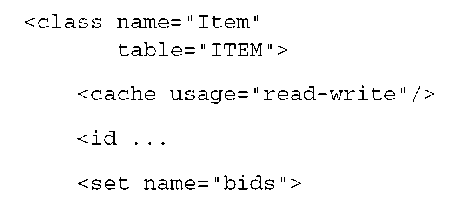

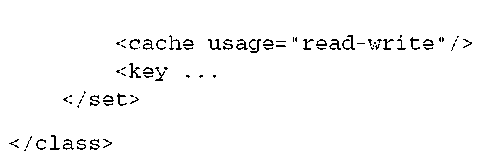

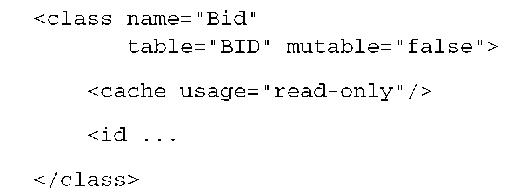

This cache setting is effective when you call aCategory.getItems()—in other words, a collection cache is a region that contains “which items are in which category.” It’s a cache of identifiers only; there is no actual Category or Item data in that region.
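A consequence of caching identifiers only is that the collection region is of limited use on its own: each Item still has to be resolved by its identifier. The following plain Java sketch models this with two maps and a hit counter. All names are hypothetical, and the "database" is simulated, so read it as an illustration of the idea and not as a description of Hibernate internals.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of a collection cache region; names and structure are hypothetical. */
final class CollectionRegionSketch {

    /** Stands in for the region auction.model.Category.items: category id -> item ids only. */
    final Map<Long, List<Long>> categoryItems = new HashMap<>();

    /** Stands in for a separate Item entity region, used only if Item caching is on. */
    final Map<Long, String> itemRegion = new HashMap<>();

    final boolean itemCachingEnabled;
    int itemDatabaseHits = 0;

    CollectionRegionSketch(boolean itemCachingEnabled) {
        this.itemCachingEnabled = itemCachingEnabled;
    }

    /** Resolves a cached collection; every identifier needs its own item lookup. */
    List<String> getItems(long categoryId) {
        List<Long> ids = categoryItems.getOrDefault(categoryId, Collections.<Long>emptyList());
        List<String> items = new ArrayList<>();
        for (Long itemId : ids) {
            String state = itemCachingEnabled ? itemRegion.get(itemId) : null;
            if (state == null) {
                itemDatabaseHits++;                    // simulated SELECT for a single item
                state = "Item " + itemId;
                if (itemCachingEnabled) {
                    itemRegion.put(itemId, state);
                }
            }
            items.add(state);
        }
        return items;
    }

    public static void main(String[] args) {
        CollectionRegionSketch sketch = new CollectionRegionSketch(false);
        sketch.categoryItems.put(1L, Collections.singletonList(10L));
        sketch.getItems(1L);
        sketch.getItems(1L);
        System.out.println(sketch.itemDatabaseHits);   // 2: the item is fetched on every access
    }
}
```

In this model, caching the collection without also caching Item turns one collection query into one lookup per element on every access, which can be slower than not caching the collection at all.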