Face Recognition

Face recognition is a task that humans perform routinely and effortlessly in their daily lives. The wide availability of powerful and low-cost desktop and embedded computing systems has created enormous interest in the automatic processing of digital images in a variety of applications, including biometric authentication, surveillance, human-computer interaction, and multimedia management. Research and development in automatic face recognition follows naturally.

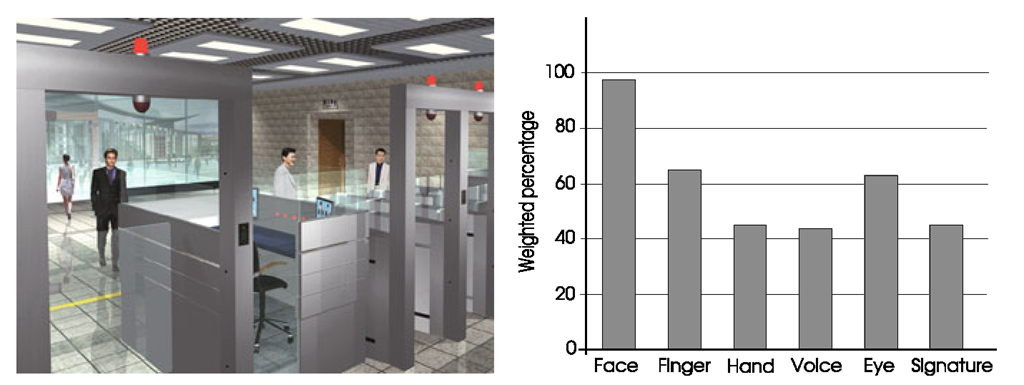

Face recognition has several advantages over other biometric modalities such as fingerprint and iris: besides being natural and nonintrusive, the most important advantage of the face is that it can be captured at a distance and in a covert manner. Among the six biometric attributes considered by Hietmeyer [16], facial features scored the highest compatibility in a Machine Readable Travel Documents (MRTD) [27] system based on a number of evaluation factors, such as enrollment, renewal, machine requirements, and public perception, as shown in Fig. 1.1. Face recognition, as one of the major biometric technologies, has become increasingly important owing to rapid advances in image capture devices (surveillance cameras, cameras in mobile phones), the availability of huge amounts of face images on the Web, and increased demands for higher security.

The first automated face recognition system was developed by Takeo Kanade in his Ph.D. thesis work [18] in 1973. There was a dormant period in automatic face recognition until the work by Sirovich and Kirby [19, 38] on a low dimensional face representation, derived using the Karhunen-Loeve transform or Principal Component Analysis (PCA).

Fig. 1.1 A scenario of using biometric MRTD systems for passport control (left), and a comparison of various biometric traits based on MRTD compatibility.

It was the pioneering work of Turk and Pentland on Eigenface [42] that reinvigorated face recognition research. Other major milestones in face recognition include: the Fisherface method [3, 12], which applied Linear Discriminant Analysis (LDA) after a PCA step to achieve higher accuracy; the use of local filters such as Gabor jets [21, 45] to provide more effective facial features; and the design of the AdaBoost learning based cascade classifier architecture for real-time face detection [44].

Face recognition technology has advanced significantly since the Eigenface method was proposed. In constrained situations, for example where lighting, pose, stand-off, facial wear, and facial expression can be controlled, automated face recognition can surpass human recognition performance, especially when the database (gallery) contains a large number of faces. However, automatic face recognition still faces many challenges when face images are acquired in unconstrained environments. In the following sections, we give a brief overview of the face recognition process, analyze technical challenges, propose possible solutions, and describe state-of-the-art performance.

This topic provides an introduction to face recognition research. Main steps of face recognition processing are described. Face detection and recognition problems are explained from a face subspace viewpoint. Technology challenges are identified and possible strategies for solving some of the problems are suggested.

Categorization

As a biometric system, a face recognition system operates in either or both of two modes: (1) face verification (or authentication), and (2) face identification (or recognition). Face verification involves a one-to-one match that compares a query face image against an enrollment face image whose identity is being claimed. Person verification for self-service immigration clearance using an e-passport is one typical application.

Face identification involves one-to-many matching that compares a query face against multiple faces in the enrollment database to associate the identity of the query face with one of those in the database. In some identification applications, one just needs to find the most similar face. In a watchlist check or face identification in surveillance video, the requirement is more than finding the most similar faces; a confidence level threshold is specified and all faces whose similarity score is above the threshold are reported.

The performance of a face recognition system largely depends on a variety of factors such as illumination, facial pose, expression, age span, hair, facial wear, and motion. Based on these factors, face recognition applications may be divided into two broad categories in terms of a user’s cooperation: (1) cooperative user scenarios and (2) noncooperative user scenarios.

The cooperative case is encountered in applications such as computer login, physical access control, and e-passport, where the user is willing to be cooperative by presenting his/her face in a proper way (for example, in a frontal pose with neutral expression and eyes open) in order to be granted the access or privilege.

In the noncooperative case, which is typical in surveillance applications, the user is unaware of being identified. In terms of distance between the face and the camera, near field face recognition (less than 1 m) for cooperative applications (e.g., access control) is the least difficult problem, whereas far field noncooperative applications (e.g., watchlist identification) in surveillance video are the most challenging.

Applications in between these two categories can also be foreseen. For example, in face-based access control at a distance, the user is willing to cooperate but is unable to present the face in a favorable condition with respect to the camera. This may present challenges to the system, even though such cases are still easier than identifying a subject who is not cooperative. In almost all of these cases, however, ambient illumination is the foremost challenge.

Processing Workflow

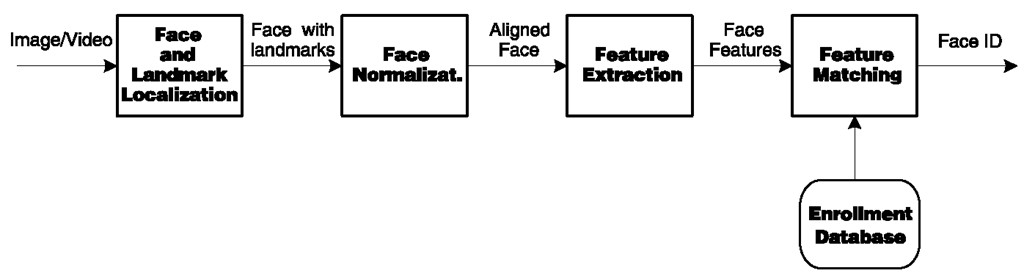

Face recognition is a visual pattern recognition problem, where the face, represented as a three-dimensional object that is subject to varying illumination, pose, expression, and other factors, needs to be identified based on acquired images. While two-dimensional face images are commonly used in most applications, certain applications requiring higher levels of security demand the use of three-dimensional (depth or range) images or optical images beyond the visual spectrum. A face recognition system generally consists of four modules as depicted in Fig. 1.2: face localization, normalization, feature extraction, and matching. These modules are explained below.

Fig. 1.2 Depiction of face recognition processing flow

Face detection segments the face area from the background. In the case of video, the detected faces may need to be tracked across multiple frames using a face tracking component. While face detection provides a coarse estimate of the location and scale of the face, face landmarking localizes facial landmarks (e.g., eyes, nose, mouth, and facial outline). This may be accomplished by a landmarking module or face alignment module.

Face normalization is performed to normalize the face geometrically and photometrically. This is necessary because state-of-the-art recognition methods are expected to recognize face images with varying pose and illumination. The geometrical normalization process transforms the face into a standard frame by face cropping. Warping or morphing may be used for more elaborate geometric normalization. The photometric normalization process normalizes the face based on properties such as illumination and gray scale.
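The two normalization steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the method of any particular system: it assumes the eye centers have already been located by a landmarking step, uses a similarity transform with nearest-neighbor sampling for the geometric part, and uses zero-mean, unit-variance scaling for the photometric part. All function names and target eye positions are hypothetical.

```python
import numpy as np

def eye_alignment_transform(left_eye, right_eye, target_left, target_right):
    """Similarity transform (rotation, scale, shift) mapping eyes to targets.

    Points are (x, y). Returns complex (a, b) such that z_out = a * z_in + b.
    """
    s1, s2 = complex(*left_eye), complex(*right_eye)
    d1, d2 = complex(*target_left), complex(*target_right)
    a = (d2 - d1) / (s2 - s1)
    b = d1 - a * s1
    return a, b

def warp_to_standard_frame(image, a, b, out_shape):
    """Geometric normalization by inverse mapping with nearest-neighbor sampling."""
    h, w = out_shape
    ys, xs = np.mgrid[0:h, 0:w]
    z_out = xs + 1j * ys
    z_in = (z_out - b) / a  # invert the transform for each output pixel
    src_x = np.clip(np.rint(z_in.real).astype(int), 0, image.shape[1] - 1)
    src_y = np.clip(np.rint(z_in.imag).astype(int), 0, image.shape[0] - 1)
    return image[src_y, src_x]

def photometric_normalize(face):
    """Zero-mean, unit-variance gray levels to reduce global lighting effects."""
    face = face.astype(np.float64)
    std = face.std()
    return (face - face.mean()) / (std if std > 0 else 1.0)
```

A typical call chain would be `a, b = eye_alignment_transform(...)`, then `warp_to_standard_frame(image, a, b, (64, 64))`, then `photometric_normalize(...)` on the cropped result. More elaborate warping or histogram-based photometric corrections could replace either step.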

Face feature extraction is performed on the normalized face to extract salient information that is useful for distinguishing faces of different persons and is robust with respect to the geometric and photometric variations. The extracted face features are used for face matching.

In face matching, the extracted features from the input face are matched against one or many of the enrolled faces in the database. The matcher outputs ‘yes’ or ‘no’ for 1:1 verification; for 1:N identification, the output is the identity of the input face when the top match is found with sufficient confidence, or unknown when the top match score is below a threshold. The main challenge in this stage of face recognition is to find a suitable similarity metric for comparing facial features.
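The matcher outputs described above, together with the watchlist mode from the Categorization section, can be sketched as below. The sketch assumes faces have already been reduced to feature vectors and uses cosine similarity as the score; the metric, the threshold values, the function names, and the toy gallery are illustrative assumptions only.

```python
import numpy as np

def cosine_similarity(a, b):
    """Similarity score in [-1, 1]; higher means more alike."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def verify(query, enrolled, threshold):
    """1:1 verification: accept ('yes') or reject ('no') the claimed identity."""
    return cosine_similarity(query, enrolled) >= threshold

def identify(query, gallery, threshold):
    """1:N identification: best-matching identity, or None for 'unknown'."""
    scores = {name: cosine_similarity(query, feat) for name, feat in gallery.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] >= threshold else None

def watchlist(query, gallery, threshold):
    """Report every enrolled identity whose score clears the threshold."""
    scores = {name: cosine_similarity(query, feat) for name, feat in gallery.items()}
    hits = [(name, s) for name, s in scores.items() if s >= threshold]
    return sorted(hits, key=lambda item: item[1], reverse=True)

# Toy gallery of hypothetical 3-D feature vectors.
gallery = {
    "alice": np.array([1.0, 0.0, 0.0]),
    "bob": np.array([0.0, 1.0, 0.0]),
    "carol": np.array([0.9, 0.1, 0.0]),
}
query = np.array([1.0, 0.02, 0.0])
```

Here `identify` returns a single identity or `None`, whereas `watchlist` may return several candidates. In practice the threshold would be tuned on held-out data to trade false accepts against false rejects.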

The accuracy of face recognition systems highly depends on the features that are extracted to represent the face which, in turn, depend on correct face localization and normalization. While face recognition still remains a challenging pattern recognition problem, it may be analyzed from the viewpoint of face subspaces or manifolds, as follows.

Face Subspace

Although face recognition technology has significantly improved and can now be successfully performed in “real-time” for images and videos captured under favorable (constrained) situations, face recognition is still a difficult endeavor, especially for unconstrained tasks where viewpoint, illumination, expression, occlusion, and facial accessories can vary considerably. This can be illustrated from a face subspace or manifold viewpoint.

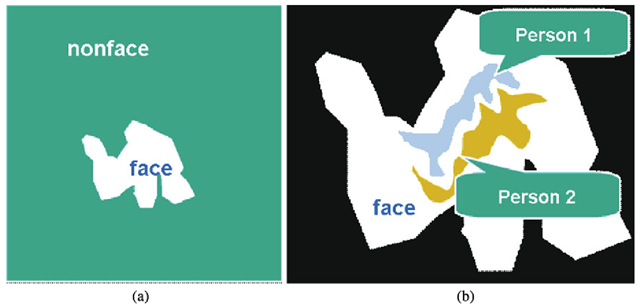

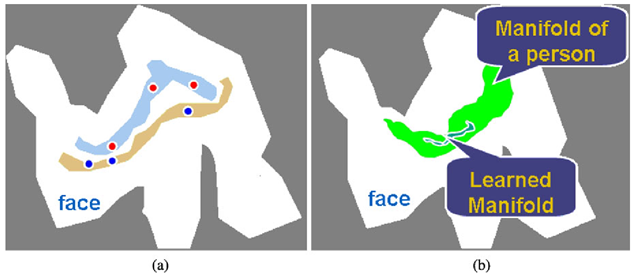

Fig. 1.3 Face subspace or manifolds. (a) Face versus nonface manifolds. (b) Face manifolds of different individuals

Subspace analysis techniques for face recognition are based on the fact that a class of patterns of interest, such as the face, resides in a subspace of the input image space. For example, a 64 × 64 8-bit image with 4096 pixels can express a large number of pattern classes, such as trees, houses, and faces. However, among the $256^{4096} > 10^{9864}$ possible “configurations,” only a tiny fraction correspond to faces. Therefore, the pixel-based image representation is highly redundant, and the dimensionality of this representation could be greatly reduced when only the face patterns are of interest.
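The size of this configuration space can be checked with a few lines of arithmetic. The snippet below is only a sanity check of the count for a 64 × 64 8-bit image; the variable names are illustrative.

```python
import math

pixels = 64 * 64          # 4096 pixels
levels = 2 ** 8           # 256 gray levels per 8-bit pixel

# Exact integer arithmetic: Python integers have arbitrary precision.
configurations = levels ** pixels
exceeds_bound = configurations > 10 ** 9864

# Base-10 exponent of the count: 4096 * log10(256), about 9864.15.
log10_count = pixels * math.log10(levels)
```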

The eigenface or PCA method [19, 42] derives a small number (typically 40 or fewer) of principal components or eigenfaces from a set of training face images. Given the eigenfaces as a basis for a face subspace, a face image is compactly represented by a low-dimensional feature vector, and a face can be reconstructed as a linear combination of the eigenfaces. The use of subspace modeling techniques has significantly advanced face recognition technology.
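A minimal sketch of this idea follows, assuming the training faces are already normalized and flattened into row vectors. It computes the principal components with a singular value decomposition rather than an explicit covariance matrix; the random training data is a synthetic stand-in, and the function names are hypothetical.

```python
import numpy as np

def fit_eigenfaces(train_images, num_components):
    """Return the mean face and the top principal components (eigenfaces).

    train_images: array of shape (n_images, n_pixels), one flattened face per row.
    """
    mean_face = train_images.mean(axis=0)
    centered = train_images - mean_face
    # Rows of vt are orthonormal directions of decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:num_components]

def project(face, mean_face, eigenfaces):
    """Low-dimensional feature vector: coordinates of the face in the subspace."""
    return eigenfaces @ (face - mean_face)

def reconstruct(weights, mean_face, eigenfaces):
    """Approximate face as the mean plus a linear combination of eigenfaces."""
    return mean_face + weights @ eigenfaces

rng = np.random.default_rng(0)
train = rng.random((50, 64 * 64))      # synthetic stand-in for 50 face images
mean_face, eigenfaces = fit_eigenfaces(train, num_components=40)
weights = project(train[0], mean_face, eigenfaces)
approx = reconstruct(weights, mean_face, eigenfaces)
```

With 40 components, each 4096-pixel image is summarized by 40 numbers. The reconstruction `approx` is the closest point to the original within the subspace spanned by those eigenfaces.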

The manifold or distribution of all the faces accounts for variations in facial appearance whereas the nonface manifold accounts for all objects other than the faces. If we examine these manifolds in the image space, we find them highly nonlinear and nonconvex [5, 41]. Figure 1.3(a) illustrates face versus nonface manifolds and Fig. 1.3(b) illustrates the manifolds of two individuals in the entire face manifold. Face detection can be considered as a task of distinguishing between the face and nonface manifolds in the image (subwindow) space and face recognition can be considered as a task of distinguishing between faces of different individuals in the face manifold.

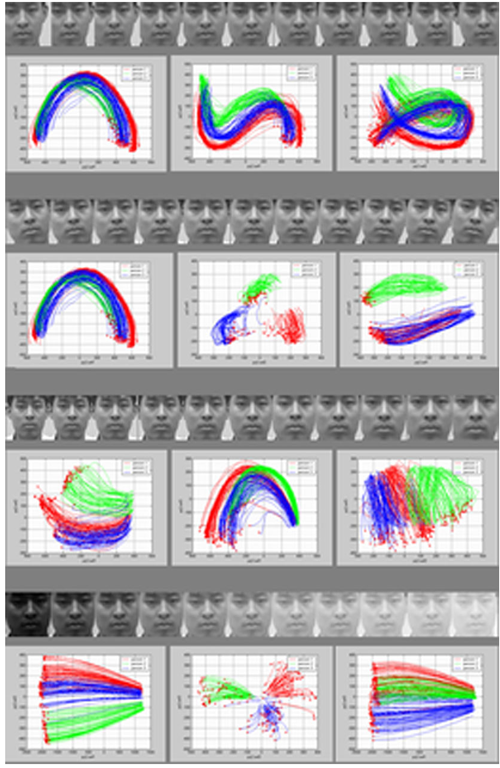

Figure 1.4 further demonstrates the nonlinearity and nonconvexity of face manifolds in a PCA subspace spanned by the first three principal components, where the plots are drawn from real face image data. Each plot depicts the manifolds of three individuals (in three colors). The data consists of 64 frontal face images for each individual.

Fig. 1.4 Nonlinearity and nonconvexity of face manifolds under (from top to bottom) translation, rotation, scaling, and Gamma transformations

A transform (horizontal translation, in-plane rotation, size scaling, and gamma transform for the 4 groups, respectively) is performed on each face image with 11 gradually varying parameters, producing 11 transformed face images; each transformed image is cropped to contain only the face region; the 11 cropped face images form a sequence. A curve in this figure represents such a sequence in the PCA space, and so there are 64 curves for each individual. The three-dimensional (3D) PCA space is projected on three different 2D spaces (planes). We can observe the nonlinearity of the trajectories.
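The construction of one such trajectory can be sketched for the gamma case. This is a simplified illustration, not the procedure used to produce Fig. 1.4: the image is random synthetic data, the gamma range is an assumption, and the PCA basis is computed from the 11-image sequence itself rather than from a face data set.

```python
import numpy as np

rng = np.random.default_rng(0)
face = rng.random((64, 64))            # synthetic stand-in with values in [0, 1)
gammas = np.linspace(0.5, 2.0, 11)     # 11 gradually varying parameters (assumed range)

# One transformed image per parameter value, flattened to a row vector.
sequence = np.stack([(face ** g).ravel() for g in gammas])

# PCA of the sequence via SVD; keep the first three principal components.
centered = sequence - sequence.mean(axis=0)
_, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
trajectory = centered @ vt[:3].T       # 11 points in a 3-D PCA space

# A straight-line trajectory would put all variance on the first component.
variance_share = singular_values ** 2 / np.sum(singular_values ** 2)
```

A nonzero share of variance on the second and third components indicates that the sequence bends away from a straight line, which is the kind of nonlinearity the curves in the figure display.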

The following observations can be drawn based on Fig. 1.4. First, while this example is demonstrated in the PCA space, more complex (nonlinear and nonconvex) trajectories are expected in the original image space. Second, although these face images have been subjected to geometric transformations in the 2D plane and pointwise lighting (gamma) changes, more significant complexity of trajectories is expected for geometric transformations in 3D space (for example, out-of-plane head rotations) and ambient lights.

Technology Challenges

As shown in Fig. 1.3, the problem of face detection is highly nonlinear and nonconvex, even more so for face matching. Face recognition evaluation reports, for example Face Recognition Technology (FERET) [34], Face Recognition Vendor Test (FRVT) [31] and other independent studies, indicate that the performance of many state-of-the-art face recognition methods deteriorates with changes in lighting, pose, and other factors [8, 43, 50]. The key technical challenges in automatic face recognition are summarized below.

Large Variability in Facial Appearance. Whereas shape and reflectance are intrinsic properties of a face, the appearance (i.e., the texture) of a face is also influenced by several other factors, including the facial pose (or, equivalently, camera viewpoint), illumination, and facial expression. Figure 1.5 shows an example of large intra-subject variations caused by these factors. Aging is also an important factor that leads to an increase in the intra-subject variations, especially in applications requiring duplication of government-issued photo ID documents (e.g., driver licenses and passports). In addition to these, various imaging parameters, such as aperture, exposure time, lens aberrations, and sensor spectral response, also increase intra-subject variations. Face-based person identification is further complicated by possible small inter-subject variations (Fig. 1.6). All these factors are confounded in the image data, so “the variations between the images of the same face due to illumination and viewing direction are almost always larger than the image variation due to change in face identity” [30]. This variability makes it difficult to extract the intrinsic information about the face identity from a facial image.

Complex Nonlinear Manifolds. As illustrated above, the entire face manifold is highly nonconvex, and so is the face manifold of any individual under various changes.

Fig. 1.5 Intra-subject variations in pose, illumination, expression, occlusion, accessories (e.g., glasses), color, and brightness.

Fig. 1.6 Similarity of frontal faces between (a) twins and (b) a father and his son.

Linear methods such as PCA [19, 42], independent component analysis (ICA) [2], and linear discriminant analysis (LDA) [3] project the data linearly from a high-dimensional space (for example, the image space) to a low-dimensional subspace. As such, they are unable to preserve the nonconvex variations of face manifolds necessary to differentiate among individuals. In a linear subspace, Euclidean distance and, more generally, the Mahalanobis distance do not perform well for discriminating between face and nonface manifolds and between manifolds of different individuals (Fig. 1.7(a)). This limits the power of the linear methods to achieve highly accurate face detection and recognition in many practical scenarios.
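The failure of Euclidean distance can be reproduced with a toy example. The 2-D feature values below are invented purely for illustration: the first axis stands for a nuisance factor such as illumination and the second for identity, so they are not measurements from any face data set.

```python
import numpy as np

# Hypothetical 2-D features: the first axis stands for a nuisance factor such as
# illumination, the second axis carries identity.
person_a = np.array([[0.0, 0.0], [4.0, 0.2], [8.0, 0.1]])
person_b = np.array([[0.5, 1.0], [4.5, 1.1], [8.5, 1.2]])

def euclidean(u, v):
    return float(np.linalg.norm(u - v))

intra = euclidean(person_a[0], person_a[2])   # same person, different lighting
inter = euclidean(person_a[0], person_b[0])   # different people, similar lighting

def nearest_neighbor_label(query, gallery):
    """Label of the gallery sample closest to the query in Euclidean distance."""
    return min(gallery, key=lambda item: euclidean(query, item[1]))[0]

# Person A is enrolled under one lighting condition, person B under another.
gallery = [("a", person_a[0]), ("b", person_b[1])]
predicted = nearest_neighbor_label(person_a[1], gallery)
```

In this toy setting the intra-person distance exceeds the inter-person distance, and the nearest-neighbor rule assigns the query from person A to person B because the enrolled image of B happens to share the query's lighting.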

High Dimensionality and Small Sample Size. Another challenge in face recognition is the generalization ability, which is illustrated in Fig. 1.7(b). The figure depicts a canonical face image of size 112 × 92, which resides in a 10,304-dimensional feature space. The number of example face images per person (typically fewer than 10, and sometimes just one) available for learning the manifold is usually much smaller than the dimensionality of the image space; a system trained on a small number of examples may not generalize well to unseen instances of the face.
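The mismatch between sample count and dimensionality can be made concrete with a short sketch. It uses random data as a stand-in for face images and assumes nine samples for one person, within the range cited above. The point is only that the training samples span very few of the available directions.

```python
import numpy as np

height, width = 112, 92
dimension = height * width              # 10,304 pixel dimensions
samples_per_person = 9                  # assumed value, within "fewer than 10"

rng = np.random.default_rng(0)
faces = rng.random((samples_per_person, dimension))  # synthetic stand-in data

# After centering, n samples span at most n - 1 directions, so the sample
# covariance (dimension x dimension) is severely rank deficient.
centered = faces - faces.mean(axis=0)
rank = np.linalg.matrix_rank(centered)
unconstrained = dimension - rank        # directions with no training evidence
```

With nine samples the centered data has rank 8, which leaves 10,296 directions of the image space unconstrained by the training data. This is one way to see why a model fitted to so few examples may generalize poorly.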

Fig. 1.7 Challenges in face recognition from subspace viewpoint. (a) Euclidean distance is unable to differentiate between individuals. When using Euclidean distance, an inter-person distance can be smaller than an intra-person distance. (b) The learned manifold or classifier is unable to characterize (i.e., generalize) unseen images of the same face