Reliability of Ground Truth

When training a system to recognize facial expression, the investigator assumes that training and test data are accurately labeled. This assumption does not always hold: asking subjects to perform a given action is no guarantee that they will. To ensure internal validity, expression data must be manually coded, and the reliability of the coding verified. Interobserver reliability can be improved by providing rigorous training to observers and monitoring their performance. FACS coders must pass a standardized test, which ensures (initially) uniform coding among international laboratories. Monitoring is best achieved by having observers independently code a portion of the same data. As a general rule, 15% to 20% of data should be comparison-coded. To guard against drift in coding criteria [62], restandardization is important. When assessing reliability, coefficient kappa [36] is preferable to raw percentage of agreement, which may be inflated by the marginal frequencies of codes. Kappa quantifies interobserver agreement after correcting for the level of agreement expected by chance.
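The following minimal Python sketch shows the chance correction. It assumes the common two-coder form, usually called Cohen's kappa: κ = (p_o - p_e) / (1 - p_e). Here p_o is the observed proportion of agreement and p_e is the agreement expected from each coder's marginal code frequencies. The function name and the toy AU labels are illustrative and are not drawn from [36].

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders on the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty item list")
    n = len(coder_a)
    # Observed agreement: fraction of items given the same code.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected chance agreement from each coder's marginal code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        # Both coders used one identical code everywhere; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1.0 - p_e)


# Hypothetical frame-by-frame codes for AU 12 from two coders.
coder_1 = ["none"] * 9 + ["AU12"]
coder_2 = ["none"] * 8 + ["AU12", "none"]
print(round(cohens_kappa(coder_1, coder_2), 3))  # -> -0.111
```

In this example the coders agree on 80% of frames. Both code "none" almost everywhere, however, so nearly all of that agreement is expected by chance, and kappa falls slightly below zero. Raw percentage agreement hides exactly this inflation by marginal frequencies.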

Databases

Because most investigators have used relatively limited data sets, the generalizability of different approaches to facial expression analysis remains unknown. In most data sets, only relatively global facial expressions (e.g., joy or anger) have been considered, subjects have been few in number and homogeneous with respect to age and ethnic background, and recording conditions have been optimized. Approaches to facial expression analysis that have been developed in this way may transfer poorly to applications in which expressions, subjects, contexts, or image properties are more variable. In the absence of comparative tests on common data, the relative strengths and weaknesses of different approaches are difficult to determine. In the areas of face and speech recognition, comparative tests have proven valuable [76], and similar benefits would likely accrue in the study of facial expression analysis. A large, representative test-bed is needed with which to evaluate different approaches. We list several databases for facial expression analysis in Sect. 19.4.5.

Relation to Other Facial Behavior or Nonfacial Behavior

Facial expression is one of several channels of nonverbal communication. Contraction of the zygomaticus major muscle (AU 12), for instance, is often associated with positive or happy vocalizations, and smiling tends to increase vocal fundamental frequency [16]. Facial expressions also often occur during conversation, and both expression and speech can cause facial changes. Few research groups, however, have attempted to integrate gesture recognition, broadly defined, across multiple channels of communication [44, 45]. An important question is whether there are advantages to early rather than late integration [38]. Databases containing multimodal expressive behavior afford the opportunity for integrated approaches to analysis of facial expression, prosody, gesture, and kinetic expression.
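A few lines of Python can show the difference between early and late integration. The sketch below is an illustrative assumption and is not the method of [38]. In early integration, per-channel feature vectors are concatenated and passed to a single classifier. In late integration, each channel has its own classifier, and their class probabilities are merged afterward, here with a weighted average. Product rules and learned second-stage classifiers are equally common merging choices.

```python
import numpy as np


def early_fusion(face_feats, voice_feats):
    """Early integration: one joint feature vector for a single classifier."""
    return np.concatenate([face_feats, voice_feats], axis=-1)


def late_fusion(face_probs, voice_probs, face_weight=0.5):
    """Late integration: merge per-channel class probabilities.

    A weighted average is used here; `face_weight` is a hypothetical tuning
    parameter, not a value taken from the literature.
    """
    fused = face_weight * face_probs + (1.0 - face_weight) * voice_probs
    return fused / fused.sum(axis=-1, keepdims=True)


# Hypothetical posteriors over (happy, angry, neutral) from each channel.
face = np.array([0.7, 0.2, 0.1])
voice = np.array([0.3, 0.6, 0.1])
print(late_fusion(face, voice))  # -> [0.5 0.4 0.1]
```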

Summary and Ideal Facial Expression Analysis Systems

The problem space for facial expression includes multiple dimensions. An ideal facial expression analysis system must address all of these dimensions and output accurate recognition results. In addition, it must perform automatically and in real time for all stages (Fig. 19.1). So far, several systems can recognize expressions in real time [53, 68, 97]. We summarize the properties of an ideal facial expression analysis system in Table 19.5.

Recent Advances

For automatic facial expression analysis, Suwa et al. [90] presented an early attempt in 1978 to analyze facial expressions by tracking the motion of 20 identified spots on an image sequence. Since 1990, considerable progress has been made in related technologies, such as image analysis and pattern recognition, that make AFEA possible. Samal and Iyengar [81] surveyed the early work (before 1990) on automatic recognition and analysis of the human face and facial expression. Two survey papers summarized work on facial expression analysis published before 1999 [35, 69].

| Category | ID | Property |
|---|---|---|
| Robustness | Rb1 | Deal with subjects of different age, gender, ethnicity |
| | Rb2 | Handle lighting changes |
| | Rb3 | Handle large head motion |
| | Rb4 | Handle occlusion |
| | Rb5 | Handle different image resolution |
| | Rb6 | Recognize all possible expressions |
| | Rb7 | Recognize expressions with different intensity |
| | Rb8 | Recognize asymmetrical expressions |
| | Rb9 | Recognize spontaneous expressions |
| Automatic process | Am1 | Automatic face acquisition |
| | Am2 | Automatic facial feature extraction |
| | Am3 | Automatic expression recognition |
| Real-time process | Rt1 | Real-time face acquisition |
| | Rt2 | Real-time facial feature extraction |
| | Rt3 | Real-time expression recognition |
| Autonomic process | An1 | Output recognition with confidence |
| | An2 | Adaptive to different level outputs based on input images |

Table 19.5 Properties of an ideal facial expression analysis system

Recently, Zeng et al. [114] surveyed work (before 2007) on affect recognition methods, including audio, visual, and spontaneous expressions. In this section, instead of giving a comprehensive survey of the facial expression analysis literature, we explore recent advances in facial expression analysis based on four problems: (1) face acquisition, (2) facial feature extraction and representation, (3) facial expression recognition, and (4) multimodal expression analysis. In addition, we list the publicly available databases for expression analysis.

Many efforts have been made in facial expression analysis [4, 5, 8, 13, 15, 18, 20, 23, 32, 34, 35, 37, 45, 58-60, 67, 69, 70, 87, 95, 96, 102, 104, 107, 110-115, 117]. Because most of this work is summarized in the survey papers [35, 69, 114], here we focus on recent research in automatic facial expression analysis, which tends to follow these directions:

• Build more robust systems for face acquisition, facial data extraction and representation, and facial expression recognition to handle head motion (in-plane and out-of-plane), occlusion, lighting changes, and low-intensity expressions

• Employ more facial features to recognize more expressions and to achieve a higher recognition rate

• Recognize facial action units and their combinations rather than emotion-specified expressions

• Recognize action units as they occur spontaneously

• Develop fully automatic and real-time AFEA systems

• Analyze emotion portrayals by combining multimodal features such as facial expression, vocal expression, gestures, and body movements

Face Acquisition

With few exceptions, most AFEA research attempts to recognize facial expressions only from frontal-view or near frontal-view faces [51, 70]. Kleck and Mendolia [51] first studied the decoding of profile versus full-face expressions of affect by using three perspectives (a frontal face, a 90° right profile, and a 90° left profile). Forty-eight decoders viewed the expressions of 64 subjects in one of the three facial perspectives. They found that frontal faces elicited higher intensity ratings than profile views for negative expressions; the opposite was found for positive expressions. Pantic and Rothkrantz [70] used dual-view facial images (a full face and a 90° right profile) acquired by two cameras mounted on the user’s head. They did not compare recognition results obtained from the frontal view alone with those obtained from the profile alone. So far, it is unclear how many expressions can be recognized from side-view or profile faces. Because a frontal-view face is not always available in real environments, face acquisition methods should detect both frontal and nonfrontal view faces in an arbitrary scene.

To handle out-of-plane head motion, the face can be obtained by face detection, 2D or 3D face tracking, or head pose detection. Nonfrontal view faces are then warped or normalized to a frontal view for expression analysis.

Face Detection

Many face detection methods have been developed to detect faces in an arbitrary scene [47, 55, 72, 78, 86, 89, 101]. Most of them can detect only frontal and near-frontal views of faces. Heisele et al. [47] developed a component-based, trainable system for detecting frontal and near-frontal views of faces in still grayscale images. Rowley et al. [78] developed a neural-network-based system to detect frontal-view faces. Viola and Jones [101] developed a robust real-time face detector based on a set of rectangle features.
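The rectangle features of Viola and Jones [101] are cheap to evaluate because the sum over any rectangle can be read from an integral image (summed-area table) with four lookups. The NumPy sketch below covers only that part, with one horizontal two-rectangle feature. The boosted feature selection and the detection cascade of the full detector are omitted, and the helper names are hypothetical.

```python
import numpy as np


def integral_image(img):
    """Summed-area table with a zero first row/column: ii[y, x] = img[:y, :x].sum()."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = np.cumsum(np.cumsum(img, axis=0), axis=1)
    return ii


def rect_sum(ii, y, x, h, w):
    """Sum of the h-by-w rectangle with top-left pixel (y, x), via four lookups."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]


def two_rect_feature(ii, y, x, h, w):
    """Horizontal two-rectangle feature: left half minus right half (w even)."""
    half = w // 2
    return rect_sum(ii, y, x, h, half) - rect_sum(ii, y, x + half, h, half)


img = np.arange(16).reshape(4, 4)
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 2, 2))  # -> 30.0
```

Once the table is built, every feature costs a constant number of lookups, whatever its size. This makes it practical to evaluate thousands of features per window.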

To handle out-of-plane head motion, some researchers developed face detectors that detect faces from different views [55, 72, 86]. Pentland et al. [72] detected faces by using a view-based and modular eigenspace method. Their method runs in real time and can handle varying head positions. Schneiderman and Kanade [86] proposed a statistical method for 3D object detection that can reliably detect human faces with out-of-plane rotation. They represent the statistics of both object appearance and nonobject appearance using a product of histograms. Each histogram represents the joint statistics of a subset of wavelet coefficients and their position on the object. Li et al. [55] developed an AdaBoost-like approach to detect faces in multiple views. A detailed survey of face detection can be found in [109].
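The product-of-histograms idea in [86] can be illustrated as a likelihood-ratio test. The Python sketch below makes several simplifying assumptions. It uses a single histogram over (pattern, position) pairs, whereas [86] multiplies several such histograms, one per wavelet-coefficient subset. It also assumes that image windows have already been quantized into discrete pattern codes, a step not shown here. The class, the Laplace smoothing, and the zero threshold are illustrative choices.

```python
import math
from collections import Counter


class ProductOfHistograms:
    """Likelihood-ratio detector: P(window | class) is approximated by a
    product of histogram probabilities of its (pattern, position) pairs."""

    def __init__(self, n_patterns, n_positions, alpha=1.0):
        self.n_bins = n_patterns * n_positions  # distinct (pattern, position) pairs
        self.alpha = alpha                       # Laplace smoothing pseudo-count
        self.counts = {True: Counter(), False: Counter()}
        self.totals = {True: 0, False: 0}

    def fit(self, windows, labels):
        """Each window is a list of (pattern_code, position) pairs."""
        for pairs, label in zip(windows, labels):
            self.counts[label].update(pairs)
            self.totals[label] += len(pairs)

    def _log_prob(self, pair, label):
        num = self.counts[label][pair] + self.alpha
        den = self.totals[label] + self.alpha * self.n_bins
        return math.log(num / den)

    def log_likelihood_ratio(self, pairs):
        # The product over histogram entries becomes a sum of log ratios.
        return sum(self._log_prob(p, True) - self._log_prob(p, False) for p in pairs)

    def is_face(self, pairs, threshold=0.0):
        return self.log_likelihood_ratio(pairs) > threshold


# Toy training data: hypothetical pattern codes 0/1 at positions 0/1.
faces = [[(0, 0), (1, 1)]] * 3
nonfaces = [[(1, 0), (0, 1)]] * 3
det = ProductOfHistograms(n_patterns=2, n_positions=2)
det.fit(faces + nonfaces, [True] * 3 + [False] * 3)
print(det.is_face([(0, 0), (1, 1)]))  # -> True
```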

Some facial expression analysis systems use the face detector developed by Viola and Jones [101] to detect the face in each frame [37]. Other systems [18, 67, 94-96, 104] assume that the first frame of the sequence is frontal and expressionless. They detect the face only in the first frame and then perform feature tracking or head tracking for the remaining frames of the sequence.

Head Pose Estimation

In real environments, out-of-plane head motion is common during facial expression analysis. To handle it, head pose estimation can be employed. Methods for estimating head pose can be classified as 3D model-based methods [1, 91, 98, 104] and 2D image-based methods [9, 97, 103, 118].

3D Model-Based Method

Many systems employ a 3D model-based method to estimate head pose [4, 5, 15, 18, 20, 67, 102, 104]. Bartlett et al. [4, 5] used a canonical wire-mesh face model to estimate face geometry and 3D pose from hand-labeled feature points. In [15, 102], the authors used an explicit 3D wireframe face model to track geometric facial features defined on the model [91]. The 3D model is fitted to the first frame of the sequence by manually selecting landmark facial features, such as the corners of the eyes and mouth. The generic face model, which consists of 16 surface patches, is warped to fit the selected facial features. A two-step process is used to estimate the head motion and the deformations of facial features. First, the 2D image motion is tracked using template matching between frames at different resolutions. Then, from the 2D motions of many points on the face model, the 3D head motion is estimated by solving an overdetermined system of equations of the projective motions in the least-squares sense [15].
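Recovering 3D motion from many 2D point motions reduces to an overdetermined linear system solved in the least-squares sense. The sketch below does not use the projective formulation of [15]. To stay short, it assumes orthographic projection and a small rigid motion. Under those assumptions, each tracked model point contributes two linear equations in the rotation (wx, wy, wz) and the image-plane translation (tx, ty). Function and variable names are hypothetical.

```python
import numpy as np


def estimate_small_motion(points_3d, flow_2d):
    """Least-squares head motion from 2D displacements of model points.

    Assumes orthographic projection and a small rigid motion, so a model
    point P = (X, Y, Z) moves by omega x P + t and its image displacement is
        du = wy*Z - wz*Y + tx,   dv = wz*X - wx*Z + ty.
    Returns (wx, wy, wz, tx, ty); depth translation is unobservable here.
    """
    X, Y, Z = points_3d[:, 0], points_3d[:, 1], points_3d[:, 2]
    n = len(points_3d)
    zeros, ones = np.zeros(n), np.ones(n)
    # Two equations per point: one block of rows for du, one for dv.
    A_u = np.column_stack([zeros, Z, -Y, ones, zeros])
    A_v = np.column_stack([-Z, zeros, X, zeros, ones])
    A = np.vstack([A_u, A_v])
    b = np.concatenate([flow_2d[:, 0], flow_2d[:, 1]])
    params, *_ = np.linalg.lstsq(A, b, rcond=None)
    return params
```

With N tracked points the system has 2N equations and five unknowns. A few dozen points therefore make it well overdetermined, which averages out small tracking errors.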

In [104], a cylindrical head model is used to automatically estimate the 6 degrees of freedom (dof) of head motion in real time. An active appearance model (AAM) method automatically maps the cylindrical head model to the face region, which is found by face detection [78], to serve as the initial appearance template. For any given frame, the template is the head image from the previous frame projected onto the cylindrical model. The template is then registered with the head appearance in the given frame to recover the full motion of the head. Two techniques make this registration robust. First, the iteratively reweighted least squares technique [6] is used to deal with nonrigid motion and occlusion. Second, the template is updated dynamically to deal with gradual changes in lighting and self-occlusion. This enables the system to work well even when most of the face is occluded.
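Iteratively reweighted least squares repeatedly solves a weighted least-squares problem. At each pass it lowers the weight of samples with large residuals, so occluded or nonrigidly moving pixels have little influence on the estimate. The Python sketch below applies Huber weights to a generic linear system A x ≈ b. The weight function, `delta`, and the iteration count are illustrative assumptions and are not taken from [6] or [104]. In those works the unknowns are head-motion parameters and the residuals are image intensity differences.

```python
import numpy as np


def irls(A, b, delta=1.0, n_iter=20):
    """Iteratively reweighted least squares with Huber weights.

    Residuals larger than `delta` (e.g., pixels on occluders or deforming
    regions) are down-weighted, so they pull the solution less.
    """
    x, *_ = np.linalg.lstsq(A, b, rcond=None)  # ordinary least-squares start
    for _ in range(n_iter):
        r = np.abs(A @ x - b)
        w = np.where(r <= delta, 1.0, delta / np.maximum(r, 1e-12))
        sw = np.sqrt(w)
        # Weighted LS: minimize sum_i w_i * (a_i . x - b_i)^2.
        x, *_ = np.linalg.lstsq(A * sw[:, None], b * sw, rcond=None)
    return x


# Line y = 2x + 1 with three gross outliers standing in for occluded pixels.
xs = np.linspace(0, 10, 50)
A = np.column_stack([xs, np.ones_like(xs)])
b = 2 * xs + 1
b[[5, 20, 40]] += 50
print(irls(A, b))  # close to [2, 1]; plain least squares is pulled far off
```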

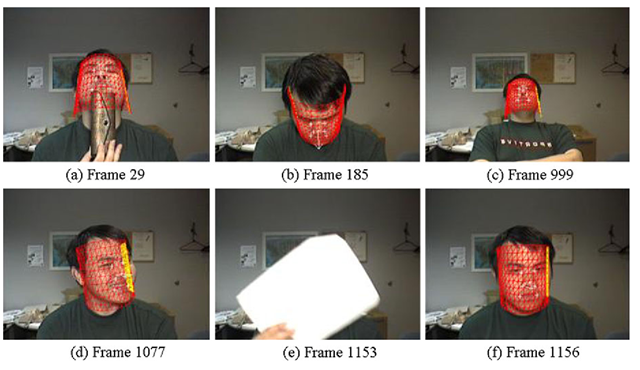

Fig. 19.3 Example of 3D head tracking, including re-registration after losing the head

Head poses are recovered using templates that are constantly updated, and the pose estimated for the current frame is used to estimate the pose in the next frame. Errors would therefore accumulate unless prevented. To solve this problem, the system automatically selects and stores one or more frames and their associated head poses from the tracked images in the sequence (usually including the initial frame and pose) as references. Whenever the difference between the estimated head pose and that of a reference frame is less than a preset threshold, the system rectifies the current pose estimate by re-registering this frame with the reference. Re-registration prevents errors from accumulating and enables the system to recover the head pose when the head reappears after occlusion, such as when the head moves momentarily out of the camera’s view. On-line tests suggest that the system could work robustly for an indefinite period of time. It was also quantitatively evaluated on image sequences that include maximum pitch and yaw as large as 40 and 75 degrees, respectively. The precision of the recovered motion was evaluated against ground truth obtained by a precise position and orientation measurement device with markers attached to the head, and was found to be highly consistent (e.g., for a maximum yaw of 75 degrees, the absolute error averaged 3.86 degrees). An example of the 3D head tracking, including re-registration after losing the head, is shown in Fig. 19.3. More details can be found in [104].
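The re-registration rule above can be written as a small decision function. This Python sketch makes several simplifications:

- The pose is reduced to three angles.
- The distance between poses is the largest per-angle difference.
- The 10-degree threshold is a hypothetical value.
- `register` stands for whatever image-to-reference registration the tracker uses. The caller passes it in; it is not implemented here.

```python
import numpy as np


def pose_distance(p, q):
    """Largest absolute angle difference (degrees) over (pitch, yaw, roll)."""
    return float(np.max(np.abs(np.asarray(p) - np.asarray(q))))


def maybe_reregister(frame, pose, references, register, threshold_deg=10.0):
    """Correct accumulated drift by re-registering against a stored reference.

    `references` is a list of (reference_frame, reference_pose) pairs, and
    `register(frame, ref_frame, ref_pose)` is a caller-supplied placeholder
    that returns a refined pose. Returns the possibly corrected pose.
    """
    if not references:
        return pose
    # Choose the stored reference whose pose is closest to the current estimate.
    ref_frame, ref_pose = min(references, key=lambda r: pose_distance(pose, r[1]))
    if pose_distance(pose, ref_pose) < threshold_deg:
        return register(frame, ref_frame, ref_pose)
    return pose  # no reference close enough; keep the tracked estimate
```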

2D Image-Based Method

To handle the full range of head motion for expression analysis, Tian et al. [97] detected the head instead of the face. The head detector segments the foreground object by background subtraction, smooths its silhouette, and computes the negative curvature minima (NCM) points of the silhouette. Other head detection techniques that use silhouettes can be found elsewhere [42, 46].
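Negative curvature minima can be located by estimating signed curvature along the closed silhouette contour and keeping the concave local minima. The NumPy sketch below assumes the contour has already been extracted as ordered points. It smooths the contour with a circular moving average and estimates curvature by finite differences. The smoothing width and the helper names are assumptions and are not the settings of [97].

```python
import numpy as np


def smooth_closed_contour(pts, k=5):
    """Circular moving average over k neighbors on each side of every point."""
    out = np.zeros_like(pts)
    for s in range(-k, k + 1):
        out += np.roll(pts, s, axis=0)
    return out / (2 * k + 1)


def negative_curvature_minima(pts, k=5):
    """Indices of concave (negative-curvature) local minima on a closed contour.

    `pts` is an (N, 2) array of (x, y) points ordered counterclockwise in a
    y-up frame, so convex parts have positive curvature and concavities
    (such as where a neck meets the shoulders) have negative curvature.
    If the points run clockwise in this frame, reverse their order first;
    flipping the y axis, as in image coordinates, also flips orientation.
    """
    p = smooth_closed_contour(np.asarray(pts, dtype=float), k)
    nxt, prv = np.roll(p, -1, axis=0), np.roll(p, 1, axis=0)
    d1 = (nxt - prv) / 2.0        # first derivative (central difference)
    d2 = nxt - 2.0 * p + prv      # second derivative
    cross = d1[:, 0] * d2[:, 1] - d1[:, 1] * d2[:, 0]
    speed3 = np.maximum(np.sum(d1 ** 2, axis=1) ** 1.5, 1e-12)
    curv = cross / speed3         # signed curvature at each contour point
    is_min = (curv <= np.roll(curv, 1)) & (curv <= np.roll(curv, -1))
    return np.flatnonzero((curv < 0) & is_min)
```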

Table 19.6 Definitions and examples of the three head pose classes: frontal or near frontal view, side view or profile, and others, such as back of the head or occluded faces. The expression analysis process is applied to only the frontal and near-frontal view faces [9, 97]

After the head is located, the head image is converted to gray-scale, histogram-equalized, and resized to the estimated resolution. A three-layer neural network (NN) is then employed to estimate the head pose. The input to the network is the processed head image. The outputs are the three head poses: (1) frontal or near frontal view, (2) side view or profile, and (3) others, such as the back of the head or an occluded face (Table 19.6). In the frontal or near frontal view, both eyes and both lip corners are visible. In the side view or profile, at least one eye or one corner of the mouth is self-occluded by the head. The expression analysis process is applied only to frontal and near-frontal view faces. The system performs well even with very low-resolution face images.
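The preprocessing chain that feeds the pose network (gray-scale conversion, histogram equalization, resizing) can be sketched with NumPy alone. The BT.601 luma weights, the nearest-neighbor resampling, and the 32 by 32 output size are assumptions for illustration. The text above says only that [97] resizes to an estimated resolution.

```python
import numpy as np


def to_gray(rgb):
    """Luma from an (H, W, 3) uint8 RGB image using ITU-R BT.601 weights."""
    gray = rgb[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])
    return np.clip(np.round(gray), 0, 255).astype(np.uint8)


def equalize_histogram(gray):
    """Classic histogram equalization of a uint8 image via its CDF."""
    hist = np.bincount(gray.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:  # constant image: nothing to stretch
        return gray.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    return np.clip(lut, 0, 255).astype(np.uint8)[gray]


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbor resize, an illustrative stand-in for any resampler."""
    rows = (np.arange(out_h) * img.shape[0] / out_h).astype(int)
    cols = (np.arange(out_w) * img.shape[1] / out_w).astype(int)
    return img[rows[:, None], cols]


def preprocess_head(rgb, size=(32, 32)):
    """Gray-scale, equalize, resize, and flatten to a [0, 1] input vector."""
    eq = equalize_histogram(to_gray(rgb))
    return resize_nearest(eq, *size).astype(np.float32).ravel() / 255.0
```

The flattened vector would be the input layer of the small pose classifier. Equalization reduces sensitivity to global lighting before the network sees the image.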

Facial Feature Extraction and Representation

After the face is obtained, the next step is to extract facial features. Two types of features can be extracted: geometric features and appearance features. Geometric features represent the shape and locations of facial components (including the mouth, eyes, brows, and nose). The facial components or facial feature points are extracted to form a feature vector that represents the face geometry. Appearance features represent changes in the appearance (skin texture) of the face, such as wrinkles and furrows. They can be extracted from either the whole face or specific regions of a face image.

To recognize facial expressions, an AFEA system can use geometric features only [15, 20, 70], appearance features only [5, 37, 59], or hybrid features (both geometric and appearance features) [23, 95, 96, 102]. Research shows that hybrid features can achieve better results for some expressions.

To remove the effects of variation in face scale, motion, lighting, and other factors, one can first align and normalize the face to a standard face (2D or 3D) manually or automatically [23, 37, 57, 102], and then obtain normalized feature measurements by using a reference image (neutral face) [95].
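One simple way to normalize geometric measurements against a neutral reference is to express each landmark relative to the eyes. The sketch below shows one such scheme, assumed for illustration rather than taken from [95]. It centers each shape on the eye midpoint and divides by that shape's own inter-ocular distance, which cancels face position and scale. It then returns the landmark displacements from the neutral face. In-plane rotation is not removed, and the eye landmark indices are hypothetical defaults.

```python
import numpy as np


def normalized_displacements(landmarks, neutral, left_eye=0, right_eye=1):
    """Landmark displacements from a neutral reference, position- and scale-free.

    `landmarks` and `neutral` are (N, 2) arrays of the same N points; the
    eye-center indices are hypothetical defaults.
    """
    landmarks = np.asarray(landmarks, dtype=float)
    neutral = np.asarray(neutral, dtype=float)

    def center_and_scale(shape):
        # Put the eye midpoint at the origin, then divide by this shape's own
        # inter-ocular distance so that face size cancels out.
        mid = (shape[left_eye] + shape[right_eye]) / 2.0
        iod = np.linalg.norm(shape[right_eye] - shape[left_eye])
        return (shape - mid) / iod

    return (center_and_scale(landmarks) - center_and_scale(neutral)).ravel()


# Eyes at (0, 0) and (2, 0), mouth at (1, -2); the mouth then drops 0.5 units.
neutral = np.array([[0.0, 0.0], [2.0, 0.0], [1.0, -2.0]])
moved = neutral.copy()
moved[2] = [1.0, -2.5]
print(normalized_displacements(moved, neutral))  # mouth y changes by -0.25
```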

Fig. 19.4 Facial feature extraction for expression analysis [95]. a Multistate models for geometric feature extraction. b Locations for calculating appearance features

![Definitions and examples of the three head pose classes: frontal or near frontal view, side view or profile, and others, such as back of the head or occluded faces. The expression analysis process is applied to only the frontal and near-frontal view faces [9, 97]](http://what-when-how.com/wp-content/uploads/2012/06/tmp7527317_thumb_thumb_thumb.png)

![Facial feature extraction for expression analysis [95]. a Multistate models for geometric feature extraction. b Locations for calculating appearance features](http://what-when-how.com/wp-content/uploads/2012/06/tmp7527318_thumb.png)