Introduction

Images of faces, represented as high-dimensional pixel arrays, often belong to a manifold of intrinsically low dimension. Face recognition, and computer vision research in general, has witnessed a growing interest in techniques that capitalize on this observation and apply algebraic and statistical tools for extraction and analysis of the underlying manifold. In this topic, we describe in roughly chronologic order techniques that identify, parameterize, and analyze linear and nonlinear subspaces, from the original Eigenfaces technique to the recently introduced Bayesian method for probabilistic similarity analysis. We also discuss comparative experimental evaluation of some of these techniques as well as practical issues related to the application of subspace methods for varying pose, illumination, and expression.

Face Space and Its Dimensionality

Computer analysis of face images deals with a visual signal (light reflected off the surface of a face) that is registered by a digital sensor as an array of pixel values. The pixels may encode color or only intensity. In this topic, we assume the latter case (i.e., gray-level imagery). After proper normalization and resizing to a fixed m-by-n size, the pixel array can be represented as a point (i.e., vector) in an mn-dimensional image space by simply writing its pixel values in a fixed (typically raster) order. A critical issue in the analysis of such multidimensional data is the dimensionality, the number of coordinates necessary to specify a data point. Below we discuss the factors affecting this number in the case of face images.

Image Space Versus Face Space

To specify an arbitrary image in the image space, one needs to specify every pixel value. Thus, the “nominal” dimensionality of the space, dictated by the pixel representation, is mn, a high number even for images of modest size. Recognition methods that operate on this representation suffer from a number of potential disadvantages, most of them rooted in the so-called curse of dimensionality.

• Handling high-dimensional examples, especially in the context of similarity- and matching-based recognition, is computationally expensive.

• For parametric methods, the number of parameters one needs to estimate typically grows exponentially with the dimensionality. Often this number is much higher than the number of images available for training, making the estimation task in the image space ill-posed.

• Similarly, for nonparametric methods, the sample complexity—the number of examples needed to represent the underlying distribution of the data efficiently— is prohibitively high.

However, much of the surface of a face is smooth and has regular texture. Therefore, per-pixel sampling is in fact unnecessarily dense: The value of a pixel is typically highly correlated with the values of the surrounding pixels. Moreover, the appearance of faces is highly constrained; for example, any frontal view of a face is roughly symmetrical, has eyes on the sides, nose in the middle, and so on. A vast proportion of the points in the image space does not represent physically possible faces. Thus, the natural constraints dictate that the face images are in fact confined to a subspace referred to as the face subspace.

Principal Manifold and Basis Functions

It is common to model the face subspace as a (possibly disconnected) principal manifold embedded in the high-dimensional image space. Its intrinsic dimensionality is determined by the number of degrees of freedom within the face subspace; the goal of subspace analysis is to determine this number and to extract the principal modes of the manifold. The principal modes are computed as functions of the pixel values and referred to as basis functions of the principal manifold.

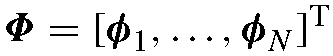

To make these concepts concrete, consider a straight line in R3, passing through the origin and parallel to the vector a = [a1,a2,a3]T. Any point on the line can be described by three coordinates; nevertheless, the subspace that consists of all points on the line has a single degree of freedom, with the principal mode corresponding to translation along the direction of a. Consequently, representing the points in this subspace requires a single basis function: The analogy here is between the line and the face subspace and between R3 and the image space.

The analogy here is between the line and the face subspace and between R3 and the image space.

Note that, in theory, according to the described model any face image should fall in the face subspace. In practice, owing to sensor noise, the signal usually has a nonzero component outside the face subspace. This introduces uncertainty into the model and requires algebraic and statistical techniques capable of extracting the basis functions of the principal manifold in the presence of noise. In Sect. 2.2.3, we briefly describe principal component analysis, which plays an important role in many of such techniques. For a more detailed discussion, see Gerbrands [12] and Joliffe [17].

Principal Component Analysis

Principal component analysis (PCA) [17] is a dimensionality reduction technique based on extracting the desired number of principal components of the multidimensional data. The first principal component is the linear combination of the original dimensions that has the maximum variance; the nth principal component is the linear combination with the highest variance, subject to being orthogonal to the n – 1 first principal components.

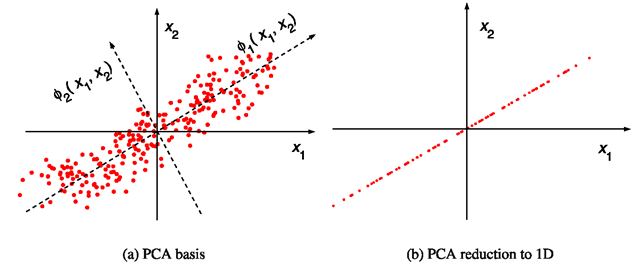

The idea of PCA is illustrated in Fig. 2.1a; the axis labeled![]() corresponds to the direction of maximum variance and is chosen as the first principal component. In a two-dimensional case, the second principal component is then determined uniquely by the orthogonality constraints; in a higher-dimensional space the selection process would continue, guided by the variances of the projections.

corresponds to the direction of maximum variance and is chosen as the first principal component. In a two-dimensional case, the second principal component is then determined uniquely by the orthogonality constraints; in a higher-dimensional space the selection process would continue, guided by the variances of the projections.

PCA is closely related to the Karhunen-Loève Transform (KLT) [21], which was derived in the signal processing context as the orthogonal transform with the basis  that for any

that for any![]() minimizes the average L2 reconstruction error for data points x

minimizes the average L2 reconstruction error for data points x

One can show [12] that, under the assumption that the data are zero-mean, the formulations of PCA and KLT are identical. Without loss of generality, we hereafter assume that the data are indeed zero-mean; that is, the mean face Xe is always subtracted from the data.

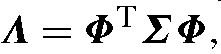

The basis vectors in KLT can be calculated in the following way. Let X be the N x M data matrix whose columns xi,…, xM are observations of a signal embedded in Rn ; in the context of face recognition, M is the number of available face images, and N = mn is the number of pixels in an image. The KLT basis Φ is obtained by solving the eigenvalue problem where Σ is the covariance

where Σ is the covariance

Fig. 2.1 The concept of PCA/KLT. a Solid lines, the original basis; dashed lines, the KLT basis. The dots are selected at regularly spaced locations on a straight line rotated at 30° and then perturbed by isotropic 2D Gaussian noise. b The projection (1D reconstruction) of the data using only the first principal component matrix of the data

is the eigenvector matrix of Σ, and Λ is the diagonal matrix with eigenvalues

is the eigenvector matrix of Σ, and Λ is the diagonal matrix with eigenvalues![]() on its main diagonal, so φ j is the eigenvector corresponding to the j th largest eigenvalue. Then it can be shown that the eigenvalue Xi is the variance of the data projected on

on its main diagonal, so φ j is the eigenvector corresponding to the j th largest eigenvalue. Then it can be shown that the eigenvalue Xi is the variance of the data projected on![]()

Thus, to perform PCA and extract k principal components of the data, one must project the data onto![]() the first k columns of the KLT basis Φ, which correspond to the k highest eigenvalues of Σ. This can be seen as a linear projection

the first k columns of the KLT basis Φ, which correspond to the k highest eigenvalues of Σ. This can be seen as a linear projection![]() which retains the maximum energy (i.e., variance) of the signal. Another important property of PCA is that it decorrelates the data: the covariance matrix of

which retains the maximum energy (i.e., variance) of the signal. Another important property of PCA is that it decorrelates the data: the covariance matrix of is always diagonal.

is always diagonal.

The main properties of PCA are summarized by the following

namely, approximate reconstruction, orthonormality of the basis![]() and decorrelated principal components

and decorrelated principal components respectively. These properties are illustrated in Fig. 2.1, where PCA is successful in finding the principal manifold, and in Fig. 2.8a (see later), where it is less successful, owing to clear nonlinearity of the principal manifold.

respectively. These properties are illustrated in Fig. 2.1, where PCA is successful in finding the principal manifold, and in Fig. 2.8a (see later), where it is less successful, owing to clear nonlinearity of the principal manifold.

PCA may be implemented via singular value decomposition (SVD). The SVD of an M x N matrix![]() is given by

is given by

where the M x N matrix U and the N x N matrix V have orthonormal columns, and the N x N matrix D has the singular values1 of X on its main diagonal and zero elsewhere.

It can be shown that![]() so SVD allows efficient and robust computation of PCA without the need to estimate the data covariance matrix Σ (2.2). When the number of examples M is much smaller than the dimension N, this is a crucial advantage.

so SVD allows efficient and robust computation of PCA without the need to estimate the data covariance matrix Σ (2.2). When the number of examples M is much smaller than the dimension N, this is a crucial advantage.

Eigenspectrum and Dimensionality

An important largely unsolved problem in dimensionality reduction is the choice of k, the intrinsic dimensionality of the principal manifold. No analytical derivation of this number for a complex natural visual signal is available to date. To simplify this problem, it is common to assume that in the noisy embedding of the signal of interest (in our case, a point sampled from the face subspace) in a high-dimensional space, the signal-to-noise ratio is high. Statistically, that means that the variance of the data along the principal modes of the manifold is high compared to the variance within the complementary space.

This assumption relates to the eigenspectrum, the set of eigenvalues of the data covariance matrix Σ. Recall that the i th eigenvalue is equal to the variance along the i th principal component; thus, a reasonable algorithm for detecting k is to search for the location along the decreasing eigenspectrum where the value of Xi drops significantly. A typical eigenspectrum for a face recognition problem, and the natural choice of k for such a spectrum, is shown in Fig. 2.3b (see later).

In practice, the choice of k is also guided by computational constraints, related to the cost of matching within the extracted principal manifold and the number of available face images. See Penev and Sirovich [29] as well as Sects. 2.3.2 and 2.3.4 for more discussion on this issue.

Linear Subspaces

Perhaps the simplest case of principal manifold analysis arises under the assumption that the principal manifold is linear. After the origin has been translated to the mean face (the average image in the database) by subtracting it from every image, the face subspace is a linear subspace of the image space. In this section, we describe methods that operate under this assumption and its generalization, a multilinear manifold.

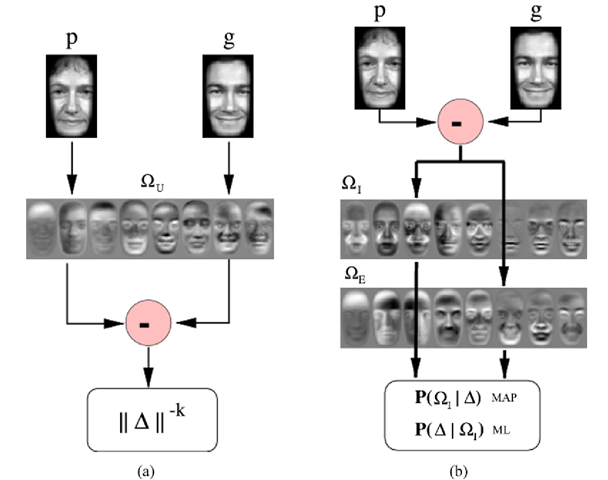

Fig. 2.2 Eigenfaces: the average face on the left, followed by seven top eigenfaces.

Eigenfaces and Related Techniques

In their ground-breaking work in 1990, Kirby and Sirovich [19] proposed the use of PCA for face analysis and representation. Their paper was followed by the “eigen-faces” technique by Turk and Pentland [35], the first application of PCA to face recognition. Because the basis vectors constructed by PCA had the same dimension as the input face images, they were named “eigenfaces.” Figure 2.2 shows an example of the mean face and a few of the top eigenfaces. Each face image was projected (after subtracting the mean face) into the principal subspace; the coefficients of the PCA expansion were averaged for each subject, resulting in a single k-dimensional representation of that subject. When a test image was projected into the subspace, Euclidean distances between its coefficient vector and those representing each subject were computed. Depending on the distance to the subject for which this distance would be minimized, and the PCA reconstruction error (2.1), the image was classified as belonging to one of the familiar subjects, as a new face, or as a nonface. The latter demonstrates the dual use of subspace techniques for detection: When the appearance of an object class (e.g., faces) is modeled by a subspace, the distance from this subspace can serve to classify an object as a member or a nonmember of the class.

Probabilistic Eigenspaces

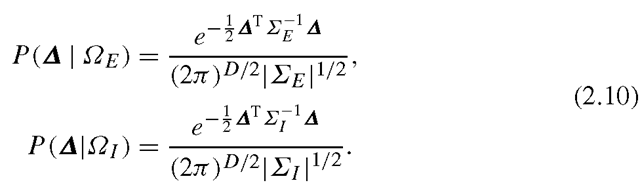

The role of PCA in the original Eigenfaces was largely confined to dimensionality reduction. The similarity between images 11 and 12 was measured in terms of the Euclidean norm of the difference![]() projected to the subspace, essentially ignoring the variation modes within the subspace and outside it. This was improved in the extension of eigenfaces proposed by Moghaddam and Pent-land [24, 25], which uses a probabilistic similarity measure based on a parametric estimate of the probability density ρ(Δ | Ω).

projected to the subspace, essentially ignoring the variation modes within the subspace and outside it. This was improved in the extension of eigenfaces proposed by Moghaddam and Pent-land [24, 25], which uses a probabilistic similarity measure based on a parametric estimate of the probability density ρ(Δ | Ω).

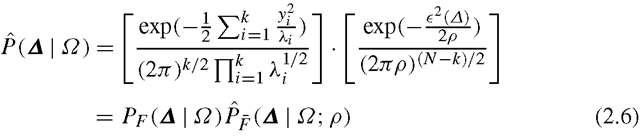

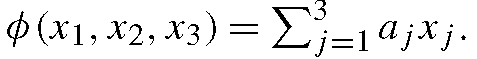

A major difficulty with such estimation is that normally there are not nearly enough data to estimate the parameters of the density in a high dimensional space. Moghaddam and Pentland overcame this problem by using PCA to divide the vector space Rn into two subspaces, as shown in Fig. 2.3: the principal subspace F, obtained by Φk (the first k columns of Φ ) and its orthogonal complement F spanned by the remaining columns of Φ. The operating assumption here is that intrinsic dimensionality k (at most) and thus reside in F, with the exception of additive white Gaussian noise within F.the data have

Fig. 2.3 a Decomposition of Rn into the principal subspace F and its orthogonal complement F for a Gaussian density. b Typical eigenvalue spectrum and its division into the two orthogonal subspaces

Every image can be decomposed into two orthogonal components by projection into these two spaces. Figure 2.3a shows the decomposition of Δ into distance within face subspace (DIFS) and the distance from face subspace (DFFS). Moreover, the probability density can be decomposed into two orthogonal components.

In the simplest case,![]() is a Gaussian density. As derived by Moghaddam and Pentland [24], the complete likelihood estimate in this case can be written as the product of two independent marginal Gaussian densities

is a Gaussian density. As derived by Moghaddam and Pentland [24], the complete likelihood estimate in this case can be written as the product of two independent marginal Gaussian densities

where![]() is the true marginal density in

is the true marginal density in is the estimated

is the estimated

marginal density in![]() are the principal components of

are the principal components of![]() is the PCA reconstruction error (2.1). The information-theoretical optimal value for the noise density parameter ρ is derived by minimizing the Kullback-Leibler (KL) divergence [8] and can be shown to be simply the average of the N – k smallest eigenvalues

is the PCA reconstruction error (2.1). The information-theoretical optimal value for the noise density parameter ρ is derived by minimizing the Kullback-Leibler (KL) divergence [8] and can be shown to be simply the average of the N – k smallest eigenvalues

This is a special case of the recent, more general factor analysis model called probabilistic PCA (PPCA) proposed by Tipping and Bishop [34]. In their formulation, the above expression for ρ is the maximum-likelihood solution of a latent variable model in contrast to the minimal-divergence solution derived by Moghaddam and Pentland [24].

In practice, most of the eigenvalues in F cannot be computed owing to insufficient data, but they can be estimated, for example, by fitting a nonlinear function to the available portion of the eigenvalue spectrum and estimating the average of the eigenvalues beyond the principal subspace. Fractal power law spectra of the form f-n are thought to be typical of “natural” phenomenon and are often a good fit to the decaying nature of the eigenspectrum, as illustrated by Fig. 2.3b.

In this probabilistic framework, the recognition of a test image x is carried out in terms of computing for every database example x i the difference![]() and its decomposition into the F and F components and then ranking the examples according to the value in (2.6).

and its decomposition into the F and F components and then ranking the examples according to the value in (2.6).

Linear Discriminants: Fisherfaces

When substantial changes in illumination and expression are present, much of the variation in the data is due to these changes. The PCA techniques essentially select a subspace that retains most of that variation, and consequently the similarity in the face subspace is not necessarily determined by the identity.

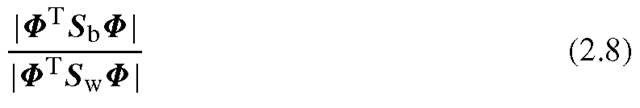

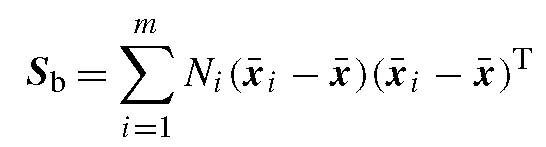

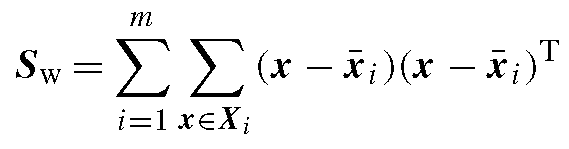

Belhumeur et al. [2] propose to solve this problem with “Fisherfaces”, an application of Fisher’s linear discriminant (FLD). FLD selects the linear subspace Φ, which maximizes the ratio

where

is the between-class scatter matrix, and

is the within-class scatter matrix; m is the number of subjects (classes) in the database. Intuitively, FLD finds the projection of the data in which the classes are most linearly separable. It can be shown that the dimension of Φ is at most m — 1.2

Because in practice Sw is usually singular, the Fisherfaces algorithm first reduces the dimensionality of the data with PCA so (2.8) can be computed and then applies FLD to further reduce the dimensionality to m – 1. The recognition is then accomplished by a NN classifier in this final subspace. The experiments reported by Belhumeur et al. [2] were performed on data sets containing frontal face images of 5 people with drastic lighting variations and another set with faces of 16 people with varying expressions and again drastic illumination changes. In all the reported experiments Fisherfaces achieve a lower error rate than eigenfaces.

Bayesian Methods

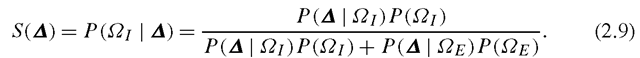

Consider now a feature space of Δ vectors, the differences between two images ![]() One can define two classes of facial image variations: intrapersonal variations Ωι (corresponding, for example, to different facial expressions and illuminations of the same individual) and extrapersonal variations ΩΕ (corresponding to variations between different individuals). The similarity measure Ξ(Δ) can then be expressed in terms of the intrapersonal a posteriori probability of Δ belonging to Ωι given by the Bayes rule.

One can define two classes of facial image variations: intrapersonal variations Ωι (corresponding, for example, to different facial expressions and illuminations of the same individual) and extrapersonal variations ΩΕ (corresponding to variations between different individuals). The similarity measure Ξ(Δ) can then be expressed in terms of the intrapersonal a posteriori probability of Δ belonging to Ωι given by the Bayes rule.

Note that this particular Bayesian formulation, proposed by Moghaddam et al. [27], casts the standard face recognition task (essentially an m-ary classification problem for m individuals) into a binary pattern classification problem with Ωι and ΩΕ.

The densities of both classes are modeled as high-dimensional Gaussians, using an efficient PCA-based method described in Sect. 2.3.2.

These densities are zero-mean, because for each Δ = I j – Ii there exists a Ii – I j.

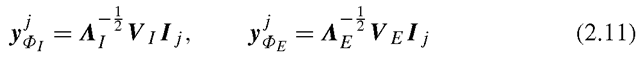

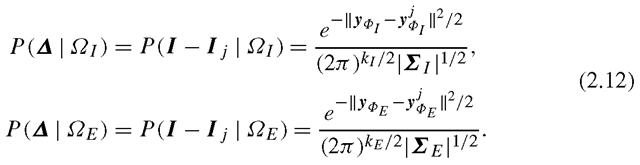

By PCA, the Gaussians are known to occupy only a subspace of image space (face subspace); thus, only the top few eigenvectors of the Gaussian densities are relevant for modeling. These densities are used to evaluate the similarity in (2.9). Computing the similarity involves first subtracting a candidate image I from a database example I j. The resulting Δ image is then projected onto the eigenvectors of the extrapersonal Gaussian and also the eigenvectors of the intrapersonal Gaussian. The exponentials are computed, normalized, and then combined as in (2.9). This operation is iterated over all examples in the database, and the example that achieves the maximum score is considered the match. For large databases, such evaluations are expensive and it is desirable to simplify them by off-line transformations.

To compute the likelihoods the database images I j

the database images I j

are preprocessed with whitening transformations [11]. Each image is converted and stored as a set of two whitened subspace coefficients: γΦι for intrapersonal space and![]() for extrapersonal space

for extrapersonal space

where Λχ and VX are matrices of the largest eigenvalues and eigenvectors, respectively, of Σχ (X being a substituting symbol for I or E).

After this preprocessing, evaluating the Gaussians can be reduced to simple Euclidean distances as in (2.12). Denominators are of course precomputed. These likelihoods are evaluated and used to compute the maximum a posteriori (MAP) similarity 5(Δ) in (2.9). Euclidean distances are computed between the kI-dimensional yΦι vectors as well as the kE-dimensional yΦε vectors. Thus, roughly 2 x (kE + kI) arithmetic operations are required for each similarity computation, avoiding repeated image differencing and projections

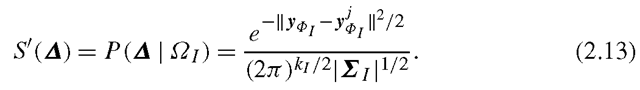

The maximum likelihood (ML) similarity matching is even simpler, as only the intrapersonal class is evaluated, leading to the following modified form for the similarity measure.

The approach described above requires two projections of the difference vector Δ, from which likelihoods can be estimated for the Bayesian similarity measure. The computation flow is illustrated in Fig. 2.4b. The projection steps are linear while the posterior computation is nonlinear. Because of the double PCA projections required, this approach has been called a “dual eigenspace” technique. Note the projection of the difference vector Δ onto the “dual eigenfaces” (ΩI and Ωε) for computation of the posterior in (2.9).

It is instructive to compare and contrast LDA (Fisherfaces) and the dual subspace technique by noting the similar roles of the between-class/within-class and extrapersonal/intrapersonal subspaces. One such analysis was presented by Wang and Tang [39] where PCA, LDA, and Bayesian methods were “unified” under a three-parameter subspace method. Ultimately, the optimal probabilistic justification of LDA is for the case of two Gaussian distributions of equal covariance (although LDA tends to perform rather well even when this condition is not strictly true). In contrast, the dual formulation is entirely general and probabilistic by definition, and it makes no appeals to geometry, Gaussianity, or symmetry of the underlying data or, in fact, the two “meta classes” (intra-, and extrapersonal). These two probability distributions can take on any form (e.g., arbitrary mixture models), not just single Gaussians, although the latter case does make for easy visualization by diagonaliz-ing the dual covariances as two sets of “eigenfaces”.

Fig. 2.4 Signal flow diagrams for computing the similarity g between two images. a Original eigenfaces. b Bayesian similarity. The difference image is projected through both sets of (intra/extra) eigenfaces to obtain the two likelihoods