So far, we’ve enjoyed the isolated invocation context provided by stateless and stateful session beans. These bean types service requests independently of one another in separate bean instances, relieving the EJB developer of the burden introduced by explicit concurrent programming. Sometimes, however, it’s useful to employ a scheme in which a single shared instance is used for all clients, and the singleton session bean, new to the EJB 3.1 Specification, fits this requirement.

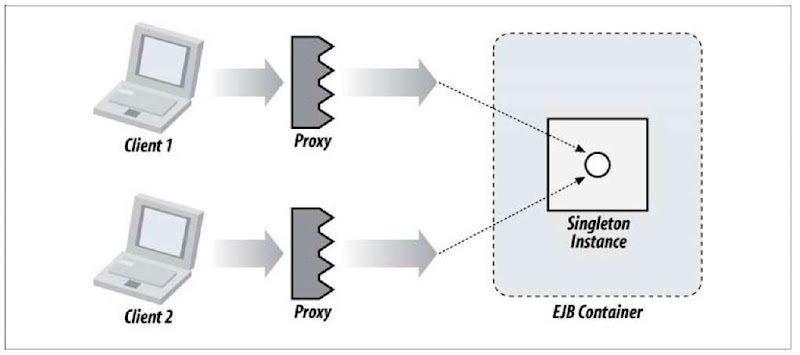

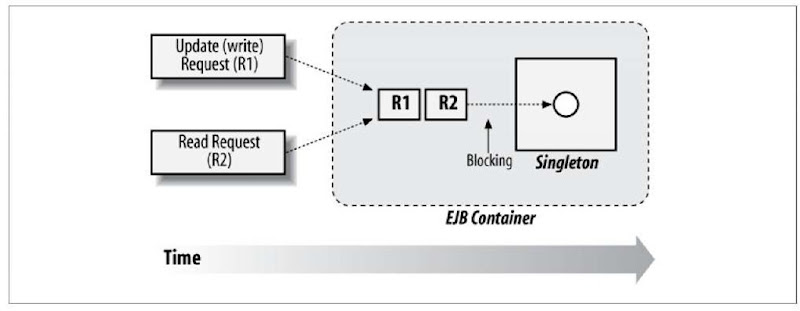

Contrary to most late-generation feature additions, the singleton is actually a much simpler conceptual model than we’ve seen in SLSB and SFSB, as shown in Figure 7-1.

Figure 7-1. All invocations upon a singleton session bean using the same backing instance

Here all client requests are directed through the container to a sole target instance. This paradigm closely resembles that of a pure POJO service approach, and there are quite a few repercussions to consider:

• The instance’s state is shared by all requests.

• There may be any number of requests pouring through the instance’s methods at any one time.

• Due to concurrent invocations, the EJB must be designed as thread-safe.

• Locking or synchronization done to ensure thread safety may force new requests to block (wait). This may not show itself in single-user testing, but it will decimate performance in a production environment if we don’t design very carefully.

• Memory footprint is the leanest of all session bean types. With only one backing instance in play, we don’t take up much RAM.

In short, the singleton bean is poised to be an incredibly efficient choice if applied correctly. Used in the wrong circumstance, or with improper locking strategies, we have a recipe for disaster. Sitting at the very center of the difference between the two is the issue of concurrency.

Concurrency

Until now, EJB developers have been able to sidestep the issue of handling many clients at once by hiding behind the container. In both the stateless and stateful models, the specification mandates that only one request may access a backing bean instance at any one time. Because each request is represented by an invocation within a single thread, this means that SLSB and SFSB implementation classes need not be thread-safe. From the instance’s perspective, one thread at most will enter at any given time.

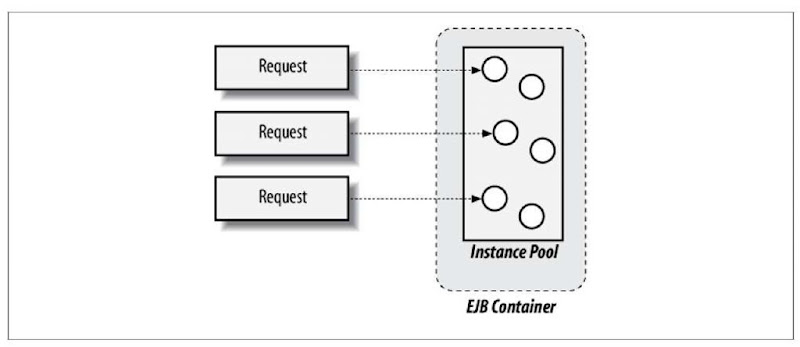

In the case of many concurrent requests to the same SLSB, the container will route each to a unique instance (Figure 7-2).

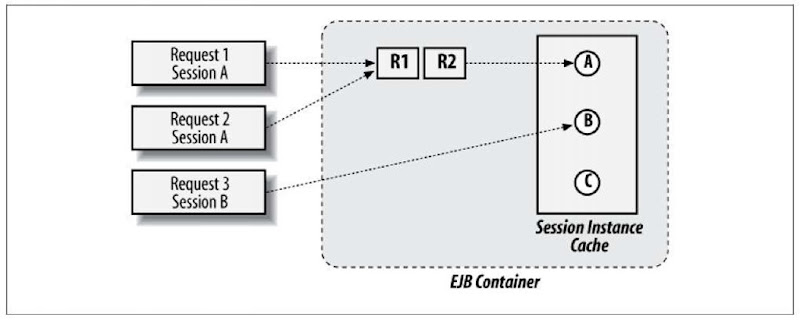

Concurrent requests to the same SFSB are serialized by the container, each one blocking until the underlying instance is available (Figure 7-3).

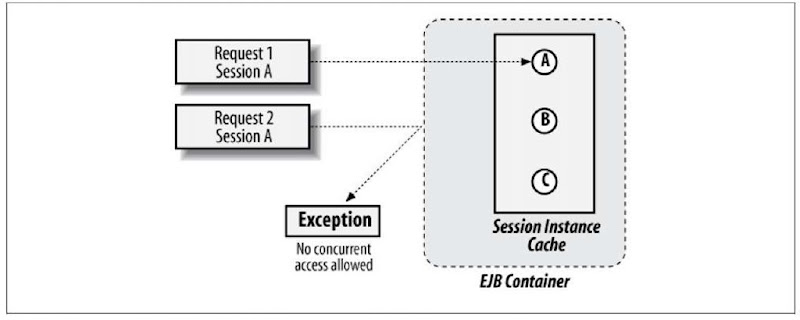

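A bean provider may also optionally prohibit parallel invocations upon a particular stateful session by specifying an access timeout of 0 with @javax.ejb.AccessTimeout. A concurrent request then fails immediately with a javax.ejb.ConcurrentAccessException instead of waiting (Figure 7-4).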

A full discussion of concurrent programming is outside the scope of this topic, but we’ll introduce the main points of concern as they relate to EJB. Intentionally left out of this text are formal explanations of thread visibility, deadlock, livelock, and race conditions. If these are foreign concepts, a very good primer (and much more) is Java Concurrency in Practice by Brian Goetz, et al. (Addison-Wesley; http://jcip.net/).

Figure 7-2. Stateless session bean invocations pulling from a pool of backing instances for service

Figure 7-3. Stateful session bean invocations pulling from a cache of backing instances for service

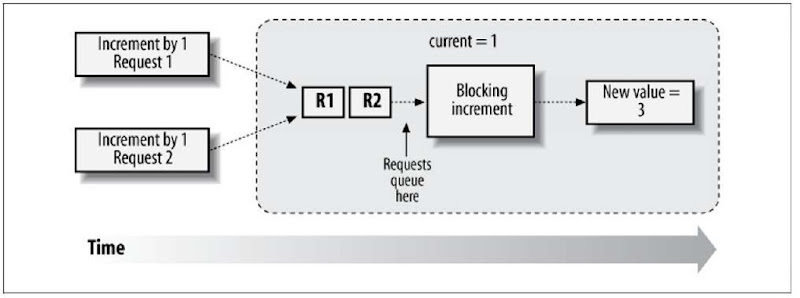

Figure 7-4. Concurrent requests upon the same session blocking until the session is available for use

Shared Mutable Access

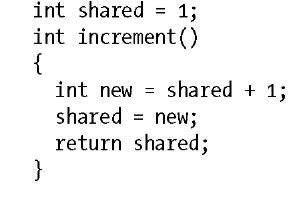

When two or more threads share access to a mutable instance, a number of interesting scenarios present themselves. State may change unexpectedly during the course of an operation. The example of a simple increment function illustrates this well:
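Here is a minimal sketch of such a function. The UnsafeCounter class name is hypothetical. The problem is that count++ is not one step: it reads the field, adds 1, and writes the result back.

```java
/** Hypothetical counter illustrating an unsafe read-modify-write on shared state. */
class UnsafeCounter {
    private int count; // shared mutable state

    /** Not thread-safe: count++ is a read, an add, and a write. */
    public void increment() {
        count++;
    }

    public int get() {
        return count;
    }
}
```

With a single thread this always gives the right answer. With many threads, two of them can read the same old value, and one update is silently lost.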

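Here we simply bump the value of a shared variable by 1. Unfortunately, in the case of many threads, this operation is not safe. The increment is really a read, an add, and a write, so another thread may read the old value before the first thread writes back, and one of the two updates is lost. Figure 7-5 illustrates this.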

Figure 7-5. Poor synchronization policies can lead to incorrect results

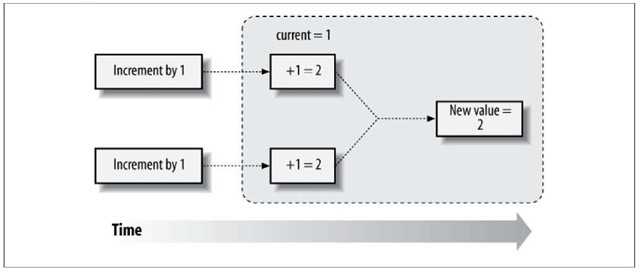

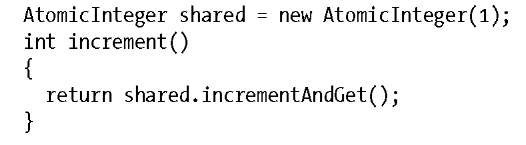

There are two solutions to this problem. First, we can encapsulate the actual increment of the shared variable into a single, contained atomic operation. This eliminates the chance of other threads sneaking in, because there is no longer a separate read and write for another thread to slip between. In Java this is provided by classes in the java.util.concurrent.atomic package, for instance AtomicInteger (http://java.sun.com/javase/6/docs/api/java/util/concurrent/atomic/AtomicInteger.html):
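Here is a sketch of the same counter rebuilt on AtomicInteger. The class name is hypothetical. incrementAndGet() performs the read, add, and write as one indivisible operation, without taking a lock.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical counter whose increment is a single atomic operation. */
class AtomicCounter {
    private final AtomicInteger count = new AtomicInteger();

    /** Atomically adds 1 and returns the new value; no lock is held. */
    public int increment() {
        return count.incrementAndGet();
    }

    public int get() {
        return count.get();
    }
}
```

Four threads that each call increment() 10,000 times leave get() at exactly 40,000. The plain count++ version offers no such guarantee.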

In a multithreaded environment, the execution flow of this example is efficient and may look something like Figure 7-6.

Figure 7-6. Proper synchronization policies allow many concurrent requests to execute in parallel and maintain reliable state

Behind the scenes, the backing increment is done all at once. Thus there can be no danger of inconsistent behavior introduced by multithreaded access.

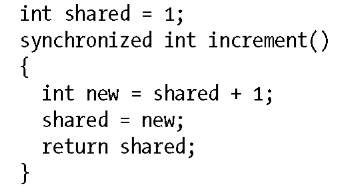

Often, however, our requirements are more involved than simple increments upon a variable, and we cannot perform all of the work in one atomic unit. In this case, we need to make other threads wait until our mutable operations have completed. This is called “blocking” and is accomplished via the synchronized keyword in Java:
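Here is a minimal sketch of that blocking approach, assuming a hypothetical RunningTotal class. Its two fields must always change together, which a single atomic variable cannot express.

```java
/** Hypothetical running total whose two fields must always change together. */
class RunningTotal {
    private long sum;
    private int samples;

    /** synchronized: a thread must hold this object's lock to enter. */
    public synchronized void add(final long value) {
        sum += value; // both writes happen while the lock is held,
        samples++;    // so no other synchronized method sees one without the other
    }

    public synchronized double average() {
        return samples == 0 ? 0.0 : (double) sum / samples;
    }

    public synchronized int getSamples() {
        return samples;
    }
}
```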

The synchronized keyword here denotes that any executing thread must first gain rights to the method. It does so by acquiring what’s called a mutually exclusive lock, a guard that may be held by only one thread at a time. The thread that owns the lock is permitted entry into the synchronized block, while others must block until the lock becomes available. In Java, every object has a corresponding lock. When applied to an instance method, the synchronized keyword implicitly uses the lock of the this reference. So the previous example is analogous to:
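Here is a sketch of that equivalence, using a hypothetical class that writes the lock out explicitly with synchronized (this). The behavior matches marking the methods themselves synchronized.

```java
/** Hypothetical running total that names its lock explicitly. */
class RunningTotalExplicitLock {
    private long sum;
    private int samples;

    public void add(final long value) {
        synchronized (this) { // the same lock a synchronized instance method acquires
            sum += value;
            samples++;
        }
    }

    public int getSamples() {
        synchronized (this) {
            return samples;
        }
    }
}
```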

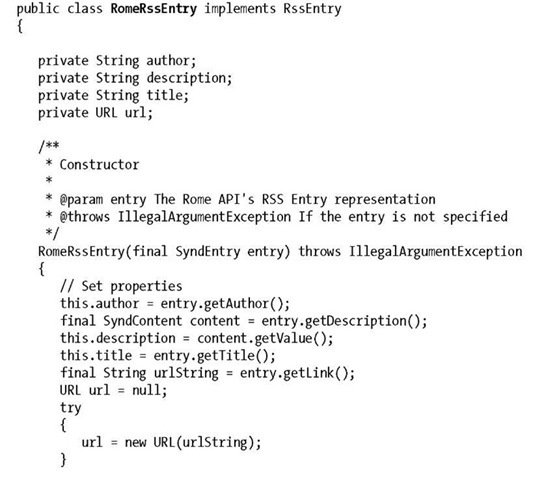

The execution flow when using a locking strategy looks like Figure 7-7.

Figure 7-7. Blocking synchronization policy making new requests wait until it’s safe to proceed

Although this code is safe, it does introduce a performance bottleneck, as only one thread may gain access to the mutable operation at any one time. Therefore we must be very selective about what and for how long we synchronize.

Container-Managed Concurrency

EJB 3.1 provides a simple locking abstraction to the bean provider in the form of container-managed concurrency (CMC). By introducing a few annotations (and corresponding XML metadata) into the API, it frees the developer from the responsibility of correctly implementing thread-safe code. The container, in turn, transparently applies the appropriate locking strategy. Note, however, that these locks are applied by the container. If you use your bean implementation class directly as a POJO, it is not safe for use in concurrent environments.

By default, singleton beans employ a concurrency management type of CONTAINER, which takes the explicit form upon the bean implementation class:
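Here is a minimal sketch of that explicit form, using a hypothetical bean named SharedStateBean. It needs the EJB 3.1 API (javax.ejb) on the classpath and only takes effect inside a container.

```java
import javax.ejb.ConcurrencyManagement;
import javax.ejb.ConcurrencyManagementType;
import javax.ejb.Singleton;

@Singleton
// CONTAINER is already the default; stating it explicitly documents the intent
@ConcurrencyManagement(ConcurrencyManagementType.CONTAINER)
public class SharedStateBean {
    // Business methods declared here are guarded by container-managed locks
}
```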

The only other type is BEAN, under which the bean provider manages concurrency directly. Because only one instance of a singleton bean will exist (per VM) in an application, it makes sense to support concurrency in some fashion.

Each method in CMC will be assigned a lock type, which designates how the container should enforce access. By default, we use a write lock, which may be held by only one thread at a time. All other requests for the lock must wait their turn until it becomes available. A read lock may be specified explicitly instead. It allows full concurrent access to all requests, provided that no write lock is currently being held. Locks are defined easily:
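Here is a sketch using a hypothetical LockedBean, which requires the EJB 3.1 API and a container. A class-level READ lock acts as the default, and a method-level WRITE lock overrides it for the one method that mutates state.

```java
import javax.ejb.Lock;
import javax.ejb.LockType;
import javax.ejb.Singleton;

@Singleton
@Lock(LockType.READ) // class-level default: methods take the shared READ lock
public class LockedBean {
    private String value = "initial";

    // Inherits READ from the class: many readers may run at once
    public String read() {
        return value;
    }

    // Method-level WRITE overrides the class default: exclusive access
    @Lock(LockType.WRITE)
    public void write(final String newValue) {
        this.value = newValue;
    }
}
```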

We may define the lock upon the method in the bean implementation class, on the class itself, or some combination of the two (with method-level overriding the class definition).

Requests upon a singleton bean employing CMC need not block indefinitely until a lock becomes available. We may also specify sensible timeouts:
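Here is a sketch with a hypothetical TimedReadBean, which requires the EJB 3.1 API and a container. @javax.ejb.AccessTimeout takes a value and a java.util.concurrent.TimeUnit, and its unit defaults to milliseconds.

```java
import java.util.concurrent.TimeUnit;
import javax.ejb.AccessTimeout;
import javax.ejb.Lock;
import javax.ejb.LockType;
import javax.ejb.Singleton;

@Singleton
public class TimedReadBean {
    private String value = "initial";

    // Wait at most 15 seconds for a held WRITE lock; after that the container
    // throws javax.ejb.ConcurrentAccessTimeoutException to the caller
    @Lock(LockType.READ)
    @AccessTimeout(value = 15, unit = TimeUnit.SECONDS)
    public String read() {
        return value;
    }

    @Lock(LockType.WRITE)
    public void write(final String newValue) {
        value = newValue;
    }
}
```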

In this example we denote that the read operation should wait for a held write lock a maximum of 15 seconds before giving up and throwing a javax.ejb.ConcurrentAccessTimeoutException.

Bean-Managed Concurrency

Although convenient and resistant to developer error, container-managed concurrency does not cover the full breadth of concerns that multithreaded code must address. In these cases the specification makes available the full power of the Java language’s concurrency tools by offering a bean-managed concurrency mode. This mode opens the gates to fully multithreaded access to the singleton bean. The onus is then on the bean provider to safeguard the bean instance for parallel invocations by manually applying the synchronized and volatile keywords (or the utilities in java.util.concurrent).
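Under bean-managed concurrency the container applies no locking, so the class must protect itself. The text names synchronized and volatile. This sketch instead uses java.util.concurrent's ReentrantReadWriteLock to reproduce by hand the many-readers/one-writer behavior of CMC's READ and WRITE locks.

BmcRegistry is hypothetical. The @Singleton and @ConcurrencyManagement(ConcurrencyManagementType.BEAN) annotations it would carry in a deployment are left as a comment, so the sketch compiles without the Java EE API.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// In a deployment: @Singleton @ConcurrencyManagement(ConcurrencyManagementType.BEAN)
class BmcRegistry {
    private final ReadWriteLock lock = new ReentrantReadWriteLock();
    private final List<String> names = new ArrayList<String>();

    /** Shared lock: many readers at once, but never alongside a writer. */
    public List<String> getNames() {
        lock.readLock().lock();
        try {
            // Export a read-only copy so callers never touch internal state
            return Collections.unmodifiableList(new ArrayList<String>(names));
        } finally {
            lock.readLock().unlock();
        }
    }

    /** Exclusive lock: one writer, no concurrent readers. */
    public void addName(final String name) {
        lock.writeLock().lock();
        try {
            names.add(name);
        } finally {
            lock.writeLock().unlock();
        }
    }
}
```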

Lifecycle

The life of a singleton bean is very similar to that of the stateless session bean; it is either not yet instantiated or ready to service requests. In general, it is up to the container to determine when to create the underlying bean instance, though the instance must be available before the first invocation is executed. Once created, the singleton bean instance lives in memory for the life of the application and is shared among all requests.

Explicit Startup

New in the EJB 3.1 Specification is the option to explicitly create the singleton bean instance when the application is deployed. This eager initialization is triggered simply:

The @javax.ejb.Startup annotation marks that the container must allocate and assign the bean instance alongside application startup. This becomes especially useful as an application-wide lifecycle listener. By additionally denoting a @javax.annotation.PostConstruct method, we receive a callback at application start:

The method here will be invoked when the EJB is deployed. This application-wide callback was unavailable in previous versions of the specification.
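Here is a sketch combining both annotations in a hypothetical ApplicationLifecycleBean, which requires the EJB 3.1 API and a container. Note that @PostConstruct lives in javax.annotation, not javax.ejb.

```java
import java.util.logging.Logger;
import javax.annotation.PostConstruct;
import javax.ejb.Singleton;
import javax.ejb.Startup;

@Singleton
@Startup // create the instance eagerly, when the application is deployed
public class ApplicationLifecycleBean {
    private static final Logger log =
        Logger.getLogger(ApplicationLifecycleBean.class.getName());

    // Called once by the container right after the eager instance is constructed
    @PostConstruct
    public void onApplicationStart() {
        log.info("Application started");
    }
}
```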

Example: The RSSCacheEJB

Because the singleton bean represents one instance to be used by all incoming requests, we may take advantage of the extremely small impact this session type will have upon our overall memory consumption. The factors to beware are state consistency (thread-safety) and the potential blocking that this might impose. Therefore, it’s best to apply this construct in high-read environments.

One such usage may be in implementing a simple high-performance cache. By letting parallel reads tear through the instance unblocked, we don’t have the overhead of a full pool that might be supplied by an SLSB. The internal state of a cache is intended to be shared by many distinct sessions, so we don’t need the semantics of an SFSB. Additionally, we may eagerly populate the cache when the application deploys, before any requests hit the system.

For this example, we’ll define an RSS Caching service to read in a Really Simple Syndication (RSS; http://en.wikipedia.org/wiki/RSS) feed and store its contents for quick access. Relatively infrequent updates to the cache will temporarily block incoming read requests, and then concurrent operation will resume as normal, as illustrated in Figure 7-8.

Figure 7-8. A concurrent singleton session bean servicing many requests in parallel

Value Objects

As we’ll be representing the RSS entries in some generic form, first we should define what information we’ll expose to the client:
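Here is a minimal sketch of that contract as a plain interface. It assumes an entry exposes an author, a title, a link, and a description; the property names are assumptions.

```java
import java.net.URL;

/** Read-only view of one RSS entry; the property names are assumed. */
interface RssEntry {
    String getAuthor();

    String getTitle();

    /** Link to the full entry; implementations should return a copy. */
    URL getURL();

    String getDescription();
}
```

Keeping the client-facing type an interface is what lets a library-backed implementation be swapped later without touching client code.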

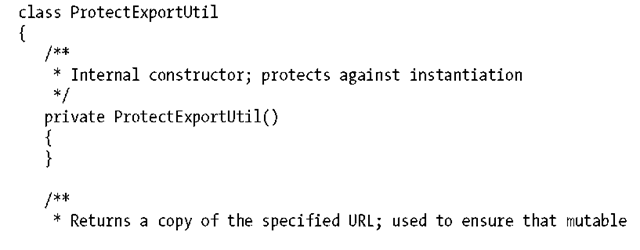

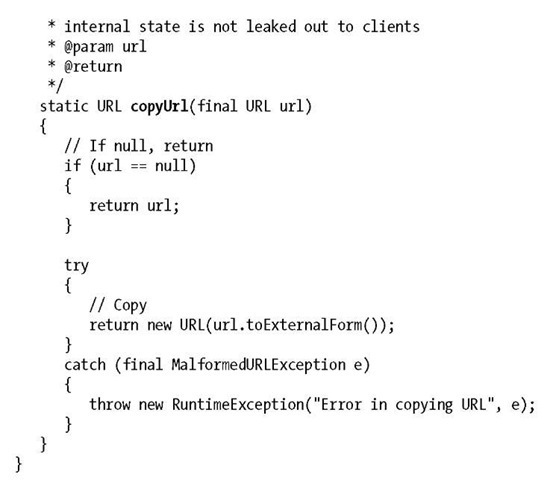

This is a very simple definition of properties. One thing that’s important to note is that the type java.net.URL is itself mutable. We must take care to protect the internal state from being exported in a read request. Otherwise, a local client, which uses pass-by-reference, may change the contents of the URL returned from getURL(). To this end, we’ll introduce a simple utility to copy the URL, so that if a client writes to it, only that client’s copy is affected:
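Here is a sketch of such a utility, named ProtectExportUtil as in the text. The copyUrl method name is an assumption. It builds a fresh URL from the external form of the original, so the caller gets a distinct instance.

```java
import java.net.MalformedURLException;
import java.net.URL;

/** Hands out copies so callers never share our internal URL instance. */
final class ProtectExportUtil {
    private ProtectExportUtil() {
        // static utility; no instances
    }

    /** Returns a new URL with the same external form, or null for null input. */
    static URL copyUrl(final URL url) {
        if (url == null) {
            return null;
        }
        try {
            return new URL(url.toExternalForm());
        } catch (final MalformedURLException e) {
            // Not expected for a URL that was already parsed with the default handlers
            throw new IllegalStateException("Could not copy URL: " + url, e);
        }
    }
}
```

The check below compares external forms rather than calling URL.equals(). URL.equals() may try to resolve host names.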

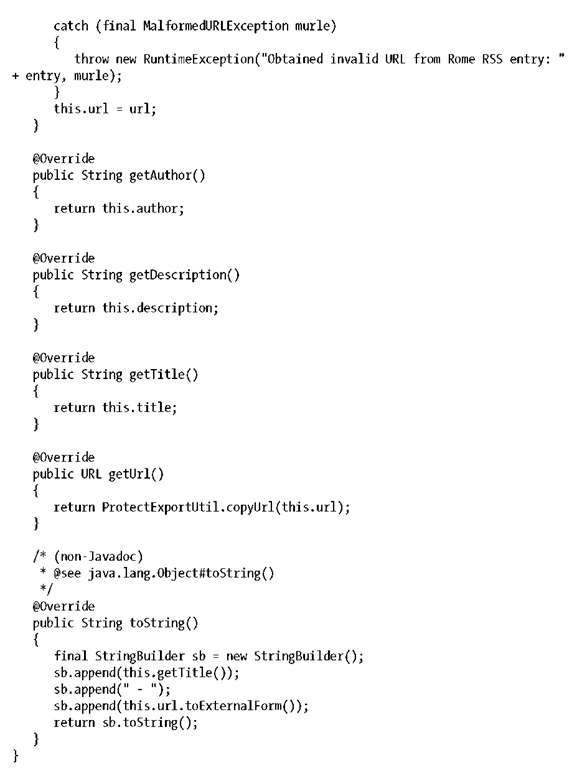

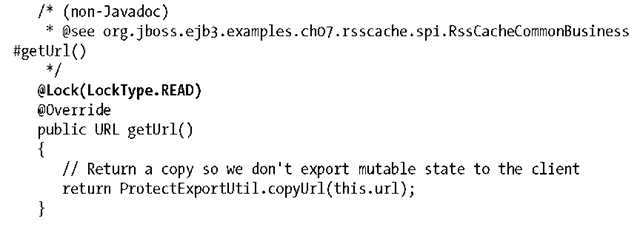

Project ROME (https://rome.dev.java.net/) is an open source framework for dealing with RSS feeds, and we’ll use it to back our implementation of RssEntry. In returning URLs, it will leverage the ProtectExportUtil:

That should cover the case of the value object our EJB will need in order to return some results to the client. Note that the ROME implementation is completely separated from the contracted interface. Should we choose to use another library in the future, our clients won’t require any recompilation.

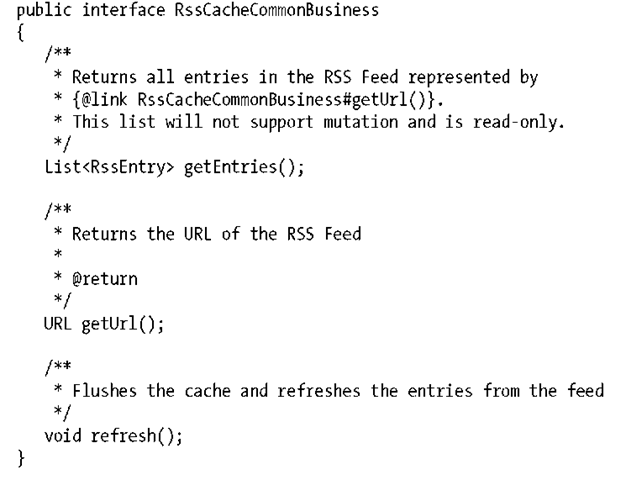

The Contract: Business Interfaces

Now we need to define methods to read the cache’s contents, obtain the URL that hosts the RSS feed, and refresh the cache from the URL:

Again, we must take care to protect the internal cache state from being exported in a read request. The point in question here is the List returned from getEntries(). Therefore we note in the documentation that the returned reference will be read-only.

The refresh() operation will obtain the contents from the URL returned by getURL(), and parse these into the cache.

The cache itself is a List of the RssEntry type.
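Here is a sketch of the business interface described above. The name RssCacheCommonBusiness is hypothetical. A one-method stand-in for RssEntry is included so the block compiles on its own.

```java
import java.net.URL;
import java.util.List;

/** Minimal stand-in for the RssEntry value object so this sketch compiles alone. */
interface RssEntry {
    String getTitle();
}

/** Business contract of the cache; the interface name is hypothetical. */
interface RssCacheCommonBusiness {
    /** Returns the cached entries. The returned List is read-only. */
    List<RssEntry> getEntries();

    /** Returns the URL of the RSS feed being cached. */
    URL getURL();

    /** Reads the feed at getURL() and replaces the cache contents. */
    void refresh();
}
```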

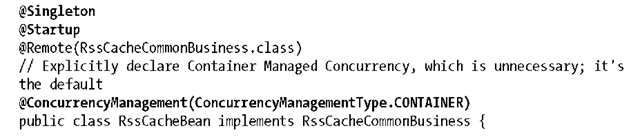

Bean Implementation Class

Once again, the bean implementation class contains the meat and potatoes of the business logic, as well as the annotations that round out the EJB’s metadata:

The declaration marks our class as a singleton session bean that should be initialized eagerly upon application deployment. We’ll use container-managed concurrency (which is defined explicitly here for illustration, even though this is the default strategy).

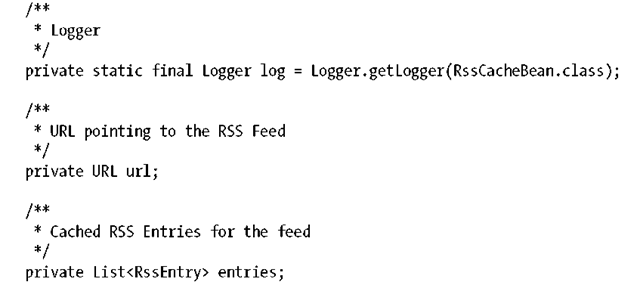

Some internal members are next:

The URL here will be used to point to the RSS feed we’re caching. The List is our cache. Now to implement our business methods:

Again, we protect the internal URL from being changed from the outside. The lock type is READ and so concurrent access is permitted, given no WRITE locks are currently held:

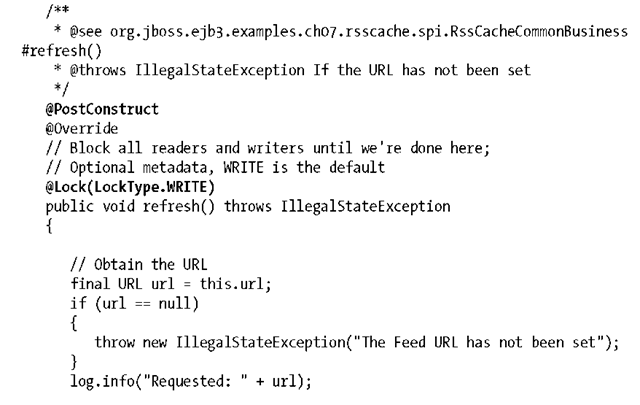

There’s a lot going on here. @PostConstruct upon the refresh() method means that this will be invoked automatically by the container when the instance is created. The instance is created at application deployment due to the @Startup annotation. So, transitively, this method will be invoked before the container is ready to service any requests.

The WRITE lock upon this method means that during its invocation, all incoming requests must wait until the pending request completes. The cache may not be read via getEntries() during refresh().

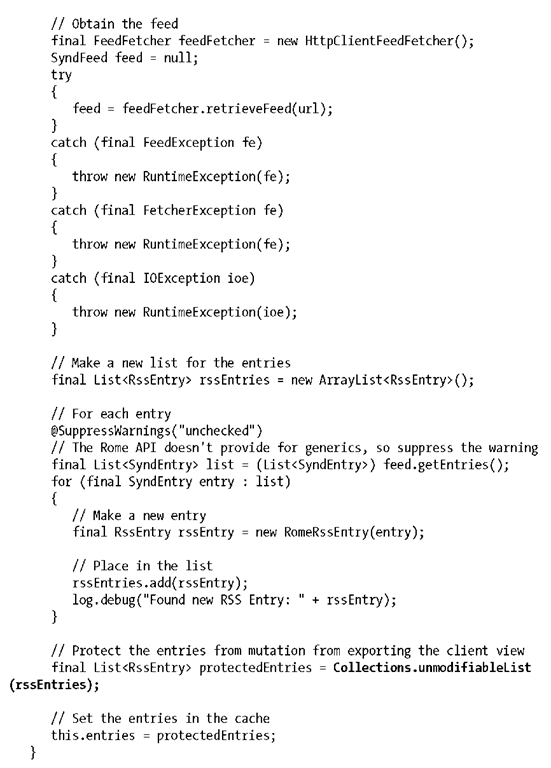

Also very important is that our cache is made immutable before being set. Accomplished via Collections.unmodifiableList(), this enforces the read-only contract of getEntries().
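Collections.unmodifiableList() returns a read-only view, not a copy, so this only works if nobody else keeps a reference to the wrapped list. Here is a plain-Java sketch of the publishing step, using a hypothetical SnapshotHolder class. The field is volatile because there is no container lock here to order the write before later reads.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical holder that publishes read-only snapshots of a list. */
class SnapshotHolder {
    private volatile List<String> snapshot = Collections.emptyList();

    /** Copies the source into a fresh list, wraps it read-only, then publishes it. */
    void replace(final List<String> source) {
        final List<String> fresh = new ArrayList<String>(source); // no caller holds this list
        snapshot = Collections.unmodifiableList(fresh);
    }

    List<String> get() {
        return snapshot;
    }
}
```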

The remainder of the method body simply involves reading in the RSS feed from the URL and parsing it into our value objects.
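Here is a sketch that pulls together the pieces described in this section: the declaration, the members, the READ methods, and the WRITE refresh. It makes these assumptions:

- the RssEntry type exists;
- a business interface with the three methods above exists, hypothetically named RssCacheCommonBusiness;
- ProtectExportUtil has a copyUrl(URL) method (the method name is assumed).

The FEED_URL constant and the readFeed helper are hypothetical placeholders for the ROME-based fetching and parsing, which is not reproduced here. The bean requires the EJB 3.1 API and a container.

```java
import java.net.MalformedURLException;
import java.net.URL;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import javax.annotation.PostConstruct;
import javax.ejb.ConcurrencyManagement;
import javax.ejb.ConcurrencyManagementType;
import javax.ejb.Lock;
import javax.ejb.LockType;
import javax.ejb.Singleton;
import javax.ejb.Startup;

@Singleton
@Startup // instance created, and refresh() run, at deployment
@ConcurrencyManagement(ConcurrencyManagementType.CONTAINER) // the default, stated for clarity
public class RssCacheBean implements RssCacheCommonBusiness {

    // Hypothetical placeholder feed location
    private static final String FEED_URL = "http://example.com/rss.xml";

    private URL url;                // the feed being cached
    private List<RssEntry> entries; // the cache; always an unmodifiable list

    @Lock(LockType.READ)
    public URL getURL() {
        // Hand out a copy so callers cannot alter our internal URL
        return ProtectExportUtil.copyUrl(url);
    }

    @Lock(LockType.READ)
    public List<RssEntry> getEntries() {
        // Already read-only, so the reference itself is safe to share
        return entries;
    }

    @PostConstruct
    @Lock(LockType.WRITE) // readers wait while the cache is rebuilt
    public void refresh() {
        try {
            if (url == null) {
                url = new URL(FEED_URL);
            }
        } catch (final MalformedURLException e) {
            throw new IllegalStateException("Bad feed URL: " + FEED_URL, e);
        }
        final List<RssEntry> fresh = readFeed(url);
        // Wrap a list nobody else references, enforcing getEntries()'s read-only contract
        entries = Collections.unmodifiableList(fresh);
    }

    // Hypothetical helper: fetch and parse the feed (the chapter uses ROME for this).
    // Placeholder body that returns an empty list.
    private List<RssEntry> readFeed(final URL feedUrl) {
        return new ArrayList<RssEntry>();
    }
}
```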