INTRODUCTION

The Open Learning Initiative (OLI) at Carnegie Mellon University is a new evolutionary form of eLearning that derives from a particular tradition in using information and communication technologies (ICT) to deliver instruction. That tradition is distinctive because, when creating digital learning environments, it rigorously and consistently applies research results and assessment methodologies from scientific studies of human learning. The tradition is, in fact, a comparatively small part of the overall eLearning landscape. ICT-based learning tools are typically driven either by the mere opportunity to leverage technological possibilities, e.g. the “webifying” of traditional textbooks, campus-wide laptop requirements, and podcasting of traditional lectures, or by individual instructors’ intuitions about the potential effectiveness of particular eLearning strategies, e.g. an intuition that computer-based graphical simulations of the central limit theorem in statistics will help novice learners understand the meaning and implications of that theorem. These non-scientific strategies, especially those based on intuitions built up over years of teaching experience, have sometimes produced effective eLearning interventions. Their success rate is nonetheless destined to be low, because they are not based on well-confirmed theory about learning and they are seldom subjected to any meaningful formative or summative evaluation that would show whether and how they are working and how they need to be modified to be more effective. The general failure of computer-based pedagogical strategies, especially online classes, to bring transformative change to education is evidence of the limited success of these dominant strategies. (For arguments that thinking in the eLearning domain has been over-simplistic, see: Zemsky and Massey, 2005)

OLI courses also occupy and extend a second distinctive niche in the eLearning tradition. That niche is characterized by using information technology as the primary mode for delivering instruction to novice learners. It should be distinguished from the niches occupied by “learning objects,” which tend to target the learning of specific ideas or skills (Mortimer, 2002), and by “OpenCourseWare,” which provides access to digital materials used to supplement traditional pedagogical strategies (Long, 2002). We believe the niche of eLearning environments that themselves carry out most of the instruction will grow in importance as the world increasingly looks to information and communication technologies to address problems of access to education, its affordability, and accountability for its effectiveness.

A third niche in which the OLI is embedded is the “open educational resources” (OER) movement. (Hylen, 2006) While there have been open educational resources of various kinds for a long time (everything from public libraries to public lectures on college campuses to educational television), the advent of the Internet substantially changed the possibilities for providing open access to educational materials and instruction. MIT’s OpenCourseWare project brought particular attention and focus to OERs. Today, sources ranging from the Internet Archive to Apple™ Computer’s iTunes U™ to the Universal Library to individual colleges and universities provide freely available educational materials on the web. The OLI is part of this movement in that all OLI courses are freely available to individual learners anywhere in the world. The OER movement is heterogeneous and self-organizing. Increasingly, however, through the help of many individuals, institutions, and foundations such as the William and Flora Hewlett Foundation (which funds the OLI) and the A.W. Mellon Foundation, delivering open educational resources has become part of the strategic thinking of the world’s educational leaders as they consider how to meet the demands for access and quality we will face this century.

BACKGROUND

Since the advent of the computer, there have been many efforts to use computer technologies to provide direct instruction to students. The lessons delivered by the PLATO system on mainframe computers are an early, and enduring, case of using computing to provide instruction. (Sherwood, 1975) The emergence of powerful personal computers created the opportunity for colleges and universities, commercial vendors, and individual instructors to develop what we now call eLearning tools. Anyone who has long worked in this field knows that most of these tools were developed when a single faculty member with some interest in computing had an idea about how to use technology to help students understand particularly challenging topics in his or her discipline. During the last third of the 20th century, very few of these faculty, and surprisingly few of the university and vendor efforts, turned to the growing body of research in what came to be known as “cognitive science” to inform their designs. Moreover, little or no effort was made to engage in either formative or summative evaluation of most eLearning interventions. This is hardly surprising, since resources for such evaluation were seldom available. One of the hypotheses that have driven the Open Learning Initiative is that the failure of eLearning to produce a transformational change in education is, in large part, because the efforts have not been based on research results from the learning sciences and have not been meaningfully evaluated in ways that would produce continuous improvements in the interventions. (Twigg, 2001)

Although the mainstream of eLearning work over the last 40 years has not been scientifically based or rigorously evaluated, there have been exceptions. Work on intelligent tutoring systems by a number of groups (Anderson, et al., 1989; Gertner, et al., 2000) has been based on making the computer play the role of an individual tutor for novice learners. That, in turn, required the inventors of these computer-based tutors to develop a keen understanding of how novices to the subject matter learned the various concepts and skills involved. Knowledge of these learning processes came from research in human learning, i.e. in cognitive science broadly construed. (For an example of this kind of research, see: Chi, M.T.H., et al., 1981)

Of particular importance to the development of OLI methodologies is the work of John Anderson and his colleagues at Carnegie Mellon, which focused on understanding human learning. Their studies and theories of human cognition, including distinctions between the ways novices and experts solve problems, became the basis for designing online learning environments that individualize feedback for students learning subjects such as computer programming and algebra. One form of these tutors is the Cognitive Tutor developed at Carnegie Mellon. (Anderson, et al., 1995) The Cognitive Tutors were heavily evaluated and continuously improved, their success as an eLearning intervention is well documented, and work on improving the approach continues. The general lesson of this work in intelligent tutoring is not that Cognitive Tutors are the only way to use ICT to improve education. Rather, it is that results from research on human learning, what we call “the learning sciences,” must guide the design of technology-based educational interventions if ICT-based learning is finally to have its long-hoped-for transformational impact on education. Perhaps the best single source for understanding how many in the field have come to that conclusion is the U.S. National Research Council report titled “How People Learn.” (Bransford, et al., 2000)

SCIENTIFICALLY DESIGNED ELEARNING: THE OPEN LEARNING INITIATIVE

The Open Learning Initiative (http://www.cmu.edu/oli) delivers scientifically designed, formatively and summatively evaluated, and iteratively improved eLearning. In these respects, it is a model of the kind of eLearning this article is about. One product of the OLI work is a portfolio of online courses in a variety of subject areas, each designed to give a learner who is new to the subject everything he or she needs to master the material presented in that course. Subject areas currently covered include statistics, engineering statics, chemistry, French, biology, economics, and formal logic, and the portfolio will be extended to other subject areas. Unlike most OpenCourseWare materials, the courses are not simply collections of materials created by individual faculty to support traditional instruction. The OLI creates online courses that fully enact instruction for a given topic, so that a novice learner can acquire the ideas and skills from the OLI course alone. That is, our goal is to create complete online courses from which learners can learn even without the benefit of an instructor or a class. In practice, instructors often use the OLI courses in a “blended mode,” i.e. in combination with classroom instruction. We believe this to be a good use of the OLI courses, but we also believe that our goal of making them fully enact instruction makes them superior materials for use in a blended mode. This is because the underlying design assumption is that the eLearning intervention must by itself be sufficient to support the novice learner in acquiring the idea or skill.

OLI courses are developed by teams of learning scientists, faculty content experts, human-computer interaction experts, and software engineers. A second product of the effort is a growing body of knowledge within the OLI project about how to engineer courses that leverage what learning scientists now know about how humans learn. We have developed specific design principles and assessment methodologies based on a “scientifically informed, iteratively improved” heuristic. Specifically, step one in developing an OLI course is a survey of the literature on teaching and learning in the discipline, to understand what professors and learning scientists see as the standard problems encountered by novice learners in that particular subject area. This study goes beyond literature reviews to include any materials we have from previous iterations of the course taught in traditional modes, e.g. exams, previous evaluations of the effectiveness of pedagogical interventions in courses at Carnegie Mellon or partner schools, and actual classroom observations documenting the problems students are having with the materials.

For example, work on the OLI chemistry course included an analysis of the differences between how chemistry is currently taught and how it is practiced. The team working on that course studied the teaching of chemistry in California high schools, using the state’s standards and the two best-selling textbooks. The study shows that most introductory chemistry courses fail to accomplish an explicit primary objective of the course: showing what most chemists do in their daily work. (Evans, et al., 2006) The OLI Engineering Statics course is informed by years of study of how well standard teaching practices align with the goals of this core course, which is part of almost every engineering major. (Steif, et al., 2005)

Step two in OLI course design, well known to instructional design professionals throughout the world, is to document measurable learning objectives. Defining these objectives is often one of the hardest steps in the process. Nevertheless, it is crucial to designing learning environments that achieve the desired outcomes.

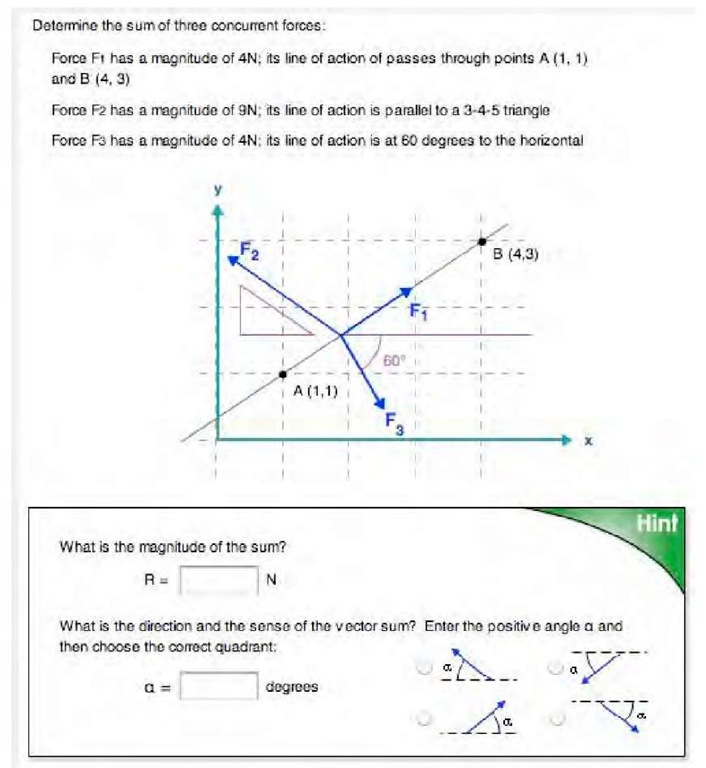

The third step is designing a range of learning support environments (simulations, open virtual labs, scaffolded learning practice, intelligent tutors, etc.) that help students achieve those outcomes. At this point, the strategies vary somewhat with the subject matter, but all are grounded in research outcomes. For example, one clear result of work in cognitive science over the last 20 years is that immediate, targeted feedback makes a substantial difference in learning. (Bloom, B.S., 1984) “Targeted” feedback is feedback that directly addresses the kind of mistake a student makes in performing a task or answering a question, rather than simply telling the student whether the answer was right or wrong. The most sophisticated form of such feedback from a computer is an intelligent tutoring system whose rich, complex cognitive models serve as databases for shaping individualized feedback. Some OLI courses, e.g. the statistics and physics courses, have cognitive tutors to provide that targeted feedback. (Meyer, et al., 2002) We have learned that a more modest version of a cognitive tutor, which we call a “mini-tutor” and which uses a much simpler error-detection model based on the most common mistakes novices make, is also an effective way of providing targeted feedback. An OLI “mini-tutor” interface (this one designed by Dr. Paul Steif of Carnegie Mellon and Anna Dollar of Miami University) looks like this:

If a student can work the problem without asking for a hint, the tutor does not intervene. If the student requires hints, they are given substantial help, but also are frequently asked to work a different problem of the same kind after being helped through the first problem.
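The two behaviors just described, targeted feedback drawn from a small catalogue of common novice mistakes and the hint policy, can be sketched as follows. This is a minimal illustration under stated assumptions, not the OLI mini-tutor’s actual implementation: the class and function names (`MiniTutorProblem`, `next_action`), the numeric-answer format, and the statics example in the usage note are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class MiniTutorProblem:
    """Hypothetical mini-tutor problem with a simple catalogue of common errors."""

    prompt: str
    correct: float
    # Maps an anticipated wrong answer to feedback aimed at that specific mistake.
    common_mistakes: dict[float, str] = field(default_factory=dict)
    tolerance: float = 1e-6

    def check(self, answer: float) -> str:
        """Return targeted feedback rather than a bare right/wrong verdict."""
        if abs(answer - self.correct) <= self.tolerance:
            return "Correct."
        for wrong, message in self.common_mistakes.items():
            if abs(answer - wrong) <= self.tolerance:
                return message
        # An unanticipated error: the simple error model can only say "try again".
        return "Not quite. Re-check each step of your setup."


def next_action(hints_used: int) -> str:
    """Hint policy (assumed encoding): stay silent if no hints were requested;
    otherwise follow the assisted problem with a new problem of the same kind."""
    return "advance" if hints_used == 0 else "assign_similar_problem"
```

For instance, a hypothetical statics item asking for the moment of a 10 N force applied at 30° to a 2 m lever arm has the correct answer 10 N·m, and two predictable wrong answers: 20 (ignoring the angle) and about 17.32 (using cosine instead of sine). Each wrong answer can be mapped to its own message. The design choice is deliberate: unlike a full cognitive model, this catalogue recognizes only the anticipated errors and falls back to generic feedback for everything else.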

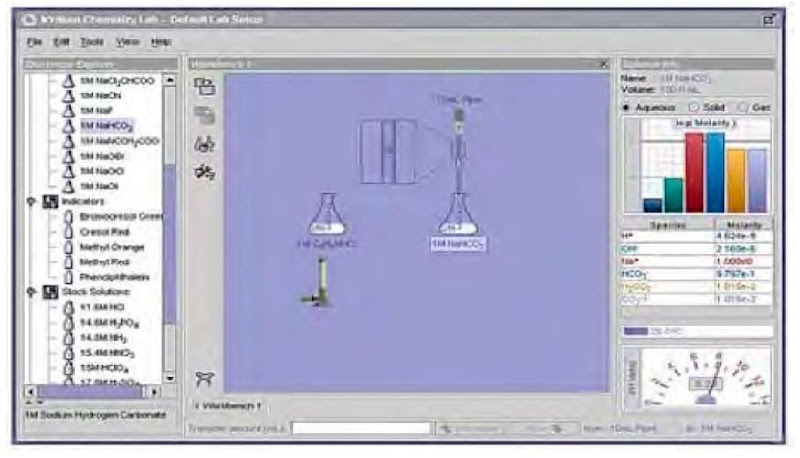

A second clear result from learning sciences research is that authentic problems are more conducive to learning than abstract exercises. (Lehrer, R.; Schauble, L., 2000; McCombs, B. L., 1996) This principle is realized in several ways in the OLI chemistry course. First, problems are drawn from real-world situations, e.g. analyzing well water in Bangladesh, where naturally occurring arsenic poisoning is a serious public health problem. Second, problems are not couched simply as mathematical equations. Rather, students are given a powerful, open-ended online virtual chemistry laboratory and asked to frame and solve the problems the way a working chemist does: in the lab. The interface to the virtual chemistry lab, created by Dr. David Yaron, looks like this:

Figure 1.

This is the authentic environment in which students solve homework problems; it allows the assignments to mimic the way working chemists solve problems.

Critical to the OLI courses’ success is the intensive evaluation done on each course. The most important evaluations are formative, because they provide a feedback loop for improvement. Both the summative and formative evaluations of various OLI courses show definitively that students who take the fully online course do as well as or better than students in traditional courses. (Scheines, 2005) In a Spring 2006 study, we administered an externally developed test of statistical reasoning, the Comprehensive Assessment of Outcomes in a first Statistics course (CAOS), as a pre- and post-test. These data revealed significant learning gains (posttest minus pretest CAOS score) for students in the OLI statistics course. We also examined outcomes on the items that fewer than 50% of students in the national sample answered correctly on the posttest. Students taking the OLI course did significantly better than the national group on those particularly challenging items. (Meyer and Thille, 2006) Indeed, we have learned that the OLI statistics course can be taught in half a normal semester with greater student learning than a traditional classroom version of the course. Using a standard national test of statistics knowledge, preliminary results show that students in a traditional course made significantly smaller gains in statistics knowledge than a randomized sample from the same cohort who took the online course.

Figure 2.
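The outcome measures in the evaluation paragraph above, per-student learning gain (posttest minus pretest) and the subset of items that fewer than 50% of a national sample answered correctly, reduce to simple computations. The sketch below assumes scores and percentages are already available as Python lists and dictionaries. The function names are hypothetical. For comparing two groups’ gains it uses Welch’s t statistic, which is our choice of test for illustration, not necessarily the analysis used in the cited studies. A p-value would additionally require the t distribution, e.g. via `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
from math import sqrt
from statistics import mean, stdev


def learning_gains(pre: list[float], post: list[float]) -> list[float]:
    """Per-student gain: posttest score minus pretest score (paired by position)."""
    if len(pre) != len(post):
        raise ValueError("pre and post scores must be paired by student")
    return [after - before for before, after in zip(pre, post)]


def hard_items(national_pct_correct: dict[str, float], threshold: float = 50.0) -> list[str]:
    """Items that fewer than `threshold` percent of a reference sample answered correctly."""
    return sorted(item for item, pct in national_pct_correct.items() if pct < threshold)


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for the difference in mean gain between two independent groups."""
    var_a, var_b = stdev(a) ** 2, stdev(b) ** 2
    return (mean(a) - mean(b)) / sqrt(var_a / len(a) + var_b / len(b))
```

With illustrative numbers (not study data), `learning_gains([10, 12, 14], [15, 18, 16])` gives `[5, 6, 2]`, and comparing those gains against a second group’s `[1, 2, 3]` yields t = 1.75.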

In addition to learning outcomes studies, we engage in formative evaluation in the form of “think aloud” sessions with students working their way through the courses. These “contextual inquiries” lead to substantial revisions of the simulation, self-evaluation, tutoring, and virtual laboratory environments in each course.

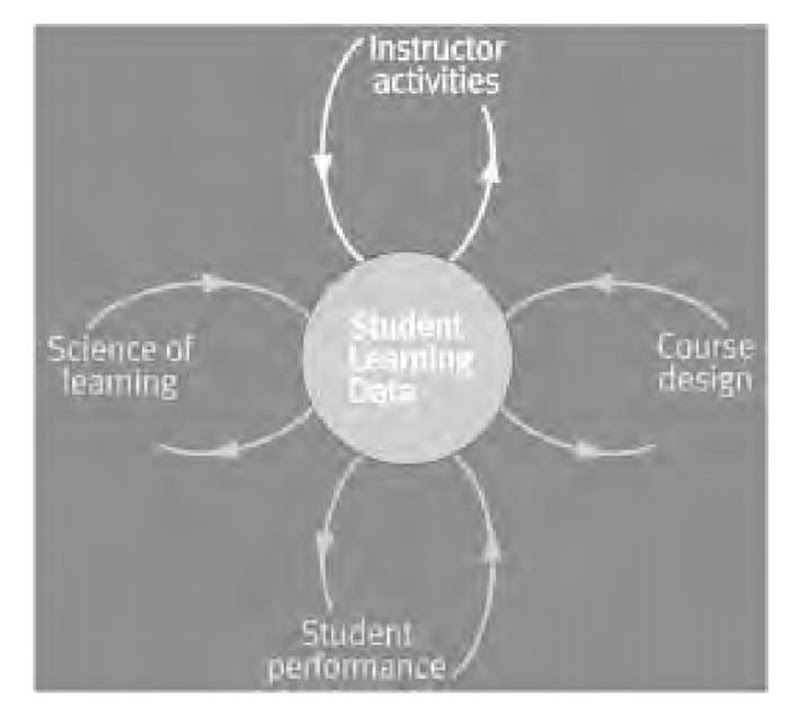

The various assessment tools embedded in the OLI software and methodologies create a rich set of feedback loops that we believe must characterize effective eLearning. Figure 3 represents the multiple feedback loops essential to the OLI:

The term “feedback” is overloaded in the context of scientifically designed and improved eLearning. In general, we use it to mean information derived from student activities that is used to influence or modify student performance, the design of the courses, and the body of understanding of how students learn.

Figure 3.
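To make the overloaded sense of “feedback” concrete, the sketch below routes one log of student attempts into two of the loops named above. Per-student success rates correspond to feedback on student performance, and per-item success rates flag candidate items for course redesign. The log schema (`Attempt`), the function names, and the 50% revision threshold are hypothetical assumptions for illustration, not the OLI’s data model. The third loop, building understanding of how students learn, would draw on the same log but requires study designs beyond this sketch.

```python
from collections import defaultdict
from typing import NamedTuple


class Attempt(NamedTuple):
    """One logged student action (hypothetical schema)."""

    student: str
    item: str
    correct: bool


def fraction_correct(log: list[Attempt], key: str) -> dict[str, float]:
    """Fraction of attempts answered correctly, grouped by 'student' or 'item'."""
    hits: dict[str, int] = defaultdict(int)
    counts: dict[str, int] = defaultdict(int)
    for attempt in log:
        group = getattr(attempt, key)
        hits[group] += attempt.correct  # bool adds as 0 or 1
        counts[group] += 1
    return {group: hits[group] / counts[group] for group in counts}


def items_to_revise(log: list[Attempt], min_success: float = 0.5) -> list[str]:
    """Course-design loop: items most students miss are candidates for redesign."""
    rates = fraction_correct(log, "item")
    return sorted(item for item, rate in rates.items() if rate < min_success)
```

Grouping by `"student"` yields the learner-facing loop, and grouping by `"item"` feeds the designer-facing loop, from the same underlying data.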

FUTURE TRENDS

We believe that scientifically designed and iteratively improved eLearning will become much more prevalent as the demand for demonstrable learning outcomes in education grows. Indeed, we believe that this approach can do much to provide meaningful responses to the demands for assessment of learning outcomes as measures of effective educational practices. In collaboration with the Pittsburgh Science of Learning Center, an NSF center for studying effective learning interventions, the OLI team is now “instrumenting” the OLI delivery environment to provide extensive data about student activities that can be correlated with data on what students learn as a result of engaging in those activities. The idea is to leverage the fact that students are working in a digital learning environment to gather even more data for the feedback loops represented in Figure 3. The outcome is likely to be a much finer-grained understanding of the effectiveness of OLI instruction and of how it must be tailored to meet individual learning styles.
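Correlating logged activity with measured learning, as described above, can be sketched as counting each student’s logged events and computing a Pearson correlation with that student’s learning gain. The `(student_id, event_type)` event format and the function names are hypothetical. A raw event count is only a crude proxy for engagement, and a correlation by itself does not establish that the activity caused the learning. In Python 3.10 and later, `statistics.correlation` computes the same coefficient.

```python
from collections import Counter
from math import sqrt


def activity_counts(events: list[tuple[str, str]]) -> Counter:
    """Count logged events per student; each event is a (student_id, event_type) pair."""
    return Counter(student for student, _ in events)


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / (spread_x * spread_y)


def activity_gain_correlation(events: list[tuple[str, str]], gains: dict[str, float]) -> float:
    """Correlate each student's activity count with his or her learning gain."""
    counts = activity_counts(events)
    students = sorted(gains)  # students with no logged events count as 0
    return pearson_r([counts[s] for s in students], [gains[s] for s in students])
```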

CONCLUSION

Because the path followed to create the OLI courses is a difficult and expensive one, it is not certain that eLearning practitioners will follow it. But if we do not bring scientific understanding and scientific methods applied to human learning to bear in designing, evaluating, and improving eLearning interventions, we are doomed to keep failing to use technology as a transformational tool for education. The good news is that every day brings more research results from the learning sciences that eLearning practitioners can leverage to improve our efforts and make development more effective and efficient. The hope is that projects like the Open Learning Initiative will show that scientific approaches are more likely to yield effective interventions and are, therefore, worth the expense and effort.

KEY TERMS

Authentic Problems: Problems of the kind that practitioners in a discipline actually encounter. These contrast with many of the homework problems found in the “back of the textbook,” which are manufactured and abstracted from the complexities of real-world contexts.

Cognitive Science: The study of human cognition. Its methodologies are wide-ranging, including empirical studies of human learning, studies of artificial intelligence, and, more recently, some results from neuroscience.

Cognitive Tutor: An intelligent tutoring system based on cognitive models consistent with John Anderson’s “ACT-R” theory of human cognition.

eLearning: Instructional techniques that use information and communication technologies as tools to enable and enhance learning.

Formative Assessment: Assessment designed to provide regular, ongoing feedback about an intervention to determine what is successful and what is unsuccessful about it relative to its goals; it is carried out while the intervention is under way.

Intelligent Tutoring System: An artificially intelligent computer-based system for presenting problems to students, tracking their responses, and giving them feedback about their mistakes as they work through the problems.

Learning Sciences: The range of sciences that theorize about and study human learning. The methodologies are more wide ranging than those of cognitive science.

Summative Assessment: Assessment, conducted after an intervention has taken place, of whether the intervention had the desired effect.

Targeted Feedback: Feedback that has content based on specific mistakes made by students who are engaged in an assessment of their learning. It is the kind of feedback a human tutor gives when watching a student solve problems. The feedback is directed to specific errors rather than simply reporting that a student has solved the problem correctly or incorrectly.