Introduction

This is especially true when considering the case of high-definition television, or “HDTV,” as it has developed over the last several decades.

HDTV began simply as an effort to bring significantly higher image quality to the television consumer, through an increase in the “resolution” (line count and effective video bandwidth, at least) of the transmitted imagery. However, as should be apparent from the earlier discussion of the original broadcast TV standards, making a significant increase in the information content of the television signal over these original standards (now referred to as “standard definition television”, or “SDTV”) is not an easy task. Television channels, as used by any of the standard systems, do not have much in the way of readily apparent additional capacity available. Further, constraints imposed by the desired broadcast characteristics (range, power required for the transmission, etc.) and the desired cost of consumer receivers limit the range of practical choices for the broadcast television spectrum. And, of course, the existing channels were already allocated – and in some markets already filled to capacity. In the absence of any way to generate brand-new broadcast spectrum, the task of those designing the first HDTV systems seemed daunting indeed.

In a sense, new broadcast spectrum was being created, in the form of cable television systems and television broadcast via satellite. Initially, both of these new distribution models used essentially the same analog video standards as had been used by conventional over-the-air broadcasting. Satellite distribution, however, was not initially intended for direct reception by the home viewer; it was primarily for network feeds to local broadcast outlets, but individuals (and manufacturers of consumer equipment) soon discovered that these signals were relatively easy to receive. The equipment required was fairly expensive, and the receiving antenna (generally, a parabolic “dish” type) inconveniently large, but neither was outside the high end of the consumer television market. Moving satellite television into the mainstream of this market, however, required the development of the “direct broadcast by satellite”, or DBS, model. DBS, as exemplified in North America by such services as Dish Network or DirecTV, and in Europe by BSkyB, required more powerful, purpose-built satellites, so as to enable reception by small antennas and inexpensive receivers suited to the consumer market. But DBS also required a further development that would eventually have an enormous impact on all forms of television distribution – the use of digital encoding and processing techniques. More than any other single factor, the success of DBS in the consumer TV market changed “digital television” from being a means for professionals to achieve effects and store video in the studio, to displacing the earlier analog broadcast standards across the entire industry. And the introduction of digital technology also caused a rapid and significant change in the development of HDTV. No longer simply a higher-definition version of the existing systems, “HDTV” efforts transformed into the development of what is now more correctly referred to as “Digital Advanced Television” (DATV). It is in this form that a true revolution in television is now coming into being, one that will provide far more than just more pixels on the screen.

A Brief History of HDTV Development

Practical high-definition television was first introduced in Japan, through the efforts of the NHK (the state-owned Japan Broadcasting Corporation). Following a lengthy development begun in the early 1970s, NHK began its first satellite broadcasts using an analog HDTV system in 1989. Known to the Japanese public as “Hi-Vision”, the NHK system is also commonly referred to within the TV industry as “MUSE,” which properly refers to the encoding method (Multiple Sub-Nyquist Sampling Encoding) employed. The Hi-Vision/MUSE system is based on a raster definition of 1125 total lines per frame (1035 of which are active), 2:1 interlaced with a 60.00 Hz field rate. If actually transmitted in its basic form, such a format would require a bandwidth well in excess of 20 MHz for the luminance components alone. However, the MUSE encoding used a fairly sophisticated combination of bandlimiting, subsampling of the analog signal, and “folding” of the signal spectrum (employing sampling/modulation to shift portions of the complete signal spectrum to other bands within the channel, similar to the methods used in NTSC and PAL to interleave the color and luminance information), resulting in a final transmitted bandwidth of slightly more than 8 MHz. The signal was transmitted from the broadcast satellite using conventional FM.

While technically an analog system, the MUSE broadcasting system also required the use of a significant amount of digital processing and some digital data transmission. Up to four channels of digital audio could be transmitted during the vertical blanking interval, and digitally calculated motion vector information was provided to the receiver. This permitted the receiver to compensate for blur introduced due to the fact that moving portions of the image are transmitted at a lower bandwidth than the stationary portions. (This technique was employed for additional bandwidth reduction, under the assumption that detail in a moving object is less visible, and therefore less important to the viewer, than detail that is stationary within the image.) An image format of 1440 x 1035 pixels was assumed for the MUSE HDTV signal.

While this did represent the first HDTV system actually deployed on a large scale, it also experienced many of the difficulties that hinder the acceptance of current HDTV standards. Both the studio equipment and home receivers were expensive – early Hi-Vision receivers in Japan cost in excess of ¥4.5 million (approx. US$35,000 at the time) – but really gave only one benefit: a higher-definition, wider-aspect-ratio image. Both consumers and broadcasters saw little reason to make the required investment until and unless more and clearer benefits to a new system became available. So while HDTV broadcasting did grow in Japan – under the auspices of the government-owned network – MUSE or Hi-Vision cannot be viewed as a true commercial success. The Japanese government eventually abandoned its plans for long-term support of this system, and instead announced in the 1990s that Japan would transition to the digital HDTV system being developed in the US.

In the meantime, beginning in the early 1980s, efforts were begun in both Europe and North America to develop successors to the existing television broadcast systems. This involved both HDTV and DBS development, although initially as quite separate things. Both markets had already seen the introduction of alternatives to conventional, over-the-air broadcasting, in the form of cable television systems and satellite receivers, as noted above. Additional “digital” services were also being introduced, such as the teletext systems that became popular primarily in Europe. But all of these alternatives or additions remained strongly tied to the analog transmission standards of conventional television. And, again due to the lack of perceived benefit vs. required investment for HDTV, there was initially very little enthusiasm from the broadcast community to support development of a new standard.

This began to change around the middle of the decade, as TV broadcasters perceived a growing threat from cable services and pre-recorded videotapes. As these were not constrained by the limitations of over-the-air broadcast, it was feared that they could offer the consumer significant improvements in picture quality and thereby divert additional market share from broadcasting. In the US, the Federal Communications Commission (FCC) was petitioned to initiate an effort to establish a broadcast HDTV standard, and in 1987 the FCC’s Advisory Committee on Advanced Television Service (ACATS) was formed. In Europe, a similar effort was initiated in 1986 through the establishment of a program administered jointly by several governments and private companies (the “Eureka-95” project, which began with the stated goal of introducing HDTV to the European television consumer by 1992). Both received numerous proposals, which originally were either fully analog systems or analog/digital hybrids. Many of these involved some form of “augmentation” of the existing broadcast standards (the “NTSC” and “PAL” systems), or at least were compatible with them to a degree which would have permitted the continued support of these standards indefinitely.

However, in 1990 this situation changed dramatically. Digital video techniques, developed for computer (CD-ROMs, especially) and DBS use, had advanced to the point where an all-digital HDTV system was clearly possible, and in June of that year just such a system was proposed to the FCC and ACATS. General Instrument Corp. presented the all-digital “DigiCipher” system, which (as initially proposed) was capable of sending a 1408 x 960 image, using a 2:1 interlaced scanning format with a 60 Hz field rate, over a standard 6 MHz television channel. The advantages of an all-digital approach, particularly in enabling the level of compression which would permit transmission in a standard channel, were readily apparent, and very quickly the remaining proponents of analog systems either changed their proposals to an all-digital type or dropped out of consideration.

Table 12-1 The final four US digital HDTV proposals (see note a).

| Proponent | Name of proposal | Image/scan format | Additional comments |
| --- | --- | --- | --- |
| Zenith/AT&T | “Digital Spectrum Compatible” | 1280 x 720, progressive scan | 59.94 Hz frame rate; 4-VSB modulation |
| MIT/General Instruments | ATVA | 1280 x 720, progressive scan | 59.94 Hz frame rate; 16-QAM modulation |
| ATRC (see note b) | Advanced Digital Television | 1440 x 960, 2:1 interlaced | 59.94 Hz field rate; SS-QAM modulation |
| General Instruments | “DigiCipher” | 1408 x 960, 2:1 interlaced | 59.94 Hz field rate; 16-QAM modulation |

a The proponents of these formed the “Grand Alliance” in 1993, which effectively merged these proposals and other input into the final US digital television proposal.

b “Advanced Television Research Consortium”; members included Thomson, Philips, NBC, and the David Sarnoff Research Center.

By early 1992, the field of contenders in the US was down to just four proponent organizations (listed in Table 12-1), each with an all-digital proposal, and a version of the NHK MUSE system. One year later, the NHK system had also been eliminated from consideration, but a special subcommittee of the ACATS was unable to determine a clear winner from among the remaining four. Resubmission and retesting of the candidate systems was proposed, but the chairman of the ACATS also encouraged the four proponent organizations to attempt to come together to develop a single joint proposal. This was achieved in May, 1993, with the announcement of the formation of the so-called “Grand Alliance,” made up of member companies from the original four proponent groups (AT&T, the David Sarnoff Research Center, General Instrument Corp., MIT, North American Philips, Thomson Consumer Electronics, and Zenith). This alliance finally produced what was to become the US HDTV standard (adopted in 1996), and which today is generally referred to as the “ATSC” (Advanced Television Systems Committee, the descendent of the original NTSC group) system.

Before examining the details of this system, we should review progress in Europe over this same period. The Eureka-95 project mentioned above can in many ways be seen as the European “Grand Alliance,” as it represented a cooperative effort of many government and industry entities, but it was not as successful as its American counterpart. Europe had already developed an enhanced television system for direct satellite broadcast, in the form of a Multiplexed Analog Components (MAC) standard, and the Eureka program was determined to build on this with the development of a compatible, high-definition version (HD-MAC). A 1250-line, 2:1 interlaced, 50 Hz field rate production standard was developed for HD-MAC, with the resulting signal again fit into a standard DBS channel through techniques similar to those employed by MUSE. However, the basic MAC DBS system itself never became well established in Europe, as the vast majority of DBS systems instead used the conventional PAL system. The HD-MAC system was placed in further jeopardy by the introduction of an enhanced, widescreen augmentation of the existing PAL system. By early 1993, Thomson and Philips – two leading European manufacturers of both consumer television receivers and studio equipment – announced that they were discontinuing efforts in HD-MAC receivers, in favor of widescreen PAL. Both Eureka-95 and HD-MAC were dead.

As in the US, European efforts would now focus on the development of all-digital HDTV systems. In 1993, a new consortium, the Digital Video Broadcasting (DVB) Project, was formed to produce standards for both standard-definition and high-definition digital television transmission in Europe. The DVB effort has been very successful, and has generated numerous standards for broadcast, cable, and satellite transmission of digital television, supporting formats that span a range from somewhat below the resolution of the existing European analog systems, to high-definition video comparable to the US system. However, while there is significant similarity between the two, there are again sufficient differences so as to make the American and European standards incompatible at present. As discussed later, the most significant differences lie in the definitions of the broadcast encoding and modulation schemes used, and there remains some hope that these eventually will be harmonized. A worldwide digital television standard, while yet to be certain, remains the goal of many.

During the development of all of the digital standards, interest in these efforts from other industries – and especially the personal computer and film industries – was growing rapidly. Once it became clear that the future of broadcast television was digital, the computer industry saw a clear interest in this work. Computer graphics systems, already an important part of television and film production, would clearly continue to grow in these applications, and personal computers in general would also become new outlets for entertainment video. Many predicted the convergence of the television receiver and home computer markets, resulting in combination “digital appliances” which would handle the tasks of both. The film industry also had obvious interests and concerns relating to the development of HDTV. A large-screen, wide-screen, and high-definition system for the home viewer could potentially impact the market for traditional cinematic presentation. On the other hand, if its standards were sufficiently capable, a digital video production system could potentially augment or even replace traditional film production. Interested parties from both became vocal participants in the HDTV standards arena, although often pulling the effort in different directions.

HDTV Formats and Rates

One of the major areas of contention in the development of digital and high-definition television standards was the selection of the standard image formats and frame/field rates.

The only common goal of HDTV efforts in general was the delivery of a “higher resolution” image – the transmitted video would have to provide more scan lines, with a greater amount of detail in each, than was available with existing standards. In “digital” terms, more pixels were needed. But exactly how much of an increase was needed to make HDTV worthwhile, and how much could practically be achieved?

Using the existing broadcast standards – at roughly 500-600 scan lines per frame – as a starting point, the goal that most seemed to aim for was a doubling of this count. “HDTV” was assumed to refer to a system that would provide about 1000-1200 lines, and comparable resolution along the horizontal axis. However, many argued that this overlooked a simple fact of life for the existing standards: that they were not actually capable of delivering the assumed 500 or so scan lines worth of vertical resolution. It was therefore argued that an “HD” system could provide the intended “doubling of resolution” by employing a progressive-scan format of between 700 and 800 scan lines per frame. Such formats would also benefit from the other advantages of a progressive system over interlacing, such as the absence of line “twitter” and no need to provide for the proper interleaving of the fields. So two distinct classes of HDTV proposals became apparent – those using 2:1 interlaced formats of between roughly 1000 and 1200 active lines, and progressive-scan systems of about 750 active lines.

Further complicating the format definition problem were requirements, from various sources, for the line rate, frame or field rates, and/or pixel sampling rates to be compatible with existing standards. The computer industry also weighed in with the requirement that any digital HD formats selected as standards employ “square pixels”, so that they would be better suited to manipulation by computer-graphics systems. Besides the image format, the desired frame rate for the standard system was the other major point of concern. The computer industry had by this time already moved to display rates of 75 Hz and higher, to meet ergonomic requirements for “flicker-free” displays, and wanted an HDTV rate that would also meet these. The film industry had long before standardized on frame rates of 24 fps (in North America) and 25 fps (in Europe), and this also argued for a frame or field rate of 72 or 75 Hz for HDTV. Film producers were also concerned about the choice of image formats – HDTV was by this time assumed to be “widescreen,” but using a 16:9 aspect ratio that did not match any widescreen film format. Alternatives up to 2:1 were proposed.

But the television industry also had legacies of its own: the 50 Hz field rate common to European standards, and the 60 (and then 59.94+) Hz rate of the North American system. The huge amount of existing source material using these standards, and the expectation that broadcasting under the existing systems would continue for some time following the introduction of HDTV, argued for HD’s timing standards to be strongly tied to those of standard broadcast television. Discussions of these topics, throughout the late 1980s and early 1990s, often became quite heated arguments.

In the end, the approved standards in both the US and Europe represent a compromise between these many factors. All use – primarily – square-pixel formats. And, while addressing the desire for progressive-scan by many, the standards also permit the use of some interlaced formats, both as a matter of providing for compatibility with existing source material at the low end, and as a means of permitting greater pixel counts at the high. The recognized transmission formats and frame rates of the major HDTV standards in the world as of this writing are listed in Table 12-2. (It should be noted that, technically, there are no format and rate standards described in the official US HDTV broadcast specifications. The information shown in this table represents the last agreed-to set of format and rate standards proposed by the Grand Alliance and the ATSC. In a last-minute compromise, due to objections raised by certain computer-industry interests, this table was dropped from the proposed US rules. It is, however, still expected to be followed by US broadcasters for their HDTV systems.)

Note that both systems include support for “standard definition” programming. In the DVB specifications, the 720 x 576 format is a common “square-pixel” representation of 625/50 PAL/SECAM transmissions. The US standard supports two “SDTV” formats: 640 x 480, which is a standard “computer” format and one that represents a “square-pixel” version of 525/60 video, and 720 x 480. The latter format, while not using “square” pixels, is the standard for 525/60 DVD recordings, and may be used for either 4:3 (standard aspect ratio) or 16:9 “widescreen” material. It should also be noted that, while the formats shown in the table are those currently expected to be used under these systems, there is nothing fundamental to either system that would prevent the use of the other’s formats and rates. Both systems employ a packetized data-transmission scheme and use very similar compression methods. (Again, the biggest technical difference is in the modulation system used for terrestrial broadcast.) There is still some hope for reconciliation of the two into a single worldwide HDTV broadcast standard.
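The distinction between “square” and non-square pixel formats drawn above comes down to simple arithmetic, sketched here in Python. The function name `pixel_aspect_ratio` is an illustrative choice, and the sketch assumes that the full stored width of a format maps onto the full width of the displayed picture, which is a simplification for formats derived from sampled analog video.

```python
from fractions import Fraction


def pixel_aspect_ratio(width, height, display_aspect):
    """Return the width:height ratio of a single pixel.

    Assumes the whole width x height array fills a display whose
    picture aspect ratio is display_aspect (e.g. Fraction(16, 9)).
    A result of exactly 1 means "square" pixels.
    """
    return Fraction(display_aspect) / Fraction(width, height)


WIDE = Fraction(16, 9)
STANDARD = Fraction(4, 3)

# Formats taken from the discussion above and from Table 12-2.
for width, height, aspect in [
    (640, 480, STANDARD),
    (720, 480, STANDARD),
    (720, 480, WIDE),
    (1280, 720, WIDE),
    (1920, 1080, WIDE),
    (1920, 1152, WIDE),
    (2048, 1152, WIDE),
]:
    ratio = pixel_aspect_ratio(width, height, aspect)
    label = "square" if ratio == 1 else "non-square"
    print(f"{width} x {height} at {aspect}: pixel aspect {ratio} ({label})")
```

Under this assumption the 640 x 480 (4:3), 1280 x 720, 1920 x 1080 and 2048 x 1152 (16:9) formats come out square, while 720 x 480 is non-square at either display aspect ratio.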

Table 12-2 Common “HDTV” broadcast standard formats.

| Standard | Image format (H x V) | Rates/scan format | Comments |
| --- | --- | --- | --- |
| US “ATSC” HDTV broadcast standard | 640 x 480 | 60/59.94 Hz; 2:1 interlaced | “Standard definition” TV. Displayed as 4:3 only |
| US “ATSC” HDTV broadcast standard | 720 x 480 | 60/59.94 Hz; 2:1 interlaced | SDTV; std. DVD format. Displayed as 4:3 or 16:9 |
| US “ATSC” HDTV broadcast standard | 1280 x 720 | 24/30/60 Hz (see note a); progressive | Square-pixel 16:9 format |
| US “ATSC” HDTV broadcast standard | 1920 x 1080 | 24/30/60 Hz (see note a); progressive and 2:1 interlaced | Square-pixel 16:9 format. 2:1 interlaced at 59.94/60 Hz only |
| DVB (as used in existing 625/50 markets) | 720 x 576 | See note b | SDTV; std. DVD format for 625/50 systems |
| DVB (as used in existing 625/50 markets) | 1440 x 1152 | See note b | 2x SDTV format; non-square pixels |
| DVB (as used in existing 625/50 markets) | 1920 x 1152 | See note b | 1152-line version of common 1920 x 1080 format; non-square at 16:9 |
| DVB (as used in existing 625/50 markets) | 2048 x 1152 | See note b | Square-pixel 16:9 1152-line format |
| Japan/NHK “MUSE” | 1440 x 1035 (effective) | 59.94 Hz; 2:1 interlaced | Basically an analog system; will be made obsolete by adoption of an all-digital standard |

a The ATSC proposal originally permitted transmission of these formats at 24.00, 30.00 and 60.00 frames or fields per second, as well as at the so-called “NTSC-compatible” (N/1.001) versions of these.

b In regions currently using analog systems based on the 625/50 format, DVB transmissions would likely use 25 or 50 frames or fields per second, either progressive-scan or 2:1 interlaced. However, the DVB standards are not strongly tied to a particular rate or scan format, and could, for example, readily be used at the “NTSC” 59.94+ Hz field rate.

Digital Video Sampling Standards

“Digital television” generally does not refer to a system that is completely digital, from image source to output at the display. Cameras remain, to a large degree, analog devices (even the CCD image sensors used in many video cameras are, despite being fixed-format, fundamentally analog in operation), and of course existing analog source material (such as video tape) is very often used as the input to “digital” television systems. To a large degree, then, digital television standards have been shaped by the specifications required for sampling analog video, the first step in the process of conversion to digital form.

Three parameters are commonly given to describe this aspect of digital video – the number and nature of the samples. First, the sampling clock selection is fundamental to any video digitization system. In digital video standards based on the sampling of analog signals, this clock generally must be related to the basic timing parameters of the original analog standard. This is needed both to keep the sampling grid synchronized with the analog video (such that the samples occur in repeatable locations within each frame), and so that the sampling clock itself may be easily generated from the analog timebase. With the clock selected, the resulting image format in pixels is set – the number of samples per line derives from the active line period divided by the sample period, and the number of active lines is presumably already fixed within the existing analog standard.

Sampling structure

Both of these will be familiar to those coming from a computer-graphics background, but the third parameter is often the source of some confusion. The sampling structure of a digital video system is generally given via a set of three numbers; these describe the relationship between the sampling clocks used for the various components of the video signal. As in analog television, many digital TV standards recognize that the information relating to color only does not need to be provided at the same bandwidth as the luminance channel, and therefore these “chroma” components will be subsampled relative to the luminance signal. An example of a sampling structure, stated in the conventional manner, is “YCrCb, 4:2:2”, indicating that the color-difference signals “Cr” and “Cb” are each being sampled at one-half the rate of the luminance signal Y. (The reason for this being “4:2:2” and not “2:1:1” will become clear in a moment.) Note that “Cr” and “Cb” are commonly used to refer to the color-difference signals in digital video practice, as in “YCrCb” rather than the “YUV” label common in analog video.

Selection of sampling rate

Per the Nyquist sampling theorem, any analog signal may be sampled and the original information fully recovered only if the sampling rate exceeds a lower limit of twice the bandwidth of the original signal. (Note that the requirement is based on the bandwidth, and not the upper frequency limit of the original signal in the absolute sense.) If we were to sample, say, the luminance signal of a standard NTSC transmission (with a bandwidth restricted to 4.2 MHz), the minimum sampling rate would be 8.4 MHz. Or we might simply require that we properly sample the signal within the standard North American 6 MHz television channel, which then gives a lower limit on the sampling rate of 12 MHz. However, as noted above, it is also highly desirable that the selected sampling rate be related to the basic timing parameters of the analog system. This will result in a stable number and location of samples per line, etc.

A convenient reference frequency in the color television standards, and one that is already related to (and synchronous with) the line rate, is the color subcarrier frequency (commonly 3.579545+ MHz for the “NTSC” system, or 4.433618+ MHz for PAL). These rates were commonly used as the basis for the sampling clock rate in the original digital television standards. To meet the requirements of the Nyquist theorem, a standard sampling rate of four times the color subcarrier was used, and so these are generally referred to as the “4fsc” standards. The basic parameters for such systems for both “NTSC” (525 lines/frame at a 59.94 Hz field rate) and “PAL” (625/50) transmissions are given in Table 12-3. Note that these are intended to be used for sampling the composite analog video signal, rather than the separate components, and so no “sampling structure” information, per the above discussion, is given.
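As a check on these figures, the short Python sketch below derives the sampling rates at four times the color subcarrier and the resulting number of samples per line, and tests the NTSC rate against the Nyquist limits discussed above. The exact rational values used for the subcarriers and line rates (for example, 315/88 MHz for the NTSC subcarrier) are the commonly quoted definitions rather than values taken from this text, and the function names are illustrative.

```python
from fractions import Fraction

# Assumed exact definitions (not stated in the text itself).
NTSC_LINE_RATE_HZ = Fraction(4_500_000, 286)   # 15,734.26+ Hz
PAL_LINE_RATE_HZ = Fraction(15_625)
NTSC_FSC_HZ = Fraction(315_000_000, 88)        # 3.579545+ MHz
PAL_FSC_HZ = Fraction(443_361_875, 100)        # 4.43361875 MHz


def four_fsc(fsc_hz):
    """Sampling rate at four times the color subcarrier."""
    return 4 * fsc_hz


def samples_per_line(sample_rate_hz, line_rate_hz):
    """Total samples in one line period (active plus blanking)."""
    return sample_rate_hz / line_rate_hz


def exceeds_nyquist_limit(sample_rate_hz, bandwidth_hz):
    """True if the rate exceeds twice the signal bandwidth."""
    return sample_rate_hz > 2 * bandwidth_hz


ntsc_rate = four_fsc(NTSC_FSC_HZ)
pal_rate = four_fsc(PAL_FSC_HZ)

print(f"NTSC: {float(ntsc_rate) / 1e6:.6f} MHz, "
      f"{float(samples_per_line(ntsc_rate, NTSC_LINE_RATE_HZ)):.4f} samples/line")
print(f"PAL:  {float(pal_rate) / 1e6:.6f} MHz, "
      f"{float(samples_per_line(pal_rate, PAL_LINE_RATE_HZ)):.4f} samples/line")
print("NTSC rate exceeds limit for 4.2 MHz luminance:",
      exceeds_nyquist_limit(ntsc_rate, 4_200_000))
print("NTSC rate exceeds limit for 6 MHz channel:",
      exceeds_nyquist_limit(ntsc_rate, 6_000_000))
```

With these definitions the NTSC case works out to exactly 910 samples per line. The PAL case comes out fractionally above 1135, because the PAL subcarrier does not divide evenly by the line rate.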

Table 12-3 4fsc sampling standard rates and the resulting digital image formats.

| Parameter | 525/60 “NTSC” video | 625/50 |
| --- | --- | --- |
| Color subcarrier (typ.; MHz) | 3.579545 | 4.433619 |
| 4 times color subcarrier (sample rate; MHz) | 14.318182 | 17.734475 |
| No. of samples per line (total) | 910 | 1135 |
| No. of samples per line (active) | 768 | 948 |
| No. of lines per frame (active) | 485 | 575 |

The CCIR-601 standard

The 4fsc standards suffer from being incompatible between NTSC and PAL versions. Efforts to develop a sampling specification that would be usable with both systems resulted in CCIR Recommendation 601, “Encoding Parameters for Digital Television for Studios.” CCIR-601 is a component digital video standard which, among other things, establishes a single common sampling rate usable with all common analog television systems. It is based on the lowest common multiple of the line rates for the 525/60 and 625/50 common timings. This is 2.25 MHz, which is 143 times the standard 525/60 line rate (15,734.26+ Hz), and 144 times the standard 625/50 rate (15,625 Hz). The minimum acceptable luminance sampling rate based on this least common multiple is 13.5 MHz (six times the 2.25 MHz LCM), and so this was selected as the common sampling rate under CCIR-601. (Note that as this is a component system, the minimum acceptable sampling rate is set by the luminance signal bandwidth, not the full channel width.) Acceptable sampling of the color-difference signals could be achieved at one-fourth this rate, or 3.375 MHz (1.5 times the LCM of the line rates). The 3.375 MHz sampling rate was therefore established as the actual reference frequency for CCIR-601 sampling, and the sampling structure descriptions are based on that rate. Common sampling structures used with this rate include:

• 4:1:1 sampling. The luminance signal is sampled at 13.5 MHz (four times the 3.375 MHz reference), while the color-difference signals are sampled at the reference rate.

• 4:2:2 sampling. The luminance signal is sampled at 13.5 MHz (four times the 3.375 MHz reference), but the color-difference signals are sampled at twice the reference rate (6.75 MHz). This is the most common structure for studio use, as it provides greater bandwidth for the color-difference signals. The use of 4:1:1 is generally limited to low-end applications for this reason.

• 4:4:4 sampling. All signals are sampled at the 13.5 MHz rate. This provides for equal bandwidth for all, and so may also be used for the base RGB signal set (which is assumed to require equal bandwidth for all three signals). It is also used in YCrCb applications for the highest possible quality.
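The arithmetic behind these structures can be sketched in a few lines of Python. The sketch confirms that 2.25 MHz is a common multiple of both line rates, maps each three-number structure onto per-component sampling rates via the 3.375 MHz reference, and computes the total samples per line that follow from a given rate. The exact 525/60 line rate of 4.5 MHz/286 is an assumption (the commonly quoted definition), and the function names are illustrative. The mapping applies only to structures in which each number is a multiple of the reference rate, as in the list above.

```python
from fractions import Fraction

LINE_RATE_525_HZ = Fraction(4_500_000, 286)  # assumed exact value, 15,734.26+ Hz
LINE_RATE_625_HZ = Fraction(15_625)
REFERENCE_HZ = Fraction(3_375_000)           # CCIR-601 reference frequency


def component_rates_hz(structure):
    """Map a structure such as "4:2:2" to sampling rates for Y, Cr and Cb."""
    y, cr, cb = (int(part) for part in structure.split(":"))
    return {"Y": y * REFERENCE_HZ, "Cr": cr * REFERENCE_HZ, "Cb": cb * REFERENCE_HZ}


def samples_per_line(sample_rate_hz, line_rate_hz):
    """Total samples in one line period (active plus blanking)."""
    return sample_rate_hz / line_rate_hz


# 2.25 MHz is 143 times one line rate and 144 times the other.
print(143 * LINE_RATE_525_HZ, 144 * LINE_RATE_625_HZ)

for structure in ("4:1:1", "4:2:2", "4:4:4"):
    rates = component_rates_hz(structure)
    summary = ", ".join(f"{name} {float(rate) / 1e6:g} MHz" for name, rate in rates.items())
    print(f"{structure}: {summary}")

for label, line_rate in (("525/60", LINE_RATE_525_HZ), ("625/50", LINE_RATE_625_HZ)):
    rates = component_rates_hz("4:2:2")
    print(label,
          "Y samples/line:", samples_per_line(rates["Y"], line_rate),
          "Cr samples/line:", samples_per_line(rates["Cr"], line_rate))
```

Because 13.5 MHz is a whole multiple of both line rates, the number of samples per line is an integer in each system, which is what keeps the sampling grid fixed from line to line.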

The resulting parameters for CCIR-601 4:2:2 sampling for both the “NTSC” (525/60 scanning) and “PAL” (625/50 scanning) systems are given in Table 12-4.

Table 12-4 CCIR-601 sampling for the 525/60 and 625/50 systems and the resulting digital image formats.

| Parameter | 525/60 “NTSC” video | 625/50 |
| --- | --- | --- |
| Sample rate (luminance channel) | 13.5 MHz | 13.5 MHz |
| No. of Y samples per line (total) | 858 | 864 |
| No. of Y samples per line (active) | 720 | 720 |
| No. of lines per frame (active) | 480 | 576 |
| Chrominance sampling rate (for 4:2:2) | 6.75 MHz | 6.75 MHz |
| Samples per line, Cr and Cb (total) | 429 | 432 |
| Samples per line, Cr and Cb (active) | 360 | 360 |

4:2:0 Sampling

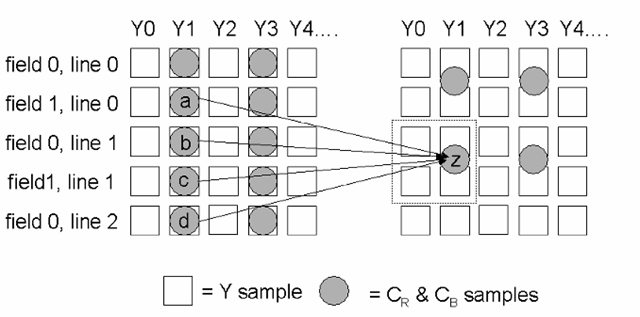

It should be noted that another sampling structure, referred to as “4:2:0,” is also in common use, although it does not strictly fit into the nomenclature of the above. Many digital video standards, and especially those using the MPEG-2 compression technique (which will be covered in the following section) recognize that the same reasoning that applies to subsampling the color components in a given line – that the viewer will not see the results of limiting the chroma bandwidth – can also be applied in the vertical direction. “4:2:0” sampling refers to an encoding in which the color-difference signals are subsampled as in 4:2:2 (i.e., sampled at half the rate of the luminance signal), but then also averaged over multiple lines. The end result is to provide a single sample of each of the color signals for every four samples of luminance, but with those four samples representing a 2 x 2 array (Figure 12-1) rather than four successive samples in the same line (as in 4:1:1 sampling). This is most often achieved by beginning with a 4:2:2 sampling structure, and averaging the color-difference samples over multiple lines as shown. (Note that in this example, the original video is assumed to be 2:1 interlace scanned – but the averaging is still performed over lines which are adjacent in the complete frame, meaning that the operation must span two successive fields.)

Figure 12-1 4:2:2 and 4:2:0 sampling structures. In 4:2:2 sampling, the color-difference signals Cr and Cb are sampled at half the rate of the luminance signal Y. So-called “4:2:0” sampling also reduces the effective bandwidth of the color-difference signals in the vertical direction, by creating a single set of Cr and Cb samples for each 2 x 2 block of Y samples. Each is derived by averaging data from four adjacent lines in the complete 4:2:2 structure (merging the two fields), as shown; the value of sample z in the 4:2:0 structure on the right is equal to (a + 3b + 3c + d)/8. The color-difference samples are normally considered as being located with the odd luminance sample points.
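The weighted average quoted in the caption can be written out as a small Python sketch. The first function is the caption's formula directly. The second applies it down one column of 4:2:2 color-difference samples, and its details are assumptions made for illustration: which four lines contribute to each output sample, and the repetition of the edge line at the top and bottom of the picture, are not specified in the text.

```python
def chroma_420_sample(a, b, c, d):
    """Weighted average (a + 3b + 3c + d) / 8 of four vertically adjacent samples."""
    return (a + 3 * b + 3 * c + d) / 8


def subsample_column(chroma_column):
    """Halve the vertical chroma resolution of one column of 4:2:2 samples.

    chroma_column holds one Cr (or Cb) value per line, in full-frame order
    (both fields merged). Assumption: each output sample is centred on a
    pair of lines (b, c) and also draws on the lines just above (a) and
    below (d); at the picture edges the nearest line is repeated.
    """
    last = len(chroma_column) - 1
    output = []
    for top in range(0, len(chroma_column) - 1, 2):
        a = chroma_column[max(top - 1, 0)]
        b = chroma_column[top]
        c = chroma_column[top + 1]
        d = chroma_column[min(top + 2, last)]
        output.append(chroma_420_sample(a, b, c, d))
    return output


# A flat area is unchanged; a vertical ramp is smoothed and halved in height.
print(subsample_column([10, 10, 10, 10]))
print(subsample_column([0, 8, 16, 24]))
```

The weights sum to 8, so areas of constant color pass through unchanged, while the two central lines dominate each result.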