Introduction

Many of the standards having to do with displays and display interfaces concern themselves not with the nature of the physical or electrical connection, but rather solely with the format of the images to be communicated and the timing which will be used in the transmission. In fact, these are arguably among the most fundamental and important of all display interface standards, since they often determine whether a given image can be successfully displayed, regardless of the specific interface used. Established format and timing standards often ensure the usability of video information over a wide range of different displays and physical interfaces. In some cases, such as the current analog television broadcast standards, the specifications for the scanning format and timing are fundamental to the proper operation of the complete system.

But how are these standards determined, and what factors contribute to these selections? This topic examines the needs for image format and timing standards in various industries and the concerns driving the specific choices made for each. In addition, we cover some of the problems which arise when, as is so often the case, electronic imagery created under one standard must be converted for use in a different system.

The Need for Image Format Standards

In most “fully digital” systems, the image is represented via an array of discrete samples, or “pixels” (picture elements), but even in analog systems such as the conventional broadcast television standards, there is a defined structure in the form of separate scan lines. Clearly, the greater the number of pixels and/or scan lines used to represent the image, the greater the amount of information (in terms of spatial detail) that will be conveyed. However, there will also obviously be a limit to the number of samples per image which can be supported by any practical system; such limits may be imposed by the amount of storage available, the physical limitations of the image sensor or display device, the capacity of the channel to be used to convey the image information, or a combination of these factors.

Even if such limitations are not a concern – a rare situation, but at least imaginable – there remains a definite limit as to the number of samples (the amount of image detail which can be represented) in any given application. It may be that the image to be transmitted simply does not contain meaningful detail beyond a given level; it may also be the case that the viewer, under the expected conditions of the display environment, could not visually resolve additional detail. So for almost any practical situation, we can determine both the resolution (in the proper sense of the term, meaning the amount of detail resolvable per a given distance in the visual field) required to adequately represent the image, as well as the limits on this which will be imposed by the practical restriction of the imaging and display system itself.

But this sort of analysis will only serve to set an approximate range of image formats which might be used to satisfy the needs of the system. A viewer, for instance, will generally not notice or care if the displayed image provides 200 dpi resolution or 205 dpi – but the specifics of the image format standard chosen will have implications beyond these concerns. Specific standards for image formats ensure not only adequate performance, but also the interoperability of systems and components from various manufacturers and markets. Many imaging and display devices, as previously noted, provide only a single fixed array of physical pixels, and the establishment of format standards allows for optimum performance of such devices when used together in a given system. Further, establishment of a set of related standards, when appropriate, allows for relatively easy scaling of images between standard devices, at least compared to the situation that would exist with completely arbitrary formats.

The selection of the specific image and scanning formats to be used will come from a number of factors, including the desired resolution in the final, as-displayed image; the amount of storage, if any, required for the image, and the optimum organization (in terms of system efficiency) of that storage; and the performance available within the overall system, including the maximum rate at which images or pixels may be generated, processed, or transmitted. As will be seen shortly, an additional factor may be the availability of the necessary timing references (“clocks”) for the generation or transmission of the image data. The storage concern is of particular importance in digital systems; for optimum performance, it is often desired that the image format be chosen so as to fit in a particular memory space,¹ or permit organization into segments of a convenient size. For example, many “digital” formats have been established with the number of pixels per line a particular power of two, or at least divisible into a convenient number of “power of two” blocks. (Examples being the 1024 x 768 format, or the 1600 x 1200 format – the latter having a number of pixels per line which is readily divisible by 8, 16, 32, or 64.)
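The storage argument above can be checked with a few lines of arithmetic. The following is a minimal sketch only: the function names are hypothetical, and the 8 bits/pixel depth used for the frame-size calculation is an assumption made for illustration, not a figure taken from any standard discussed here.

```python
def is_power_of_two(n):
    """True if n is a positive integer power of two (1, 2, 4, 8, ...)."""
    return n > 0 and (n & (n - 1)) == 0


def block_sizes_dividing(pixels_per_line, candidates=(8, 16, 32, 64)):
    """Return the candidate block sizes that divide the line length evenly."""
    return [b for b in candidates if pixels_per_line % b == 0]


def frame_bytes(width, height, bits_per_pixel=8):
    """Storage needed for one frame; bits_per_pixel is an assumed depth."""
    return width * height * bits_per_pixel // 8


if __name__ == "__main__":
    for width, height in ((1024, 768), (1600, 1200)):
        print(width, "x", height,
              "| power of two:", is_power_of_two(width),
              "| divisible by:", block_sizes_dividing(width),
              "| bytes per frame at 8 bpp:", frame_bytes(width, height))
```

Under these assumptions, the 1024-pixel line is itself a power of two, while the 1600-pixel line is not but still splits evenly into blocks of 8, 16, 32, or 64 pixels.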

One last issue which repeatedly has come up in format standard development, especially between the television and computer graphics industries, has been the need for “square pixel” image formats – meaning those in which a given number of picture elements or samples represents the same distance in both the horizontal and vertical directions. (This is also referred to as a “1:1 pixel aspect ratio,” although since pixels are, strictly speaking, dimensionless samples, the notion of an aspect ratio here is somewhat meaningless.) Television practice, both in its broadcast form and the various dedicated “closed-circuit” applications, comes from a strictly analog-signal background; while the notion of discrete samples in the vertical direction is imposed by the scanning-line structure, there is really no concept of sampling horizontally (or along the direction of the scan line). Therefore, television had no need for any consideration of “pixels” in the standard computer-graphics sense, and certainly no concern about the image samples being “square” when digital techniques finally brought sampling to this industry. (Instead, as will be seen in the later discussion of digital television, sampling standards were generally chosen to use convenient sampling clock values, and the non-square pixel formats which resulted were of little concern.) In contrast, computer-graphics practice was very much concerned with this issue, since it would be very inconvenient to have to deal with what would effectively be two different scaling factors – one horizontal and one vertical – in the generation of graphic imagery. As might be expected, this has resulted in some degree of a running battle between the two industries whenever common format standards have been discussed.
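Whether a format is “square pixel” follows directly from its sample counts and the shape of the displayed picture. The sketch below is illustrative only: the function name is hypothetical, and the 720 x 480 example assumes that the full sampled width fills a 4:3 picture exactly, which is a simplification rather than a statement of any particular television standard.

```python
from fractions import Fraction


def pixel_aspect_ratio(width, height, display_aspect):
    """Width-to-height ratio of the area represented by one sample.

    display_aspect is the shape of the whole picture (for example 4:3).
    A result of exactly 1 means the format has "square pixels".
    """
    return Fraction(display_aspect) / Fraction(width, height)


if __name__ == "__main__":
    four_by_three = Fraction(4, 3)
    # 640 x 480 shown on a 4:3 picture: same spacing horizontally and vertically.
    print(pixel_aspect_ratio(640, 480, four_by_three))   # 1
    # 720 x 480 assumed to fill the same 4:3 picture: samples are narrower than tall.
    print(pixel_aspect_ratio(720, 480, four_by_three))   # 8/9
```

A graphics system drawing into the second format would need to scale horizontal and vertical distances differently to keep circles round, which is the inconvenience described above.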

The Need for Timing Standards

Image format standards are only half of what is needed in the case of systems intended for the transmission and display of motion video. And even when motion is not a major concern of the display system, most display technologies will still require continuous refresh of the image being displayed. Further, the timing problem is not limited to the rate at which the images themselves are presented; it is generally not the case that the entire image is produced instantaneously, or that all the samples which make up that image are transmitted or presented at the same time. Rather, the image is built up piece-by-piece, in a regular, steady sequence of pixels and lines. The timing of this process (which typically is of the type known as raster scanning) is also commonly the subject of display timing standardization.

At the frame level, meaning the rate at which the complete images are generated or displayed, the usual constraints on the rate are the need for convincing motion portrayal and the characteristics of the display device (in terms of the rate at which it must be refreshed in order to present the appearance of a stable image). Both of these factors generally demand frame rates at least in the low tens of frames per second, and sometimes as high as the low hundreds. Restricting the maximum rate possible in any given system are again the limitations of the image generation and display equipment itself, along with the capacity limitations of the channel(s) carrying the image information.

But within this desirable range of frame rates, why is it desirable to establish specific standards, and to further standardize to the line and pixel timing level? Standardized frame rates are desirable again to maximize interoperability between different systems and applications, and further to reduce the cost and complexity of the equipment itself. It is generally simpler and less expensive to design around a single rate, or at worst a relatively restricted range, as opposed to a design capable of handling any arbitrary rate. This same reasoning generally applies to a similar degree, if not more so, to the line rate in raster-scanned displays. A further argument for standardized frame rates comes from the need to preserve convincing motion portrayal as the imagery is shared between multiple applications. While frame-rate conversion is possible, it generally involves a resampling of the image stream and can result in aliasing and other artifacts. (An example of this is discussed later, in the methods used to handle the mismatch in rates between film and video systems.)
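To see why conversion between frame rates disturbs motion portrayal, consider the crudest possible converter, which simply shows the most recent source frame at each output instant. This is a sketch of that one simple approach, chosen here for illustration and not a method described in the text; the function name and the 50 and 60 frames/s rates are assumptions.

```python
def repeat_frame_conversion(src_rate, dst_rate, n_output_frames):
    """Index of the source frame shown at each output frame.

    Output frame i occurs at time i / dst_rate; the source frame on display
    at that instant is floor(i * src_rate / dst_rate).
    """
    return [(i * src_rate) // dst_rate for i in range(n_output_frames)]


if __name__ == "__main__":
    mapping = repeat_frame_conversion(50, 60, 12)
    print(mapping)  # [0, 0, 1, 2, 3, 4, 5, 5, 6, 7, 8, 9]
```

Most source frames are shown once, but every fifth one is shown twice, so uniformly moving objects advance in uneven steps. This irregular cadence is one simple form of the artifacts mentioned above.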

Practical Requirements of Format and Timing Standards

Several additional practical concerns must also be addressed in the development of format and timing standards for modern electronic display systems. Among these are those standards which may already have been established in other, related industries, along with the constraints of the typical system design for a given market or application.

For the largest electronic display markets today, which are television (and related entertainment display systems) and the personal computer market, clearly the biggest single “outside” influence has come from the standards and practices of the motion-picture industry. Film obviously predates these all-electronic markets by several decades. It remains a significant source of the images used by each and, in turn, is itself more and more dependent on television and computer graphics as a source of imagery. Film has had an impact on display format and timing standards in a number of areas, some less obvious than others.

Probably the clearest example of the influence of the motion-picture industry is in the aspect ratios chosen for the standard image formats. Film, in its early days, was not really constrained to use a particular aspect ratio, but it soon became clear that economics dictated the establishment of camera and projector standards, and especially standards for the screen shape to be used for cinematic presentation. These early motion-picture standards efforts included the establishment of the so-called “Academy standard” (named for the Academy of Motion Picture Arts & Sciences), and its 4:3 image aspect ratio.² During the development of television broadcast standards in the 1940s, it was natural to adopt this same format for television – as motion pictures were naturally expected to become a significant source of material for this new entertainment medium. This ultimately led to cathode-ray tube (CRT) production standardizing on 4:3 tubes, and 4:3 formats later became the norm in the PC industry for this reason. With the 4:3 aspect ratio so firmly established, the vast majority of non-CRT graphic displays (such as LCD or plasma display panels) are today also produced with a 4:3 aspect ratio! Ironically, the motion-picture industry itself abandoned the 4:3 format standard years ago, in large part due to the perceived threat of television. Fearing loss of their audience to TV, the film industry of the 1950s introduced “widescreen” formats³ such as “Panavision” or “Cinemascope,” to offer a viewing experience that home television could not match. Today, though, this has led to television now following film into the “widescreen” arena – with a wider image being one of the features promoted as part of the HDTV systems now coming to market.

Figure 7-1 “3:2 pulldown.” In this technique for displaying motion-picture film within an interlaced television system, successive frames of the 24 frames/s film are shown for three and then two fields of the television transmission. While this is a simple means of adapting to the approximately 60 Hz TV field rate, it can introduce visible motion artifacts into the scene.

The motion-picture industry has also had some influence, although a weaker one, on the selection of frame rates used in television and computer display standards. Television obviously came first. In establishing the standard frame and field rates for broadcast TV, the authorities in both North America and Europe looked first to choose a rate which would experience minimum interference from local sources of power-line-frequency magnetic fields and noise. In North America, this dictated a field rate of 60 fields/s, matching the standard AC line frequency. Similarly, European standards were written around an assumption of a 50 fields/s rate. But both of these also – fortunately – harmonized reasonably well with standard film rates. In North America, the standard motion-picture frame rate is 24 frames/s. This means that film can be shown via standard broadcast television relatively easily, using a method known as “3:2 pulldown”. As 24 and 60 are related rates (both being multiples of 12), 24 frames/s film is shown on 60 fields/s television by showing one frame of the film for three television fields, then “pulling down” the next frame and showing it for only two fields, and then repeating the cycle (Figure 7-1). In Europe, the solution is even simpler. The standard European film practice was (and is) to use 25 frames/s rather than 24,⁴ and so each frame of film requires exactly two television fields. (The “3:2 pulldown” technique is not without some degree of artifacts, but these are generally unnoticed by the viewing public.)
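The 3:2 cadence described above can be written out as a short sketch. The function name is hypothetical, and the frames are represented simply as labels; field parity (odd and even lines) is ignored here.

```python
from itertools import cycle


def pulldown_3_2(film_frames):
    """Expand film frames into television fields using the 3:2 cadence.

    The first frame is held for three fields, the next for two, and the
    pattern then repeats, so every 4 film frames become 10 fields.
    """
    fields = []
    for frame, repeats in zip(film_frames, cycle((3, 2))):
        fields.extend([frame] * repeats)
    return fields


if __name__ == "__main__":
    print(pulldown_3_2("ABCD"))
    # ['A', 'A', 'A', 'B', 'B', 'C', 'C', 'C', 'D', 'D']
    print(len(pulldown_3_2(range(24))))  # 60 fields from 24 film frames
```

One second of film (24 frames) therefore fills exactly one second of 60 fields/s television, at the cost of alternate frames being displayed for unequal lengths of time.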

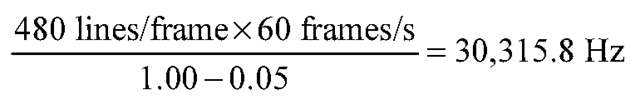

With the base frame and field rate chosen, the establishment of the remaining timing standards within a given system generally proceeds from the requirements imposed by the desired image format, and the constraints of the equipment to be used to produce, transmit, and display the images. For example, the desired frame rate and the number of lines required for the image, along with any overhead required by the equipment (such as the “retrace” time required by a CRT display) will constrain the standard line rate. If we assume a 480-line image, at 60 frames/s, and expect that the display will require approximately 5% “overhead” time in each frame for retrace (leaving 95% of the frame time for the visible lines), then we should expect the line rate to be approximately:

$$ f_{line} \approx \frac{480\ \text{lines/frame} \times 60\ \text{frames/s}}{0.95} \approx 30{,}316\ \text{lines/s} \approx 30.3\ \text{kHz} $$

However, the selection of standard line rates and other timing parameters generally does not proceed in this fashion. Of equal importance to the format and frame rate choices is the selection of the fundamental frequency reference(s) which will be used to generate the standard timing. In both television and computer-display practice, there is generally a single “master clock” from which all system timing will be derived. Therefore, the selection of the line rate, pixel rate, and other timing parameters must be done so as to enable all necessary timing to be obtained using this reference, and still produce the desired image format and frame rate.

In the broadcast television industry, for example, it is common practice to derive all studio timing signals and clocks from a single master source, typically a highly accurate cesium or rubidium oscillator which in turn is itself calibrated against national standard sources, such as the frequency standards provided by radio stations WWV and WWVH in the United States.⁵ The key frequencies defined in the television standards have been chosen so that they may be relatively easily derived from this master, and so the broadcaster is able to maintain the television transmission within the extremely tight tolerances required by the broadcast regulations.

Computer graphics hardware has followed a similar course. Initially, graphics boards were designed to produce a single standard display timing, and used an on-board oscillator of the appropriate frequency as the basis for that timing (or else derived their clock from an oscillator elsewhere in the system, such as the CPU clock). As will be seen later, computer display timing standards were initially derived from the established television standards, or even approximated those standards so that conventional television receivers could be used as PC displays. The highly popular “VGA” standard, at the time of its introduction, was essentially just a progressive-scan, square-pixel version of the North American television standard timing, and thus maintained a very high degree of compatibility with “TV” video.

However, the need to display more and more information on computer displays, as well as the desire for faster display refresh rates (to minimize flicker with large CRT displays used close to the viewer) led to a very wide range of “standard” computer formats and timings being created in the market. This situation could very easily have become chaotic, were it not for the establishment of industry standards groups created specifically to address this area (as will be seen in the next section). But along with the growing array of formats and timings in use came the development of both graphics boards and displays which would attempt to support all of them (or at least all that were within their range of capabilities). These “multifrequency” products are not, however, infinitely flexible, and the ways in which they operate have placed new constraints on the choices in “standard” timings.

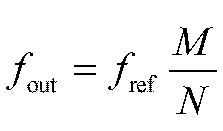

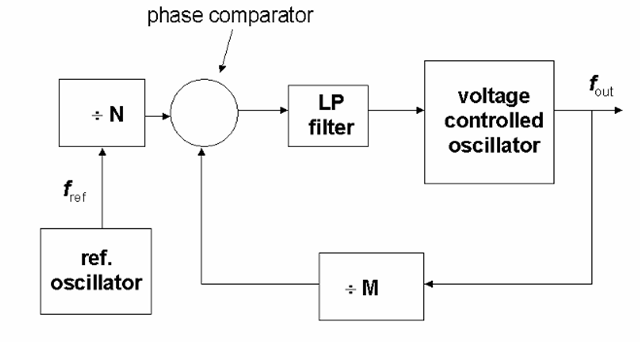

In general, computer graphics systems base the timing of their video signals on a synthesized pixel or “dot” clock. As the name would imply, this is a clock signal operating at the pixel rate of the desired video timing. In the case of single-frequency graphics systems, such a clock was typically produced by a dedicated quartz-crystal oscillator, but support of multiple timings requires the ability to produce any of a large number of possible clock frequencies. This is most often achieved through the use of phase-locked-loop (PLL) based frequency synthesizers, as shown in Figure 7-2. In this type of clock generator, a single reference oscillator may be used to produce any of a number of discrete frequencies, as determined by the two counters or dividers (the “÷M” and “÷N” blocks in the diagram), such that

$$ f_{out} = f_{ref} \times \frac{M}{N} $$

While the set of possible output frequencies can be relatively large (depending on the number of bits available in the M and N dividers), this approach does not provide for the generation of any arbitrary frequency with perfect accuracy. In addition, large values for the M and N divide ratios are usually to be avoided, as these would have a negative impact on the stability of the resulting clock. Therefore, it is desirable that all clocks to be generated in such a system be fairly closely related to the reference clock.
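The limitation described above can be illustrated with a brute-force search for the divider values that come closest to a desired clock. This is a sketch under stated assumptions: the output is taken to be the reference frequency multiplied by M/N, as in the Figure 7-2 caption, the function name is hypothetical, and the reference frequency and divider ranges in the example are arbitrary illustrative values rather than those of any real synthesizer.

```python
from fractions import Fraction


def best_divider_pair(f_ref_hz, f_target_hz, max_m, max_n):
    """Find (M, N, error_hz) minimizing |f_ref * M / N - f_target|.

    M and N are searched over 1..max_m and 1..max_n. Exact rational
    arithmetic avoids rounding effects; when several pairs give the same
    error, the first one found (smallest N, then smallest M) is kept.
    """
    best = None
    for n in range(1, max_n + 1):
        for m in range(1, max_m + 1):
            f_out = Fraction(f_ref_hz * m, n)
            error = abs(f_out - f_target_hz)
            if best is None or error < best[2]:
                best = (m, n, error)
    return best


if __name__ == "__main__":
    # A target that is a simple ratio of the reference is hit exactly.
    print(best_divider_pair(10_000_000, 25_000_000, 16, 16))
    # With very small dividers, an unrelated target can only be approximated.
    print(best_divider_pair(10_000_000, 33_000_000, 4, 4))
```

In the second call, the closest available output is 30 MHz, 3 MHz away from the target. Allowing larger divider values would shrink the error, at the cost in stability noted above.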

Figure 7-2 PLL-based frequency synthesis. In this variant on the classic phase-locked loop, frequency dividers (which may be simple counters) are inserted into the reference frequency input, and into the feedback loop from the voltage-controlled oscillator’s output. These are shown as the “÷M” and “÷N” blocks. The output frequency (from the VCO) is then determined by the reference frequency f_ref multiplied by the factor (M/N). If the M and N values are made programmable, the synthesizer can produce a very large number of frequencies, although these are not “infinitely” variable.

Unfortunately, this has not been the case in the development of most timing standards to date. Instead, timing standards for a given format and rate combination were created more or less independently, and whatever clock value happened to fall out for a given combination was the one published as the standard. This has begun to change, as the various industries and groups affected have recognized the need to support multiple standard timings in one hardware design. Many industry standards organizations, including the EIA/CEA, SMPTE, and VESA, have recently been developing standards with closely related pixel or sampling clock frequencies. Recent VESA computer display timing standards, for instance, have all used clock rates which are multiples of 2.25 MHz, a base rate which has roots in the television industry.⁶
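Whether a given clock belongs to the 2.25 MHz family is easy to test. The sketch below uses a hypothetical function name, and the example frequencies are commonly published pixel and sampling clock values supplied here for illustration; they are not taken from the text above. Frequencies are held as integer kHz to avoid floating-point remainders.

```python
BASE_KHZ = 2250  # the 2.25 MHz base rate, expressed in kHz


def multiple_of_base(clock_khz, base_khz=BASE_KHZ):
    """Return the integer multiplier if clock_khz is an exact multiple of
    the base rate, otherwise None."""
    quotient, remainder = divmod(clock_khz, base_khz)
    return quotient if remainder == 0 else None


if __name__ == "__main__":
    # Illustrative clock values in kHz (assumed examples, not from the text).
    examples = {
        "13.5 MHz digital video sampling": 13_500,
        "74.25 MHz HDTV sampling": 74_250,
        "108 MHz (1280 x 1024 at 60 Hz)": 108_000,
        "162 MHz (1600 x 1200 at 60 Hz)": 162_000,
        "25.175 MHz (original VGA)": 25_175,
        "65 MHz (1024 x 768 at 60 Hz)": 65_000,
    }
    for name, khz in examples.items():
        print(name, "->", multiple_of_base(khz))
```

In this sample the first four clocks are exact multiples of the base rate, while the two older computer timings are not, which matches the independent way in which earlier timings were defined.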