3D Measurements

Once the calibration parameters are known and the images are oriented, the scene measurements can be performed with manual, semi-automated, or automated “matching” procedures. The measured 2D image primitives and correspondences (points or edges) are converted into unique 3D object coordinates (3D point cloud) using the collinearity principle and the known exterior and interior parameters previously recovered.
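The conversion of a measured correspondence into a 3D point can be illustrated with a minimal forward-intersection sketch. It assumes that each image measurement has already been turned into a ray in object space (a projection center plus a direction, obtained from the interior and exterior orientation), and it simply takes the midpoint of the shortest segment between the two rays. The function name `intersect_rays` and the helper names are hypothetical; a rigorous photogrammetric solution would instead use a least-squares adjustment of the collinearity equations.

```python
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin: Sequence[float], direction: Sequence[float], t: float) -> Vec:
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


def intersect_rays(c1: Sequence[float], d1: Sequence[float],
                   c2: Sequence[float], d2: Sequence[float]) -> Tuple[Vec, float]:
    """Intersect two image rays given as (projection center, direction).

    Returns the midpoint of the shortest segment joining the two lines and
    the length of that segment (zero for perfectly intersecting rays).
    """
    w = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w), _dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; no stable intersection")
    t1 = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t2 = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1, p2 = _along(c1, d1, t1), _along(c2, d2, t2)
    midpoint = ((p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2, (p1[2] + p2[2]) / 2)
    gap = _dot(_sub(p1, p2), _sub(p1, p2)) ** 0.5
    return midpoint, gap
```

The returned gap is a convenient sanity check: a large value suggests a wrong correspondence or poorly recovered orientation parameters.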

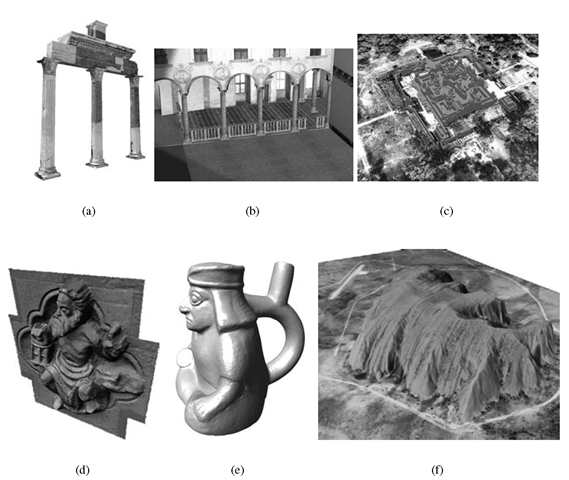

Manual or semi-automated measurements are performed in monocular or stereoscopic vision when only a few points are needed to determine the 3D geometry of an object, e.g., for architecture or for 3D city modeling, where the main corners and edges need to be identified to reconstruct the 3D shape (Figure 5.7(a,b,c)) [77,78]. A relative accuracy in the range 1:5,000-1:15,000 is generally expected for such kinds of 3D models.
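To give a feeling for these ratios, the short sketch below converts a relative accuracy into an absolute figure. It assumes the usual reading of relative accuracy as the ratio between the expected precision and the largest object dimension; the 20 m object size and the function name `expected_precision` are illustrative assumptions, not values from the text.

```python
def expected_precision(object_size_m: float, ratio_denominator: float) -> float:
    """Absolute precision (in meters) implied by a relative accuracy of 1:ratio_denominator."""
    if ratio_denominator <= 0:
        raise ValueError("ratio denominator must be positive")
    return object_size_m / ratio_denominator


if __name__ == "__main__":
    # Hypothetical 20 m wide facade measured at the two ends of the quoted range.
    for denominator in (5_000, 15_000):
        sigma_mm = expected_precision(20.0, denominator) * 1000.0
        print(f"1:{denominator:,} on a 20 m object -> about {sigma_mm:.1f} mm")
```

Under these assumptions, the quoted range corresponds to a precision of a few millimeters on a building-sized object.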

Automated procedures are instead employed when dense surface measurements and reconstructions are required, for example to derive a digital surface or terrain model (DSM or DTM) or to document detailed and complex objects like reliefs, statues, excavation areas, etc. (Figure 5.7(d,e,f)).

In certain situations, like architectural and city modeling, a mix of automated and semi-automated methods may be needed to derive all the required information and geometric details [79,80].

Automated Measurement Procedures

The latest developments in automated image matching demonstrate the great potential of the image-based 3D reconstruction method at different working scales, delivering results comparable to point clouds derived from range sensors with a reasonable level of automation.

FIGURE 5.7

3D models derived from aerial or terrestrial images and achieved with interactive measurements (a, b, c) or automated procedures (d, e, f).

Overviews of stereo image matching can be found in [31,81], while [82] compared multi-view matching techniques. In the vision community, the surface measurement is generally performed using stereo-pair depth maps [53, 83], with turntable or visual-hull setups in controlled environments with uniform backgrounds [84, 85], or with multi-view methods that aim at reconstructing a surface which minimizes a global photometric discrepancy functional, regularized by explicit smoothness constraints [34, 86, 87]. Experiments are generally performed with indoor low-resolution or Internet images, but the results are in any case promising. Some free tools are also available online, although reconstruction accuracies in the range 1:50-1:400 were reported [32, 53, 82]. On the other hand, automated photogrammetric matching algorithms [33, 88, 89] are usually area-based techniques; they rely on cross-correlation or Least Squares Matching algorithms applied to stereo or multiple images and provide more accurate results, in the range of 1:1,000-1:5,000.

Automated procedures involve the automatic establishment of correspondences between primitives extracted from two or more images. The problem of image correlation has been studied for more than 30 years, but many problems remain: complete automation, occlusions, poorly textured or untextured areas, repetitive structures, moving objects (including shadows), radiometric artifacts, the capability of retrieving all the details, transparent objects, applicability to diverse camera configurations, etc. In aerial and satellite photogrammetry the problems are limited and almost solved (as shown by the large amount of commercial software for automated image triangulation and DTM/DSM generation), whereas in terrestrial applications the major problems are related to the three-dimensional characteristics of the surveyed object and the often convergent or wide-baseline images. Such configurations are generally poorly handled by modules developed for DTM/DSM generation from aerial or satellite images.

The state of the art in image matching is the multi-image approach, where multiple images are matched simultaneously and not only pairwise. In [82] the different stereo and multi-view image matching algorithms are classified according to six fundamental properties: the scene representation, photo-consistency measure, visibility model, shape prior, reconstruction algorithm, and initialization requirements. According to [90], multi-view image matching and 3D reconstruction algorithms can be classified into (1) voxel-based approaches, which require the knowledge of a bounding box containing the scene and whose accuracy is limited by the resolution of the voxel grid [87,91,92]; (2) algorithms based on deformable polygonal meshes, which demand a good starting point (like a visual hull model) to initialize the corresponding optimization process, therefore limiting their applicability [84,85]; (3) multiple depth map approaches, which are more flexible but require the fusion of individual depth maps into a single 3D model [86,93]; and (4) patch-based methods, which represent scene surfaces by collections of small patches (or surfels) [94]. On the other hand, in [33] the image matching algorithms are classified as area-based or feature-based procedures, i.e., according to the two main classes of matching primitives: image intensity patterns (windows composed of gray values around a point of interest) and features (edges and regions). This leads respectively to area-based (e.g., cross-correlation or Least Squares Matching (LSM) [95]) and feature-based (e.g., relational, structural, etc.) matching algorithms.

Once the primitives are extracted from the images, they are converted into 3D information using the known camera parameters. Area-Based Matching (ABM), especially the LSM method and its extended concept called Multi-Photo Geometrical Constraints LSM [96,97], has a very high accuracy potential (up to 1/25 pixel on well-defined targets) if well-textured image patches are used. ABM generally uses small areas around interest points extracted, e.g., with interest operators [98-100]. Disadvantages of ABM are the need for a small search range for successful matching, the possibly over-smoothed results if the image patches are too large and, in the case of LSM, the requirement of good initial values for the unknown parameters (although this is not the case for other techniques such as graph-cut). Furthermore, matching problems (i.e., blunders) might occur in areas with occlusions, with missing or repetitive texture, or where the surface does not correspond to the assumed model (e.g., planarity of the matched local surface patch). On the other hand, Feature-Based Matching (FBM) involves the extraction of features like points, edges, or regions, which are then associated with attributes (descriptors) useful to characterize and match them [101,102]. A typical strategy to match characterized features is the computation of similarity measures from the associated attributes. Compared to ABM, FBM techniques are more flexible with respect to surface discontinuities, less sensitive to image noise, and require less accurate approximate values. The accuracy of feature-based matching is limited by the accuracy of the feature extraction process. Moreover, due to the sparse and irregularly distributed nature of the extracted features, FBM techniques generally deliver less dense point clouds than ABM approaches. For all these reasons, the combination of ABM and FBM techniques is the best choice for a powerful surface measurement approach [33,103].
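The cross-correlation flavor of area-based matching can be sketched in a few lines. The example below is a simplified illustration, not the LSM method: it assumes gray-value images stored as nested lists, a search restricted to the same image row (as for a rectified stereo pair), and integer-pixel results only. All function names are hypothetical.

```python
from math import sqrt
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[float]]


def ncc(a: Sequence[float], b: Sequence[float]) -> float:
    """Normalized cross-correlation of two equally sized gray-value windows."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # untextured window: correlation is undefined, treat as no match
    return sum(x * y for x, y in zip(da, db)) / denom


def window(image: Image, row: int, col: int, half: int) -> List[float]:
    """Flattened (2*half+1) x (2*half+1) window centered on (row, col)."""
    return [image[r][c]
            for r in range(row - half, row + half + 1)
            for c in range(col - half, col + half + 1)]


def match_along_row(left: Image, right: Image, row: int, col: int,
                    half: int = 1, search: int = 3) -> Tuple[int, float]:
    """Find the column in `right` whose window best correlates with the
    window around (row, col) in `left`, searching +/- `search` pixels."""
    template = window(left, row, col, half)
    width = len(right[0])
    first = max(half, col - search)
    last = min(width - half - 1, col + search)
    best_col, best_score = col, -1.0
    for c in range(first, last + 1):
        score = ncc(template, window(right, row, c, half))
        if score > best_score:
            best_col, best_score = c, score
    return best_col, best_score
```

The limited `search` parameter mirrors the small search range that ABM needs, and the zero-denominator branch shows why untextured patches cannot be matched reliably.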

Structuring and Modeling

Once the scene’s main features and details are manually or automatically extracted from the images and converted into 3D information, the produced 3D point cloud should be structured in the most flexible and precise way to accurately represent the 3D measurement results and provide an optimal description and digital representation of the scene. Indeed, unstructured 3D data are generally not very useful except for quick visualization, and most applications require structured results, generally in the form of a mesh or TIN (Triangulated Irregular Network).

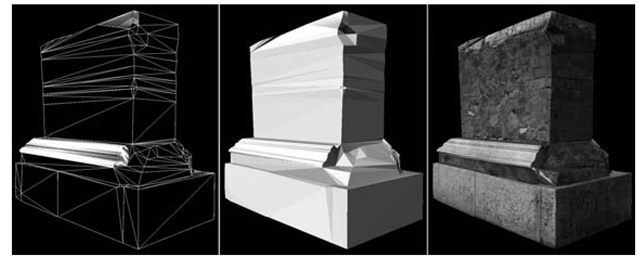

In some applications, like building reconstruction, where the object is mainly described with planar or cylindrical patches, a small number of 3D points is sufficient for the 3D modeling, and the structuring and surface generation are achieved with a few triangular patches or by fitting particular surfaces to the data (Figure 5.8(a, b, c)) [78,104]. Indeed, automated reconstruction methods still do not perform properly with such kinds of objects in all possible situations, and too many assumptions limit the usability of the proposed approaches in daily 3D documentation [105-107]. In other applications, like terrain modeling or the reconstruction of statues or complex objects, the surface generation requires very dense point clouds and smart algorithms to produce a correct mesh or TIN and, subsequently, photo-realistic results (Figure 5.8(d, e, f)).

The goal of the surface generation can be stated as follows: given a set of sample points Pi assumed to lie on or near an unknown surface S, create a surface model S’ approximating S. A surface reconstruction procedure (also called surface triangulation) cannot guarantee the exact recovery of S, since we have information about S only through a finite set of sample points Pi. Generally, as the sampling density increases, the output result S’ is more likely to be topologically correct and to converge to the original surface S. A good sample should be dense in detailed and curved areas and sparse in flat parts. Usually, if the input data do not satisfy certain properties required by the triangulation algorithm (good point distribution, high density, little noise, etc.), current commercial software tools will produce incorrect results. Sometimes, to improve the quality of the reconstruction, additional topological information about the surface (for example breaklines) is added to allow a more correct digital reconstruction of the scene’s geometry.

The conversion of the measured 3D point cloud into a consistent polygonal surface is generally based on four steps:

• pre-processing: erroneous points are eliminated, noise is smoothed out, and points are added to fill gaps. The data may also be resampled to generate a model of efficient size;

• determination of the global topology of the object’s surface: the neighborhood relations between adjacent parts of the data are determined, using some global sorting step and possible constraints (such as breaklines), mainly to preserve special features such as edges;

• generation of the polygonal surface: triangular (or tetrahedral) networks are created, satisfying certain quality requirements, e.g., a limit on the network element size or no intersection of breaklines;

• post-processing: editing operations (edge corrections, triangle insertion, polygon editing, hole filling, fixing of non-manifold parts, etc.) are commonly applied to refine and correct the generated polygonal surface [108,109]. Errors left in the mesh are visually unpleasant, might cause lighting blemishes due to incorrect normals, and make the computer model unsuitable for reverse engineering or physical replicas. Moreover, over-sampled areas should be simplified while under-sampled regions should be subdivided [110].
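Part of the post-processing step can be supported by simple topological checks. The sketch below, which assumes an indexed triangle mesh given as vertex-index triples, counts how many triangles share each edge: edges used by a single triangle lie on a hole or on the outer border, while edges used by more than two triangles are non-manifold. The function name `classify_edges` is hypothetical, and the check only locates problems; it does not repair them.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Triangle = Tuple[int, int, int]
Edge = Tuple[int, int]


def classify_edges(triangles: Iterable[Triangle]) -> Dict[str, List[Edge]]:
    """Return boundary edges (used once) and non-manifold edges (used more than twice)."""
    counts: Counter = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted vertex indices so direction does not matter.
            counts[(min(u, v), max(u, v))] += 1
    return {
        "boundary": sorted(e for e, n in counts.items() if n == 1),
        "non_manifold": sorted(e for e, n in counts.items() if n > 2),
    }


if __name__ == "__main__":
    # A closed tetrahedron with one face removed: the three open edges are reported.
    open_mesh = [(0, 1, 2), (0, 3, 1), (1, 3, 2)]
    print(classify_edges(open_mesh))
```

A closed, manifold surface yields two empty lists, which is the condition usually required before reverse engineering or the production of physical replicas.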

FIGURE 5.8

An architectural object (a) which can be digitally reconstructed with sparse point cloud and few triangular patches (b,c) [8]. A detailed bas-relief (d) which needs an automated surface measurement procedure to derive a dense point cloud and a textured 3D model (e, f).

In commercial packages, the global topology of the surface and the mesh generation are generally performed automatically while pre- and post-processing operations still need manual intervention.

Texturing and Visualization

A 3D model can be visualized in wireframe, shaded, or textured mode (Figure 5.9). A textured 3D geometric model is probably the most desirable 3D object documentation since it gives, at the same time, a full geometric and appearance representation and allows unrestricted interactive visualization and manipulation under a variety of lighting conditions. The photo-realistic representation is achieved by mapping a color image (generally an orthophoto in the case of aerial or satellite applications) onto the 3D geometric data. The 3D data can be in the form of points or triangles (mesh), according to the applications and requirements. The texturing of 3D point clouds (point-based rendering techniques [111]) allows a faster visualization, but it is not an appropriate method for detailed and complex 3D models. On the other hand, in the case of meshed or TIN data, the texture is automatically mapped if the camera parameters are known (e.g., if it is a photogrammetric model); otherwise homologous points between the 3D mesh and the 2D image to be mapped should be identified (e.g., if the model has been generated using range sensors). This is the bottleneck of the texturing phase in terrestrial applications, as it is still an interactive procedure and no automated commercial solutions are available, although some automated approaches have been proposed [46,112,113]. Indeed, the identification of homologous points between 2D and 3D data is a hard task, much more complex than image-to-image or geometry-to-geometry registration. Furthermore, in applications involving infrared or multi-spectral images, it is generally quite challenging to identify common features between 2D and 3D data. In practical cases, the 2D-3D registration is done with the well-known DLT approach [114] (often referred to as the Tsai method [115]), where homologous points between the 3D geometry and a 2D image to be mapped are used to retrieve the unknown interior and exterior camera parameters. The color information is then projected (or assigned) to the surface polygons using a color-vertex encoding or a mesh parameterization.
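When the camera parameters are known, assigning texture to a mesh reduces to projecting each vertex into the image. The sketch below assumes an ideal pinhole camera without lens distortion, a rotation matrix `R` that maps object-space vectors into a camera frame looking along its +Z axis, a focal length and principal point expressed in pixels, and a texture convention with v measured from the bottom of the image. These conventions and all names are assumptions made for illustration; occlusion testing is not included.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]


def project_vertex(X: Vec3, R: Mat3, C: Vec3,
                   f_px: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project object point X into pixel coordinates, or None if behind the camera."""
    d = [X[i] - C[i] for i in range(3)]                              # vector from projection center
    p = [sum(R[r][k] * d[k] for k in range(3)) for r in range(3)]    # same vector in the camera frame
    if p[2] <= 0.0:
        return None
    return (cx + f_px * p[0] / p[2], cy + f_px * p[1] / p[2])


def texture_coords(vertices: Sequence[Vec3], R: Mat3, C: Vec3,
                   f_px: float, cx: float, cy: float,
                   width: int, height: int) -> List[Optional[Tuple[float, float]]]:
    """Per-vertex (u, v) in [0, 1], or None for vertices not seen in the image frame."""
    uvs: List[Optional[Tuple[float, float]]] = []
    for X in vertices:
        xy = project_vertex(X, R, C, f_px, cx, cy)
        if xy is None or not (0.0 <= xy[0] < width and 0.0 <= xy[1] < height):
            uvs.append(None)
        else:
            uvs.append((xy[0] / width, 1.0 - xy[1] / height))
    return uvs
```

For a model produced with range sensors the same projection applies once the camera parameters have been recovered from the identified 2D-3D point pairs.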

In computer graphics applications, the texturing can also be performed with techniques that graphically modify the derived 3D geometry (displacement mapping) or that simulate surface irregularities without touching the geometry (bump mapping, normal mapping, parallax mapping).

The texture mapping phase goes much further than simply projecting one or more static images over the 3D geometry. Problems can arise firstly from the time-consuming image-to-geometry registration and then from variations in lighting, surface specularity, and camera settings. The images capture the illumination present at imaging time, which may need to be replaced by illumination consistent with the rendering point of view and the reflectance properties (BRDF) of the object [116]. High dynamic range (HDR) images might also be acquired to recover all scene details [117], while color discontinuities and aliasing effects must be removed [118-120].

The photo-realistic product then needs to be visualized, e.g., for communication and presentation purposes. In the case of large and complex models, the point-based rendering technique does not give satisfactory results and does not provide realistic visualization. Moreover, the visualization of a 3D model is often the only product of interest for the external world, remaining the only possible contact with the 3D data. Therefore, a realistic and accurate visualization is often required. Furthermore, the ability to easily interact with a huge 3D model is a persistent and growing problem. Indeed, model sizes (both in geometry and texture) are increasing at a faster rate than computer hardware advances, and this limits the possibilities of interactive and real-time visualization of the 3D results. Due to the generally large amount of data and its complexity, the rendering of large 3D models is done with multi-resolution approaches that display the large meshes at different levels of detail (LOD), together with simplification and optimization approaches [121-123].
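One common way to choose among levels of detail, which the text does not specify, is a screen-space error criterion: a simplified mesh is acceptable when its geometric deviation from the full-resolution model projects to less than a pixel tolerance. The sketch below assumes a perspective camera with a given vertical field of view and a list of per-level geometric errors ordered from finest to coarsest; all names and default values are hypothetical.

```python
from math import radians, tan
from typing import Sequence


def projected_error_px(geometric_error_m: float, distance_m: float,
                       fov_deg: float, viewport_height_px: int) -> float:
    """Approximate on-screen size (pixels) of a world-space error seen at a given distance."""
    return geometric_error_m * viewport_height_px / (2.0 * distance_m * tan(radians(fov_deg) / 2.0))


def select_lod(lod_errors_m: Sequence[float], distance_m: float,
               fov_deg: float = 60.0, viewport_height_px: int = 1080,
               tolerance_px: float = 1.0) -> int:
    """Index of the coarsest level whose projected error stays within the tolerance.

    `lod_errors_m` is ordered from finest (index 0) to coarsest; the finest
    level is returned when no level satisfies the tolerance.
    """
    chosen = 0
    for index, error in enumerate(lod_errors_m):
        if projected_error_px(error, distance_m, fov_deg, viewport_height_px) <= tolerance_px:
            chosen = index
    return chosen
```

With such a rule, distant parts of a large model are drawn with coarse meshes while nearby parts keep their full detail, which is the effect the multi-resolution approaches aim for.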

FIGURE 5.9

Image-based 3D model of an archaeological find obtained with interactive procedures and displayed in wireframe, shaded, and textured mode.

Main Applications and Actual Problems

Photogrammetry can currently deliver mainly three types of 3D models which can be used in geo-related applications:

• digital terrain and surface models (DTM, DSM);

• 3D city models;

• cultural and natural heritage models.

Digital terrain and city models are the basis for any GIS or geo-related study and application. Terrain models are produced, mainly automatically, using aerial or satellite stereo images for the generation of topographic maps, analysis of changes, environmental models, etc. Digital surface models are realized for special applications like environmental studies, vegetation analysis, water management, deformation analysis, etc.

3D city models are generated with semi-automated procedures, as these are more reliable and productive than fully automated methods, which require many post-editing corrections. 3D city models are generally required for city planning (Figure 5.10(a)), telecommunication, disaster management, location-based services (LBS), real estate, media and advertising, games and simulation, transportation analysis, navigation, energy providers and heating dispersion studies, and archaeological documentation (Figure 5.10(b)).

Digital heritage models are required at different scales and levels of detail, from large sites [5,44,51,124] to single structures and buildings [125], statues (Figure 5.10(c)), artifacts, and small findings. Besides management, conservation, restoration, and visualization issues, digital heritage models are very useful for advanced databases where archaeological, geographical, architectural, and semantic information can be linked to the recovered 3D geometry and consulted, e.g., online [126].

The actual problems and main challenges in the 3D metric surveying of large and complex sites or objects arise in every phase of the photogrammetric pipeline, from the data acquisition to the visualization of the achieved 3D results. Selecting the correct platform and sensor, the appropriate measurement and modeling procedures, designing the production workflow, assuring that the final result is in accordance with all the given technical specifications and being able to fluently display and interact with the achieved 3D model are the greatest challenges. In particular, key issues and challenges arise from:

FIGURE 5.10

3D city model generated for urban planning (a) and archaeological 3D documentation (b) [79]. 3D model of the Great Buddha of Bamiyan (Afghanistan) (c) and its current empty niche after the destruction in 2001 [50] (d).

• New sensors and platforms: new digital sensors and technologies frequently come on the market, but the software to process the acquired data generally arrives much later. The development and use of new sensors require the study and testing of innovative sensor models, and the investigation of the related network structures and accuracy performance. Of particular interest here are high-resolution satellite and aerial linear array cameras, terrestrial panoramic cameras, and laser scanners.

• Automation: the automation of photogrammetric processing is one of the most important issues when it comes to the efficiency and cost of data processing. Many researchers and commercial solutions have turned towards semi-automated approaches, where the human capacity in image content interpretation is paired with the speed and precision of computer-driven measurement algorithms. But the success of automation in image analysis, interpretation, and understanding depends on many factors and is still a hot topic of research. The progress is slow and the acceptance of results depends on the quality specifications of the user and the final goal of the 3D model. An automated procedure should be judged in terms of the datasets that it can handle, but nowadays we can observe that (1) sensor calibration and image orientation can be done automatically [71,127], (2) scaling and geo-referencing still need interaction to identify the control points in the images, (3) DSM generation can be done automatically [32,33,35,91] but may need substantial post-editing if, in aerial- and satellite-based applications, a DTM is required, (4) orthoimage generation is a fully automatic process, (5) object extraction and modeling is mainly done in a semi-automated mode to achieve reliable and precise 3D results.

• Integration of sensors and data: the combination of different data sources allows one to derive different geometric levels of detail and exploit the intrinsic advantages of each sensor [8, 44]. The integration so far is mainly done at model-level (for example at the end of the modeling pipeline) while it should also be possible at data-level to overcome the weakness of each data source. In a more general 3D modeling view, an important issue is the increasing use of hybrid sensors, in order to collect as many different cues as possible.

• On-line and real-time processing: in some applications there is a need for very fast processing, thus requiring new algorithmic implementations, sequential estimation, and multi-core processing. The internet is also a great help in this sector, and web-based processing tools [54] for image analysis and 3D model generation [36] are available, although they are limited to specific tasks and not ideal for collecting CAD information and accurate 3D models.

Conclusions

This topic has presented an overview of the existing techniques and methodologies to derive reality-based 3D models, with a deeper description of the photogrammetric method. Photogrammetry is the art of turning 2D images into accurate 3D models. Images are acquired from terrestrial, aerial, or satellite sensors or can be found in archives. An emerging platform of particular interest in the cultural heritage field is the Unmanned Aerial Vehicle (UAV), such as a model helicopter, which delivers high-resolution vertical or oblique views that can be extremely useful for site studies, inspection, and documentation. Photogrammetry relies on the use of at least two images combined with a stable and consolidated mathematical formulation to derive 3D information, with estimates of precision and reliability of the unknown parameters, from measured correspondences (tie points) in the images. The correspondences can be extracted automatically or semi-automatically according to the object and project requirements. Photogrammetry is employed in different applications like 3D documentation, conservation, restoration, reverse engineering, mapping, monitoring, visualization, animation, urban planning, deformation analysis, etc. In the case of archaeological and cultural heritage sites or objects, photogrammetry provides accurate 3D reconstructions at different scales as well as hybrid 3D models (e.g., terrain model + archaeological structures as shown in Figure 5.7(c) and Figure 5.10(b)). Nowadays 3D scanners are also becoming a standard source of input data in many application areas, but image-based modeling still remains the most complete, cheap, portable, flexible, and widely used approach, although considerable experience in data acquisition and processing is required. Furthermore, for the 3D documentation of large sites, the integration with range sensors is generally the best solution.

Despite the fact that 3D documentation is not yet the state of the art in the heritage field, the reported examples show the potential of the photogrammetric method to digitally document and preserve our heritage as well as to share and manage the collected digital information. The image-based approach, together with active sensors, Spatial Information Systems, 3D modeling, and visualization and animation software, is still in a dynamic state of development, with even better application prospects for the near future.

![An architectural object (a) which can be digitally reconstructed with sparse point cloud and few triangular patches (b,c) [8]. A detailed bas-relief (d) which needs an automated surface measurement procedure to derive a dense point cloud and a textured 3D model (e, f).](http://what-when-how.com/wp-content/uploads/2012/06/tmp8745107_thumb22_thumb.png)

![3D city model generated for urban planning (a) and archaeological 3D documentation (b) [79]. 3D model of the Great Buddha of Bamiyan (Afghanistan) (c) and its current empty niche after the destruction in 2001 [50] (d).](http://what-when-how.com/wp-content/uploads/2012/06/tmp8745109_thumb.png)