Image Data Acquisition

Nowadays a large number of image products are available, differing in geometric resolution (footprint or Ground Sample Distance), spectral resolution (number of spectral channels), and cost. Used for mapping, documentation, and visualization purposes, these images can be acquired with a wide variety of tools: medium- and high-resolution satellite sensors (GeoEye, WorldView, IKONOS, Quickbird, SPOT-5, Orbview, IRS, ASTER, Landsat), large-format and linear array digital aerial cameras (ADS40, DMC, ULTRACAM), spaceborne and airborne radar platforms (AirSAR, ERS, Radarsat), model helicopters and low-altitude platforms (UAV) carrying consumer digital cameras or small laser scanners, small-format digital aerial cameras (DAS, DIMAC, DSS, ICI), linear array panoramic cameras, still video cameras, camcorders, and even mobile phones. Moreover, GNSS and INS/IMU systems allow precise localization and navigation and are beginning to be integrated into terrestrial cameras as well. Terrestrial cameras are traditionally divided into (1) amateur/consumer and panoramic cameras, employed for VR reconstructions, quick documentation, and forensic applications, with a relative accuracy potential of around 1:25,000; (2) professional SLR cameras, used in industrial, archaeological, architectural, or medical applications, which allow up to 1:100,000 accuracy; and (3) photogrammetric cameras, used mainly for industrial applications, with an accuracy potential of 1:200,000. Consumer digital cameras now come with sensors of more than 8-10 megapixels, so highly precise measurements can be achieved with them as well, although their main weaknesses remain the lens/objective, unstable electronics, and some cheap components. Consumer digital cameras contain frame CCD (Charge-Coupled Device) or CMOS (Complementary Metal-Oxide-Semiconductor) sensors, while industrial and panoramic cameras can have linear array sensors.
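
A relative accuracy of 1:N can be turned into an absolute figure for a given object. The minimal sketch below assumes the usual reading of relative accuracy as the ratio between the achievable precision and the largest object dimension; the function name and the 10 m object size are hypothetical, chosen only for illustration.

```python
def absolute_precision(object_size, relative_accuracy_denominator):
    """Absolute precision implied by a relative accuracy of 1:N.

    Assumes relative accuracy = precision / largest object dimension, so an
    object of size L measured at 1:N gives L / N (same units as L)."""
    return object_size / relative_accuracy_denominator


# Relative accuracy potentials quoted above for the three terrestrial camera classes
CAMERA_CLASSES = {
    "amateur/consumer and panoramic": 25_000,
    "professional SLR": 100_000,
    "photogrammetric": 200_000,
}

object_size_mm = 10_000.0  # hypothetical 10 m facade, expressed in millimeters
for name, n in CAMERA_CLASSES.items():
    print(f"{name}: {absolute_precision(object_size_mm, n):.2f} mm")
```

For a 10 m object this gives 0.40 mm, 0.10 mm, and 0.05 mm for the three classes respectively.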

Among the available aerial platforms, particular attention is now given to UAVs (Unmanned Aerial Vehicles), i.e., remotely operated aircraft able to fly without a human crew. They can be controlled from a remote location or fly autonomously based on pre-programmed flight plans. UAVs come in a wide variety of shapes, dimensions, configurations, and characteristics. The UAVs most commonly used in geomatics applications are model helicopters carrying an integrated GNSS/INS, a stabilized platform, and digital cameras. They are generally employed to acquire images of otherwise hard-to-reach areas and to derive DSM/DTM. UAVs were traditionally employed in the military and surveillance domains, but they are now becoming common in the heritage field as well, since they provide unique top-down or oblique views that can be extremely useful for site studies, inspection, and documentation. Typical UAVs range from a few centimeters up to 2-3 meters in size and can carry instruments (cameras or laser scanners) of up to 50 kilograms. Kites, balloons, and zeppelins are sometimes also considered UAVs, although they are less stable and therefore more difficult to control and maneuver in the field.

Camera Calibration and Image Orientation

Camera calibration and image orientation are procedures of fundamental importance, in particular for all geomatics applications that rely on extracting precise 3D geometric information from imagery. The early theories and formulations of orientation procedures were developed more than 50 years ago, and today a great number of procedures and algorithms are available [61]. A fundamental criterion for grouping these procedures is the camera model used (i.e., the projective or the perspective camera model). Methods based on the perspective camera model require stable optics and relatively few image correspondences, and they are highly stable. Projective methods, on the other hand, can deal with a variable focal length, but they are quite unstable and need more parameters and image correspondences to derive 3D information.

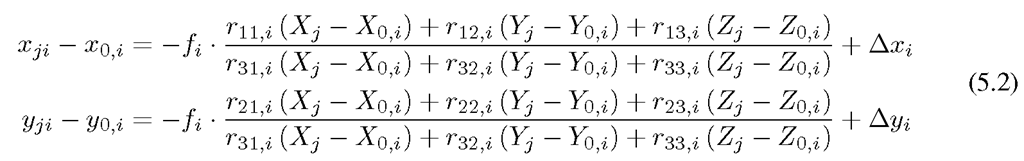

In photogrammetry, the calibration and orientation procedures, performed respectively to retrieve the camera's interior and exterior parameters, use the collinearity principle and a multi-image approach (Figure 5.2). The interior orientation (IO) parameters, recovered with a calibration procedure, consist of the camera constant (or focal length f), the position of the principal point (x0, y0), and some additional parameters (APs) that model systematic errors due, for example, to lens distortion. Calibration is defined as the determination of the geometric deviations of the physical reality from a geometrically ideal imaging system: the pinhole camera. The calibration can be performed on a single image or on a set of images all acquired with the same interior parameters. A camera is considered calibrated if its focal length, principal point offset, and APs (which strictly depend on the camera settings used) are known. In many applications only the focal length is recovered, while for precise photogrammetric measurements all the calibration parameters are generally employed. The exterior orientation (EO) parameters, on the other hand, consist of the three coordinates of the perspective center (X0, Y0, Z0) and the three rotations (ω, φ, κ) around the axes of the coordinate system. These parameters are generally retrieved either from two images (relative orientation) or simultaneously for a whole set of images (bundle solution).
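
The three EO angles are usually combined into a 3×3 rotation matrix. The sketch below assumes one common photogrammetric convention, R = R_X(ω)·R_Y(φ)·R_Z(κ) with angles in radians; other software orders or signs the rotations differently, so the function name and the convention are assumptions rather than the chapter's definition.

```python
import math


def rotation_matrix(omega, phi, kappa):
    """Rotation matrix R = Rx(omega) @ Ry(phi) @ Rz(kappa) as nested lists.

    Assumed convention: sequential rotations about X, Y, Z (radians). Other
    conventions change the element formulas below."""
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    return [
        [cp * ck, -cp * sk, sp],
        [co * sk + so * sp * ck, co * ck - so * sp * sk, -so * cp],
        [so * sk - co * sp * ck, so * ck + co * sp * sk, co * cp],
    ]


R = rotation_matrix(0.01, -0.02, 1.5)  # hypothetical EO angles in radians
```

Whatever convention is chosen, R must be orthonormal (R·Rᵀ = I), which is a convenient sanity check on any implementation.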

FIGURE 5.4

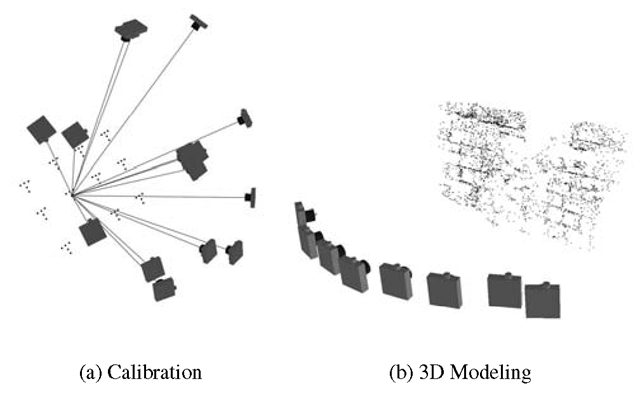

(a) Most favorable image network configuration for accurate camera calibration. (b) Typical configuration for object 3D modeling.

In many photogrammetric applications, camera calibration and image orientation are recovered separately using a photogrammetric bundle adjustment solution [62-64]. They can also be performed simultaneously, leading to a self-calibrating bundle adjustment [65,66], i.e., a bundle adjustment extended with some APs to compensate for the systematic errors of the uncalibrated camera. In practice, however, rather than simultaneously recovering the camera's interior parameters and reconstructing the object, it is always better to first calibrate the camera at a given setting using the most appropriate network, and afterwards to recover the camera poses and object geometry using the calibration parameters for that same camera setting. Indeed, the image network best suited to camera calibration differs from the typical image configuration used for object reconstruction (Figure 5.4).

In the computer vision community, calibration and orientation are often solved simultaneously, leading to Structure from Motion approaches, in which an uncalibrated image sequence, generally with very short baselines, is used to recover the camera parameters and reconstruct 3D shapes [52,53,67,68]. However, the reported accuracy is 1:400 in the best case [32], which limits these approaches to applications requiring only nice-looking 3D models.

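The most common set of APs employed to calibrate CCD/CMOS cameras and compensate for systematic errors is the 8-term “physical” model originally formulated by Brown in 1971 [69]:

$$
\begin{aligned}
\Delta x &= -\Delta x_0 + \frac{\bar{x}}{c}\,\Delta c + \bar{x}\left(K_1 r^2 + K_2 r^4 + K_3 r^6\right) + P_1\left(r^2 + 2\bar{x}^2\right) + 2P_2\,\bar{x}\bar{y} + A\,\bar{x} + S_x\,\bar{y} \\
\Delta y &= -\Delta y_0 + \frac{\bar{y}}{c}\,\Delta c + \bar{y}\left(K_1 r^2 + K_2 r^4 + K_3 r^6\right) + 2P_1\,\bar{x}\bar{y} + P_2\left(r^2 + 2\bar{y}^2\right)
\end{aligned}
\tag{5.1}
$$

with $\bar{x} = x - x_0$, $\bar{y} = y - y_0$, and $r^2 = \bar{x}^2 + \bar{y}^2$, where $\Delta x_0$, $\Delta y_0$, and $\Delta c$ are the corrections to the principal point and camera constant.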


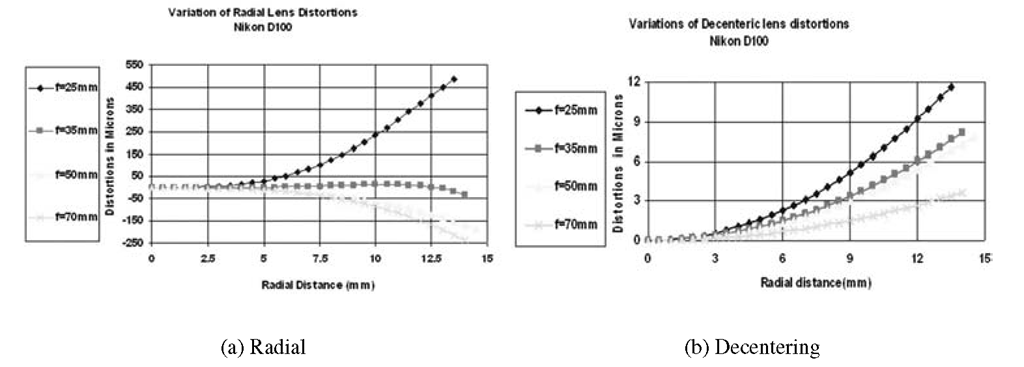

Besides the main interior parameters (c, x0, y0), Equation 5.1 contains three terms Ki for the radial distortion, two terms Pi for the decentering distortion, one term A for the affinity factor, and one term Sx to correct for the shear of the pixel elements in the image plane. The last two terms are rarely, if ever, significant in modern digital cameras, in particular for heritage applications, but they are very useful in very high-accuracy applications. Numerous investigations of different sets of APs have been performed over the years, yet this model, despite being more than 30 years old, still holds up as the optimal formulation for complete and accurate digital camera calibration. The three APs Ki used to model the radial distortion Δr (also called barrel or pincushion distortion) are generally expressed via the odd-order polynomial

$$\Delta r = K_1 r^3 + K_2 r^5 + K_3 r^7$$

where r is the radial distance from the principal point. A typical Gaussian radial distortion profile Δr is shown in Figure 5.5(a), which illustrates how radial distortion varies with the focal length of the camera. The coefficients Ki are usually highly correlated, with most of the error signal generally accounted for by the cubic term K1r³. The K2 and K3 terms are typically included for wide-angle lenses and in high-accuracy machine vision and metrology applications. Recent research has demonstrated the feasibility of empirically modeling radial distortion throughout the magnification range of a zoom lens as a function of the focal length written to the image EXIF header [70].

Decentering distortion, instead, is due to a lack of centering of the lens elements along the optical axis. The decentering distortion parameters P1 and P2 are invariably strongly projectively coupled with x0 and y0. Decentering distortion is usually an order of magnitude or more smaller than radial distortion, and it also varies with focus, but to a much lesser extent, as indicated by the decentering distortion profiles shown in Figure 5.5(b). The projective coupling between P1 and P2 and the principal point offsets increases with focal length and can be problematic for long-focal-length lenses. The extent of coupling can be diminished through the use of a 3D object point array, the adoption of more convergent imaging, and the inclusion of 90-degree-rotated images.
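
The correction terms described above can be evaluated for a single image point as a quick check of their size. The sketch below assumes the Brown model terms as described in the text: a radial part x̄(K1r² + K2r⁴ + K3r⁶), decentering terms in P1 and P2, and, as an assumption about placement, the affinity A multiplying x̄ and the shear Sx multiplying ȳ in the x correction only. The function name and keyword names are hypothetical; all coordinates are in metric image units (e.g., mm).

```python
def brown_corrections(x, y, x0, y0, c, dx0=0.0, dy0=0.0, dc=0.0,
                      k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0, a=0.0, sx=0.0):
    """Return the (dx, dy) corrections of the Brown model for image point (x, y)."""
    xb, yb = x - x0, y - y0                 # coordinates reduced to the principal point
    r2 = xb * xb + yb * yb                  # squared radial distance
    radial = k1 * r2 + k2 * r2**2 + k3 * r2**3   # K1 r^2 + K2 r^4 + K3 r^6
    dx = (-dx0 + xb / c * dc + xb * radial
          + p1 * (r2 + 2 * xb * xb) + 2 * p2 * xb * yb   # decentering
          + a * xb + sx * yb)                             # affinity and shear (assumed placement)
    dy = (-dy0 + yb / c * dc + yb * radial
          + 2 * p1 * xb * yb + p2 * (r2 + 2 * yb * yb))
    return dx, dy


# Hypothetical point 5 mm from the principal point, radial term only
dx, dy = brown_corrections(3.0, 4.0, 0.0, 0.0, 50.0, k1=1e-4)
```

The radial part of the correction vector has magnitude K1r³ + K2r⁵ + K3r⁷, which is why the odd-order polynomial and the per-axis form describe the same distortion.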


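The basic principle of photogrammetry is the collinearity model, which states that a point in object space, its corresponding point in an image, and the perspective center of the camera lie on a straight line (Figure 5.2). For each point j measured in image i, the collinearity model, extended to also account for systematic errors, states:

$$
\begin{aligned}
x_{ij} &= x_{0i} - c_i\,\frac{r_{11,i}(X_j - X_{0i}) + r_{21,i}(Y_j - Y_{0i}) + r_{31,i}(Z_j - Z_{0i})}{r_{13,i}(X_j - X_{0i}) + r_{23,i}(Y_j - Y_{0i}) + r_{33,i}(Z_j - Z_{0i})} + \Delta x_i \\
y_{ij} &= y_{0i} - c_i\,\frac{r_{12,i}(X_j - X_{0i}) + r_{22,i}(Y_j - Y_{0i}) + r_{32,i}(Z_j - Z_{0i})}{r_{13,i}(X_j - X_{0i}) + r_{23,i}(Y_j - Y_{0i}) + r_{33,i}(Z_j - Z_{0i})} + \Delta y_i
\end{aligned}
\tag{5.2}
$$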

where:

• $x_{ij}, y_{ij}$ are the image coordinates of the measured tie point j in image i;

• $c_i, x_{0i}, y_{0i}$ are the focal length and principal point of image i;

• $X_{0i}, Y_{0i}, Z_{0i}$ are the coordinates of the camera perspective center for image i;

• $r_{11,i}, \ldots, r_{33,i}$ are the nine elements of the rotation matrix $R_i$ built from the three rotation angles $\omega_i, \varphi_i, \kappa_i$;

• $X_j, Y_j, Z_j$ are the 3D object coordinates of the measured point j;

• $\Delta x_i, \Delta y_i$ are the two terms (additional parameters) used to extend the basic collinearity model to match physical reality and model systematic errors during the calibration procedure (as stated in Eq. 5.1).

FIGURE 5.5

Typical behavior of the radial (a) and decentering (b) distortion according to the variation of the image radius and focal length.
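
The collinearity model of Equation 5.2 can be evaluated directly to predict where an object point appears in an image: the two numerators use the first and second columns of the rotation matrix, the common denominator its third column. The sketch below is a minimal, dependency-free illustration under that reading; the function name and argument order are hypothetical, and Δx, Δy are passed in as already-computed corrections.

```python
def collinearity_project(X, Y, Z, X0, Y0, Z0, R, c, x0, y0, dx=0.0, dy=0.0):
    """Image coordinates of object point (X, Y, Z) from the collinearity model.

    R is the 3x3 rotation matrix (nested lists, R[m-1][n-1] = r_mn); the
    numerators use its columns 1 and 2, the denominator column 3."""
    dX, dY, dZ = X - X0, Y - Y0, Z - Z0
    num_x = R[0][0] * dX + R[1][0] * dY + R[2][0] * dZ
    num_y = R[0][1] * dX + R[1][1] * dY + R[2][1] * dZ
    den = R[0][2] * dX + R[1][2] * dY + R[2][2] * dZ
    x = x0 - c * num_x / den + dx
    y = y0 - c * num_y / den + dy
    return x, y


I = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Hypothetical nadir camera 10 m above the ground, c = 50 mm
print(collinearity_project(1.0, 2.0, 0.0, 0.0, 0.0, 10.0, I, 50.0, 0.0, 0.0))
```

With an identity rotation, a camera 10 m above the point (1, 2, 0), and c = 50 mm, this prints (5.0, 10.0): the image coordinates come out in the units of c, scaled by c / D = 50 / 10.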

All measurements performed on digital images refer to a pixel coordinate system (u, v), while the collinearity equations refer to the metric image coordinate system (x, y). The conversion from pixel to image coordinates is performed with an affine transformation, given the sensor dimensions and pixel size. For each measured image point (which should be visible in multiple images), the two collinearity equations are written for every image in which it appears. All the equations together form a system whose bundle adjustment solution is generally obtained with an iterative least-squares method (Gauss-Markov model), which requires good initial approximations of the unknown parameters. The bundle adjustment provides a simultaneous determination of all system parameters along with estimates of the precision and reliability of the unknowns. Furthermore, the correlations between the IO and EO parameters and the object point coordinates, along with their determinability, can be quantified. To correctly scale the recorded object information, a known distance between two points visible in the images should be used, while for absolute positioning (geo-referencing) at least seven external observations (for example, three points with known 3D coordinates, generally called ground control points) need to be included in the bundle procedure. Depending on which parameters are considered known a priori and which are treated as unknowns, Equation 5.2 leads to the following cases:
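
The pixel-to-image conversion mentioned above is, in its simplest form, a translation to the sensor center, a scaling by the pixel size, and a flip of the vertical axis. The sketch below is a special case of that affine transformation under assumed conventions (hypothetical, not taken from the chapter): u grows to the right and v downwards from the top-left pixel corner, x grows to the right and y upwards from the sensor center, with square pixels and no skew.

```python
def pixel_to_image(u, v, width_px, height_px, pixel_size_mm):
    """Convert pixel coordinates (u, v) to metric image coordinates (x, y) in mm.

    Assumed conventions: (u, v) measured from the top-left corner with v pointing
    down; (x, y) measured from the sensor center with y pointing up; square pixels."""
    x = (u - width_px / 2.0) * pixel_size_mm
    y = (height_px / 2.0 - v) * pixel_size_mm
    return x, y


# Hypothetical 4000 x 3000 sensor with 5 micrometer (0.005 mm) pixels
x, y = pixel_to_image(0, 0, 4000, 3000, 0.005)  # top-left corner, about (-10.0, 7.5) mm
```

A full affine model would add separate x and y scales and a shear term; those extra terms correspond to the affinity and shear parameters that the self-calibration can estimate.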

• self-calibrating bundle: all parameters on the right-hand side are unknown (IO, EO, object point coordinates, and additional parameters);

• general bundle method: the IO is known and fixed, the image coordinates are used to determine the EO and the object point coordinates;

• spatial resection: the IO and object point coordinates are known and the EO needs to be determined, or only the object point coordinates are available and both the IO and EO have to be determined;

• spatial intersection: knowing the IO and EO, the object point coordinates have to be determined.
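
For the spatial intersection case, each image point with known IO and EO defines a ray from the perspective center along R·(x − x0, y − y0, −c). The sketch below replaces the rigorous least-squares solution of the collinearity equations with a simpler geometric stand-in: the object point that minimizes the sum of squared perpendicular distances to all rays. It assumes distortion-corrected image coordinates; all names are hypothetical.

```python
import math


def _mat_vec(R, v):
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def _solve3(A, b):
    """Solve a 3x3 linear system with Cramer's rule."""
    def det(M):
        return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
                - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
                + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))
    d = det(A)
    return [det([[b[i] if k == j else A[i][k] for k in range(3)] for i in range(3)]) / d
            for j in range(3)]


def intersect_rays(observations):
    """Least-squares intersection of image rays with known IO and EO.

    observations: list of (x, y, c, x0, y0, R, (X0, Y0, Z0)) tuples, one per image."""
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for x, y, c, x0, y0, R, C in observations:
        d = _mat_vec(R, [x - x0, y - y0, -c])   # ray direction in object space
        n = math.sqrt(sum(t * t for t in d))
        d = [t / n for t in d]
        for i in range(3):
            for j in range(3):
                p = (1.0 if i == j else 0.0) - d[i] * d[j]  # projector orthogonal to the ray
                A[i][j] += p
                b[i] += p * C[j]
    return _solve3(A, b)
```

Two non-parallel rays are enough for a unique solution; with noisy measurements the rays no longer meet, and the result is the point closest to all of them.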

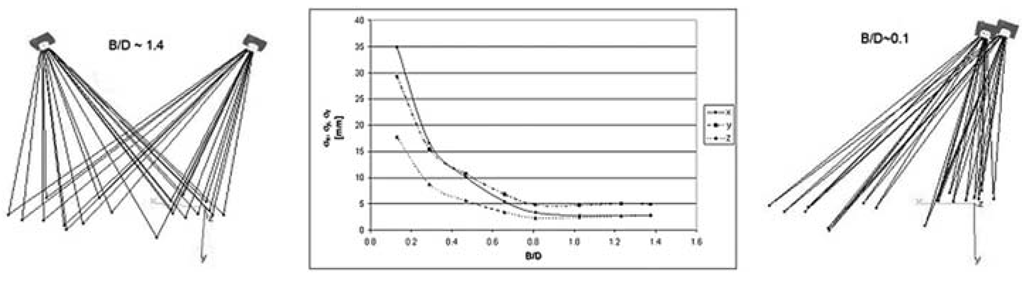

FIGURE 5.6

Behavior of the accuracy of the computed object coordinates as the B/D ratio between a pair of images varies.

Camera calibration and image orientation can be performed fully automatically by means of coded targets, which are automatically recognized in the images and used as tie points in the subsequent bundle adjustment. If no targets are placed in the scene, the tie points are generally measured manually, since automated markerless image orientation is a rather complex task, in particular for convergent terrestrial images. Automatic Aerial Triangulation (AAT) has reached a significant level of maturity and reliability, as demonstrated by the numerous commercial software packages available on the market. In terrestrial photogrammetry, on the other hand, commercial solutions for the automated orientation of markerless image sets are still lacking, and the topic remains an open research issue [71]. Few commercial packages are available to automatically orient video sequences, which are in any case generally of very low geometric resolution and therefore not really useful for detailed 3D modeling projects.

As previously mentioned, the photogrammetric reconstruction method relies on a minimum of two images of the same object acquired from different viewpoints. Defining B as the baseline between the two images and D as the average camera-object distance, a reasonable B/D (base-to-depth) ratio between the images should ensure a strong geometric configuration and a reconstruction that is less sensitive to noise and measurement errors. In terrestrial photogrammetry, a typical B/D ratio should be around 0.5–0.75, even if in practical situations this requirement is often very difficult to fulfill. Generally, the larger the baseline, the better the accuracy of the computed object coordinates, although large baselines make it harder to automatically find correspondences between the images, due to strong perspective effects. The influence of increasing the baseline between the images is shown in Figure 5.6, which reports the theoretical precision of the computed object coordinates as a function of the B/D ratio. Small baselines clearly have a negative influence on the accuracy of the computed 3D coordinates, while larger baselines help to derive more accurate 3D information. In general, the accuracy of the computed object coordinates (σXYZ) depends on the image measurement precision (σxy), the image scale and geometry (e.g., the scale number S, computed as the mean object distance divided by the camera focal length), an empirical factor q, and the number of exposures k [72]:

$$\sigma_{XYZ} = \frac{q \, S \, \sigma_{xy}}{\sqrt{k}}$$
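
The precision estimate σXYZ = q·S·σxy/√k can be evaluated directly for network planning. The sketch below is a minimal illustration; the function name is hypothetical, and the numbers in the usage line (1 µm image precision, 5 m distance, 20 mm lens, q = 0.7, four exposures) are assumptions chosen only to show the arithmetic, not recommended values.

```python
import math


def object_point_precision(sigma_xy, mean_distance, focal_length, q, k):
    """Estimate sigma_XYZ = q * S * sigma_xy / sqrt(k), with scale number S = D / c.

    sigma_xy is in image units (e.g., mm); mean_distance and focal_length must share
    a unit; the result is in the same unit as sigma_xy, scaled to object space."""
    scale_number = mean_distance / focal_length
    return q * scale_number * sigma_xy / math.sqrt(k)


# Hypothetical design: 1 micrometer image precision, D = 5000 mm, c = 20 mm, q = 0.7, k = 4
sigma = object_point_precision(0.001, 5000.0, 20.0, 0.7, 4)  # about 0.0875 mm
```

Because k enters as 1/√k, doubling the number of exposures from 4 to 8 reduces σXYZ only by a factor of √2, which is consistent with the diminishing returns of measuring a point in many images.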

Summarizing the calibration and orientation phase, and as also stated in several studies concerning terrestrial applications in particular [66, 72-74], we can affirm that:

• the accuracy of an image network increases with the increase of the base-to-depth (B/D) ratio and using convergent images rather than images with parallel optical axes;

• the accuracy improves significantly with the number of images in which a point appears, but measuring the point in more than four images yields a less significant improvement;

• the accuracy increases with the number of measured points per image, but the increase is not significant if the geometric configuration is strong and the measured points are well defined (like targets) and well distributed in the image;

• the image resolution (number of pixels) influences the accuracy of the computed object coordinates: on natural features, the accuracy improves significantly with the image resolution, while the improvement is less significant on well-defined, large, well-resolved targets;

• self-calibration (with or without known control points) is reliable only when the geometric configuration is favorable, mainly highly convergent images of a large number of (3D) targets well-distributed spatially and throughout the image format;

• a flat (2D) test field can be employed for camera calibration if the images are acquired at many different distances from the test field, to allow the recovery of the correct focal length;

• at least 2-3 images should be rotated by 90 degrees to allow the recovery of the principal point, i.e., to break any projective coupling between the principal point offset and the camera station coordinates, and to provide a different variation of scale within the image;

• a complete camera calibration should be performed, in particular for the lens distortions. In most cases, particularly with modern digital cameras and unedited images, the camera focal length can be found, albeit with lower accuracy, in the header of the digital images. This value can be used for uncalibrated cameras if self-calibration is not possible or is unreliable;

• if the image network is geometrically weak, correlations may lead to instabilities in the least-squares estimation. The use of inappropriate APs can also weaken the bundle adjustment solution, leading to over-parameterization, in particular in the case of minimally constrained adjustments.

The mathematical model and collinearity principle described above are valid for frame cameras, while for linear array sensors a different mathematical model should be employed [75]. Empirical models based on simple affine, projective, or DLT transformations have also been proposed, finding their main application in the processing of high-resolution satellite imagery [76].
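
As an example of such an empirical model, the 11-parameter DLT maps object to image coordinates as x = (L1X + L2Y + L3Z + L4) / (L9X + L10Y + L11Z + 1), and analogously for y with L5 to L8. Multiplying out the denominator makes each control point contribute two equations that are linear in L1 to L11. The minimal pure-Python sketch below solves them through the normal equations; the function names are hypothetical, and a production implementation would normalize the coordinates and use a QR or SVD solver rather than normal equations.

```python
def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))  # largest pivot first
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= f * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def dlt_project(L, X, Y, Z):
    """Image coordinates of (X, Y, Z) from the DLT parameters L[0]..L[10] (L1..L11)."""
    den = L[8] * X + L[9] * Y + L[10] * Z + 1.0
    x = (L[0] * X + L[1] * Y + L[2] * Z + L[3]) / den
    y = (L[4] * X + L[5] * Y + L[6] * Z + L[7]) / den
    return x, y


def dlt_estimate(obj_pts, img_pts):
    """Linear least-squares estimate of the 11 DLT parameters.

    Needs at least 6 well-distributed, non-coplanar control points."""
    rows, rhs = [], []
    for (X, Y, Z), (x, y) in zip(obj_pts, img_pts):
        # L1 X + L2 Y + L3 Z + L4 - x (L9 X + L10 Y + L11 Z) = x
        rows.append([X, Y, Z, 1.0, 0.0, 0.0, 0.0, 0.0, -x * X, -x * Y, -x * Z])
        rhs.append(x)
        # L5 X + L6 Y + L7 Z + L8 - y (L9 X + L10 Y + L11 Z) = y
        rows.append([0.0, 0.0, 0.0, 0.0, X, Y, Z, 1.0, -y * X, -y * Y, -y * Z])
        rhs.append(y)
    n = 11
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    atb = [sum(r[i] * bi for r, bi in zip(rows, rhs)) for i in range(n)]
    return solve_linear(ata, atb)
```

Unlike the collinearity model, the DLT does not separate IO from EO and needs no initial approximations, which is what makes it attractive for quick processing, at the cost of more control points and weaker physical interpretability.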
