Introduction

The environment and its heritage sites and objects suffer from wars, natural disasters, weather changes, and human negligence. According to UNESCO [1], “a heritage can be seen as an arch between what we inherit and what we leave behind … Heritage is our legacy from the past, what we live with today and what we pass on to future generations.” The importance of cultural heritage documentation is therefore well recognized, and there is increasing pressure to document and preserve heritage digitally as well. 3D data have thus become a critical component for permanently recording the shapes of important objects so that they can be passed down to future generations. The continuous development of new sensors, data capture methodologies, and multi-resolution 3D representations, together with the improvement of existing ones, is contributing significantly to the documentation, conservation, and presentation of heritage information and to the growth of research in the cultural heritage field. This growth is also driven by the increasing demand for the digital documentation of heritage sites at different scales and resolutions, both for the general mapping and digital preservation of our environment and for studies, analysis, interpretation, etc.

In the last few years, great efforts have been focused on what we inherit as cultural heritage and on its documentation, in particular for visual man-made or natural heritage, which has received a lot of attention and benefited from advances in sensors and imaging. This has, firstly, produced a large number of projects, mainly led by research groups, which have delivered complete digital models of very good quality [2-9], and, secondly, spurred the creation of guidelines describing standards for correct and complete documentation. Current technologies and methodologies for cultural heritage documentation [10] allow the generation of very realistic 3D results (in terms of geometry and texture). Photogrammetry [11,12] and laser scanning [13,14] are the most widely used techniques for applications such as accurate surveying, archaeological documentation, digital conservation or restoration, VR/CG, GIS, 3D repositories and catalogs, web geographic systems, visualization, and animation. Yet despite all these possible applications and the constant pressure of international organizations, a systematic and well-judged use of 3D models is still not the default approach in the cultural heritage field, for several reasons: (1) the “high cost” of 3D; (2) the difficulty for non-specialists of producing good 3D models; (3) the view that 3D is an optional step of interpretation (an additional “aesthetic” factor) and of documentation (2D is enough); (4) the difficulty of integrating 3D worlds with other, more standard 2D material. However, the availability and use of 3D computer models of heritage opens a wide spectrum of further applications and enables new analyses, studies, interpretations, and conservation policies, as well as digital preservation and restoration.
Virtual heritage should therefore be used more and more frequently, given the great advantages that digital technologies bring to the heritage world and the documentation needs stated in the numerous charters and resolutions.

This topic reviews some important 3D documentation requirements and specifications, as well as current surveying and 3D modeling methodologies, and then provides an in-depth look at the photogrammetric approach for the accurate and detailed reconstruction of objects and sites. The topic is organized as follows: the next section reviews the issues related to reality-based methods for surveying and modeling large and complex sites, together with current digital techniques and sensors; Section 3 describes the photogrammetric approach, covering all the steps of the 3D documentation pipeline and the open research issues in image-based modeling. Finally, some concluding remarks end the topic.

Reality-Based 3D Modeling

Metric 3D documentation and modeling of scenes or objects should be understood as the entire procedure that starts with surveying and data acquisition and continues with data processing, 3D information generation, visualization, and preservation for further uses such as conservation, education, restoration, and GIS applications. A technique is understood as a scientific procedure (e.g., image processing) for accomplishing a specific task, while a methodology is a group or combination of techniques and activities combined to achieve a particular task.

Reality-based techniques (e.g., photogrammetry and laser scanning) [15] employ hardware and software to survey reality as it is, documenting the actual visible state of the site. Non-reality-based approaches instead rely on computer graphics software (3D Studio, Maya, SketchUp, etc.) or procedural modeling [16], and they allow 3D data to be generated without any particular survey or knowledge of the site. Nowadays the digital documentation and 3D modeling of cultural heritage are mostly based on reality-based techniques and methodologies and generally consist of [128]:

• metric recording and processing of a large amount of three- (possibly four-) dimensional, multi-source, multi-resolution, and multi-content information;

• management and conservation of the achieved 3D (4D) models for further applications;

• visualization and presentation of the results to share the information with other users;

• digital inventories and sharing for data retrieval, education, research, conservation, entertainment, walkthrough, or tourism purposes.

In most of the practical applications, the generation of digital 3D models of heritage objects or sites for documentation and conservation purposes requires a technique or a methodology with the following properties:

• accuracy: precision and reliability are two important factors of the surveying work and of the final 3D results, unless the work is done only for simple and quick visualization;

• portability: the technique should be as portable as possible due to accessibility problems, absence of electricity, location constraints, etc.;

• low cost: most surveying missions have limited budgets and cannot afford expensive documentation instruments;

• fast acquisition: most sites or excavation areas allow only a limited time for 3D recording and documentation, so as not to disturb restoration works or visitors;

• flexibility: due to the great variety and dimensions of sites and objects, the technique should allow different scales and it should be applicable in any possible condition.
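The accuracy requirement above is often verified by comparing a 3D result against independent check points measured with a more accurate technique (e.g., a total station). The following Python sketch is only an illustration, not a procedure prescribed by the text: it computes per-axis and 3D root mean square errors (RMSE). The function name `checkpoint_rmse` and the sample coordinates are hypothetical.

```python
import math

def checkpoint_rmse(measured, reference):
    """Per-axis and 3D RMSE between measured and reference check points.

    measured, reference: lists of (X, Y, Z) tuples in the same units and
    reference system, where measured[i] corresponds to reference[i].
    """
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty lists of matching check points")
    n = len(measured)
    sq = [0.0, 0.0, 0.0]  # summed squared residuals per axis
    for m, r in zip(measured, reference):
        for axis in range(3):
            sq[axis] += (m[axis] - r[axis]) ** 2
    rmse_xyz = tuple(math.sqrt(s / n) for s in sq)
    rmse_3d = math.sqrt(sum(sq) / n)  # RMSE of the 3D residual vectors
    return rmse_xyz, rmse_3d

# Hypothetical check points in metres: 3D model vs. total-station reference
model = [(10.002, 5.001, 2.000), (12.000, 7.998, 2.003)]
ref = [(10.000, 5.000, 2.000), (12.000, 8.000, 2.000)]
xyz, d3 = checkpoint_rmse(model, ref)
print(xyz, d3)
```

Check points should not take part in the orientation or registration itself; otherwise the RMSE reflects the fit rather than the achieved accuracy.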

No single technique usually offers all these properties, so most surveying projects for large and complex sites integrate and combine multiple sensors and techniques. Nevertheless, the image-based approach has several advantages over the range-based one and is often selected, although it sometimes requires user interaction and some experience in both data acquisition and processing.

Techniques and Methodologies

The generation of reality-based 3D models of large and complex sites or structures is nowadays performed using methodologies based on passive sensors and image data [17], optical active sensors and range data [14,18,19], classical surveying (e.g., total stations or GNSS), or an integration of these techniques [20-24]. In the architecture, engineering, and construction community, geometric 3D models can also be generated interactively from existing 2D drawings or maps using extrusion functions [25]. The choice or integration depends on the required accuracy, site and object dimensions, location constraints, the instrument's portability and usability, surface characteristics, the working team's experience, the project's budget, the final goal, etc.

Optical active sensors such as laser scanners (pulsed, phase-shift, or triangulation-based instruments) and stripe projection systems have received great attention in recent years for 3D documentation and modeling applications. They directly deliver ranges (i.e., distances, and thus 3D information in the form of unstructured point clouds) and are becoming quite common in the heritage field, despite their high cost, their weight, and the usual lack of good texture. During the survey, the instrument must be placed in different locations, or the object moved, so that the object is seen from different viewpoints. Subsequently, the raw data require error and outlier removal, noise reduction, and sometimes hole filling before alignment (registration) in a single reference system, which produces a single point cloud of the surveyed scene or object. Registration is generally done in two steps: (1) manual or automatic coarse alignment using targets or the data itself and (2) final global alignment based on iterative closest points [26] or least squares procedures [27]. After the global alignment, redundant points should be removed before a surface model is produced and textured.
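The fine-registration step based on iterative closest points [26] can be illustrated with a deliberately simplified 2D point-to-point ICP in plain Python. This is a minimal sketch under stated assumptions: brute-force nearest neighbours, a closed-form 2D rigid (rotation plus translation) solution at each iteration, no outlier rejection, and a coarse pre-alignment already good enough for correct matches. Real scanner pipelines work in 3D with spatial indexing and robust weighting; all function names here are hypothetical.

```python
import math

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst."""
    n = len(src)
    csx, csy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cdx, cdy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - csx, sy - csy  # centred source point
        bx, by = dx - cdx, dy - cdy  # centred destination point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # maximises the sum of b . R(theta) a
    c, s = math.cos(theta), math.sin(theta)
    # translation = destination centroid - R * source centroid
    return theta, (cdx - (c * csx - s * csy), cdy - (s * csx + c * csy))

def transform(theta, t, p):
    """Apply rotation theta and translation t to a 2D point p."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + t[0], s * p[0] + c * p[1] + t[1])

def icp_2d(source, target, max_iter=50, tol=1e-12):
    """Align source to target; return (theta, t, mean squared distance)."""
    current = list(source)
    theta_acc, t_acc = 0.0, (0.0, 0.0)
    prev_err = err = float("inf")
    for _ in range(max_iter):
        # 1) correspondences: closest target point for every current point
        matched = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        # 2) best rigid motion for these correspondences, applied to the points
        theta, t = best_rigid_2d(current, matched)
        current = [transform(theta, t, p) for p in current]
        # 3) compose with the accumulated transform: R_k (R_acc p + t_acc) + t_k
        theta_acc += theta
        t_acc = transform(theta, t, t_acc)
        # 4) stop when the mean squared closest-point distance stops improving
        err = sum(min((q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2 for q in target)
                  for p in current) / len(current)
        if prev_err - err < tol:
            break
        prev_err = err
    return theta_acc, t_acc, err

# Hypothetical scan: the target shape rotated by 0.05 rad and shifted
target = [(0, 0), (1, 0), (2, 0), (3, 0), (0, 1), (0, 2), (1, 2)]
source = [transform(0.05, (0.1, -0.05), p) for p in target]
theta, t, err = icp_2d(source, target)
print(theta, t, err)
```

Because correspondences are re-estimated at every iteration, ICP only converges to the correct solution when the coarse alignment of step (1) is already close, which is why the two-step procedure is used.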

According to [28], the scanning results are a function of:

• intrinsic characteristics of the instrument (calibration, measurement principle, etc.);

• characteristics of the scanned material in terms of reflection, light diffusion, and absorption (amplitude response);

• characteristics of the working environment;

• coherence of the backscattered light (phase randomization);

• dependence on the chromatic content of the scanned material (frequency response).

Terrestrial range sensors work from very short ranges (a few centimeters) up to a few kilometers, depending on surface properties and environmental characteristics, and deliver 3D data with positioning accuracy from a few hundred microns up to a few millimeters. Range sensors coupled with GNSS/INS sensors can also be used on aerial platforms [29,30], generally for DTM/DSM generation, city modeling, and archaeological applications. Despite the potential of the image-based approach and its recent developments in automated and dense image matching [31-35], optical active sensors are generally much easier to use and more reliable for acquiring unstructured, dense point clouds. This has made active sensors such as laser scanners a very common 3D recording solution, in particular for non-expert users. Active sensors also include radar instruments, which are generally not considered optical sensors but are nevertheless often used in cultural heritage 3D documentation and environmental mapping.
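The working ranges above partly depend on the measurement principle of the pulsed and phase-shift instruments mentioned earlier. The sketch below illustrates the two basic range equations; it assumes the vacuum speed of light and ignores atmospheric refraction, instrument offsets, and calibration, and the function names are hypothetical.

```python
import math

C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s (refraction ignored)

def pulsed_range(round_trip_time_s):
    """Pulsed time-of-flight range: the pulse travels to the surface and back."""
    return C_VACUUM * round_trip_time_s / 2.0

def phase_shift_range(delta_phi_rad, modulation_freq_hz, n_cycles=0):
    """Phase-shift range R = (lambda / 2) * (N + delta_phi / (2 pi)).

    N (whole modulation cycles) is not observable with a single frequency,
    so with n_cycles=0 the result is valid only within the unambiguous range.
    """
    wavelength = C_VACUUM / modulation_freq_hz
    return wavelength / 2.0 * (n_cycles + delta_phi_rad / (2.0 * math.pi))

def unambiguous_range(modulation_freq_hz):
    """Largest range a single modulation frequency can measure without ambiguity."""
    return C_VACUUM / (2.0 * modulation_freq_hz)

print(pulsed_range(2e-8))                # 20 ns round trip
print(phase_shift_range(math.pi, 10e6))  # half a cycle at 10 MHz
print(unambiguous_range(10e6))
```

A single modulation frequency trades range for resolution: a lower frequency extends the unambiguous range but coarsens the phase-to-distance scale, which is why phase-shift instruments typically combine several frequencies.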

Image data, on the other hand, require a mathematical formulation to transform two-dimensional image measurements into three-dimensional coordinates. Image-based modeling techniques (mainly photogrammetry and computer vision) are generally preferred for lost objects, monuments, or architecture with regular geometric shapes, low-budget projects, experienced working teams, or time or location constraints on data acquisition and processing. Moreover, images contain all the information needed to derive the 3D shape of the surveyed scene as well as its graphical appearance (texture). Image-based 3D modeling generally requires some user interaction in the different steps of the modeling pipeline to derive accurate results, which limits its use to experts, while fully automated approaches are available for quick 3D results, useful mainly for simple visualization [36]. Generally at least two images are necessary to derive 3D information of corresponding image points, but there are also some methods that derive 3D data from a single image [37,38].

Many works have reported that the photogrammetric approach generally allows surveys at different levels and for all possible combinations of object complexity, with high quality requirements, easy usage and manipulation of the final products, few time restrictions, good flexibility, and low costs [13,39,40]. Several comparisons between photogrammetry and laser scanning have also been presented in the literature [41-43], although it cannot be stated a priori which technique is best. For the surveying and 3D modeling of large sites, the best solution is the integration of multiple sensors and techniques.

Multi-Sensor and Multi-Source Data Integration

Nowadays the state-of-the-art approach for the 3D documentation and modeling of large and complex sites uses and integrates multiple sensors and technologies (photogrammetry, laser scanning, topographic surveying, etc.) to (1) exploit the intrinsic potential and advantages of each technique, (2) compensate for the individual weaknesses of each method, (3) derive different geometric levels of detail (LOD) of the scene under investigation, and (4) achieve more accurate and complete geometric surveying for modeling, interpretation, representation, and digital conservation. 3D modeling based on multi-scale data and multi-sensor integration indeed provides the best 3D results in terms of appearance and geometric detail, since each LOD shows only the necessary information while each technique is used where it is best suited.

Since the 1990s, multiple data sources have been integrated for industrial, military, and mobile mapping applications. Sensor and data fusion were later applied in the cultural heritage domain as well, mainly at the terrestrial level [4,20], but in some cases also combining satellite, aerial, and ground information for a more complete and multi-resolution 3D survey [5,23,44].

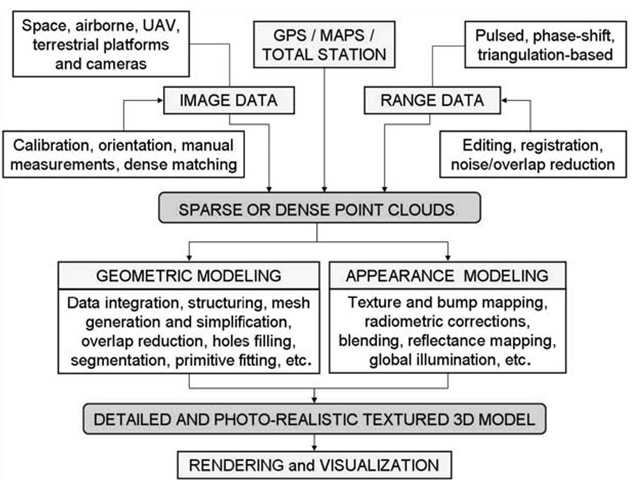

Within the multi-sensor and multi-resolution concept (Figure 5.1), a distinction should be made between

(1) geometric modeling (3D shape acquisition, registration, and further processing) where multiple resolutions and sensors are seamlessly combined to model features with the most adequate sampling step and derive different geometric levels of detail (LOD) of the scene under investigation and

(2) appearance modeling (texturing, blending, simplification, and rendering) where photo-realistic representations are sought, taking into consideration variations in lighting, surface specularity, seamless blending of the textures, user’s viewpoint, simplification, and LOD.

FIGURE 5.1

The multi-sensor and multi-resolution 3D modeling pipeline based on passive and optical active sensors for the generation of point clouds and textured 3D models.

Besides images acquired in the visible part of the spectrum, it is often necessary to acquire extra information from other sensors working in different spectral bands (e.g., IR, UV) in order to study the object more closely. Thermal infrared information is useful for analyzing historical buildings and their state of conservation and for revealing padding, older layers, and the back structure of frescoes, while near IR is used to study paintings, revealing pentimenti and preparatory drawings. Ultraviolet (UV) radiation, in turn, is very useful in heritage studies for identifying different varnishes and over-paintings, in particular with induced visible fluorescence imaging systems [45]. All of this multi-modal information needs to be aligned with, and often overlaid on, the geometric data for information fusion, multi-spectral analysis, or other diagnostic applications [46].

Standards in Digital 3D Documentation

“It is essential that the principles guiding the preservation and restoration of ancient buildings should be agreed and be laid down on an international basis, with each country being responsible for applying the plan within the framework of its own culture and traditions” (The Venice Charter, i.e., The International Charter for the Conservation and Restoration of Monuments and Sites, 1964). Even though this was stated more than 40 years ago, the need for a clear, rational, standardized terminology and methodology, as well as for accepted professional principles and techniques for interpretation, presentation, and digital documentation, is still evident. “In the face of the current digital divide, it is necessary to reinforce international cooperation and solidarity to enable all countries to ensure creation, dissemination, preservation and continued accessibility of their digital heritage” (The UNESCO Charter on the Preservation of the Digital Heritage, 2003). Therefore, besides computer recording and modeling, our heritage requires more international collaboration and information sharing to preserve it digitally and make it accessible in all possible forms and to all possible users and clients.

From a more technical point of view, many image-based modeling packages as well as range-based systems have appeared on the market in recent decades for the digital documentation and 3D modeling of objects or scenes. Many new users are approaching these methodologies, and those who are not really familiar with them need clear statements and information to know whether a package or system satisfies certain requirements before investing. Technical standards for the 3D imaging field must therefore be created, like those available for traditional surveying or coordinate measuring machines (CMM). Besides standards, comparative data and best practices are also needed to show not only the advantages but also the limitations of systems and software. In this respect, the German guideline VDI/VDE 2634 contains acceptance testing and monitoring procedures for evaluating the accuracy of close-range optical 3D vision systems (particularly for full-frame range cameras and single scans). The American Society for Testing and Materials (ASTM), through its E57 standards committee, is working to develop standards for 3D imaging systems for applications such as surveying, preservation, and construction. The International Association for Pattern Recognition (IAPR) created Technical Committee 19 (Computer Vision for Cultural Heritage Applications) with the goal of promoting computer vision applications in cultural heritage and their integration in all aspects of IAPR activities. TC19 aims to stimulate the development of components (both hardware and software) that can be used by cultural heritage researchers, such as archaeologists, art historians, and curators, and by institutions such as universities, museums, and research organizations.
As far as the presentation and visualization of the achieved 3D models is concerned, the London Charter (http://www.londoncharter.org/) is seeking to define the basic objectives and principles for the use of 3D visualization methods in relation to intellectual integrity, reliability, transparency, documentation, standards, sustainability, and access of cultural heritage.

The Open Geospatial Consortium (OGC) developed GML3 (Geography Markup Language version 3), an extensible international standard for spatial data exchange. GML3 and other OGC standards (mainly the OpenGIS Web Feature Service (WFS) Specification) provide a framework for exchanging simple and complex 3D models. CityGML, an open data model and XML-based format for storing, exchanging, and representing 3D urban objects, and in particular virtual city models, was created on the basis of GML3.

Photogrammetry

Photogrammetry [11,47,48] is the science of obtaining accurate, metric, and semantic information from photographs (images). Photogrammetry turns 2D image data into 3D data such as digital models by rigorously establishing the geometric relationship between the image and the object as it existed at the time of the imaging event. Once this relationship, described by the collinearity principle (Figure 5.2), is correctly recovered, accurate 3D information about a scene can be rigorously derived from its imagery. Photogrammetry can be performed with underwater, terrestrial, aerial, or satellite imaging sensors. The term Remote Sensing [49] is generally more associated with satellite imagery and its use for land classification and analysis or change detection.
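The text does not state the collinearity equations explicitly. In one common photogrammetric notation, adopted here as an assumption since sign conventions vary between textbooks, an object point (X, Y, Z) is linked to its image point (x, y) through the projection center (X_0, Y_0, Z_0), the elements r_ij of the rotation matrix R between the image and object systems, the principal distance c, the principal point (x_0, y_0), and the corrections Δx, Δy that camera calibration models as departures from ideal collinearity:

```latex
\begin{aligned}
x &= x_0 - c\,\frac{r_{11}(X - X_0) + r_{21}(Y - Y_0) + r_{31}(Z - Z_0)}{r_{13}(X - X_0) + r_{23}(Y - Y_0) + r_{33}(Z - Z_0)} + \Delta x \\
y &= y_0 - c\,\frac{r_{12}(X - X_0) + r_{22}(Y - Y_0) + r_{32}(Z - Z_0)}{r_{13}(X - X_0) + r_{23}(Y - Y_0) + r_{33}(Z - Z_0)} + \Delta y
\end{aligned}
```

Each measured image point yields two such equations; a bundle adjustment solves the equations of all images simultaneously, in a least-squares sense, for the unknown orientation parameters and object coordinates.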

The photogrammetric method generally employs a minimum of two images of the same static scene or object acquired from different points of view. Similar to human vision, if an object is seen in at least two images, the different relative positions of the object in the images (the so-called parallaxes) allow a stereoscopic view and the derivation of 3D information for the scene seen in the overlapping area of the images. Photogrammetry can nevertheless also be applied using a single image (e.g., monoplotting, ortho-rectification), provided that additional object information is available, as well as with two images (stereo) or multiple images (block adjustment).

FIGURE 5.2

Collinearity principle (a): the camera projection center, the image point, and the object point P lie on a straight line. The departure from the theoretical collinearity principle is modeled with the camera calibration procedure. Photogrammetric image triangulation principle or bundle adjustment (b): the image coordinates of homologous points measured in different images are simultaneously used to calculate their 3D object coordinates by intersecting all the collinearity rays.

Photogrammetry is used in many fields, from traditional mapping and 3D city modeling to the video game industry, from industrial inspection to movie production, and from heritage documentation to the medical field. Photogrammetry was traditionally considered a manual and time-consuming procedure, but in the last decade many developments have greatly improved the performance of the technique, and many semi- or fully automated commercial procedures are now available (to mention just some packages: Australis, ImageModeler, iWitness, PhotoModeler, and ShapeCapture for terrestrial photogrammetry; PCI Geomatica, ERDAS LPS, BAE Systems SOCET SET, and Z/I ImageStation mainly for satellite and aerial photogrammetry). If the goal is the recovery of a complete, detailed, precise, and reliable model, some user interaction in the photogrammetric modeling pipeline is acceptable. If the purpose is just the recovery of a 3D model usable for simple visualization or virtual reality applications, fully automated procedures can also be adopted. In the case of heritage sites and objects, the advantages of photogrammetry become readily evident: (1) images contain all the information required for 3D modeling and accurate documentation (geometry and texture); (2) taking images of an object is usually fast and easy; (3) image measurements avoid the potential damage caused by contact surveying; (4) an object can be reconstructed from available or archived images even if it has disappeared or considerably changed [50,51]; (5) photogrammetric instruments (cameras and software) are generally cheap, very portable, and easy to use, with very high accuracy potential; (6) recent developments in automated surface measurement from images have delivered results very similar to those achieved with range sensors [33].
Nevertheless, the integration of the photogrammetric approach with other measurement techniques (such as laser scanning) should not be neglected: their combination has so far led to good documentation results, as it exploits the inherent strengths of both approaches [4,9,20,44]. Compared to other techniques that also use images to derive 3D information (such as computer vision, shape from shading, and shape from texture), photogrammetry does not aim at full automation of the procedures; its primary goal has always been the recovery of metric and accurate results. The best-known sister technique of photogrammetry is computer vision, which aims at deriving 3D information from images in a fully automated way using a projective geometry approach. The typical vision pipeline for scene modeling is called Structure from Motion [32,36,52-54], and it is becoming quite common in the 3D heritage community, mainly for visualization and VR applications, as it is not yet accurate enough for the routine documentation and recording of large and complex sites.

FIGURE 5.3

Photogrammetric 3D modeling pipeline: from the image data acquisition to the generation of the final textured 3D model. According to the application and type of scene or object, sparse or dense point clouds are generated using interactive or automated procedures.

The entire photogrammetric workflow (Figure 5.3) used to derive metric and accurate 3D information of a scene from a set of images consists of:

• camera calibration and image orientation,

• 3D measurements,

• structuring and modeling,

• texture mapping and visualization.
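The camera calibration step models the departures from collinearity mentioned in the caption of Figure 5.2. A widely used choice is Brown's model with symmetric radial (k1, k2, k3) and decentering (p1, p2) terms. The sketch below follows the sign convention used, for example, by OpenCV; this is an assumption, since photogrammetric packages differ in sign conventions and in how coordinates are normalized. It returns the distortion displacement of an image point already reduced to the principal point; the function name and coefficient values are hypothetical.

```python
def brown_distortion(x, y, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Displacement (dx, dy) of an ideal image point due to lens distortion.

    x, y are reduced to the principal point; the distorted point is (x + dx, y + dy).
    """
    r2 = x * x + y * y
    radial = k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3            # symmetric radial part
    dx = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)  # plus decentering part
    dy = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return dx, dy

# Hypothetical coefficients for image coordinates in millimetres
dx, dy = brown_distortion(10.0, 5.0, k1=-2e-4, p1=1e-5)
print(dx, dy)
```

In a self-calibrating bundle adjustment these coefficients are estimated together with the other unknowns, so the same functional form reappears inside the correction terms of the collinearity condition.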

Compared to the workflow of range sensors (e.g., laser scanners), the main difference lies in how the 3D point cloud is derived: range sensors directly deliver 3D data, while photogrammetry requires the mathematical processing of the image data (through calibration, orientation, and further measurement procedures) to derive the sparse or dense point clouds needed to digitally reconstruct a scene or an object.
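Deriving object points from oriented images amounts to intersecting homologous rays. A rigorous photogrammetric solution uses least-squares forward intersection or bundle adjustment; the simpler midpoint method below is a swapped-in illustration that returns the point halfway between two rays at their closest approach, together with the gap between them as a rough consistency check. It assumes the projection centers and ray directions are already known from calibration and orientation (for example, by rotating the image vector (x − x0, y − y0, −c) into the object system); the function names are hypothetical.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def midpoint_triangulation(c1, d1, c2, d2):
    """Closest-approach midpoint of rays c1 + s*d1 and c2 + t*d2 (3D tuples)."""
    w0 = [a - b for a, b in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12 * a * c:
        raise ValueError("rays are (nearly) parallel: no reliable intersection")
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    point = tuple((u + v) / 2 for u, v in zip(p1, p2))
    gap = math.dist(p1, p2)  # zero for perfectly intersecting rays
    return point, gap

# Hypothetical projection centres 1 m apart, both rays aimed at (2, 1, 10)
point, gap = midpoint_triangulation((0, 0, 0), (2, 1, 10), (1, 0, 0), (1, 1, 10))
print(point, gap)
```

With real measurements the rays never intersect exactly; a large gap signals a wrong correspondence or a poor orientation, which a least-squares adjustment would reveal through its residuals.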

In some cases, 3D information can also be derived from a single image using object constraints [38,55,56] or by estimating surface normals instead of using image correspondences (shape from shading [37], shape from texture [57], shape from specularity [58], shape from contour [59], shape from 2D edge gradients [60]).
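A common single-image case with object constraints is the rectification of a planar surface, such as a facade, when at least four points with known plane coordinates are visible. Assuming a planar object and distortion-free image coordinates, the image-to-plane mapping is a projective transformation (homography) with eight parameters, which four point pairs (no three collinear) determine exactly. The plain-Python sketch below solves that 8×8 linear system by Gaussian elimination; function names and sample coordinates are hypothetical, and with more than four points a least-squares solution would be preferred.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system (e.g. three collinear points)")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def homography_4pt(img_pts, obj_pts):
    """8-parameter projective transformation mapping image (x, y) to plane (X, Y)."""
    A, b = [], []
    for (x, y), (X, Y) in zip(img_pts, obj_pts):
        # X * (h7 x + h8 y + 1) = h1 x + h2 y + h3, and likewise for Y
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -X * x, -X * y])
        b.append(X)
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -Y * x, -Y * y])
        b.append(Y)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def rectify(H, x, y):
    """Map an image point onto the object plane."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

# Hypothetical: four facade corners in the image (pixels) and on the facade (metres)
img = [(10.0, 10.0), (110.0, 20.0), (105.0, 120.0), (5.0, 100.0)]
obj = [(0.0, 0.0), (5.0, 0.0), (5.0, 4.0), (0.0, 4.0)]
H = homography_4pt(img, obj)
print(rectify(H, 57.5, 65.0))  # an image point inside the facade
```

The result is metric only within the reference plane; points lying off the facade plane are displaced, which is why true ortho-rectification of non-planar objects requires a surface model.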