Abstract

It has been demonstrated that rating trust and reputation of individual nodes is an effective approach in distributed environments in order to improve security, support decision-making and promote node collaboration. Nevertheless, these systems are vulnerable to deliberate false or unfair testimonies. In one scenario the attackers collude to give negative feedback on the victim in order to lower or destroy its reputation. This attack is known as bad mouthing attack, and it can significantly deteriorate the performances of the network. The existing solutions for coping with bad mouthing are mainly concentrated on prevention techniques. In this work we propose a solution that detects and isolates the abovementioned attackers, impeding them in this way to further spread their malicious activity. The approach is based on detecting outliers using clustering, in this case self-organizing maps. An important advantage of this approach is that we have no restrictions on training data, and thus there is no need for any data pre-processing. Testing results demonstrates the capability of the approach in detecting bad mouthing attack in various scenarios.

Keywords: reputation systems, bad mouthing detection, self-organizing maps.

Introduction

Trust and reputation have recently been suggested as an effective security mechanism for open and distributed environments (Ad Hoc networks [2], WSNs [1, 2], P2P networks [3], etc.). In essence, the nodes that do not behave properly (according to the established policy of "proper" behavior) will have low reputation, so the rest of the nodes will avoid any collaboration with them, which is equivalent to its isolation from the network. Extensive research has been done on modeling and managing trust and reputation, and it has been demonstrated that rating trust and reputation of individual nodes is an effective approach in distributed environments in order to improve security, support decision-making and promote node collaboration.

There are many different definitions of trust and reputation, but in essence trust is a belief about future behavior that one node holds in others and it is based on its own experience, thus its main characteristic is subjectivity. On the other hand, reputation is considered to be the global perception of the behavior of a node based on the trust that others hold in it, thus considered to be objective [2]. The common way of defining a trust value is by using policies or credentials, so a node that behaves according to the established policy system will have high reputation and vice versa.

Alternatives to reputation systems can be incentive systems [4], where it is advantageous for the nodes to act in a way that the resulting global welfare is optimal. In these systems the nodes receive some sort of payment if they behave properly. Rational peers have no reason (in other words, incentive) to deviate from the equilibrium behavior. Different variations of reputation systems have been developed for various purposes. The distinguishing characteristics of all of them are the following: representation of information and classification, use of second-hand information, definition of trust and redemption and secondary response.

Among the principal threats on reputation systems we can include colluding attacks. Here we distinguish two opposite scenarios: ballot stuffing, where a number of entities agree to give positive feedback on an entity (often with adversarial intentions), resulting in it quickly gaining high reputation, and the opposite case, known as bad mouthing, where the attackers collude to give negative feedback on the victim, resulting in its lowered or destroyed reputation. In this work we concentrate on detecting bad mouthing attack, although the similar principle could be applied to detect ballot stuffing as well.

Available solutions for coping with bad mouthing attack mostly rely on prevention techniques, such as cryptography. However, these methods only increase the effort the attacker has to make in order to make his attack successful. For this reason, we present a machine learning based solution for detecting the sources of the attack. In this work we are mainly concerned about the reputation systems used in distributed systems such as wireless sensor or mesh networks, where these are mainly used for isolating anomalous behavior. However, the approach can easily be adapted for the situations where the entities in the network are humans. The main idea consists in detecting great inconsistencies in appraising an entity by other entities that have been in contact with it. The inconsistencies are treated as data outliers and are being detected using self-organizing maps.

The rest of the work is organized as follows. Previous work is surveyed in Section 2. Section 3 details the principles of the proposed solution, while Section 4 provides its evaluation. Finally, conclusions are drawn in Section 5.

Previous Work

As already mentioned, the majority of the existing solutions for coping with bad mouthing attack rely on prevention techniques. A typical solution is the one given in [5] that relies on cryptography. However, with the existence of side channel attacks [6], the attacker can easily guess the secret keys and compromise the cryptography-based protocols. Another solution proposes to use "controlled anonymity" [7], where the identities of the communicating entities are not known to each other. In this way, each entity has to provide ratings based on the quality of service provided, and as they can no longer identify their "victims", bad-mouthing and negative discrimination can be avoided. However, this is not always possible, for example in the case of online hotel ratings. All these solutions in essence increase the effort the attacker needs to introduce in order to compromise the system, but it will not protect the system from all the attacks. Thus, a second line of defense that would detect the attacks and stop their further spreading is necessary.

For this reason, in the recent past solutions for detecting collusion attacks on reputation systems started to appear. Machine learning has always been an attractive solution, given that it copes well the uncertainties that exist in security. A representative solution using hierarchical clustering is given in [8]. This solution, as many others, after the training assign the clusters that contain the majority of the data as "good" clusters. However, this imposes restrictions on training data, as if the algorithm does not process the "unclean" data during the training, it will not be able to detect attacks. A solution based on graph theory is given in [9]. This solution, instead of using the count-based scheme that considers the number of accusations, uses the community-based scheme that achieves the detection of up to 90% of the attackers, which permits the correct operation of the system.

Thus, our aim is to design a detection based solution that would overcome the abovementioned issues. Namely, we want to provide a solution that would not have any restrictions regarding training data, and that would be capable of detecting up to 100% of malicious entities.

Proposed Solution

Feature Extraction and Formation of Model

For each entity, the feature vector is formed of the recommendation the others give on it. The main idea is to find inconsistencies in recommendations. In the case the reputation system considers separately different services each entity has to offer, each service is characterized and examined independently. The characterization is based on the idea of £-grams and it is performed in equidistant moments of time using the recommendations between the consecutive moments. The features are different sets of recommendations (£-grams) and their occurrence or their frequency during the characterization period. Let the recommendations issued for the node n from five different nodes during 10 sample periods be those given in Table 1.

Table 1. Example of recommendations

|

n1 |

n2 |

n3 |

n4 |

n5 |

|

|

1 |

100 |

99 |

100 |

95 |

99 |

|

2 |

100 |

99 |

100 |

95 |

99 |

|

3 |

100 |

99 |

100 |

95 |

99 |

|

4 |

98 |

99 |

98 |

98 |

99 |

|

5 |

98 |

99 |

98 |

98 |

99 |

|

6 |

98 |

99 |

98 |

98 |

99 |

|

7 |

98 |

99 |

98 |

98 |

99 |

|

8 |

95 |

95 |

97 |

97 |

08 |

|

9 |

95 |

95 |

97 |

97 |

08 |

|

10 |

95 |

95 |

97 |

97 |

08 |

In this case, the extracted £-grams, i.e. features, and their corresponding feature values are given in Table 2. From this example it is obvious that the extracted number of different £-grams does not have to be the same in all characterization period. Thus, we cannot use any of the standard distance measurements. The distance between the instances of the presented model is taken from [10]. It is designed to calculate the distance between two sequences. We have elected this one (among all given in [10]) since it is proven to be the most efficient in the terms of the absolute execution time. The deployed distance function is actually is equivalent to Manhattan distance after making the following assumption: the feature that does not exist in the first vector while exists in the second (and vice versa) actually exists with the value equal to 0, since we can say that it occurs with 0 frequency. In this way, we get two vectors of the same size and the distance between the centre and an input is between 0 (the vectors have the same features with the same feature values) and 2 (the vectors have different features with the values greater than 0). In the same way, if the set of the features of one is the subset of the feature set of the other, the distance will be between 0 and 1.

In many situations this can result in having huge number of combinations. In this case, it is necessary to apply one of the following possibilities for reducing this number. One possibility is to divide the range [0,100] into few equidistant ranges (usually three to five), and assign a unique value or meaning to all the values that belong to one range. This significantly reduces the number of possible k-grams. Another possibility is to take an average of the values that belong to a certain range.

Table 2. The characterization of the previous example

|

Features |

Occurrence |

Frequency |

|

100 99 100 95 99 |

3 |

0.3 |

|

98 99 98 98 99 |

4 |

0.4 |

|

95 95 97 97 98 |

3 |

0.3 |

Detection and Isolation of Bad Mouthing Attack

As previously mentioned we treat attacks as data outliers and deploy clustering techniques. In this work we will use the self-organizing maps (SOM) algorithm, as they are relatively fast and inexpensive when the dimensionality of the data is huge, which can happen in our case.

There are two possible approaches for detecting outliers using clustering techniques [11] depending on the following two possibilities: detecting outlying clusters or detecting outlying data that belong to non-outlying clusters. For the first case, we calculate the average distance of each node to the rest of the nodes (or its closest neighborhood) (MD). In the latter case, we calculate quantization error (QE) of each input as the distance from its group center. If we train the SOM algorithm with clean data, it is obvious that we will have the second scenario. On the other hand, if the traces of attacks existed during the training, both situations are possible. Thus, we can detect attacks in the situation we do or do not have the traces of attacks during the training, which means that we do not have any restrictions on the training data. This further means that we avoid time consuming and error prone process of pre-processing the training data.

In the first step we examine the recommendations for each node in order to find the inconsistencies. Having in mind that the attacks will often result in creating new k-grams, it is reasonable to assume that the extracted vector in the presence of attackers will not be a subset of any vector extracted in normal situation, thus the distance will never be lower than 1. Thus, the suspicious values of both QE and MD are greater than 1. Upon detecting suspicious values, we pass to the second step.

In the second step the aim is to find the origin(s) of the suspicion. First, the deviations from either the mode, median or mean values of all the recommendations assigned to the node are calculated. The rationale behind this is that the majority of the nodes will assign correct recommendations, which will result in higher deviations from each of the above values in the cases of the wrong recommendations. This information can further be used in various ways, yet we choose to couple it with the reputation system, in the way the origins of the bad mouthing will result in lowered reputation, and their recommendations will not be considered. Thus, the calculated deviations are normalized to the range [0, 1], in the way the entity that is the origin of the maximal deviation has the lowest reputation, i.e. 0, while the origin of the minimal one has the highest reputation, i.e. 1. The reputations of the rest of the nodes are linearly interpolated between 0 and 1.

Self-Organizing Maps Algorithm

The self-organizing maps (SOM) algorithm [12] follows the standard steps of SOM execution, as given in [12]. The only problem-specific point is the centre, i.e. node representation and updating. Each centre is implemented as a collection whose size can be changed on the fly and whose elements are the £-grams defined in the previous text with assigned occurrence or frequency. The adjustment of nodes (that belong to the map area to be adjusted) is performed in the following way:

• If an n-gram of the input instance v(t) exists in the node, its value) is modified according to the centre update given in [12];

• If an n-gram of the instance v(t) does not exist in the cluster centre, the n-gram is added to the centre with occurrence equal to 1.

Experimental Evaluation

The proposed algorithm has been tested on the reputation systems simulator developed by our research group and designed using the C++ programming language. The network consists of a number of distributed entities, and it can simulate a number of distributed systems, such as wireless sensor networks, wireless mesh networks, etc. The reputation can be calculated in various ways, which are the implementation of the class ReputationServer. In the testing scenario, the entities assign recommendations to their neighbors. The bad mouthing attack is initiated at a certain moment and is composed of a number of malicious nodes that falsely assign low reputation to some of their neighbors in a random fashion. The time in the simulator is measured in time ticks, where the ticks are the moments of time the recommendations are being published to the rest of the world.

In the following experiments we will present the performance of the approach in various scenarios, varying the attack strength and the starting point of the attack. There will be two typical situations: in the first case the attack will start after the end of training, so the training will be performed with "clean" data, while in the second case we will have the situations where the training data contains the traces of attacks as well. The aim of these experiments is to show that no constraints on training data exist and that the approach is capable of detecting 100% of the malicious entities even in the cases they make a significant part of the network.

The scenario is based on 50 entities that can take one of the possible 300 positions. In the experiments we fix the number of the entities that take part in the bad mouthing attack, where each entity gives false accusations about 10% of the entities in its closest neighborhood.

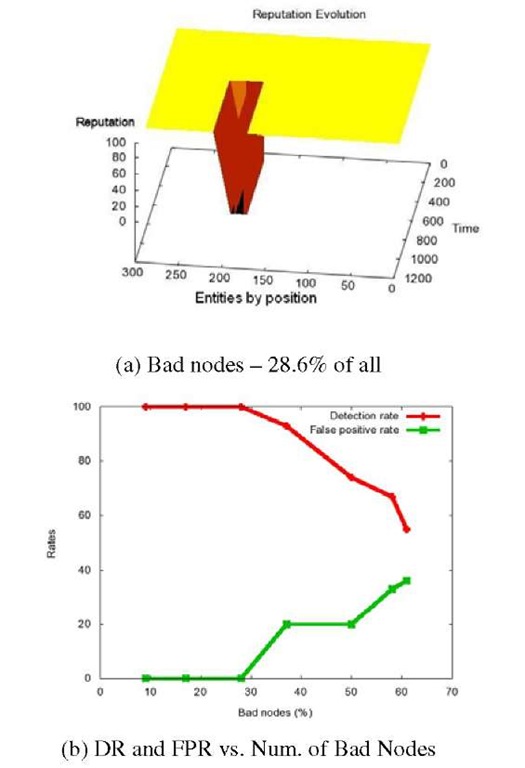

In the first experiment the attack starts after the end of training, so the training is performed with so-called clean data. In Fig. 1.a. we present the reputation evolution of the situation of the most aggressive attack that can be detected with 100% detection rate with 0% false positive rate, which is the case when the bad nodes make 28.6% of all the nodes. In Fig.1.b. the dependence of both detection rate (DR) and false positive rate (FPR) on the number of bad nodes is presented. As expected, as the number of bad nodes increases, detection rate decreases, while false positive rate increases. The simulation stops at the moment the total number of bad entities makes 61.5% of total, when the undetected bad entities make the majority of non-isolated entities. In this case the reputation system becomes compromised and stops providing correct reputation values.

Fig. 1. Experiments with different number of bad nodes after training with clean data

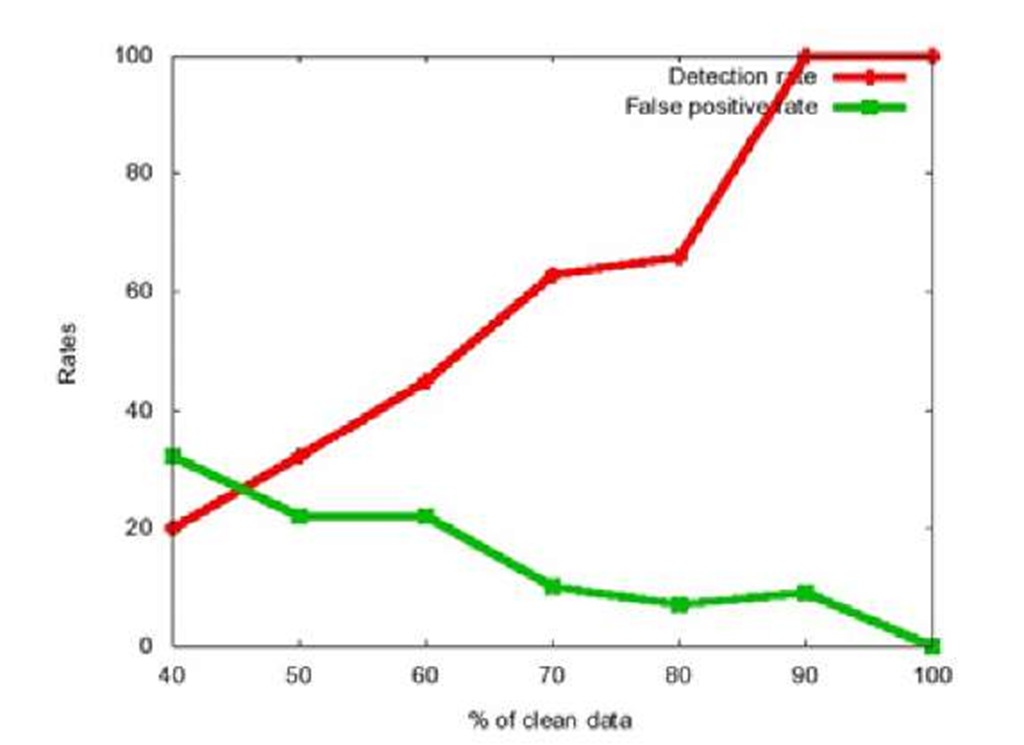

Fig. 2. DR and FPR vs. amount of clean data during the training

Now we will present the results of the experiments where the SOM algorithm is being trained with the data that contains the traces of attacks. Bad nodes make 28.6% of all the nodes. The results are presented in Fig.2. We can observe that the detection rate decreases, as the amount of the clean data during the training also decreases, while the false positive rate increases. This could be expected, as the "unclean" data makes bigger part of the training data, it is harder to distinguish it.

Conclusions

In this work we have presented an approach for detecting bad mouthing attack on reputation systems. The approach is based on outlier detection using SOM algorithm. We have proven that it is capable of achieving 100% detection rate with 0% false positive rate if trained with clean data and up to 28.6% of the nodes are malicious. For the last case, when 28.6% of the nodes are malicious, the detection of the attack is possible if at least 40% of the data are clean.

In the future we plan to test the approach on another colluding attack, ballot stuffing, where a number of entities agree to give positive feedback on an entity (often with adversarial intentions), resulting in it quickly gaining high reputation. In addition, we will test the performances of other clustering techniques instead of SOM, such as Growing Neural Gas [13].