Abstract

This study introduces a new method based on Greedy-Boost, a multiple classifier system, for better and faster intrusion detection. Detecting anomalies in computer networks can be treated as a data classification problem, which allows data mining and machine learning techniques to be used for intrusion detection. With such automatic processing, human experts only need to examine a small set of potential anomalies, which can save considerable time and improve efficiency. To be scalable and efficient, these approaches must meet two important requirements. The first is a high level of precision, that is, detecting as many anomalies as possible with as few false alarms as possible. The second is detecting potential anomalies as fast as possible. We propose Greedy-Boost, a new boosting approach based on an adaptive combination of multiple classifiers to improve the precision of detection. The approach uses a form of smoothing that ensures the stability of the classifier system and speeds up detection. Experimental results on the KDD99 dataset show that the proposed approach outperforms several state-of-the-art methods, particularly in detecting rare attack types.

Keywords: Ensemble methods, boosting, data mining, intrusion detection systems, Greedy-Boost.

Introduction

During the past decades, the proliferation of sophisticated attack tools has posed a major challenge to the computer security community and has been a serious concern for organizations defending their assets against intrusive activities. The increase in attacks shows that security mechanisms such as firewalls and anti-virus software are not strong enough to protect network systems. In this context, many intrusion detection approaches have been proposed to handle computer and network security problems. Two analysis techniques are common in intrusion detection: misuse detection and anomaly detection. Misuse detection uses a collection of known attacks to construct a misuse model, usually in the form of a set of rules (or signatures). Anomaly detection, in contrast, builds a model from information (a dataset) about normal behavior; detection is then based on the deviation between the instance under examination and the model, as in [13] and [14]. Hybrid methods have been proposed to take advantage of both techniques, and they can handle misuse and anomaly detection problems more efficiently. It is worth noting that recently proposed methods tend toward hybridized mechanisms, e.g., ensemble or aggregation systems. This type of approach is the subject of this paper.

We introduce a new intrusion detection approach that inherits the basic attributes of both the anomaly detection approach and the misuse detection approach. This hybrid approach is based on a new ensemble method, Greedy-Boost, adapted to network data in order to improve the reliability of intrusion detection systems and to reduce detection time. The use of aggregation reduces the number of false alarms (false positives) and, especially, the number of undetected attacks (false negatives).

This paper is organized as follows. Section 2 presents the state of the art of the principal hybrid intrusion detection methods, i.e., ensemble methods, and systematically summarizes and compares several related works to identify new applicable research directions. Section 3 presents our hybrid approach, based on an ensemble method we call Greedy-Boost, and details the principle of the new algorithm. We also prove theoretically the stability (convergence) of the approach. This is the essential feature of our algorithm because it shows why Greedy-Boost leads to more efficient and scalable results than classical ensemble methods. The dataset used in the experiments and the results are presented in Section 4. Finally, Section 5 concludes and outlines several directions for future work.

Hybrid Methods for IDS Using Ensemble Methods

The main idea of an ensemble method is to build several classifiers and then aggregate their outputs to decide the final outcome. The core purpose of an ensemble is to increase classification accuracy and decrease the error rate. Because each type of classifier can produce different results, an ensemble method takes advantage of the strong points of each individual classifier to produce a better final outcome. Many types of ensemble have been proposed in the machine learning literature. With respect to architecture, individual classifiers can in general be structured in parallel (e.g., bagging), sequentially (e.g., boosting), or in a hybrid form. For decision making, the combiner of classifiers can apply various mechanisms such as majority voting, Bayesian combination, distribution summation, entropy weighting, and so on. Many studies have applied various ensemble methods to the intrusion detection problem. It is worth noting that most of these studies report that ensemble methods considerably enhance the efficiency of ‘rare-class’ detection and anomaly detection.

Giacinto et al. introduce an ensemble system including three groups of classifiers that correspond to three subsets of features (i.e., intrinsic features, traffic features, and content features) [2]. Each group of classifiers is trained on one of these three feature subsets. Then, three simple fusion functions (i.e., majority vote, average, and belief) are employed for aggregation. A subsequent work by the same authors describes an ensemble architecture including multiple one-class k-means classifiers [6]. Each classifier is trained on a training subset containing a specific attack type belonging to a specific attack class (e.g., Neptune is one of twenty-one attack types and belongs to the DoS attack class in the KDD99 dataset). The ensemble process is based on the Decision Template method. The proposed architecture aims at labeling a given instance as belonging to the normal class or to a known attack class, and is thus a form of misuse detection. More adaptively, Abadeh et al. employ fuzzy logic theory to develop an ensemble method [7]. This study introduces a parallel genetic local search algorithm to generate fuzzy rule sets for each class label in the training set. Each of these rule sets is used to build a fuzzy classifier. Then, a decision fusion procedure determines a class label for a given instance. Similarly, Zainal et al. describe an ensemble model that uses three different learning algorithms (classifiers), i.e., linear genetic programming, a neural fuzzy inference system, and random forest [3]. Each classifier is trained on the same training set and assigned a weight calculated from the strength of the classifier. For decision making, a combiner of classifiers determines a class label for a given instance according to the weights of the classifiers.
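The fusion functions mentioned in these works can be illustrated with a minimal Python sketch. It is not taken from any of the cited systems; the function names and the toy inputs are hypothetical and only show how majority voting, score averaging and weighted voting combine the outputs of several classifiers.

```python
from collections import Counter

def majority_vote(labels):
    """Return the label predicted by the most classifiers (ties: first seen)."""
    return Counter(labels).most_common(1)[0][0]

def average_fusion(score_dicts):
    """Average per-class scores over classifiers and return the best class."""
    classes = score_dicts[0].keys()
    mean = {c: sum(s[c] for s in score_dicts) / len(score_dicts) for c in classes}
    return max(mean, key=mean.get)

def weighted_vote(labels, weights):
    """Sum the classifier weights per predicted label; return the heaviest label."""
    totals = {}
    for label, w in zip(labels, weights):
        totals[label] = totals.get(label, 0.0) + w
    return max(totals, key=totals.get)

# Three hypothetical classifiers judging one connection record.
print(majority_vote(["dos", "normal", "dos"]))
print(average_fusion([{"normal": 0.6, "dos": 0.4}, {"normal": 0.1, "dos": 0.9}]))
print(weighted_vote(["normal", "dos", "dos"], [0.9, 0.3, 0.3]))
```

Weighted voting can overrule a simple majority when one classifier is trusted much more than the others, which is the situation the weight-based combiners above are designed for.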

Xiang et al. build a multi-level hybrid model by combining two techniques, i.e., supervised decision trees and unsupervised Bayesian classification [1]. The classifier model is structured hierarchically according to the class labels in the training set. Through experiments on the KDD99 dataset, the authors show that the model is especially efficient in improving the false negative rate compared to other methods. Unlike other methods that build classifiers from network header data, Perdisci et al. introduce a multiple classifier system for anomaly detection on network payload data [4]. This ensemble system comprises several one-class SVM classifiers. In this study, different compact representations of the payload in different feature spaces are obtained by applying a dimensionality reduction algorithm. Each one-class SVM classifier is then trained using these different representations of the payload. Given the outputs of the classifiers, a final decision is made by applying a fusion function (e.g., average, product, majority vote). The experiments are conducted on three datasets, i.e., the Attack-Free DARPA dataset, the Attack-Free Gatech dataset (from the Georgia Institute of Technology) and the HTTP-Attack dataset. Based on the ROC curves, the detection rate of the proposed method ranges from 80% to 99%. Zhang et al. apply the Random Forest algorithm, an ensemble method, to intrusion detection [9]. Random Forest produces a forest of classification trees in which each tree is built from a different bootstrap sample. Instead of using the class label attribute of the training dataset for classification, the proposed method uses only the service type attribute (e.g., HTTP, FTP) as the classification target. In misuse detection, a given instance is passed through the trees and a ‘majority vote’ mechanism is then applied to label the instance. For outlier detection, the general idea is that if an instance is classified as a service type different from its own, it is regarded as an outlier. For example, if an HTTP connection record is classified as the FTP service type, this record is flagged as an outlier. More diversely, Mukkamala et al. build an ensemble model using five classifiers, i.e., resilient back propagation NN, scaled conjugate gradient NN, one-step-secant NN, SVM, and multivariate adaptive regression splines [8]. These five classifiers operate independently and concurrently. In this model, the final classification decision is made by majority voting.
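The service-type outlier rule can be sketched in a few lines of Python. This is only an illustration of the rule as described above: `toy_predict` is a hypothetical stand-in for the trained forest, and the record fields `service` and `dst_port` are assumptions made for the example.

```python
def flag_outliers(records, predict_service):
    """Return the records whose predicted service differs from the recorded one."""
    return [r for r in records if predict_service(r) != r["service"]]

def toy_predict(record):
    """Hypothetical stand-in for the forest: guess the service from the port."""
    return {80: "http", 21: "ftp"}.get(record["dst_port"], "other")

records = [
    {"service": "http", "dst_port": 80},
    {"service": "http", "dst_port": 21},  # recorded as HTTP but classified as FTP
]
outliers = flag_outliers(records, toy_predict)
print(outliers)
```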

Finally, the authors of [15] use a boosting strategy to improve the accuracy of intrusion detection systems by developing a Multi-Class SLIPPER (MC-SLIPPER) from a boosting-based learning algorithm. The system is built from multiple available binary SLIPPER modules. Several prediction-confidence-based strategies are proposed and applied to arbitrate the final prediction among the predictions of all binary SLIPPER modules. The experimental results show that the system achieves the best performance using the BP neural network.

New Approach for Better and Faster Detection

An effective intrusion detection system is essential for computer security. To address detection performance, we choose classifier aggregation, and specifically adaptive strategies such as boosting [11], represented by the AdaBoost algorithm. The idea of boosting is to construct many models that are then combined by a weighted average of estimates or by a vote. It differs clearly in the way the family of models is built: each model is an adaptive version of the previous one, giving more weight to badly predicted observations. Intuitively, the algorithm thus concentrates its efforts on the observations that are most difficult to predict correctly, while the aggregation of models avoids over-fitting. This approach gives good results thanks to the adaptive way in which it detects non-evident observations. Building on the performance of boosting, we propose a new approach adapted to the context of intrusion detection. Compared to [15], this new approach must be robust against huge and noisy data, one of the problems of network data, and must classify the connection type as fast as possible since we work in real time. In response to these requirements of network data, we propose Greedy-Boost.
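The adaptive reweighting at the heart of boosting can be made concrete with a minimal Python sketch of one standard AdaBoost round (binary case). The function name `adaboost_round` and the toy inputs are hypothetical; the update itself is the classical exponential rule, not something specific to this paper.

```python
import math

def adaboost_round(weights, misclassified):
    """One AdaBoost reweighting step.

    weights       -- current distribution over examples (sums to 1)
    misclassified -- booleans, True where the current model is wrong
    Returns (alpha, new_weights).
    """
    eps = sum(w for w, m in zip(weights, misclassified) if m)
    if not 0.0 < eps < 0.5:
        raise ValueError("the model must be a weak learner with 0 < error < 1/2")
    alpha = 0.5 * math.log((1 - eps) / eps)  # vote of this model in the ensemble
    raw = [w * math.exp(alpha if m else -alpha)
           for w, m in zip(weights, misclassified)]
    total = sum(raw)
    return alpha, [r / total for r in raw]

# Four equally weighted examples, the first one badly predicted.
alpha, new_weights = adaboost_round([0.25] * 4, [True, False, False, False])
print(alpha, new_weights)
```

After the update the badly predicted example carries half of the total weight, so the next model is pushed to concentrate on it.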

New Method to Perform Intrusion Detection: Greedy-Boost

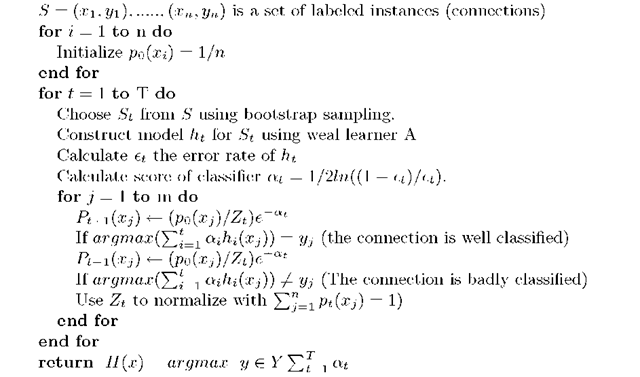

To make boosting, and especially AdaBoost, resistant to noise and to avoid the chaotic cyclic behaviors that prevent convergence, it appears necessary to modify the update of the weights (distributions). Our idea is to take into account, at each iteration, not only the last hypothesis generated when updating the weights, but all the hypotheses or models previously generated. We propose Greedy-Boost, which is based on a greedy process.

Explanation of Greedy-Boost

Greedy-Boost is a pure generator of models that seeks, at each iteration, the model that best predicts the examples badly predicted by the linear combination of the previously generated models. Two differences distinguish Greedy-Boost from AdaBoost. First, the current classifier is not a single model but a linear combination of models. Second, the algorithm computes the update of the distribution from the initial distribution (all examples with the same weight) instead of the previous one.

Algorithm 1. Greedy-Boost algorithm

In other words, we evaluate the examples badly classified by the current combined model. This produces a new sample, which is used to generate a new model. The interest of such a process is that it necessarily converges towards a stable solution under the hypothesis that, at each iteration, we are able to find a weak learner, i.e., one with an error $\epsilon_t < 1/2$ on the weighted sample.
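The text does not spell out the exact weight-update formula, so the following is only a minimal Python sketch of one possible reading of the description above, not the authors' listing. It assumes binary labels in {-1, +1}, one-dimensional features, decision stumps as weak learners, and an AdaBoost-style exponential reweighting of the initial uniform distribution driven by the errors of the combined classifier. The names `train_stump`, `stump_predict`, `combined_predict` and `greedy_boost` are hypothetical.

```python
import math

def train_stump(xs, ys, weights):
    """Best weighted decision stump on 1-D data: (error, threshold, sign)."""
    best = None
    for thr in sorted(set(xs)):
        for sign in (1, -1):
            preds = [sign if x >= thr else -sign for x in xs]
            err = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or err < best[0]:
                best = (err, thr, sign)
    return best

def stump_predict(stump, x):
    _, thr, sign = stump
    return sign if x >= thr else -sign

def combined_predict(models, x):
    """Sign of the weighted linear combination of all models built so far."""
    score = sum(alpha * stump_predict(s, x) for alpha, s in models)
    return 1 if score >= 0 else -1

def greedy_boost(xs, ys, rounds=10):
    n = len(xs)
    initial = [1.0 / n] * n            # initial distribution, never overwritten
    weights = list(initial)
    models = []
    for _ in range(rounds):
        stump = train_stump(xs, ys, weights)
        eps = stump[0]
        if eps >= 0.5:                 # weak-learner hypothesis violated
            break
        eps = max(eps, 1e-10)          # avoid division by zero
        models.append((0.5 * math.log((1 - eps) / eps), stump))
        # Assumed update: reweight the INITIAL distribution using the errors
        # of the combined classifier, not those of the last model alone.
        wrong = [combined_predict(models, x) != y for x, y in zip(xs, ys)]
        err = sum(w for w, m in zip(initial, wrong) if m)
        if err == 0:                   # stop once the ensemble is error-free
            break
        err = min(err, 1 - 1e-10)
        beta = 0.5 * math.log((1 - err) / err)
        raw = [w * math.exp(beta if m else -beta) for w, m in zip(initial, wrong)]
        total = sum(raw)
        weights = [r / total for r in raw]
    return models

xs = [1.0, 2.0, 3.0, 4.0]
ys = [-1, -1, 1, 1]
models = greedy_boost(xs, ys)
predictions = [combined_predict(models, x) for x in xs]
print(len(models), predictions)
```

On this tiny separable sample a single stump already classifies every example, so the loop stops after the first iteration. The sketch makes no claim to reproduce the convergence behaviour established in the theorem that follows.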

Theorem. Under the hypothesis that, at each iteration, the learner constructed by Greedy-Boost is at least a weak learner, i.e., $\epsilon_t < 1/2$, Greedy-Boost necessarily converges towards a stable solution when ![]()

Proof: The proof is based on a theorem of [12], which ensures that at iteration t+1 the error on the learning sample of the combined classifier $H_{t+1}$ of AdaBoost is lower than that of $H_t$, the combined classifier of iteration t:

$err(\cdot)$ is the empirical error. With ![]() we have ![]() so ![](). We consider that the solution of Greedy-Boost is equivalent to the solution of AdaBoost in the first two iterations ![]()

After, for each iteration, we change the distribution Pt with the same way of AdaBoost, based on the linear combination of models previously generated. This process is equivalent to applying AdaBoost on the current classifier![]() So

So

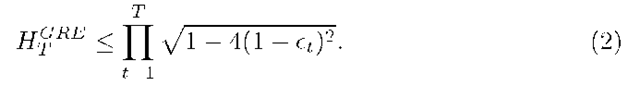

based on (1), we have .![]() Consequently in iteration T, we have :

Consequently in iteration T, we have :

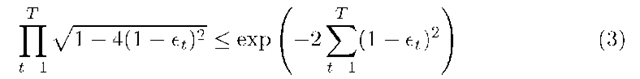

Based on [12], we also have:

we have:

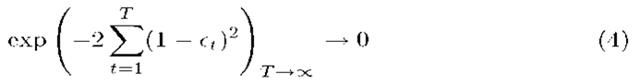

Finally, for

we have:

Equation (4) ensures that the distribution $P_t$ is no longer modified once a sufficient number of iterations is reached, and hence that the process converges. Equation (4) also shows that, as for AdaBoost, Greedy-Boost decreases the learning error exponentially fast. Greedy-Boost stops when its error is equal to 0. In practice, Greedy-Boost reduces the error quickly (after only a few iterations). Consequently, Greedy-Boost can perform the classification quickly, in real time.
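For reference, the classical training-error bound for AdaBoost (Freund and Schapire) is recalled below. It is given as background on the assumption that it is the kind of result the argument relies on; it is not a transcription of the numbered equations of this proof. Here $\epsilon_t$ is the weighted error of the model built at iteration $t$ and $\gamma_t = 1/2 - \epsilon_t$ is its edge over random guessing.

```latex
\begin{align*}
err(H_T) &\le \prod_{t=1}^{T} 2\sqrt{\epsilon_t (1 - \epsilon_t)}
          = \prod_{t=1}^{T} \sqrt{1 - 4\gamma_t^{2}}
          \le \exp\Big(-2 \sum_{t=1}^{T} \gamma_t^{2}\Big), \\
\gamma_t \ge \gamma > 0 \ \text{for all } t
         &\;\Longrightarrow\; err(H_T) \le e^{-2\gamma^{2} T}.
\end{align*}
```

Since the empirical error on $n$ examples is a multiple of $1/n$, this bound forces it to 0 as soon as $e^{-2\gamma^{2} T} < 1/n$, that is, for $T > \ln(n) / (2\gamma^{2})$, which is the sense in which the error decreases exponentially fast.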

Experiments and Results

The data used are a sample from the KDD99 benchmark intrusion detection dataset. These are standard data prepared and managed by MIT Lincoln Laboratory for the DARPA 1998 program, and they were also used for the KDD 99 intrusion detection challenge [10]. In this section, we compare the precision and the recall of AdaBoost, C4.5 and Greedy-Boost, using 10-fold cross-validation to evaluate the models. We use 10 iterations for the AdaBoost and Greedy-Boost experiments.
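As a reminder of how the two measures are obtained per class, here is a minimal Python sketch. The function name `precision_recall` and the toy labels are hypothetical and are not the experimental data. Precision is returned as `None` when a classifier never predicts a class, which is the case where it cannot be calculated.

```python
def precision_recall(y_true, y_pred, classes):
    """Per-class (precision, recall); None when the denominator is zero."""
    out = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        predicted = sum(1 for p in y_pred if p == c)   # times c was predicted
        actual = sum(1 for t in y_true if t == c)      # true members of c
        precision = tp / predicted if predicted else None
        recall = tp / actual if actual else None
        out[c] = (precision, recall)
    return out

y_true = ["normal", "normal", "dos", "dos", "u2r"]
y_pred = ["normal", "dos", "dos", "dos", "normal"]
scores = precision_recall(y_true, y_pred, ["normal", "dos", "u2r"])
print(scores)
```

In this toy run the `u2r` class is never predicted, so its precision is undefined and its recall is zero.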

The results in Table 1 show the superiority of Greedy-Boost in the precision of the minority classes (Probe, U2R and R2L). The precision for these classes varies between 88.5% and 99.0% for Greedy-Boost. For Boosting and C4.5, however, the precision for these classes cannot even be calculated, since these methods never label a connection as belonging to one of these classes. Moreover, the precision achieved by Greedy-Boost on the majority classes (Normal and DoS) is close to 100%, which is higher than that achieved by C4.5 and Boosting.

We also calculated the recall of each class for each of the three algorithms. Table 2 shows the superiority of Greedy-Boost over C4.5 and Boosting in the recall of the minority classes. Whereas this recall is null for C4.5 and Boosting, Greedy-Boost obtains between 44.2% and 97.1% recall for each of these classes, which constitutes a clear improvement. For the two majority classes, the recall of Greedy-Boost reaches 100.0% in Table 2, whereas that of Boosting goes down to 82.0% for the Normal class and that of C4.5 falls to 89.2% for the DoS class.

Based on the cost matrix used in the KDD99 challenge, the results in Table 3 show that Greedy-Boost has the lowest average cost (0.0060) compared with C4.5 (0.1949) and Boosting (0.1467). This is due to its effectiveness on the minority classes, which are the classes whose misclassification cost is highest.
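The average cost and its standard deviation can be computed as in the following Python sketch. The matrix values are those commonly quoted for the KDD Cup 99 task and are included as an assumption to be checked against the official task description. The function name `cost_stats`, the toy labels and the choice of the population standard deviation are likewise assumptions.

```python
CLASSES = ["normal", "probe", "dos", "u2r", "r2l"]

# Rows: actual class, columns: predicted class (assumed values, see above).
COST = [
    [0, 1, 2, 2, 2],
    [1, 0, 2, 2, 2],
    [2, 1, 0, 2, 2],
    [3, 2, 2, 0, 2],
    [4, 2, 2, 2, 0],
]

def cost_stats(y_true, y_pred):
    """Mean and population standard deviation of the per-record cost."""
    idx = {c: i for i, c in enumerate(CLASSES)}
    costs = [COST[idx[t]][idx[p]] for t, p in zip(y_true, y_pred)]
    mean = sum(costs) / len(costs)
    variance = sum((c - mean) ** 2 for c in costs) / len(costs)
    return mean, variance ** 0.5

# Missing an R2L or U2R attack is far more costly than a correct prediction.
mean_cost, std_cost = cost_stats(
    ["normal", "r2l", "dos", "u2r"],
    ["normal", "normal", "dos", "normal"],
)
print(mean_cost, std_cost)
```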

Table 1. Precision (%) of Boosting, C4.5 and Greedy-Boost

| Class | Boosting | C4.5 | Greedy-Boost |
|---|---|---|---|
| 0: Normal | 82.6 | 66.7 | 99.1 |
| 1: Probe | - | - | 99.0 |
| 2: DoS | 95.0 | 99.9 | 100.0 |
| 3: U2R | - | - | 88.5 |
| 4: R2L | - | - | 93.2 |

Table 2. Recall (%) of Boosting, C4.5 and Greedy-Boost on KDD99

| Class | Boosting | C4.5 | Greedy-Boost |
|---|---|---|---|
| 0: Normal | 82.0 | 99.8 | 100.0 |
| 1: Probe | - | - | 97.1 |
| 2: DoS | 96.7 | 89.2 | 100.0 |
| 3: U2R | - | - | 44.2 |
| 4: R2L | - | - | 71.9 |

Table 3. Misclassification cost of Boosting, C4.5 and Greedy-Boost

| | Boosting | C4.5 | Greedy-Boost |
|---|---|---|---|
| Average cost | 0.1467 | 0.1949 | 0.0060 |
| Standard deviation | 0.5307 | 0.6067 | 0.1358 |

Conclusion and Perspectives

This study provides an efficient design method to generate a robust ensemble system for the intrusion detection problem. The main motivation behind this method is that using an aggregated decision for classification ensures the strength of the ensemble system in terms of both the quantity and the quality of its members, and also achieves a high degree of diversity between members. The keystone of our proposed solution is a modification of boosting, specifically of the technique used to update the weights of badly classified examples. Our theoretical study proves that our approach, Greedy-Boost, converges quickly. Experiments show that, as a whole, our algorithm clearly outperforms the other algorithms tested when evaluated on the KDD99 dataset. We believe that this paper is a useful contribution to the development of ensemble-based intrusion detection systems.