Abstract

Gabor wavelet-based methods have proven useful in many problems, including face detection, and these features have been shown to cope well with image recognition. In face identification, given a repository of the faces of a number of employees, the aim is to determine which of them an arriving employee is. Applying Gabor wavelet-based features to this task is therefore reasonable. We propose a weighted majority average voting classifier ensemble to handle the problem, and we show that the proposed mechanism works well on an employee repository of our laboratory.

Keywords: Classifier Ensemble, Gabor Wavelet Features, Face Recognition, Image Processing.

Introduction

Gabor wavelet-based methods have been successfully employed in many computer-vision problems, such as fingerprint enhancement and texture segmentation [10, 11]. Similar to the human visual system, Gabor wavelets capture spatial locality and orientation selectivity, and they are locally optimal in both the space and frequency domains [12]. Gabor wavelets are therefore a suitable choice for image decomposition and representation when the goal is to derive local and discriminating features [13].

Combinational classifiers are widely used in artificial intelligence. It has been shown that a single classifier is not able to learn every problem well, for three reasons:

1. The problem may be inherently multifunctional.

2. A problem may be well defined and yet very hard for a given base classifier to recognize.

3. Finally, because some base classifiers, such as artificial neural networks, decision trees, and Bayesian classifiers, are unstable, the use of combinational classifiers can be unavoidable.

There are several methods for combining classifiers in image processing. Among the most important are the sum/mean and product rules, order-based rules (such as max or min), and voting methods. Their comparison and evaluation are well covered in [1], [2], [3] and [4]. In [5] and [6] it is shown that the product rule can be considered the best approach when the outputs of the classifiers are correlated. It has also been shown that rank-based methods are the best choice in the presence of outliers [4]. For a more detailed study of combining classifiers, the reader is referred to [7].

Applying combinational classifiers to improve classification performance has recently attracted significant interest in image processing. Sameer Singh and Maneesha Singh [8] proposed a knowledge-based predictive approach that selectively triggers classifiers, based on the Mahalanobis distance between the test sample and the corresponding probability distribution function estimated from the training data. They also showed empirically that their method outperforms the traditional competing methods.

This paper presents an ensemble-based face recognition method that uses Gabor features at different frequencies. The face images are first passed to a Gabor feature extractor at several frequencies; then, at each frequency, the features of the test image are compared with those of every training image. This yields one similarity vector per frequency. Finally, the similarity vectors are combined to vote on which training image the test image belongs to.

Voting Classifier Ensemble

A classifier ensemble works well because classifiers with different characteristics and methodologies can complement each other and compensate for each other's weaknesses. When a number of diverse classifiers vote as an ensemble, the overall error rate can decrease significantly compared with using each of them individually.

One of the oldest and most common policies in classifier ensembles is majority voting. In this approach, each classifier of the ensemble is applied to an input instance, and the output of each classifier is taken as its vote. The winning class is the one that receives the most votes, i.e. the class chosen most often by the different classifiers. If all the classifiers indicate different classes, the class with the highest overall output is selected.

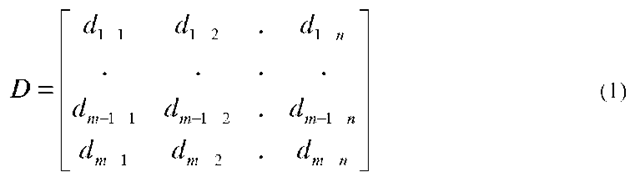

Let us assume that E is an ensemble of n classifiers $\{e_1, e_2, e_3, \dots, e_n\}$ and that there are m classes. Applying the ensemble to a data sample d results in a binary matrix D, as in equation 1.

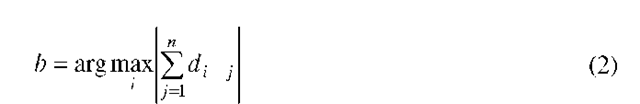

where $d_{ij}$ equals one if classifier j votes that the data sample belongs to class i, and zero otherwise. The ensemble then assigns the data sample to class b according to equation 2.
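Equations 1 and 2 survive only as images, so the following is a minimal sketch assuming equation 2 is the usual rule $b = \arg\max_i \sum_j d_{ij}$. Classes and classifiers are 0-indexed, and the function names `vote_matrix` and `majority_vote` are hypothetical. Ties are broken by `np.argmax` (lowest class index); the fallback to the highest overall output described above is not implemented here.

```python
import numpy as np

def vote_matrix(labels, m):
    """Binary m x n matrix D (equation 1): D[i, j] = 1 iff classifier j votes for class i."""
    n = len(labels)
    D = np.zeros((m, n), dtype=int)
    D[labels, np.arange(n)] = 1  # one vote per column
    return D

def majority_vote(D):
    """Assumed form of equation 2: the class (row) with the most votes.
    np.argmax returns the lowest class index on a tie."""
    return int(np.argmax(D.sum(axis=1)))

# Three classifiers, four classes: classifiers 0 and 2 both vote for class 1.
D = vote_matrix([1, 3, 1], m=4)
print(majority_vote(D))  # 1
```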

Another combination method, which treats $d_{ij}$ as the confidence of classifier j that the test sample belongs to class i, is called majority average voting. It uses the same decision rule as majority voting, equation 2.
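To show how majority average voting can differ from hard voting, here is a small sketch, assuming the same argmax rule is applied to the average of real-valued confidences $d_{ij}$ in $[0, 1]$. The function name and the confidence values are illustrative only.

```python
import numpy as np

def majority_average_vote(C):
    """C[i, j]: confidence of classifier j that the sample belongs to class i (m x n).
    Picks the class with the largest average confidence over the n classifiers."""
    return int(np.argmax(np.asarray(C, dtype=float).mean(axis=1)))

C = np.array([[0.9, 0.45, 0.45],
              [0.1, 0.55, 0.55]])
print(majority_average_vote(C))  # 0
# For comparison: hard votes (each classifier's top class) would elect class 1.
print(int(np.bincount(C.argmax(axis=0), minlength=2).argmax()))  # 1
```

One very confident classifier can outweigh two marginal ones here, which plain majority voting cannot express.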

Weighted majority voting is another voting approach, in which the members' votes carry different weights. Unlike the previous versions of voting, it is not democratic: if one classifier has a 99% recognition rate, its vote should count more than that of a classifier with 80% accuracy. In weighted majority voting, every vote is therefore multiplied by its weight. Kuncheva [7] has shown that the optimal weight can be expressed as a function of the classifier's accuracy.

To sum up, assume that the classifiers in ensemble E have accuracies $\{p_1, p_2, p_3, \dots, p_n\}$, respectively. According to Kuncheva [7], their weights are $\{w_1, w_2, w_3, \dots, w_n\}$, respectively, where

$$w_j = \log\frac{p_j}{1 - p_j}. \qquad (3)$$

The weighted majority voting mechanism assigns the data sample to class b according to equation 4:

$$b = \arg\max_{i} \sum_{j=1}^{n} w_j\, d_{ij}. \qquad (4)$$
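The following sketch reproduces the 99% versus 80% example from the text. It assumes independent classifiers with the log-odds weights $w_j = \log(p_j/(1-p_j))$ attributed to Kuncheva [7] and the decision rule $b = \arg\max_i \sum_j w_j d_{ij}$; the function names are hypothetical.

```python
import numpy as np

def kuncheva_weights(p):
    """Log-odds weights log(p / (1 - p)); accuracies must lie strictly between 0 and 1."""
    p = np.asarray(p, dtype=float)
    return np.log(p / (1.0 - p))

def weighted_majority_vote(D, w):
    """D: binary m x n vote matrix; w: length-n weights. Returns argmax_i sum_j w_j * D[i, j]."""
    return int(np.argmax(D @ w))

# Classifier 0 (99% accurate) votes class 0; classifiers 1 and 2 (80% accurate) vote class 1.
D = np.array([[1, 0, 0],
              [0, 1, 1]])
w = kuncheva_weights([0.99, 0.80, 0.80])
print(weighted_majority_vote(D, w))  # 0, although plain majority voting would pick class 1
```

Here $\log 99 \approx 4.60$ exceeds $2\log 4 \approx 2.77$, so the single accurate classifier wins.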

Similarly, weighted majority average voting treats $d_{ij}$ as the confidence of classifier j that the test sample belongs to class i, and uses the same decision rule as weighted majority voting, equation 4.
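A sketch of weighted majority average voting, assuming the decision is $\arg\max_i \sum_j w_j d_{ij}$ with real-valued confidences $d_{ij}$. The function name and the numbers are illustrative; the example shows how the weights alone can flip the decision.

```python
import numpy as np

def weighted_majority_average_vote(C, w):
    """C: m x n confidences (column j from classifier j); w: length-n classifier weights.
    Returns argmax_i sum_j w_j * C[i, j]."""
    return int(np.argmax(np.asarray(C, dtype=float) @ np.asarray(w, dtype=float)))

C = np.array([[0.9, 0.45, 0.45],
              [0.1, 0.55, 0.55]])
print(weighted_majority_average_vote(C, [1.0, 1.0, 1.0]))  # 0
print(weighted_majority_average_vote(C, [0.1, 2.0, 2.0]))  # 1
```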

Feature Extraction

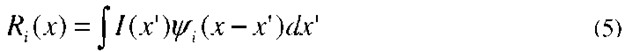

A Gabor filter can capture salient visual properties such as spatial localization, orientation selectivity, and spatial-frequency characteristics. The Gabor responses describe a small patch of gray values in an image $I(\mathbf{x})$ around a given pixel $\mathbf{x} = (x, y)^T$. They are based on a wavelet transformation, given by equation 5,

$$J_i(\mathbf{x}) = \int I(\mathbf{x}')\,\psi_i(\mathbf{x} - \mathbf{x}')\,d^2\mathbf{x}', \qquad (5)$$

which is a convolution of the image with a family of Gabor kernels $\psi_i$, given in equation 6.

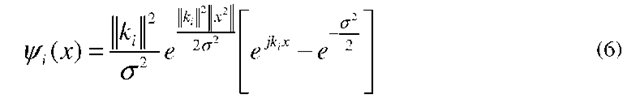

where

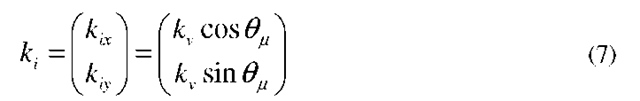

Each kernel $\psi_i$ is a plane wave characterized by the vector $\mathbf{k}_i$ and enveloped by a Gaussian function, where $\sigma$ is the standard deviation of this Gaussian. The center frequency of the ith filter is given by the characteristic wave vector $\mathbf{k}_i$, whose scale and orientation are indexed by v and u, respectively. Convolving the input image with a bank of complex Gabor filters at 5 spatial frequencies ($v = 0, \dots, 4$) and 8 orientations ($u = 0, \dots, 7$) captures the whole frequency spectrum, both amplitude and phase, as illustrated in [9].
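Equations 6 and 7 survive only as images, so this sketch of the 5 × 8 filter bank assumes the parameterisation common in the Gabor face-recognition literature: $k_v = k_{max}/f^v$ with $k_{max} = \pi/2$, $f = \sqrt{2}$, $\varphi_u = \pi u/8$ and $\sigma = 2\pi$. These constants and the function names are assumptions, not values taken from this paper. The convolution of equation 5 is computed with zero-padded FFTs and cropped to the input size.

```python
import numpy as np

# Assumed parameters (common choice in the literature, not from this paper).
K_MAX, F, SIGMA = np.pi / 2, np.sqrt(2), 2 * np.pi

def gabor_kernel(v, u):
    """Complex Gabor kernel for frequency index v (0..4) and orientation index u (0..7)."""
    k = K_MAX / F ** v                     # scale of the wave vector k_i
    phi = np.pi * u / 8                    # orientation of k_i
    half = int(np.ceil(3 * SIGMA / k))     # cover about 3 std of the Gaussian envelope
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    k2 = k * k
    envelope = (k2 / SIGMA ** 2) * np.exp(-k2 * (x ** 2 + y ** 2) / (2 * SIGMA ** 2))
    # plane wave along k_i minus the DC term exp(-sigma^2 / 2)
    carrier = np.exp(1j * k * (np.cos(phi) * x + np.sin(phi) * y)) - np.exp(-SIGMA ** 2 / 2)
    return envelope * carrier

def gabor_responses(image):
    """Magnitudes |J_i(x)| for the 5 x 8 bank, same size as the input: shape (5, 8, H, W)."""
    H, W = image.shape
    out = np.empty((5, 8, H, W))
    for v in range(5):
        for u in range(8):
            kern = gabor_kernel(v, u)
            half = kern.shape[0] // 2
            shape = (H + kern.shape[0] - 1, W + kern.shape[1] - 1)
            # zero-padded FFTs give the full linear convolution; crop the centred H x W part
            full = np.fft.ifft2(np.fft.fft2(image, s=shape) * np.fft.fft2(kern, s=shape))
            out[v, u] = np.abs(full[half:half + H, half:half + W])
    return out
```

Usage: `gabor_responses(face.astype(float))` returns the 40 response images of one face. Only magnitudes are kept in this sketch; phase would require storing `full` before taking `np.abs`.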

According to equation 5, each image P of the face training dataset is mapped to 40 Gabor response images, one for each frequency $v \in \{0, \dots, 4\}$ and orientation $u \in \{0, \dots, 7\}$. The test image H is mapped to 40 images in the same way. The orientation matching between each training image and the test image is then obtained using equation 8.

where the quantities in equation 8 are obtained from equation 9.

where Center is a 9×9 square in the middle of the image; e.g. for an image of size 80×40 it is $\{36, \dots, 44\} \times \{16, \dots, 24\}$. The orientation-matched image, denoted by $OMI^{t}_{v,u'}$, is then defined as in equation 10.

where

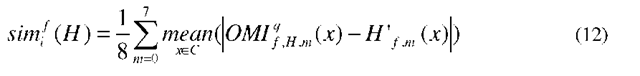
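The Center region used in equation 9 can be computed directly from the image size. This sketch assumes 1-based, inclusive pixel indices (which reproduces the 80×40 example in the text) and an odd square side; both function names are hypothetical.

```python
import numpy as np

def center_square(height, width, side=9):
    """1-based (first, last) row and column indices of the side x side square in the image middle."""
    r0 = height // 2 - side // 2
    c0 = width // 2 - side // 2
    return (r0, r0 + side - 1), (c0, c0 + side - 1)

def center_patch(img, side=9):
    """Extract the Center region from a NumPy array (1-based indices converted to 0-based slices)."""
    (r_first, r_last), (c_first, c_last) = center_square(*img.shape, side=side)
    return img[r_first - 1:r_last, c_first - 1:c_last]

print(center_square(80, 40))  # ((36, 44), (16, 24)), matching the example in the text
```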

We now define the similarity vector sim, whose ith element indicates the similarity between the ith training image and the test image, according to equation 12.

Employed Classification

Let us assume that there are n training images and one test image, with the training images indexed from 1 to n and the test image indexed as n+1. The goal is to determine which training image the test image is most similar to. The Gabor wavelet features at frequency r1 and eight orientations are first extracted from images 1 to n+1. Then the similarity between each training image and the test image is evaluated according to equation 12, as discussed in the previous section. To make these similarities comparable, they are normalized so that the similarity vector of the test image sums to one; hence each similarity lies in the range [0,1]. The result is a similarity vector $sim_{r1}$ with n elements, where $sim_{r1}(i)$ is the similarity between image i and the test image.
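The normalization step can be sketched as follows, assuming the similarities from equation 12 are non-negative (otherwise dividing by the sum would not keep them in [0,1]). The function name is hypothetical.

```python
import numpy as np

def normalize_similarities(sim):
    """Scale non-negative similarities so they sum to one (hence each lies in [0, 1])."""
    sim = np.asarray(sim, dtype=float)
    if (sim < 0).any():
        raise ValueError("similarities are assumed to be non-negative")
    total = sim.sum()
    if total == 0:
        raise ValueError("cannot normalize an all-zero similarity vector")
    return sim / total

print(normalize_similarities([2, 6, 2]))  # approximately [0.2, 0.6, 0.2]
```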

The problem described here is therefore an n-class problem. The vector $sim_{r1}$ can also serve as a simple classifier $C_{r1}$ that uses images 1 to n as its training dataset: the index of the maximum value in the vector is taken as the class label of the test image.

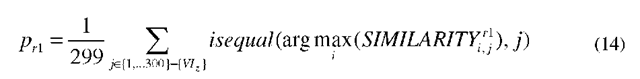

Considering $sim_{r1}$ for $r1 \in \{0, \dots, 4\}$, there are five classifiers for classifying the test image, and a majority-vote ensemble is employed to combine them. If the accuracy of classifier $C_{r1}$ is denoted by $p_{r1}$, the weight vector w of the weighted-majority-vote ensemble can be calculated straightforwardly from these accuracies.
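The following sketch puts the five frequency classifiers together. It assumes each row of `sims` is an already normalized $sim_{r1}$ vector over the n training images, that each $C_{r1}$ votes for its most similar training image, and that the weights are the log-odds weights $\log(p/(1-p))$. The function name, the similarity values, and the accuracies are made up for illustration.

```python
import numpy as np

def ensemble_decisions(sims, accuracies):
    """sims: 5 x n array, row r = normalized sim_r over the n training images.
    accuracies: the five p_r values, each strictly between 0 and 1.
    Returns (majority vote, majority average vote, weighted majority average vote), 0-based."""
    sims = np.asarray(sims, dtype=float)
    p = np.asarray(accuracies, dtype=float)
    n = sims.shape[1]
    votes = np.bincount(sims.argmax(axis=1), minlength=n)  # each C_r votes for its best match
    mv = int(np.argmax(votes))
    mav = int(np.argmax(sims.mean(axis=0)))
    w = np.log(p / (1 - p))                                # assumed log-odds weights
    wmav = int(np.argmax(w @ sims))
    return mv, mav, wmav

sims = [[0.6, 0.3, 0.1],
        [0.5, 0.4, 0.1],
        [0.2, 0.7, 0.1],
        [0.2, 0.7, 0.1],
        [0.1, 0.8, 0.1]]
print(ensemble_decisions(sims, [0.9, 0.9, 0.55, 0.55, 0.55]))  # (1, 1, 0)
```

With two accurate classifiers favouring training image 0 and three weak ones favouring image 1, only the weighted combination sides with the accurate pair.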

Parameters of Classification

In the experiments, there exist 2×300 training images. Here there are 300 real classes, 2 images per each class denoted by Ii and VIi where![]() Indeed one image f class i is denoted by TIi and the other by VIi. 300 fixed images i.e. TIi, are selected as training dataset. Running the algorithm 299 times, each time one of VIi is considered as test image and the other 299 images as validation dataset. In zth running of algorithm image VIz is selected as test image and mages VIj where

Indeed one image f class i is denoted by TIi and the other by VIi. 300 fixed images i.e. TIi, are selected as training dataset. Running the algorithm 299 times, each time one of VIi is considered as test image and the other 299 images as validation dataset. In zth running of algorithm image VIz is selected as test image and mages VIj where![]() {VIz} are considered as validation dataset. Now we obtain 5 classifiers Cr1,

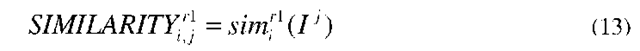

{VIz} are considered as validation dataset. Now we obtain 5 classifiers Cr1, ![]() ased on simrl. To calculate the accuracies of Cr1 the mentioned validation dataset is used as following. The similarities between each pairs of images denoted by TIi and VIi, where

ased on simrl. To calculate the accuracies of Cr1 the mentioned validation dataset is used as following. The similarities between each pairs of images denoted by TIi and VIi, where![]() are evaluated mploying equation 13.

are evaluated mploying equation 13.

These similarities must again be normalized so that they are comparable, so they are normalized to the range [0,1] as before. Computing the similarity between every training image and every validation image yields an n×n similarity matrix $SIMILARITY_{r1}$, whose entry $(i, j)$ is the similarity between image i of the training dataset and image j of the validation dataset. The column corresponding to $VI_z$ is invalid, because that image is the current test image.

The accuracy of classifier $C_{r1}$ on the training data is the number of training images assigned to their correct class, divided by n. In other words, it is the number of columns whose maximum lies on the diagonal of the matrix, divided by n, as stated in equation 14. Although ideally each diagonal element would be the largest in its column, this does not hold in many cases.

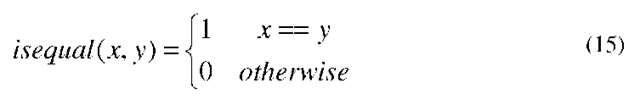

where isequal(x,y) is defined as equation 15.
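Equations 14 and 15 are available only as images; this sketch follows the prose: a column counts as correct when its maximum lies on the diagonal (the role of isequal), the invalid column of the current test image is skipped, and the count is divided by n as the text states. Whether equation 14 divides by n or by n − 1 cannot be checked here, and the function name is hypothetical.

```python
import numpy as np

def classifier_accuracy(S, invalid_col=None):
    """S[i, j]: similarity between training image TI_i and validation image VI_j (n x n).
    invalid_col: 0-based column of the current test image VI_z, which is skipped.
    Counts columns whose maximum is on the diagonal and divides by n, as described in the text."""
    S = np.asarray(S, dtype=float)
    n = S.shape[0]
    correct = sum(int(np.argmax(S[:, j]) == j)   # isequal(argmax of column j, j)
                  for j in range(n) if j != invalid_col)
    return correct / n

S = [[0.90, 0.2, 0.3],
     [0.05, 0.5, 0.6],
     [0.05, 0.3, 0.1]]
print(classifier_accuracy(S))                 # 2/3: column 2 peaks off the diagonal
print(classifier_accuracy(S, invalid_col=0))  # 1/3
```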

Experimental Study

Experimental results are reported on 300 pairs of images, each pair belonging to an employee of our laboratory. All images have the same resolution, and all of them are first histogram-equalized.
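The paper does not specify which histogram-equalization variant was used; this sketch shows the classic global CDF mapping for 8-bit grayscale images as one plausible choice. The function name is hypothetical.

```python
import numpy as np

def equalize_histogram(img):
    """Global histogram equalization of an 8-bit grayscale image via its cumulative histogram."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]              # CDF value at the darkest gray level present
    if cdf[-1] == cdf_min:                 # constant image: nothing to equalize
        return img.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)
    return lut[img]                        # map every pixel through the lookup table

print(equalize_histogram([[10, 10], [20, 30]]))  # darkest level -> 0, brightest -> 255
```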

The leave-one-out technique is used to test the ensemble classifier on these images. Features at 5 different frequencies and 8 orientations are extracted, so there are forty similarity matrices. Except in weighted majority voting, 599 images are used as the training set, because a validation set is no longer needed. Notably, the best classifier using only one similarity matrix achieves a recognition rate of just 76.63%. The ensemble achieves a 90.17% recognition rate with majority voting and 89.32% with average voting, whereas the proposed combinational approach achieves 92.67%. Table 1 summarizes the results.

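Table 1. Face recognition ratios (%) of different methods

| Best single classifier ($C_f$) | MV($C_f$) | MAV($C_f$) | WMAV (proposed) |
|---|---|---|---|
| 76.63 | 89.32 | 90.17 | 92.67 |

MV: majority voting; MAV: majority average voting; WMAV: weighted majority average voting.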

Conclusions

In this paper, a new face identification algorithm is proposed. It first extracts Gabor wavelet features at different frequencies, maximizing over the different orientations. Defining one classifier per frequency yields an ensemble, which uses weighted majority average voting as its consensus function. The proposed mechanism is shown to work well on an employee repository of our laboratory.