A SCANNER GENERATOR

The first two sections of this topic introduced scanners and discussed the tasks which scanners usually perform. The next three sections introduced mathematical models which are useful for programming a scanner in a systematic manner and for creating a scanner using a scanner generator. This section introduces a scanner generator which uses regular expressions as input.

Because the implementation of finite-state acceptors is straightforward, programs have been written which can mechanically generate scanners using a formal method of specifying a finite-state acceptor as input. This subsection introduces one such scanner generator called Lex, written by Lesk and Schmidt (1975), which uses regular expressions for specifying a scanner.

Using a set of regular expressions and associated segments of code called actions which dictate the tasks associated with each expression, Lex generates a transition table and a program which interprets the tables. An action is executed whenever a token that can be generated by the corresponding regular expression is recognized. Thus, the output from Lex is a program which simulates a finite-state acceptor and performs the additional functions which are associated with the final states of the acceptor. The scanner generated by Lex can be used in conjunction with a parser generator (specifically the program YACC, which was written by Johnson, 1975) for the lexical and syntactic phases of compilation.

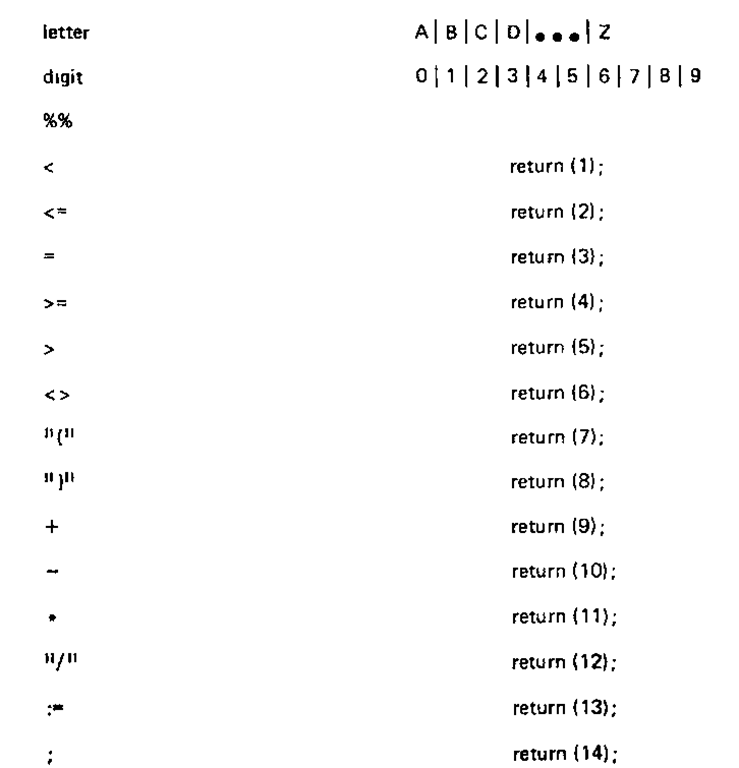

The input to Lex, which consists of three parts, has the format

definitions

%%

translation rules

%%

user subprograms

Any section may be empty, but the sections must be separated from each other by a %%.

The definitions section consists of a sequence of statements of the form

name expression

where name can be used in any regular expression given later in the Lex input for representing the regular expression on the right. Name is a valid identifier and is separated from the expression by at least one blank. Thus the definition section allows a shorthand notation to be used for, say, the set of digits, within a complex expression. For example, the digits and letters can be represented by

and an identifier as iden letter {letter|digit) We have not been using the .format specifications for regular expressions in Lex precisely. This would require a lengthy explanation of the syntax of Lex input and the features which are available in Lex. There are additional regular-expression operators in Lex which, while not making regular expressions more powerful, allow for more convenient methods of specifying tokens. A blank which is normally used to separate an expression from its action must be enclosed in quotes if it is to be included as a character within a regular expression. For the simplicity of this discussion, we use the method of specifying regular expressions which has been used throughout this topic. The reader should refer to the reference manual by Lesk and Schmidt (1975) for details about Lex.

The translation rules section consists of a sequence of rules of the form

expression action

where each expression is a regular expression and action is a sequence of code to be executed. Action consists of a piece of code written in the programming language C and is surrounded by braces if more than one C statement is used.

Because the scanner produced by Lex attempts to match one of the set of regular expressions to a sequence of input characters, it can really be considered a deterministic finite-state acceptor of the form

where each r, is a regular expression found in the translation rules section. An additional feature of Lex over a DFA is that each r, has an action which is executed if the next input token is matched to that expression.

The scanner matches the longest sequence of input characters to a regular expression. Finally, if two or more regular expressions can produce the token found in the input text for the scanner, a match with the first regular expression occurs. For example, the keyword DO might be recognized in a scanner by the regular expression which specifically matches DO and by the regular expression which matches identifiers. A match with the expression for the keyword DO would occur if it is the first expression listed.

As was mentioned in Sec. 4-2, lookahead can be a useful feature for determining what the next token in the input text is. This feature is available in Lex and is designated by a slash (/) separating two regular expressions. (The character slash is indicated in a regular expression by enclosing it within quotes, i.e., "/".) The token consists of the input text which is read and can be produced by the regular expression preceding the slash; the input text which follows must be a derivation of the regular expression following the slash in order for a match to occur.

Consider the FORTRAN example from Sec. 4-2 which required lookahead in order to determine if DOIOI was just one token or the three tokens DO, 10, and I. If it is three tokens, it is a part of a loop statement which has a comma after the equals sign and an identifier or a constant. The specification for identifying DO as the first token of a loop statement would be

If this expression fails on the input text, DO is considered as a part of an identifier.

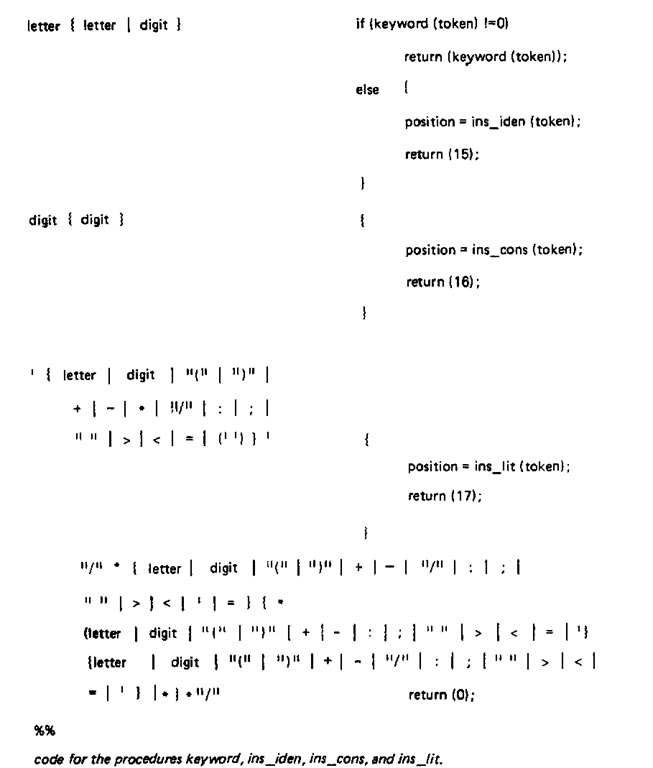

Figure 4-14 illustrates the input to Lex for describing a simple scanner which accepts the same language as the scanner SCAN of Sec. 4-2. The code for the actions is written in C, and the values returned by the actions are the internal representation numbers for the token which can be derived from the corresponding regular expression. The variable token contains the token which was just identified in the input string.

The function keyword returns the internal representation number of the keyword. Zero is returned if the token is not a keyword. The functions insjden, ins_cons, and insjit perform the symbol-table maintenance routines for identifiers, constants, and string literals, respectively, and each returns the position of the token that was inserted into the table. These subprograms are to be written by the programmer.

For most of the tokens, the scanner just returns the internal representation number. For constants and literals, however, the constant or literal is inserted into the constant table or the literal table, respectively, and the position is stored in the variable called position before returning the representation number. Position is assumed to be a global variable which gives the position where a token was stored in the symbol table to the parser. If the token is matched to the fifteenth regular expression, a call to the keyword function determines if the token is a keyword.

Figure 4-14 Example input to Lex program.

Figure 4-14

If it is, the representation number of the keyword is returned; otherwise it is an identifier, and the position where the identifier is stored in the symbol table and its internal representation number are returned.

The scanner returns 0 when a comment has been identified." Because comments are to be ignored, the calling program can call the scanner again to determine the next token in the input stream.

If the input string contains the sequence <= , the < can be matched by the first, second, and sixth regular expressions. Because an equal sign follows, however, the expression < > is rejected, and so is the first one, since the second expression can match an additional character. Thus the scanner returns the value 2.

In this topic, regular grammars, regular expressions, and finite-state acceptors were introduced. These topics provided an understanding of how scanners are constructed by hand or how a lexical analyzer is specified to a scanner generator. Parsing, the next phase of compilation, uses the internal representation numbers of the tokens identified by the scanner for performing syntactic analysis and is the topic of Chaps. 6 and 7.

![tmp4C8-601_thumb[2][2][2][2] tmp4C8-601_thumb[2][2][2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp4C8601_thumb2222_thumb.png)

![tmp4C8-602_thumb[2][2][2][2] tmp4C8-602_thumb[2][2][2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp4C8602_thumb2222_thumb.png)

![tmp4C8-603_thumb[2][2][2][2] tmp4C8-603_thumb[2][2][2][2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp4C8603_thumb2222_thumb.png)