Run-Time Address Calculation

We are now in a position to formalize a procedure for run-time address calculation in our dynamic storage-allocation model. Given a variable with a tuple address of (BL, ON) that is accessible in a block at level LEV, the address ADDR of the variable is found as follows:

where abp means the current activation-base pointer value display[BL] means the BL element of the display area in the current activation record nip means the number of implicit parameters, which has been two in the model presented in thi> section.

Notice that the expression (BL – 1) nip accounts for the size of the display (i.e., a block at level BL must have a display of size BL — 1) plus the number of implicit parameters. Since both of these values are known at compile time, some compilers begin their ON assignment at (BL – 1) 4- nip. This scheme reduces the complexity of the address calculation at run time to abp + ON for local variables and display [BL] + ON for global variables.

Handling Recursive Procedures

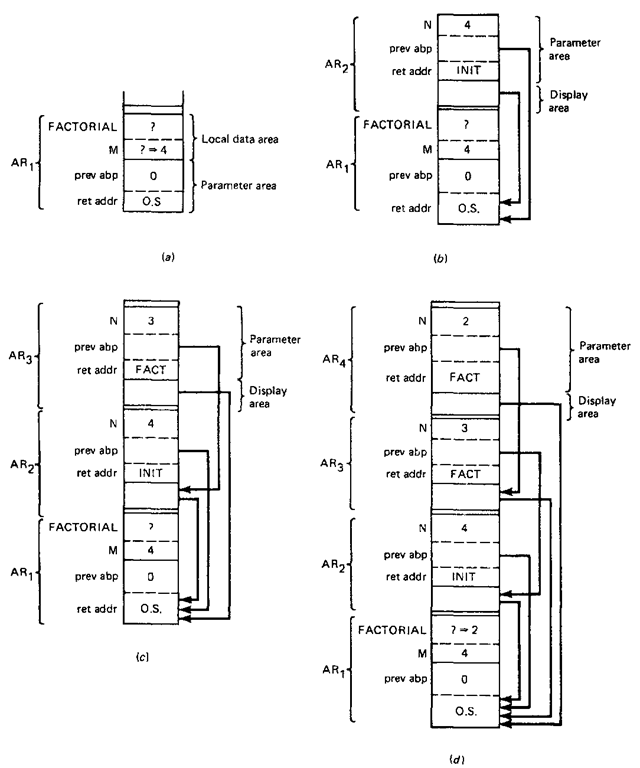

Before we conclude our discussion of dynamic storage allocation, it is important to point out that for recursive block-structured languages more than one activation record may be associated with a block at any one time. This property can be illustrated through the ALGOL program in Fig. 9-8, which contains a recursive FACTORIAL procedure. A trace of the run-time stack during execution is depicted in Fig. 9-9. The recursive procedure is assumed to be called initially with a value of 4 for M.

With each invocation of the FACTORIAL procedure, a new activation record (and hence a new copy of the formal parameter N) is placed on top of the run-time stack. A return address is also associated with each activation record. A return address with a value of FACT indicates that the return from that particular activation of FACTORIAL is to the point of invocation within the FACTORIAL procedure as indicated in Fig. 9-8.

Figure 9-8 Recursive FACTORIAL

Figure 9-9 Trace of the run-time stack for the FACTORIAL procedure up to the basis condition.

A return address with the value of INIT designates that the return should be to the point at which the FACTORIAL procedure was initially invoked. Finally, a return address with the value of "O.S." indicates that a return to the operating system takes place when the entire program has terminated execution.

The trace in Fig. 9-9 begins with the stacking of the activation record for the outer block. Note that space is left for the return value of the FACTORIAL functional procedure, which is declared local to the outermost block. The activation record for the first invocation of the FACTORIAL procedure is shown in Fig. 9-9b. Subsequent invocations of the recursive procedure are represented by the stacking of activation records as shown in Fig. 9-9c and d.

The trace proceeds until the basis condition (i.e., N < 3) is met. At this point the current value of N is returned (i.e., assigned to the storage location for FACTORIAL).

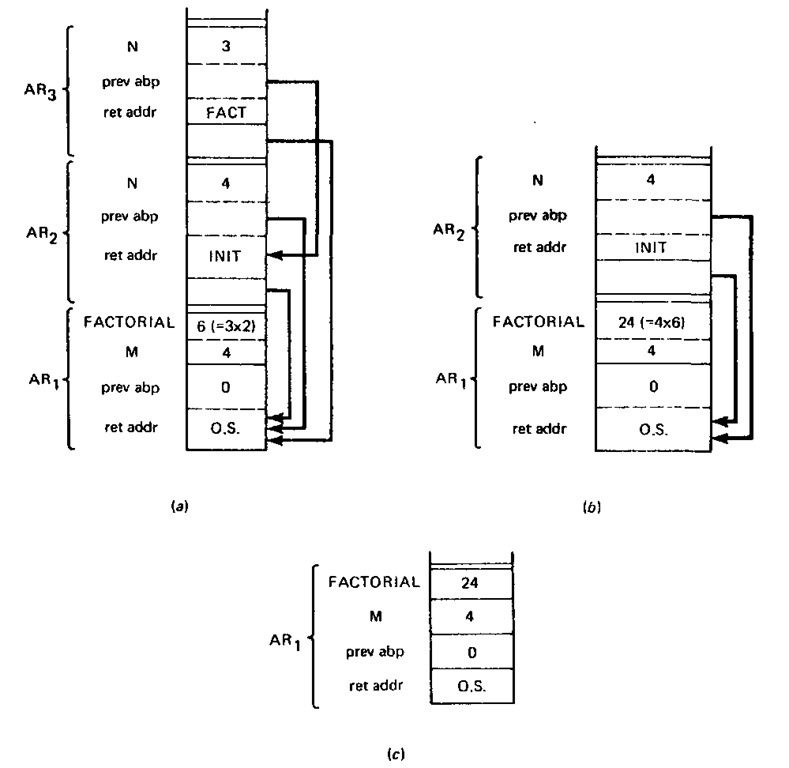

Figure 9-10 The remainder of the trace of the run-time stack for the FACTORIAL procedure.

The flow of control is then returned to the address indicated as FACT and the expression N * FACTORIAL(N – 1) is evaluated. The result of this expression (i.e., 3*2 = 6) is subsequently returned, and the process of unstacking the activation records and returning values for FACTORIAL continues as shown in Fig. 9-10. Finally, flow of control returns to the outer block through the return address INIT, and the value of FACTORIAL (i.e., 24) is printed.

From the FACTORIAL example, it is most important to understand that the value of N referenced during execution is located in the latest activation record to be allocated to FACTORIAL. This referencing is guaranteed because the calculation of the run-time address is always computed with respect to the current activation-base-pointer value.

It should be evident from the discussion of dynamic storage allocation that referencing a variable can involve a relatively complex run-time address calculation. This complexity suggests that languages with dynamic storage allocation should be used only for those applications involving rather dynamic storage demands at run time. In general, many applications, such as the traditional data-processing applications, do not require a very dynamic run-time environment, and therefore a language like COBOL, which has no dynamic storage allocation, is often used. It should be pointed out, however, that some machines, such as the Burroughs 6700, do support dynamic storage allocation with machine-level instructions. The apparent overhead in dynamic storage addressing is significantly reduced in these machines.

Let us turn our attention to a third type of storage allocation that is often required in programming languages.

HEAP STORAGE ALLOCATION

From the discussion thus far, we established that for the case of static storage specific storage requirements must be known at compile time. For the case of dynamic storage allocation, storage requirements had to be known at a precise point during execution, namely, at block entry. These allocation strategies are not sufficient to handle the storage requirements of some data structures that may appear in a programming language. Variable-length strings in SNOBOL 4 and lists in LISP 1.5 are two examples of data structures with storage requirements that are not known at compile time or at block-entry time—in fact, their storage requirements are known only at the time a new value is assigned or a new instance of the structure is created.

Requests for storage for these types of data structures are handled through a heap storage-allocation strategy. The strategy involves the reserving of a large contiguous block of memory commonly called the heap. As requests for additional storage are received, a run-time storage manager allocates space from the heap. Similarly as space is no longer required for a data structure, the manager attempts, where possible, to release the storage so that it can be reused at a later date.

Two types of heap storage requests can occur: implicit and explicit requests. We begin our discussion by giving examples of both.

Implicit Storage Requests

As an example of an implicit storage request, consider the following SNOBOL 4 program segment:

The first statement reads in a line (or buffer full) of input and assigns it to the string LINE. If there is no more input, a transfer is made to the statement labeled OUT, the value of the variable TEXT is printed, and the program terminates. If there is input, the second statement is executed. The execution of this statement causes the value of LINE to be concatenated to the current value of TEXT, the result to be assigned to TEXT, and control to be unconditionally passed to the first statement. In this manner, the string TEXT is built up to contain all the characters present in the input file. Since the input file can vary in size, the total storage requirements for TEXT cannot possibly be known until the final input character is read.

Note that in the SNOBOL program there is no command that explicitly requests additional storage to handle the requirements for the variable-length string TEXT. Rather, it is assumed that a storage manager exists which acquires additional storage as the string increases in size.

There are also instances in which storage for a string can be released. For example, suppose a SNOBOL 4 program exists which builds up a string to a significant length in a fashion similar to the previous program segment. The string that is constructed is then used in some fashion, the string variable is reinitialized to the empty string (i.e., TEXT = "), and the process of constructing a new string is reinitiated. At the point in which the variable is reinitialized to the empty string, it should be clear that the space occupied by the previous string value can be released in order that it may be reused in the construction of a new string. The releasing of heap storage in this instance occurs implicitly.

Explicit Storage Requests

Some programming languages, such as PL/I and PASCAL, allow the programmer to have explicit control over the creation or destruction of instances of a data structure. The PL/I procedure in Fig. 9-11 illustrates how a linked list of stocks and their quotations can be maintained in order by stock name. The procedure begins with the allocation of a record named STOCK. The variable STOCK is assumed to be global to the procedure and declared to have a BASED storage attribute as follows:

The ALLOCATE statement signifies to the heap manager that enough storage must be found to hold the contents of a new copy of the STOCK record. The pointer P is assigned an address pointing to the first storage location for the new copy of STOCK. The NAME and QUOTE fields of the record are filled in via an input statement.

Figure 9-11 PL/I procedure illustrating explicit allocation and freeing or’ heap storage

Then the record is either inserted into a linked list of STOCK records if that stock has not been placed in the list previously, or a previous STOCK record is updated with a new QUOTE value. If a previous record does exist, the newly created duplicate record should be destroyed, and this is accomplished with the FREE statement. In effect, the FREE statement tells the heap manager to release the storage allocated to the STOCK record, thus enabling this storage to be reused at a later point in the program.

Because the storage requirements can be dependent upon the size and nature of the input file as illustrated in the stock-quotation example, explicit data-structure creation and destruction cannot be handled at compile time or at block-entry time. As was the case with implicit storage requests, the indeterminancy until run time of storage requirements necessitates that a sophisticated heap storage manager be created as part of the run-time environment to handle this type of dynamic data-structure activity. Let us now turn to discussion of heap storage-management techniques.

Management of Fixed-Length Blocks

The simplest type of heap storage management is that involving storage requests which are always for a fixed number of storage locations. Such a situation might arise in a language like LISP, which is devoted to the manipulation of list structures whose BLOCKS are all of a single type and size. In this case, the total available heap storage can be subdivided into a series of blocks, each of the correct size. These blocks can be linked by LINK fields in the first word of each block to form a one-way linear list which will be called an availability list. A request for a storage block is then handled by a routine such as Function GET.BLOCK.

Function GET_BLOCK(HEAD). Given HEAD, the pointer to the first block on the linked list of available storage blocks, GET_BLOCK returns the address, P, of an available block.

In step 1, if we are out of storage (HEAD = NULL), then we can only terminate the program or attempt to recover some storage by a garbage-collection technique which will be discussed later in this topic. Recoverable storage is available from instances of data structures that are no longer used (i.e., referenced) by the program.

The return of a block to the availability list in heap storage is equally trivial. Simply attach the freed block as the new first block in the availability list. The question of when a block is returned will be discussed later in this topic. It should be noted, however, that all that is needed to return a block is P, the block address, and HEAD, the pointer to the first block on the availability list.

![tmp1A-349_thumb[2] tmp1A-349_thumb[2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp1A349_thumb2_thumb.png)

![tmp1A-355_thumb[2] tmp1A-355_thumb[2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp1A355_thumb2_thumb.png)

![tmp1A-356_thumb[2] tmp1A-356_thumb[2]](http://what-when-how.com/wp-content/uploads/2011/08/tmp1A356_thumb2_thumb.png)

![tmp1A-358_thumb[2]_thumb_thumb tmp1A-358_thumb[2]_thumb_thumb](http://what-when-how.com/wp-content/uploads/2011/08/tmp1A358_thumb2_thumb_thumb_thumb.png)