PROGRAMMING LANGUAGES

Interactions involving humans are most effectively carried out through the medium of language. Language permits the expression of thoughts and ideas, and without it, communication as we know it would be very difficult indeed.

In computer programming, a programming language serves as a means of communication between the person with a problem and the computer used to help solve it. An effective programming language enhances both the development and the expression of computer programs. It must bridge the gap between the often unstructured nature of human thought and the precision required for computer execution.

A program solution to a given problem will be easier and more natural to obtain if the programming language used is close to the problem. That is, the language should contain constructs which reflect the terminology and elements used in describing the problem and are independent of the computer used. Such a programming language is usually high-level. Digital computers, on the other hand, accept and understand only their own special low-level language, consisting typically of long sequences of zeros and ones. These sequences are generally unintelligible to humans. This type of low-level language is quite different from the high-level language used to describe a problem in a given area.

A hierarchy of programming languages based on increasing machine independence includes the following:

1. Machine-level languages

2. Assembly languages

3. Higher-level or user-oriented languages

4. Problem-oriented languages

A machine-level language is the lowest form of computer language. Each instruction in a program is represented by a numeric code, and numerical addresses are used throughout the program to refer to memory locations in the computer’s memory. All bookkeeping aspects of the program are the sole responsibility of the machine-language programmer. Finally, all diagnostics and programming aids must be supplied by the programmer. Also included as machine-level programs are programs written in microcode (i.e., microprograms). Microcode allows for the expression of some of the more powerful machine-level instructions in terms of a set of basic machine instructions.

Assembly language is essentially a symbolic version of a machine-level language. Each operation code is given a symbolic code such as ADD for addition and MUL for multiplication. Moreover, memory locations are given symbolic names such as PAY and RATE. Some assembly languages contain macroinstructions which are at a higher level than assembly-language instructions. Assembly-language systems offer certain diagnostic and debugging assistance that is normally not available at the machine level.

A high-level language such as FORTRAN, PASCAL, or PL/I offers most of the features of an assembly language. While some facilities for accessing system-level features may not be provided, a high-level language offers a more enriched set of language features such as structured control constructs, nested statements, blocks, and procedures.

A problem-oriented language provides for the expression of problems in a specific application or problem area. Examples of such languages are SEQUEL for database retrieval applications and COGO for civil engineering applications.

In this topic we concern ourselves exclusively with the development of translators for high-level algebraic languages such as ALGOL, PASCAL, and FORTRAN. Although the class of high-level languages includes both procedural and nonprocedural languages, this topic concentrates on procedural languages. Unlike in a procedural program, the position of a particular statement in a nonprocedural program has no bearing on its execution; that is, the interchange of any two statements in a nonprocedural program will not affect the results obtained through its execution. Examples of nonprocedural languages are PSL, a requirements statement language, and CSMP, a simulation and modeling language.

Advantages of high-level languages over low-level languages such as machine and assembly languages include the following:

1. High-level languages are easier to learn than their lower-level counterparts. The learning of many high-level languages requires little or no computer hardware background because such languages are relatively machine-independent. Furthermore, these languages are closer to their problem areas than lower-level languages.

2. The programmer does not have to be concerned with clerical tasks involving numerical or symbolic references to instructions, memory locations, constants, etc. Such tasks are handled by a system which translates the high-level language program into machine language.

3. A programmer is not required to know how to convert data from external forms to various internal forms within the memory of a computer. The ability of a programmer to convert, say, numeric data into internal forms such as floating-point numbers and packed-decimal numbers should be irrelevant.

4. Most high-level languages offer a programmer a variety of control structures which are not available in low-level languages. High-level languages offer several of the following language constructs:

Conditional statements (such as IF-THEN-ELSE and CASE statements)

Looping statements (for both counted and conditional loops)

Nested statements

Block structures

These control structures improve programming style and facilitate certain programming approaches such as structured programming. Resulting programs are easier to read, understand, and alter. This results in reduced programming costs because programs are less complicated.

5. Programs written in a high-level language are usually more easily debugged than their machine- or assembly-language equivalents. High-level languages offer constructs that eliminate or reduce certain kinds of programming errors that occur in low-level languages. For example, the declaration of variables in a program adds a certain degree of redundancy which is useful in detecting errors in the improper use of variables. Languages often enforce a disciplined use of pointers. Moreover, a structured program is much more easily debugged than its unstructured counterpart.

6. Since most high-level languages offer more powerful control and data-structuring capabilities than low-level languages, the former class of languages facilitates the expression of a solution to a particular problem.

7. Because of the availability of certain language features such as procedures, high-level languages permit a modular and hierarchical description of programming tasks. These tasks can then be assigned to a team of programmers, thus facilitating a division of labor with a minimum of disruption and effort. Such an approach permits better documentation of a problem. Also, increased compatibility among programs and programmers can be realized.

8. Finally, high-level languages are relatively machine-independent. Consequently, certain programs such as FORTRAN and COBOL programs are portable. These programs can be executed on different computers with little, if any, change even though these computers have different internal architectures and machine-language instruction sets. Program portability reduces costs and, in general, protects an installation against computer obsolescence. Often when a computer is replaced, all associated assembly- and machine-language programs become obsolete.

Points 1 through 5 have been stressed in the past and found to be very important. Today, points 6 through 8 are receiving considerable attention and promise to be dominant considerations in the immediate future.

High-level language programs, of course, must be translated automatically to equivalent machine-language programs. The notions of such translators are examined in the next section.

TRANSLATORS

A translator inputs and then converts a source program into an object or target program. The source program is written in a source language and the object program belongs to an object language.

If the source language being translated is assembly language and the object program is machine language, the translator is called an assembler. Assembly language closely resembles machine language. In an elementary assembly language, most instructions are symbolic representations of corresponding machine-language instructions. Each assembly instruction contains an ordered set of fields. For example, the first field might represent a label which is followed immediately by an operation field. This field in turn might be followed by one or more operand fields. More sophisticated assembly languages, however, support macroinstructions. A macroinstruction can be expanded to a sequence of simple machine-language instructions. High-level language constructs such as case statements, nested statements, and blocks are not usually contained in assembly languages.
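The field layout just described can be illustrated with a minimal sketch of an assembler. The opcode numbers, the starting data address of 100, and the name `assemble` are assumptions made for illustration only; they do not correspond to any particular machine.

```python
# Hypothetical opcode table; a real machine defines its own numeric codes.
OPCODES = {"LDA": 1, "ADD": 2, "MUL": 3, "STO": 4}


def assemble(lines, data_base=100):
    """Translate '[LABEL:] OPERATION OPERAND' lines into (opcode, address) pairs."""
    parsed = []
    labels = {}  # label name -> index of the instruction it marks
    for line in lines:
        fields = line.split()
        if not fields:
            continue
        if fields[0].endswith(":"):  # optional label field comes first
            labels[fields[0][:-1]] = len(parsed)
            fields = fields[1:]
        operation, operand = fields[0], fields[1]
        parsed.append((operation, operand))

    # Replace symbolic operation codes and names with numeric values.
    symbols = dict(labels)
    next_data = data_base
    code = []
    for operation, operand in parsed:
        if operand not in symbols:  # first use of a name allocates a location
            symbols[operand] = next_data
            next_data += 1
        code.append((OPCODES[operation], symbols[operand]))
    return code, symbols


code, symbols = assemble(["START: LDA PAY", "ADD RATE", "STO PAY"])
```

Here the symbolic names PAY and RATE are mapped to numeric addresses, which is exactly the bookkeeping that a machine-language programmer would otherwise do by hand.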

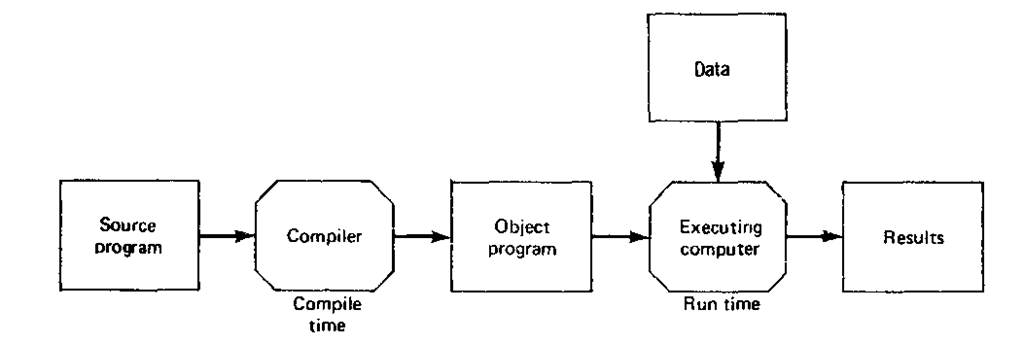

A translator which transforms a high-level language such as FORTRAN, PASCAL, or COBOL into a particular computer’s machine or assembly language is called a compiler. The time at which the conversion of a source program to an object program occurs is called compile time. The object program is executed at run time. Figure 1-1 illustrates the compilation process. Note that the source program and data are processed at different times, namely, compile time and run time, respectively.

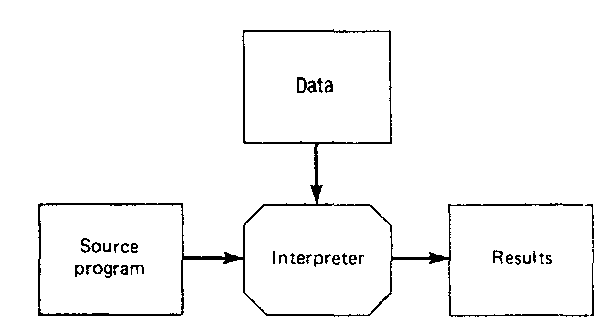

Another kind of translator, called an interpreter, processes an internal form of the source program and data at the same time. That is, interpretation of the internal source form occurs at run time and no object program is generated.

Figure 1-1 Compilation process.

Figure 1-2 Interpretive process.

Figure 1-2 illustrates this interpretive process. Some interpreters analyze each source statement every time it is to be executed. Such an approach is very time-consuming and is seldom used. A more efficient approach involves applying compilation techniques to the translation of the source program to an intermediate source form. This intermediate source form is then interpreted by the interpreter program.
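As a rough sketch of the more efficient approach, the following fragment translates a source text once into an intermediate form and then interprets that form together with the data. The statement syntax (`target = left op right`), the tuple layout, and the names `translate` and `interpret` are assumptions chosen for brevity.

```python
def translate(source):
    """Translate once: turn each 'target = left op right' line into a tuple."""
    program = []
    for line in source.splitlines():
        if not line.strip():
            continue
        target, _, expression = line.partition("=")
        left, op, right = expression.split()
        program.append((target.strip(), op, left, right))
    return program


def interpret(program, data):
    """Execute the intermediate form directly; no object program is generated."""
    env = dict(data)
    operations = {"+": lambda a, b: a + b, "*": lambda a, b: a * b}
    for target, op, left, right in program:
        env[target] = operations[op](env[left], env[right])
    return env


program = translate("S = A + B\nP = S * C")
result = interpret(program, {"A": 2, "B": 3, "C": 4})
```

The source text is analyzed only in `translate`; `interpret` can then be run repeatedly on different data without rescanning the statements.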

Interpreters have become increasingly popular lately, particularly in microcomputer environments where the overhead of interpretation seems to be significantly less for the user. For example, a main reason why languages such as BASIC, APL, LISP, and SMALLTALK-80 have become very popular is that they have been implemented in an interpretive environment.

In this topic, we concentrate on compiler construction techniques. Although a complete compiler will not be found in this text, the reader is referred to the accompanying text, An Implementation Guide to Compiler Writing, where a compiler for the high-level programming language GAUSS is described in detail.

A compiler can be described in a modular fashion. The next section gives an overview of such an approach.

MODEL OF A COMPILER

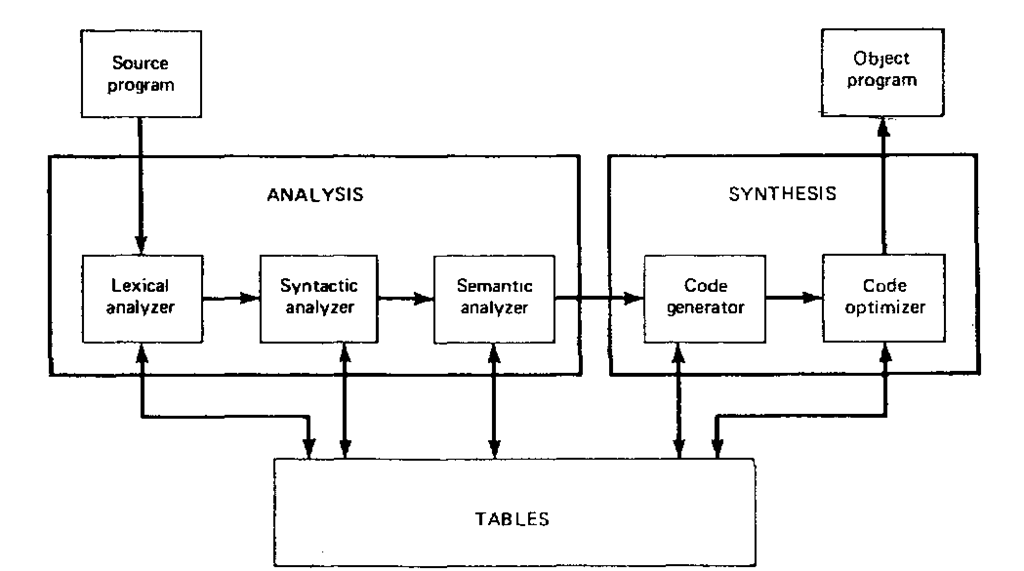

The task of constructing a compiler for a particular source language is complex. The complexity and nature of the compilation process depend, to a large extent, on the source language. Compiler complexity can often be reduced if a programming-language designer takes various design factors into consideration. Several of these programming-language design factors are discussed in Chap. 3. Since we are dealing with high-level source languages such as ALGOL and PASCAL, however, a basic model of a compiler can be formulated. Such a model is given in Fig. 1-3. Although this model may vary for the compilation of different high-level languages, it is nevertheless representative of the compilation process.

A compiler must perform two major tasks: the analysis of a source program and the synthesis of its corresponding object program. The analysis task deals with the decomposition of the source program into its basic parts. Using these parts, the synthesis task builds their equivalent object program modules.

Figure 1-3 Components of a compiler.

The performance of these tasks is realized more easily by building and maintaining several tables. More will be said about the contents of these tables shortly.

A source program is a string of symbols each of which is generally a letter, a digit, or a special symbol such as +, -, (, and ). A source program contains elementary language constructs such as variable names, labels, constants, keywords, and operators. It is therefore desirable for the compiler to identify these various types as classes. These language constructs are given in the definition of the language.

The source program (see Fig. 1-3) is input to a lexical analyzer or scanner whose purpose is to separate the incoming text into pieces or tokens such as constants, variable names, keywords (such as DO, IF, and THEN in PL/I), and operators. In essence, the lexical analyzer performs low-level syntax analysis. For efficiency reasons, each class of tokens is given a unique internal representation number. For example, a variable name may be given a representation number of 1, a constant a value of 2, a label the number 3, the addition operator (+) a value of 4, etc. For example, the PL/I statement

TEST: IF A > B THEN X = Y;

would be translated by the lexical analyzer into the following sequence of tokens (and associated representation numbers):

| Token | Representation number |
|-------|-----------------------|
| TEST  | 3                     |
| :     | 26                    |
| IF    | 20                    |
| A     | 1                     |
| >     | 15                    |
| B     | 1                     |
| THEN  | 21                    |
| X     | 1                     |
| =     | 10                    |
| Y     | 1                     |
| ;     | 27                    |

Note that in scanning the source statement and generating the representation number of each token we have ignored spaces (or blanks) in the statement. The lexical analyzer must, in general, process blanks and comments. Both of these classes of items typically do not represent executable parts of a program and are ignored, for example, in PL/I.
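A minimal sketch of such a scanner follows. The representation numbers are those used in the example above; the regular expression, the rule that a name followed by a colon is a label, and the function name `scan` are assumptions for illustration. Comments are not handled in this sketch.

```python
import re

# Representation numbers from the example; everything else is illustrative.
VARIABLE, CONSTANT, LABEL = 1, 2, 3
KEYWORDS = {"IF": 20, "THEN": 21}
OPERATORS = {"+": 4, "=": 10, ">": 15, ":": 26, ";": 27}

# Skip leading blanks, then match a name, an integer constant, or one symbol.
TOKEN_RE = re.compile(r"\s*(?:([A-Za-z][A-Za-z0-9]*)|(\d+)|(.))")


def scan(text):
    """Return a list of (token, representation number) pairs."""
    text = text.rstrip()
    tokens = []
    position = 0
    while position < len(text):
        match = TOKEN_RE.match(text, position)
        name, number, symbol = match.groups()
        position = match.end()
        if name:
            tokens.append((name, KEYWORDS.get(name, VARIABLE)))
        elif number:
            tokens.append((number, CONSTANT))
        else:
            if symbol not in OPERATORS:
                raise ValueError(f"unexpected character {symbol!r}")
            # Assumption: a name immediately followed by ':' is a label.
            if symbol == ":" and tokens and tokens[-1][1] == VARIABLE:
                tokens[-1] = (tokens[-1][0], LABEL)
            tokens.append((symbol, OPERATORS[symbol]))
    return tokens


tokens = scan("TEST: IF A > B THEN X = Y;")
```

Blanks never reach the token list because the pattern consumes them before each token is matched.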

Certain programming languages allow the continuation of statements over multiple lines. Lexical analyzers must then handle the input processing of such multiple-line statements. Also, some scanners place constants, labels, and variable names in appropriate tables. A table entry for a variable, for example, may contain its name, type (e.g., REAL, INTEGER, or BOOLEAN), object program address, value, and line in which it is declared.

The lexical analyzer supplies tokens to the syntax analyzer. These tokens may take the form of a pair of items. The first item gives the address or location of the token in some symbol table. The second item is the representation number of the token. Such an approach offers a distinct advantage to the syntax analyzer; namely, all tokens are represented by fixed-length information: an address (or pointer) and an integer. Chap. 4 describes the scanning process in more detail.

The syntax analyzer is much more complex than the lexical analyzer. Its function is to take the source program (in the form of tokens) from the lexical analyzer and determine the manner in which it is to be decomposed into its constituent parts. That is, the syntax analyzer determines the overall structure of the source program. This process is analogous to determining the structure of a sentence in the English language. In such an instance we are interested in identifying certain classes such as "subject," "predicate," "verb," "noun," and "adjective."

In syntax analysis we are concerned with grouping tokens into larger syntactic classes such as expression, statement, and procedure. The syntax analyzer (or parser) outputs a syntax tree (or its equivalent) in which its leaves are the tokens and every nonleaf node represents a syntactic class type. For example, an analysis of the source statement

(A + B)*(C + D)

can produce the syntactic classes (factor), (term), and (expression) as exhibited in the syntax tree given in Fig. 1-4. The syntax-tree approach is described in Chap. 2, where a set of rules known as a grammar is used to define precisely the source language. A grammar can be used by the syntax analyzer to determine the structure of the source program.

Figure 1-4 A syntax tree for the expression (A + B)*(C + D)

This recognition process is called parsing, and consequently we often refer to syntax analyzers as parsers. Several classes of parsers are discussed in detail in Chaps. 6 and 7.
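To make the idea concrete, here is a small recursive-descent sketch that builds a syntax tree for expressions such as (A + B)*(C + D). The grammar it assumes (expression is a sum of terms, term is a product of factors, factor is a name or a parenthesized expression), the nested-tuple tree representation, and all function names are illustrative choices, not the grammar or method developed in later chapters.

```python
def parse_expression(tokens):
    """Parse a token list and return a tree of (class, child, ...) tuples."""
    tree, rest = _expression(list(tokens))
    if rest:
        raise SyntaxError(f"unexpected token {rest[0]!r}")
    return tree


def _expression(tokens):
    node, tokens = _term(tokens)
    tree = ("expression", node)
    while tokens and tokens[0] == "+":
        right, tokens = _term(tokens[1:])
        tree = ("expression", tree, "+", right)
    return tree, tokens


def _term(tokens):
    node, tokens = _factor(tokens)
    tree = ("term", node)
    while tokens and tokens[0] == "*":
        right, tokens = _factor(tokens[1:])
        tree = ("term", tree, "*", right)
    return tree, tokens


def _factor(tokens):
    if not tokens:
        raise SyntaxError("unexpected end of input")
    head, rest = tokens[0], tokens[1:]
    if head == "(":
        inner, rest = _expression(rest)
        if not rest or rest[0] != ")":
            raise SyntaxError("expected ')'")
        return ("factor", "(", inner, ")"), rest[1:]
    if head.isalnum():
        return ("factor", head), rest
    raise SyntaxError(f"unexpected token {head!r}")


def leaves(tree):
    """Collect the leaves (tokens) of the tree from left to right."""
    if isinstance(tree, str):
        return [tree]
    result = []
    for child in tree[1:]:  # tree[0] is the syntactic class name
        result.extend(leaves(child))
    return result


tree = parse_expression(list("(A+B)*(C+D)"))
```

Every nonleaf node carries a syntactic class name, and reading the leaves from left to right recovers the original token sequence.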

The syntax tree produced by the syntax analyzer is used by the semantic analyzer. The function of the semantic analyzer is to determine the meaning (or semantics) of the source program. Although it is conceptually desirable to separate the syntax of a source program from its semantics, the syntax and semantic analyzers work in close cooperation. Nevertheless, the semantic-analysis process is a different and unique process in a compiler. For an expression such as (A + B)*(C + D), for example, the semantic analyzer must determine what actions are specified by the arithmetic operators of addition and multiplication. When the parser recognizes an operator such as "+" or "*," it invokes a semantic routine which specifies the action to be performed. This routine may check that the two operands to be added have been declared, that they have the same type (if not, the routine would probably make them the same), and that both operands have values. The semantic analyzer often interacts with the various tables of the compiler in performing its task.

The semantic-analyzer actions may involve the generation of an intermediate form of source code. For the expression (A + B) * (C + D), the intermediate source code might be the following set of quadruples:

(+, A, B, T1)

(+, C, D, T2)

(*, T1, T2, T3)

where (+, A, B, T1) is interpreted to mean "add A and B and place the result in temporary T1," (+, C, D, T2) is interpreted to mean "add C and D and place this result in T2," and (*, T1, T2, T3) is interpreted to mean "multiply T1 and T2 and place the result in T3." The exact form of the intermediate source language used depends, to a large extent, on how it is to be processed during the synthesis task. An infix expression may be converted to an intermediate form called Polish notation. Using this approach, the infix expression (A + B)*(C + D) would be converted to the equivalent suffix-Polish expression AB + CD + *. The latter expression contains the arithmetic operators in the order in which they are to be executed. Another type of intermediate form of source language can be a syntax tree which represents the parse of the source program. (See Chap. 10.)
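Both intermediate forms can be derived from a tree by a simple walk, as the following sketch suggests. The tree representation (nested `(operator, left, right)` tuples with names as leaves) and the function names are assumptions made for this illustration.

```python
def to_suffix(tree):
    """Return the suffix-Polish token list for an (op, left, right) tree."""
    if isinstance(tree, str):
        return [tree]
    op, left, right = tree
    return to_suffix(left) + to_suffix(right) + [op]


def to_quadruples(tree):
    """Return quadruples (op, operand1, operand2, result) in execution order."""
    quadruples = []

    def walk(node):
        if isinstance(node, str):
            return node
        op, left, right = node
        first = walk(left)
        second = walk(right)
        temporary = f"T{len(quadruples) + 1}"  # next unused temporary name
        quadruples.append((op, first, second, temporary))
        return temporary

    walk(tree)
    return quadruples


tree = ("*", ("+", "A", "B"), ("+", "C", "D"))
suffix = to_suffix(tree)          # ['A', 'B', '+', 'C', 'D', '+', '*']
quadruples = to_quadruples(tree)  # three quadruples ending in T3
```

In both cases the operators appear in the order in which they are to be executed, because each operator is emitted only after its operands have been processed.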

The output of the semantic analyzer is passed on to the code generator. At this point the intermediate form of the source-language program is usually translated to either assembly language or machine language. As an example, the translation of the three quadruples for the previous expression can yield the following sequence of single-address, single-accumulator assembly-language instructions:

LDA A Load the contents of A into the accumulator.

ADD B Add the contents of B to that of the accumulator.

STO T1 Store the accumulator contents in temporary storage T1.

LDA C Load the contents of C into the accumulator.

ADD D Add the contents of D to that of the accumulator.

STO T2 Store the accumulator contents in temporary storage T2.

LDA T1 Load the contents of T1 into the accumulator.

MUL T2 Multiply the contents of T2 by that of the accumulator.

STO T3 Store the accumulator contents in temporary storage T3.
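A straightforward way to obtain such a sequence is to expand every quadruple into a load, an operation, and a store. The sketch below assumes the quadruple layout (operator, operand, operand, result) and the four mnemonics used in the listing; the name `generate` is hypothetical.

```python
# Mnemonics as used in the listing above.
MNEMONIC = {"+": "ADD", "*": "MUL"}


def generate(quadruples):
    """Expand each quadruple into three single-address instructions."""
    code = []
    for op, left, right, result in quadruples:
        code.append(f"LDA {left}")              # first operand into accumulator
        code.append(f"{MNEMONIC[op]} {right}")  # combine with second operand
        code.append(f"STO {result}")            # save the result
    return code


code = generate([
    ("+", "A", "B", "T1"),
    ("+", "C", "D", "T2"),
    ("*", "T1", "T2", "T3"),
])
```

This naive scheme always emits three instructions per quadruple, which leaves room for the improvements a code optimizer can make.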

The topic of code generation is presented in Chap. 11. The output of the code generator is passed on to a code optimizer. This process is present in more sophisticated compilers. Its purpose is to produce a more efficient object program. Certain optimizations that are possible at a local level include the evaluation of constant expressions, the use of certain operator properties such as associativity, commutativity, and distributivity, and the detection of common subexpressions. Because of the commutativity of the multiplication operator, the previous assembly code can be reduced to the following:

LDA A

ADD B

STO T1

LDA C

ADD D

MUL T1

STO T2

Note that the expression evaluated by this code is (C + D) * (A + B).
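One way to arrive at a reduction of this kind is to track which value the accumulator currently holds and to swap the operands of a commutative operator when the second operand is already there. The sketch below is an illustration under that assumption, not the algorithm of any particular compiler; it works on quadruples of the form (operator, operand, operand, result), and it keeps the name T3 for the final result rather than reusing T2 as the listing above does.

```python
MNEMONIC = {"+": "ADD", "*": "MUL"}
COMMUTATIVE = {"+", "*"}


def generate_optimized(quadruples):
    """Generate single-accumulator code, avoiding redundant loads and stores."""
    code = []
    accumulator = None  # name of the value currently held in the accumulator
    for index, (op, left, right, result) in enumerate(quadruples):
        if right == accumulator and left != accumulator and op in COMMUTATIVE:
            left, right = right, left  # a op b equals b op a
        if left != accumulator:
            code.append(f"LDA {left}")
        code.append(f"{MNEMONIC[op]} {right}")
        accumulator = result

        # Count how often the result must later be fetched from memory.
        later = quadruples[index + 1:]
        memory_uses = sum((l == result) + (r == result) for _, l, r, _ in later)
        if later:
            next_op, next_left, next_right, _ = later[0]
            if next_left == result or (
                next_right == result and next_op in COMMUTATIVE
            ):
                memory_uses -= 1  # the next quadruple reads the accumulator
        if not later or memory_uses > 0:
            code.append(f"STO {result}")
    return code


code = generate_optimized([
    ("+", "A", "B", "T1"),
    ("+", "C", "D", "T2"),
    ("*", "T1", "T2", "T3"),
])
```

For the three quadruples of the running example this yields seven instructions instead of nine: the store of T2 and the load of T1 both disappear.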

More global optimizations can also be performed. These include the single evaluation of multiple occurrences of identical subexpressions and removing statements that are invariant inside a loop and placing them outside of that loop. These kinds of optimizations are machine-independent. There are, however, certain machine-dependent optimizations which can also be performed. Optimal register allocation is an example of this kind of optimization. A good code optimizer can produce as good or even better code than an experienced assembly-language programmer. Optimization techniques are the topic of Chaps. 12 and 13.
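The single evaluation of identical subexpressions can be sketched on quadruples as follows. The sketch assumes straight-line code in which no operand is reassigned between the two occurrences, and the function name is hypothetical.

```python
def eliminate_common_subexpressions(quadruples):
    """Keep one quadruple per distinct (op, operand, operand) combination."""
    seen = {}    # (op, left, right) -> temporary that already holds the value
    alias = {}   # eliminated temporary -> temporary that replaces it
    result = []
    for op, left, right, target in quadruples:
        left = alias.get(left, left)     # use the surviving temporary names
        right = alias.get(right, right)
        key = (op, left, right)
        if key in seen:
            alias[target] = seen[key]    # value is already available
        else:
            seen[key] = target
            result.append((op, left, right, target))
    return result


# (A + B) * (A + B): the second addition is redundant.
reduced = eliminate_common_subexpressions([
    ("+", "A", "B", "T1"),
    ("+", "A", "B", "T2"),
    ("*", "T1", "T2", "T3"),
])
```

After the pass, the multiplication refers to T1 twice and the second addition is no longer computed.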

The model of a compiler given in Fig. 1-3 makes distinctions between its successive phases. In certain compilers, however, some of these phases are combined. In our discussion of this model we have omitted the details of how the five processes interact with each other. Let us examine some possible interactions between the lexical and syntax analyzers. One possibility is that the scanner generates a token for the syntax analyzer for processing. The syntax analyzer then "calls" the scanner when the next token is required. Another possibility is for the scanner to produce all the tokens corresponding to the source program before passing control to the syntax analyzer. In this case the scanner has examined the entire source program; this is called a separate pass. Some compilers make as few as one pass while other compilers have been known to make more than 30 passes (e.g., some of IBM’s first PL/I compilers). Factors which influence the number of passes to be used in a particular compiler include the following:

1. Available memory

2. Speed and size of compiler

3. Speed and size of object program

4. Debugging features required

5. Error-detection and -recovery techniques desired

6. Number of people and time required to accomplish the compiler writing project
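The two scanner and parser arrangements described before this list can be contrasted in a short sketch. The blank-separated token format, the stand-in "parser" that merely counts statement terminators, and all names are assumptions made to keep the example small.

```python
def scanner(text):
    """Yield one token at a time; the consumer 'calls' it for the next token."""
    for token in text.split():
        yield token


def count_statements(tokens):
    """Stand-in for a syntax analyzer: consume tokens and count ';' symbols."""
    return sum(1 for token in tokens if token == ";")


source = "X = Y ; A = B ;"

# Interleaved operation: tokens are produced only when they are requested.
on_demand = count_statements(scanner(source))

# Separate pass: every token is produced before syntax analysis begins.
all_tokens = list(scanner(source))
separate_pass = count_statements(all_tokens)
```

The first arrangement never holds more than one token at a time, whereas the second needs storage for the entire token list but lets each phase run to completion on its own.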

Several educationally oriented or student compilers such as WATFIV and PL/C are essentially one-pass compilers. In these compilers very little (if any) code optimization is performed. The reason for this is the belief that programs will be compiled more times than executed. Such programs are often executed once and discarded. Also the semantic-analyzer and code-generation phases are invariably combined into one phase. Great emphasis is placed on debugging, error detection, error recovery, and diagnostic capabilities.

Certain source languages, however, cannot be compiled in a single pass. Compilers for PL/I and FORTRAN often contain many passes. In particular, the optimization phase may require that several passes of the source program (or its intermediate form) be made. In addition, the optimization phase is often spread throughout the other passes of the compilation process (e.g., certain constant folding, as described in Chap. 12, has been incorporated in the lexical analyzer of some compilers).

Other combinations are possible. Sometimes the syntax-analyzer, semantic-analyzer, and code-generation phases can be combined into a single phase.

An aspect of compiler construction which was omitted in Fig. 1-3 deals with the area of error detection and error recovery. Error recovery is most closely associated with the syntax-analysis phase. The aim of error recovery is to prolong the compilation life of the program as long as possible before the compiler "gives up" on the source program. A strategy that is often adopted is that if at all possible, the object program is executed. This approach can reduce the number of times a source program has to be compiled before becoming error-free. Some error-recovery approaches insert and/or discard tokens in attempting to correct the portion of the source program which is syntactically incorrect. We attach significant importance to this subject in this text. In particular, Chap. 5 introduces the general notions of error detection and error recovery. Also, particular strategies for different parsing techniques are illustrated in Chaps. 6 and 7.
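As a simple illustration of recovery by discarding tokens, the sketch below checks a sequence of statements and, on an error, skips ahead to the next semicolon so that later statements are still examined. The statement form (`name = name ;`), the message text, and the function name are assumptions; the strategies presented in later chapters are considerably more refined.

```python
def check_statements(tokens):
    """Check 'name = name ;' statements, resuming after ';' on an error."""
    errors = []
    position = 0
    statement = 1
    while position < len(tokens):
        candidate = tokens[position:position + 4]
        well_formed = (
            len(candidate) == 4
            and candidate[0].isalnum()
            and candidate[1] == "="
            and candidate[2].isalnum()
            and candidate[3] == ";"
        )
        if well_formed:
            position += 4
        else:
            errors.append(f"statement {statement}: syntax error")
            # Discard tokens up to and including the next ';'.
            while position < len(tokens) and tokens[position] != ";":
                position += 1
            position += 1
        statement += 1
    return errors


errors = check_statements("X = Y ; A = = B ; C = D ;".split())
```

Because analysis resumes after the faulty statement, a single compilation can report errors in several statements instead of stopping at the first one.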

Another aspect of compiling which has been ignored in the current discussion deals with the various symbol tables that must be created and maintained during the executions of the required object program. The topic of symbol tables is presented in Chap. 8. Also, certain language constructs in the source language imply that several data structures must be stored and maintained in the memory of the computer. The design and implementation of these structures is called run-time organization and implementation or storage management. For block-structured languages, string-oriented languages such as SNOBOL, and languages which permit the declaration and manipulation of programmer-defined data structures, the run-time considerations are very significant and complex. Details of these problems are dealt with in Chap. 9.

In closing, a comment on the relative difficulty of implementing each of the phases in Fig. 1-3 is in order. The lexical-analysis phase is perhaps the most straightforward. Next in difficulty comes the syntactic-analysis or parsing phase. A lot of research, based on formal language theory, has been done on these two phases. As a result, the scanning and parsing phases can be largely automated. Chaps. 6 and 7, on the other hand, describe the theory of parsing. The real difficulties in compiler construction are in the semantic-analysis, code-generation, and code-optimization phases. Another problem area is the portability of programs. These difficulties, which are often language- and machine-dependent, are examined in Chaps. 10, 11, 12, and 13.

Several attempts have been made at automating compiler construction. Some of these efforts have enjoyed limited success. These translator-writing or compiler-compiler systems are briefly discussed in Chap. 14.