This section introduces the concept of QoS and discusses the four main issues in a converged network that have QoS implications, as well as the Cisco IP QoS mechanisms and best practices to deal with those issues. This section also introduces the three steps in implementing a QoS policy on a network.

Converged Network Issues Related to QoS

A converged network supports different types of applications, such as voice, video, and data, simultaneously over a common infrastructure. Accommodating applications that have different sensitivities and requirements is a challenging task for network engineers.

The acceptable end-to-end delay for Voice over IP (VoIP) packets is 150 to 200 milliseconds (ms). Also, the delay variation or jitter among the VoIP packets must be limited so that the buffers at the receiving end do not become exhausted, causing breakup in the audio flow. In contrast, a data application such as a file download from an FTP site does not have such a stringent delay requirement, and jitter does not pose a problem for this type of application either. When numerous active VoIP and data applications exist, mechanisms must be put in place so that while critical applications function properly, a reasonable number of voice applications can remain active and function with good quality (with low delay and jitter) as well.

Many data applications are TCP-based. If a TCP segment is dropped, the source retransmits it when the timeout period expires without an acknowledgement for that segment. Therefore, TCP-based applications have some tolerance to packet drops. The tolerance of video and voice applications toward data loss is minimal. As a result, the network must have mechanisms in place so that at times of congestion, packets encapsulating video and voice receive priority treatment and are not dropped.

Network outages affect all applications and render them disabled. However, well-designed networks have redundancy built in, so that when a failure occurs, the network can reroute packets through alternate (redundant) paths until the failed components are repaired. The total time it takes to notice the failure, compute alternate paths, and start rerouting the packets must be short enough for the voice and video applications not to suffer and not to annoy the users. Again, data applications usually do not expect the network recovery to be as fast as video and voice applications expect it to be. Without redundancy and fast recovery, network outage is unacceptable, and mechanisms must be put in place to avoid it.

Based on the preceding information, you can conclude that four major issues and challenges face converged enterprise networks:

■ Available bandwidth—Many simultaneous data, voice, and video applications compete over the limited bandwidth of the links within enterprise networks.

■ End-to-end delay—Many actions and factors contribute to the total time it takes for data or voice packets to reach their destination. For example, compression, packetization, queuing, serialization, propagation, processing (switching), and decompression all contribute to the total delay in VoIP transmission.

■ Delay variation (jitter)—Based on the amount of concurrent traffic and activity, plus the condition of the network, packets from the same flow might experience a different amount of delay as they travel through the network.

■ Packet loss—If the volume of traffic exhausts the capacity of an interface, link, or device, packets might be dropped. Sudden bursts or failures are usually responsible for this situation.

The sections that follow explore these challenges in detail.

Available Bandwidth

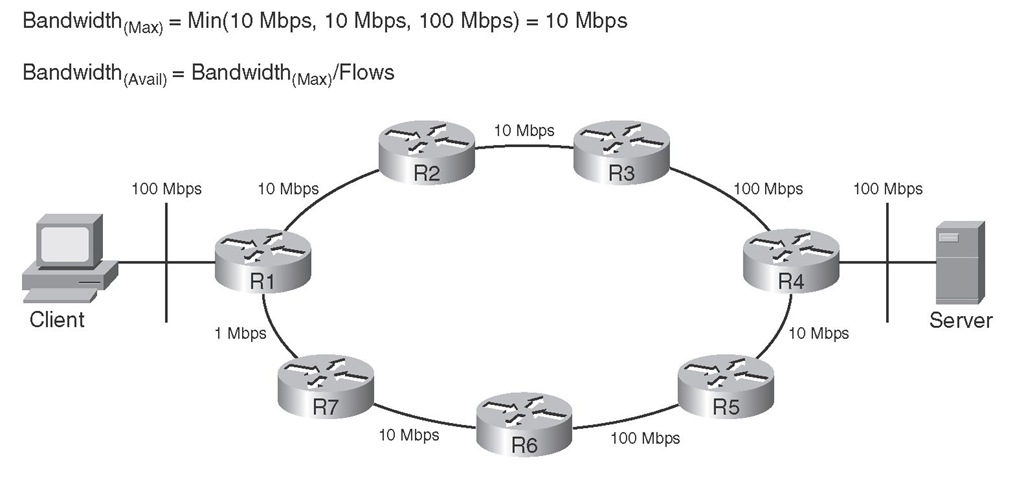

Packets usually flow through the best path from source to destination. The maximum bandwidth of that path is equal to the bandwidth of the link with the smallest bandwidth. Figure 2-1 shows that R1-R2-R3-R4 is the best path between the client and the server. On this path, the maximum bandwidth is 10 Mbps because that is the bandwidth of the link with the smallest bandwidth on that path. The average available bandwidth is the maximum bandwidth divided by the number of flows.

Figure 2-1 Maximum Bandwidth and Average Available Bandwidth Along the Best Path (R1-R2-R3-R4) Between the Client and Server
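The two bandwidth rules above reduce to a minimum and a division. The following is a minimal sketch; the link speeds in the list are hypothetical (only the 10-Mbps bottleneck comes from the text), and the function names are chosen for illustration.

```python
def path_bandwidth_mbps(link_speeds_mbps):
    """The maximum bandwidth of a path equals that of its slowest link."""
    return min(link_speeds_mbps)


def average_available_bandwidth_mbps(link_speeds_mbps, flow_count):
    """Maximum path bandwidth divided by the number of flows sharing it."""
    if flow_count < 1:
        raise ValueError("flow_count must be at least 1")
    return path_bandwidth_mbps(link_speeds_mbps) / flow_count


# Hypothetical speeds for the links along R1-R2-R3-R4; 10 Mbps is the bottleneck.
best_path_links = [100, 10, 100]
bottleneck = path_bandwidth_mbps(best_path_links)
per_flow = average_available_bandwidth_mbps(best_path_links, flow_count=4)
print(f"bottleneck: {bottleneck} Mbps, average per flow: {per_flow} Mbps")
```

The average is only a rough planning figure; real flows rarely share a link evenly.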

Lack of sufficient bandwidth causes delay, packet loss, and poor performance for applications. The users of real-time applications (voice and video) detect this right away. You can tackle the bandwidth availability problem in numerous ways:

■ Increase (upgrade) link bandwidth—This is effective, but it is costly.

■ Classify and mark traffic and deploy proper queuing mechanisms—Forward important packets first.

■ Use compression techniques—Layer 2 payload compression, TCP header compression, and cRTP are some examples.

Increasing link bandwidth is undoubtedly beneficial, but it cannot always be done quickly, and it has cost implications. Those who just increase bandwidth when necessary notice that their solution is not very effective at times of heavy traffic bursts. However, in certain scenarios, increasing link bandwidth might be the first action necessary (but not the last).

Classification and marking of the traffic, combined with congestion management, is an effective approach to providing adequate bandwidth for enterprise applications.

Link compression, TCP header compression, and RTP header compression are all different compression techniques that can reduce the bandwidth consumed on certain links, and therefore increase throughput. Cisco IOS supports the Stacker and Predictor Layer 2 compression algorithms that compress the payload of the packet. Usage of hardware compression is always preferred over software-based compression. Because compression is CPU intensive and imposes yet another delay, it is usually recommended only on slow links.

End-to-End Delay

There are different types of delay from source to destination. End-to-end delay is the sum of those different delay types that affect the packets of a certain flow or application. Four of the important types of delay that make up end-to-end delay are as follows:

■ Processing delay

■ Queuing delay

■ Serialization delay

■ Propagation delay

Processing delay is the time it takes for a device such as a router or Layer 3 switch to perform all the tasks necessary to move a packet from the input (ingress) interface to the output (egress) interface. The CPU type, CPU utilization, switching mode, router architecture, and configured features on the device affect the processing delay. For example, packets that are distributed-CEF switched on a versatile interface processor (VIP) card cause no CPU interrupts.

Queuing delay is the amount of time that a packet spends in the output queue of a router interface. The busyness of the router, the number of packets waiting in the queue, the queuing discipline, and the interface bandwidth all affect the queuing delay.

Serialization delay is the time it takes to send all the bits of a frame to the physical medium for transmission across the physical layer. The time it takes for the bits of that frame to cross the physical link is called the propagation delay. Naturally, the propagation delay across different media can be significantly different. For instance, the propagation delay on a high-speed optical connection such as OC-192 is significantly lower than the propagation delay on a satellite-based link.

NOTE In best-effort networks, while serialization and propagation delays are fixed, the processing and queuing delays are variable and unpredictable.

Other types of delay exist, such as WAN delay, compression and decompression delay, and de-jitter delay.
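To make the delay components concrete, the sketch below computes serialization and propagation delay and adds them to processing and queuing delay. The signal speed of 200,000 km/s (a common approximation for fiber) and all sample values are assumptions, not figures from this section.

```python
def serialization_delay_ms(frame_bytes, link_bps):
    """Time to clock all bits of a frame onto the physical medium."""
    return frame_bytes * 8 / link_bps * 1000


def propagation_delay_ms(distance_km, speed_km_per_s=200_000):
    """Time for the bits to cross the link; the default speed is an assumption."""
    return distance_km * 1000 / speed_km_per_s


def end_to_end_delay_ms(processing_ms, queuing_ms, serialization_ms, propagation_ms):
    """End-to-end delay as the sum of the four delay types discussed above."""
    return processing_ms + queuing_ms + serialization_ms + propagation_ms


# A 1500-byte frame on a 64-kbps link versus a 10-Mbps link.
slow_link = serialization_delay_ms(1500, 64_000)
fast_link = serialization_delay_ms(1500, 10_000_000)
print(f"serialization: {slow_link} ms at 64 kbps, {fast_link} ms at 10 Mbps")
```

The comparison shows why serialization delay matters mostly on slow links, while propagation delay depends on distance and medium rather than on link speed.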

Delay Variation

The variation in delays experienced by the packets of the same flow is called delay variation or jitter. Packets of the same flow might not arrive at the destination at the same rate that they were released. These packets, individually and independent from each other, are processed, queued, dequeued, and so on. Therefore, they might arrive out of sequence, and their end-to-end delays might vary. For voice and video packets, it is essential that at the destination point, the packets are released to the application in the correct order and at the same rate that they were released at the source. The de-jitter buffer serves that purpose. As long as the delay variation is not too much, at the destination point, the de-jitter buffer holds packets, sorts them, and releases them to the application based on the Real-Time Transport Protocol (RTP) time stamp on the packets. Because the buffer compensates the jitter introduced by the network, it is called the de-jitter buffer.
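The reordering role of the de-jitter buffer can be sketched as a small priority queue keyed on the RTP time stamp. This is a simplified illustration: a real de-jitter buffer also paces the release of packets against a playout clock, which is omitted here, and the class name and sample values are hypothetical.

```python
import heapq


class DeJitterBuffer:
    """Holds packets briefly and releases them in RTP time stamp order."""

    def __init__(self):
        self._heap = []  # min-heap keyed on the RTP time stamp

    def push(self, rtp_timestamp, payload):
        heapq.heappush(self._heap, (rtp_timestamp, payload))

    def release(self):
        """Return every buffered packet, lowest RTP time stamp first."""
        ordered = []
        while self._heap:
            ordered.append(heapq.heappop(self._heap))
        return ordered


def delay_variation_ms(delays_ms):
    """Spread between the slowest and fastest packet of one flow."""
    return max(delays_ms) - min(delays_ms)


# Packets arrive out of sequence; the buffer restores the original order.
dejitter = DeJitterBuffer()
for timestamp, payload in [(160, "frame-2"), (0, "frame-1"), (320, "frame-3")]:
    dejitter.push(timestamp, payload)
ordered_packets = dejitter.release()
print(ordered_packets)
```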

Average queue length, packet size, and link bandwidth all contribute to delay, mainly through queuing and serialization. You can reduce delay by doing some or all of the following:

■ Increase (upgrade) link bandwidth—This is effective because queue sizes drop and queuing delays shrink. However, upgrading link capacity (bandwidth) takes time and has cost implications, rendering this approach unrealistic at times.

■ Prioritize delay-sensitive packets and forward important packets first—This might require packet classification or marking, but it certainly requires deployment of a queuing mechanism such as weighted fair queuing (WFQ), class-based weighted fair queuing (CBWFQ), or low-latency queuing (LLQ). This approach is not as costly as the previous approach, which is a bandwidth upgrade.

■ Reprioritize packets—In certain cases, the packet priority (marking) has to change as the packet enters or leaves a device. When packets leave one domain and enter another, this priority change might have to happen. For instance, the packets that leave an enterprise network with critical marking and enter a provider network might have to be reprioritized (remarked) to best effort if the enterprise is only paying for best effort service.

■ Layer 2 payload compression—Layer 2 compression reduces the size of the IP packet (or any other packet type that is the frame’s payload), and it frees up available bandwidth on that link. Because complexity and delay are associated with performing the compression, you must ensure that the delay saved by compression exceeds the delay introduced by performing it. Note that payload compression leaves the frame header intact; this is required in cases such as Frame Relay connections.

■ Use header compression—RTP header compression (cRTP) is effective for VoIP packets, because it greatly improves the overhead-to-payload ratio. cRTP is recommended on slow (less than 2 Mbps) links. Header compression is less CPU-intensive than Layer 2 payload compression.
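The effect of header compression on the overhead-to-payload ratio can be checked with simple arithmetic. The sketch below assumes values that are not stated in this section: a 40-byte IP/UDP/RTP header (20 + 8 + 12), a cRTP-compressed header of 2 bytes, and a G.729-style stream with 20-byte payloads at 50 packets per second. Layer 2 overhead is ignored.

```python
def voip_bandwidth_bps(payload_bytes, header_bytes, packets_per_second):
    """Layer 3 bandwidth of one voice stream for a given header size."""
    return (payload_bytes + header_bytes) * 8 * packets_per_second


def overhead_to_payload_ratio(header_bytes, payload_bytes):
    """Header bytes carried per payload byte."""
    return header_bytes / payload_bytes


PAYLOAD_BYTES = 20      # assumed voice payload per packet
FULL_HEADER_BYTES = 40  # IP (20) + UDP (8) + RTP (12)
CRTP_HEADER_BYTES = 2   # assumed compressed header size
PACKETS_PER_SECOND = 50

uncompressed = voip_bandwidth_bps(PAYLOAD_BYTES, FULL_HEADER_BYTES, PACKETS_PER_SECOND)
compressed = voip_bandwidth_bps(PAYLOAD_BYTES, CRTP_HEADER_BYTES, PACKETS_PER_SECOND)
print(f"without cRTP: {uncompressed} bps, with cRTP: {compressed} bps")
```

Under these assumptions the header shrinks from twice the payload size to a tenth of it, which is why the gain is most visible on slow links carrying many small voice packets.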

Packet Loss

Packet loss occurs when a network device such as a router has no more buffer space on an interface (output queue) to hold the new incoming packets and it ends up dropping them. A router may drop some packets to make room for higher priority ones. Sometimes an interface reset causes packets to be flushed and dropped. Packets are dropped for other reasons, too, including interface overrun.

TCP resends the dropped packets; meanwhile, it reduces the size of the send window and slows down at times of congestion and high network traffic volume. If a packet belonging to a UDP-based file transfer (such as TFTP) is dropped, the whole file might have to be resent. This creates even more traffic on the network, and it might annoy the user. Application flows that do not use TCP, and therefore are more drop-sensitive, are called fragile flows.

During a VoIP call, packet loss results in audio breakup. A video conference will have jerky pictures and its audio will be out of sync with the video if packet drops or extended delays occur. When network traffic volume and congestion are heavy, applications experience packet drops, extended delays, and jitter. Only with proper QoS configuration can you avoid these problems or at least limit them to low-priority packets.

On a Cisco router, at times of congestion and packet drops, you can enter the show interface command and observe that on some or all interfaces, certain counters such as those in the following list have incremented more than usual (baseline):

■ Output drop—This counter shows the number of packets dropped because the output queue of the interface was full at the time of their arrival. This is also called tail drop.

■ Input queue drop—If the CPU is overutilized and cannot process incoming packets, the input queue of an interface might become full, and the number of packets dropped in this scenario will be reported as input queue drops.

■ Ignore—This is the number of frames ignored due to lack of buffer space.

■ Overrun—The CPU must allocate buffer space so that incoming packets can be stored and processed in turn. If the CPU becomes too busy, it might not allocate buffer space quickly enough and end up dropping packets. The number of packets dropped for this reason is called overruns.

■ Frame error—Frames with cyclic redundancy check (CRC) error, runt frames (smaller than minimum standard), and giant frames (larger than the maximum standard) are usually dropped, and their total is reported as frame errors.

You can use many methods, all components of QoS, to tackle packet loss. Some methods protect all applications from packet loss, whereas others protect only specific classes of packets. The following are examples of approaches that can reduce packet loss:

■ Increase (upgrade) link bandwidth—Higher bandwidth results in faster packet departures from interface queues. If full queue scenarios are prevented, so are tail drops and random drops (discussed later).

■ Increase buffer space—Network engineers must examine the buffer settings on the interfaces of network devices such as routers to see if their sizes and settings are appropriate. When dealing with packet drop issues, it is worth considering an increase of interface buffer space (size). A larger buffer space allows better handling of traffic bursts.

■ Provide guaranteed bandwidth—Certain tools and features such as CBWFQ and LLQ allow the network engineers to reserve certain amounts of bandwidth for a specific class of traffic. As long as enough bandwidth is reserved for a class of traffic, packets of such a class will not become victims of packet drop.

■ Perform congestion avoidance—To prevent a queue from becoming full and starting tail drop, you can deploy random early detection (RED) or weighted random early detection (WRED) to drop packets from the queue before it becomes full. You might wonder what the merit of that deployment would be. When packets are dropped before a queue becomes full, the packets can be dropped from certain flows only; tail drop loses packets from all flows.

With WRED, the flows that lose packets first are the lowest priority ones. It is hoped that the highest priority packet flows will not have drops. Drops due to deployment of RED/WRED slow TCP-based flows, but they have no effect on UDP-based flows.
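The early-drop behavior can be sketched with the classic RED drop curve: no drops below a minimum threshold, a linearly rising drop probability between the minimum and maximum thresholds, and a full drop at or above the maximum. The per-priority profiles below are hypothetical values chosen for illustration, not settings taken from this section.

```python
def wred_drop_probability(avg_queue_depth, min_threshold, max_threshold, max_drop_probability):
    """Probability of dropping an arriving packet for one WRED profile."""
    if avg_queue_depth < min_threshold:
        return 0.0  # queue is short: never drop
    if avg_queue_depth >= max_threshold:
        return 1.0  # queue is effectively full: drop everything
    span = max_threshold - min_threshold
    return max_drop_probability * (avg_queue_depth - min_threshold) / span


# Hypothetical profiles: (min threshold, max threshold, max drop probability).
# The low-priority profile starts dropping earlier than the high-priority one.
PROFILES = {
    "low-priority": (20, 40, 0.1),
    "high-priority": (32, 40, 0.1),
}

for name, (low, high, max_p) in PROFILES.items():
    probability = wred_drop_probability(30, low, high, max_p)
    print(f"{name}: drop probability at average depth 30 is {probability:.3f}")
```

At an average depth of 30 packets, only the low-priority profile has started dropping, which matches the idea that the lowest priority flows lose packets first.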

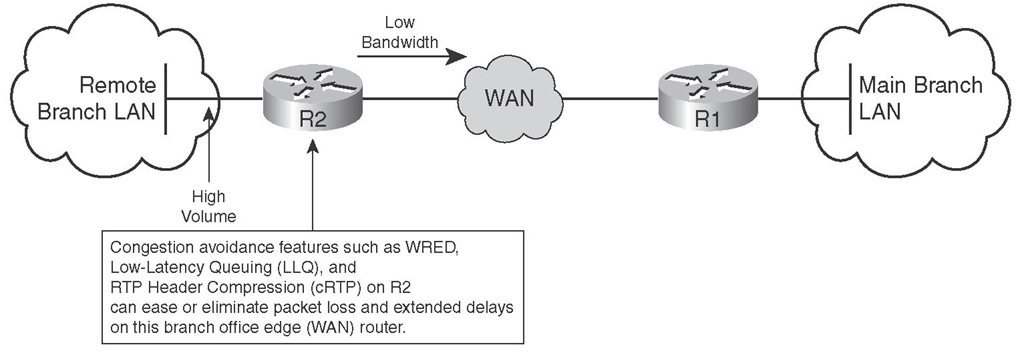

Most companies that connect remote sites over a WAN connection transfer both TCP- and UDP-based application data between those sites. Figure 2-2 displays a company that sends VoIP traffic as well as file transfer and other application data over a WAN connection between its remote branch and central main branch. Note that, at times, the collection of traffic flows from the remote branch intending to cross R2 and the WAN connection (to go to the main central branch) can reach high volumes.

Figure 2-2 Solutions for Packet Loss and Extended Delay

Figure 2-2 displays the stated scenario that leads to extended delay and packet loss. Congestion avoidance tools trigger TCP-based applications to throttle back before queues and buffers become full and tail drops start. Because congestion avoidance features such as WRED do not trigger UDP-based applications (such as VoIP) to slow down, for those types of applications, you must deploy other features, including compression techniques such as cRTP and advanced queuing such as LLQ.

Definition of QoS and the Three Steps to Implementing It

Following is the most recent definition that Cisco educational material provides for QoS:

QoS is the ability of the network to provide better or special service to a set of users or applications or both to the detriment of other users or applications or both.

The earliest versions of QoS tools protected data against data. For instance, priority queuing made sure packets that matched an access list always had the right of way on an egress interface. Another example is WFQ, which prevents small packets from waiting too long behind large packets on an egress interface outbound queue. When VoIP started to become a serious technology, QoS tools were created to protect voice from data. An example of such a tool is RTP priority queue.

RTP priority queue is reserved for RTP (encapsulating voice payload). RTP priority queuing ensures that voice packets receive right of way. If there are too many voice streams, data applications begin experiencing too much delay and too many drops. Strict priority queue (incorporated in LLQ) was invented to limit the bandwidth of the priority queue, which is essentially dedicated to voice packets. This technique protects data from voice; too many voice streams do not downgrade the quality of service for data applications. However, what if there are too many voice streams? All the voice calls and streams must share the bandwidth dedicated to the strict priority queue that is reserved for voice packets. If the number of voice calls exceeds the allocated resources, the quality of those calls will drop. The solution to this problem is call admission control (CAC). CAC prevents the number of concurrent voice calls from going beyond a specified limit and hurting the quality of the active calls. CAC protects voice from voice. Almost all the voice requirements apply to video applications, too; however, the video applications are more bandwidth hungry.
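The idea that CAC protects voice from voice can be sketched as a simple admission counter. The class name, the 100-kbps priority queue, and the 24-kbps per-call figure are all hypothetical; real CAC mechanisms are configured on call-control or gateway devices and are more involved.

```python
class CallAdmissionControl:
    """Admits calls only while the strict priority queue can carry them."""

    def __init__(self, priority_queue_kbps, per_call_kbps):
        # Whole number of calls that fit in the reserved bandwidth.
        self.max_calls = priority_queue_kbps // per_call_kbps
        self.active_calls = 0

    def request_call(self):
        """Return True and count the call if capacity remains, else reject it."""
        if self.active_calls < self.max_calls:
            self.active_calls += 1
            return True
        return False

    def end_call(self):
        if self.active_calls > 0:
            self.active_calls -= 1


# Hypothetical numbers: 100 kbps reserved for voice, 24 kbps per call.
cac = CallAdmissionControl(priority_queue_kbps=100, per_call_kbps=24)
results = [cac.request_call() for _ in range(5)]
print(results)
```

Rejecting the fifth call keeps the four active calls within the reserved bandwidth instead of degrading all five.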

Enterprise networks must support a variety of applications with diverse bandwidth, drop, delay, and jitter expectations. Network engineers, by using proper devices, Cisco IOS features, and configurations, can control the behavior of the network and make it provide predictable service to those applications. The existence of voice, video, and multimedia applications in general not only adds to the bandwidth requirements in networks but also adds to the challenges involved in having to provide granular and strictly controlled delay, jitter, and loss guarantees.

Implementing QoS

Implementing QoS involves three major steps:

Step 1 Identifying traffic types and their requirements

Step 2 Classifying traffic based on the requirements identified

Step 3 Defining policies for each traffic class

Even though many common applications and protocols exist among enterprise networks, within each network, the volumes and percentages of those traffic types vary. Furthermore, each enterprise might have its own unique application types in addition to the common ones. Therefore, the first step in implementing QoS in an enterprise is to study and discover the traffic types and define the requirements of each identified traffic type. If two, three, or more traffic types have identical importance and requirements, it is unnecessary to define that many traffic classes. Traffic classification, which is the second step in implementing QoS, will define a few traffic classes, not hundreds. The applications that end up in different traffic classes have different requirements; therefore, the network must provide them with different service types. The definition of how each traffic class is serviced is called the network policy. Defining and deploying the network QoS policy for each class is Step 3 of implementing QoS. The three steps of implementing QoS on a network are explained next.

Step 1: Identifying Traffic Types and Their Requirements

Identifying traffic types and their requirements, the first step in implementing QoS, is composed of the following elements or substeps:

■ Perform a network audit—It is often recommended that you perform the audit during the busy hour (BH) or congestion period, but it is also important that you run the audit at other times. Certain applications are run during slow business hours on purpose. There are scientific methods for identifying the busy network moments, for example, through statistical sampling and analysis, but the simplest method is to observe CPU and link utilizations and conduct the audit during the general peak periods.

■ Perform a business audit and determine the importance of each application—The business model and goals dictate the business requirements. From that, you can derive the definition of traffic classes and the requirements for each class. This step considers whether delaying or dropping packets of each application is acceptable. You must determine the relative importance of different applications.

■ Define the appropriate service levels for each traffic class—For each traffic class, within the framework of business objectives, a specific service level can define tangible resource availability or reservations. Guaranteed minimum bandwidth, maximum bandwidth, guaranteed end-to-end maximum delay, guaranteed end-to-end maximum jitter, and comparative drop preference are among the characteristics that you can define for each service level. The final service level definitions must meet business objectives and satisfy the comfort expectations of the users.

Step 2: Classifying Traffic Based on the Requirements Identified

The definition of traffic classes does not need to be general; it must include the traffic (application) types that were observed during the network audit step. You can classify tens or even hundreds of traffic variations into very few classes. The defined traffic classes must be in line with business objectives. The traffic or application types within the same class must have common technical and business requirements. The exceptions to this rule are the applications that have not been identified or scavenger-class traffic.

Voice traffic has specific requirements, and it is almost always in its own class. With Cisco LLQ, VoIP is assigned to a single class, and that class uses a strict priority queue (a priority queue with strict maximum bandwidth) on the egress interface of each router. Many case studies have shown the merits of using some or all of the following traffic classes within an enterprise network:

■ Voice (VoIP) class—Voice traffic has specific bandwidth requirements, and its delay and drops must be eliminated or at least minimized. Therefore, this class is the highest priority class but has limited bandwidth. VoIP packet loss should remain below 1% and the goal for its end-to-end delay must be 150 ms.

■ Mission-critical traffic class—Critical business applications are put in one or two classes. You must identify the bandwidth requirements for them.

■ Signaling traffic class—Signaling traffic, voice call setup and teardown for example, is often put in a separate class. This class has limited bandwidth expectations.

■ Transactional applications traffic class—These applications, if present, include interactive, database, and similar services that need special attention. You must also identify the bandwidth requirements for them. Enterprise Resource Planning (ERP) applications such as PeopleSoft and SAP are examples of these types of applications.

■ Best-effort traffic class—All the undefined traffic types are considered best effort and receive the remainder of bandwidth on an interface.

■ Scavenger traffic class—This class of applications will be assigned into one class and be given limited bandwidth. This class is considered inferior to the best-effort traffic class. Peer-to-peer file sharing applications are put in this class.
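Mapping many application types onto a few classes can be sketched as a lookup with a default. The application names below are hypothetical labels that a network audit might produce; only the class names and the rule that unidentified traffic falls into best effort come from the list above.

```python
# Hypothetical audit results mapped to the traffic classes listed above.
APPLICATION_CLASSES = {
    "voip-media": "voice",
    "order-processing": "mission-critical",
    "call-setup": "signaling",
    "erp": "transactional",
    "peer-to-peer": "scavenger",
}


def classify(application):
    """Return the traffic class; unidentified applications are best effort."""
    return APPLICATION_CLASSES.get(application, "best-effort")


for app in ["voip-media", "erp", "peer-to-peer", "web-browsing"]:
    print(f"{app} -> {classify(app)}")
```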

Step 3: Defining Policies for Each Traffic Class

After the traffic classes have been formed based on the network audit and business objectives, the final step of implementing QoS in an enterprise is to provide a network-wide definition for the QoS service level that must be assigned to each traffic class. This is called defining a QoS policy, and it might include having to complete the following tasks:

■ Setting a maximum bandwidth limit for a class

■ Setting a minimum bandwidth guarantee for a class

■ Assigning a relative priority level to a class

■ Applying congestion management, congestion avoidance, and many other advanced QoS technologies to a class

To provide an example, based on the traffic classes listed in the previous section, Table 2-2 defines a practical QoS policy.

Table 2-2 Defining QoS Policy for Set Traffic Classes

| Class | Priority | Queue Type | Min/Max Bandwidth | Special QoS Technology |
| --- | --- | --- | --- | --- |
| Voice | 5 | Priority | 1 Mbps Min, 1 Mbps Max | Priority queue |
| Business mission critical | 4 | CBWFQ | 1 Mbps Min | CBWFQ |
| Signaling | 3 | CBWFQ | 400 Kbps Min | CBWFQ |
| Transactional | 2 | CBWFQ | 1 Mbps Min | CBWFQ |
| Best-effort | 1 | CBWFQ | 500 Kbps Max | CBWFQ, CB-Policing |
| Scavenger | 0 | CBWFQ | 100 Kbps Max | CBWFQ + CB-Policing, WRED |
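A policy such as the one in Table 2-2 can be written down as data and sanity-checked before deployment. The sketch below encodes the table's bandwidth values in Kbps and verifies that the minimum guarantees fit on a link; the 4000-Kbps and 2000-Kbps link rates are hypothetical, and the check is an illustration rather than a rule stated in this section.

```python
# Bandwidth values from Table 2-2, in Kbps; None means no limit of that kind.
QOS_POLICY = [
    {"class": "voice", "priority": 5, "min_kbps": 1000, "max_kbps": 1000},
    {"class": "business mission critical", "priority": 4, "min_kbps": 1000, "max_kbps": None},
    {"class": "signaling", "priority": 3, "min_kbps": 400, "max_kbps": None},
    {"class": "transactional", "priority": 2, "min_kbps": 1000, "max_kbps": None},
    {"class": "best-effort", "priority": 1, "min_kbps": None, "max_kbps": 500},
    {"class": "scavenger", "priority": 0, "min_kbps": None, "max_kbps": 100},
]


def total_guaranteed_kbps(policy):
    """Sum of the minimum bandwidth guarantees across all classes."""
    return sum(entry["min_kbps"] for entry in policy if entry["min_kbps"] is not None)


def fits_on_link(policy, link_kbps):
    """True when the link can honor every minimum guarantee at once."""
    return total_guaranteed_kbps(policy) <= link_kbps


guaranteed = total_guaranteed_kbps(QOS_POLICY)
print(f"guaranteed: {guaranteed} Kbps")
print(f"fits on a 4000-Kbps link: {fits_on_link(QOS_POLICY, 4000)}")
print(f"fits on a 2000-Kbps link: {fits_on_link(QOS_POLICY, 2000)}")
```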