Congestion occurs when the rate at which traffic is switched to an interface (input) exceeds the rate at which that interface can send traffic out (output). Why would this happen? Sometimes traffic enters a device through a high-speed interface and must leave through a lower-speed interface; this can cause congestion on the lower-speed egress interface and is referred to as the speed mismatch problem. If traffic from many interfaces aggregates onto a single interface that does not have enough capacity, congestion is likely; this is called the aggregation problem. Finally, if the joining of multiple traffic streams causes congestion on an interface, it is referred to as the confluence problem.
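The speed mismatch and aggregation problems come down to simple arithmetic: whenever the combined input rate exceeds the egress rate, the difference accumulates in a queue. The following Python sketch assumes constant, fully loaded input rates (real traffic is bursty). The T1 egress speed, the number of access uplinks, and the helper names `backlog_growth_bps` and `seconds_to_fill` are hypothetical and chosen only for illustration.

```python
def backlog_growth_bps(ingress_rates_bps, egress_rate_bps):
    """Rate (bits per second) at which a queue builds up when the combined
    input rate exceeds the output rate; 0 means no congestion."""
    offered = sum(ingress_rates_bps)
    return max(0, offered - egress_rate_bps)


def seconds_to_fill(buffer_bytes, growth_bps):
    """Time until a buffer of the given size is full at the given growth rate."""
    if growth_bps == 0:
        return float("inf")  # the queue never grows, so it never fills
    return buffer_bytes * 8 / growth_bps


# Speed mismatch (hypothetical rates): Fast Ethernet (100 Mbps) in, T1 (1.544 Mbps) out.
mismatch = backlog_growth_bps([100_000_000], 1_544_000)
# Aggregation (hypothetical rates): 24 access uplinks at 10 Mbps each into one 100 Mbps link.
aggregation = backlog_growth_bps([10_000_000] * 24, 100_000_000)

print(mismatch)     # 98456000
print(aggregation)  # 140000000
```

Even a generous buffer fills in milliseconds at these rates, which is why queuing can only absorb temporary congestion.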

Figure 4-1 shows a distribution switch that is receiving traffic destined for the core from many access switches; congestion is likely to happen on interface Fa 0/1, which is the egress interface toward the core. Figure 4-1 also shows a router that is receiving traffic destined for a remote office through a Fast Ethernet interface. Because the egress interface toward the WAN and the remote office is a low-speed serial interface, congestion is likely on the router's Serial 0 interface.

Figure 4-1 Examples of Why Congestion Can Occur on Routers and Switches

A network device can react to congestion in several ways, some simple and some sophisticated. Over time, several queuing methods have been invented to perform congestion management. Queuing is a technique for dealing with temporary congestion; the solution for permanent congestion is often to increase capacity rather than to deploy queuing techniques. If packets arrive faster than they can depart, the excess packets are held and released later. The order in which the held packets are released depends on the queuing algorithm. If the queue gets full, newly arriving packets are dropped; this is called tail drop. To avoid tail drop, certain packets already held in the queue can be dropped so that others will not be; how the packets to be dropped are selected also depends on the queuing algorithm.

Queuing, as a congestion management technique, entails creating a few queues, assigning packets to those queues, and scheduling the departure of packets from those queues. The default queuing on most interfaces is FIFO; the exception is slow interfaces (2.048 Mbps and below), which use weighted fair queuing (WFQ) by default. To meet the delay, jitter, and loss requirements of real-time applications such as voice and video, you must employ more sophisticated queuing techniques.

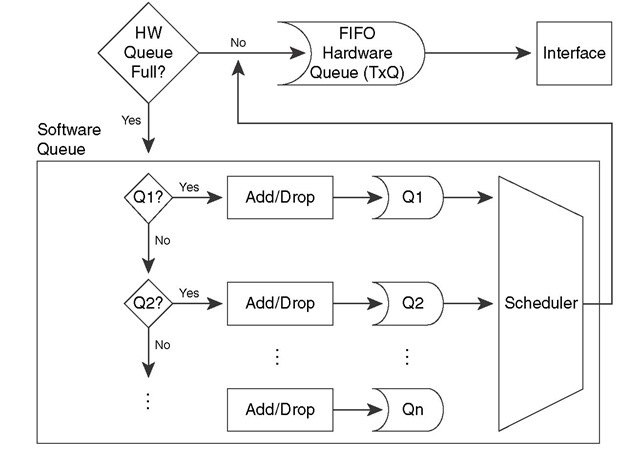
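The basic hold-and-release behavior, including tail drop, can be illustrated with a single bounded FIFO queue. This is a minimal Python sketch; the class name `TailDropFifo` and the capacity of three packets are hypothetical.

```python
from collections import deque


class TailDropFifo:
    """A single FIFO queue of fixed capacity that drops arrivals when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()
        self.dropped = []

    def enqueue(self, packet):
        if len(self.queue) >= self.capacity:
            self.dropped.append(packet)  # tail drop: the newest arrival is lost
            return False
        self.queue.append(packet)        # otherwise the packet is held
        return True

    def dequeue(self):
        # Release packets in arrival order (FIFO); None if the queue is empty.
        return self.queue.popleft() if self.queue else None


q = TailDropFifo(capacity=3)
for pkt in range(5):      # a burst of 5 packets arrives faster than it can be sent
    q.enqueue(pkt)
print(list(q.queue))      # [0, 1, 2]
print(q.dropped)          # [3, 4]
```

A FIFO queue treats every packet the same way, so a voice packet arriving in the burst would be dropped just as readily as a bulk-data packet.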

The queuing mechanism on each interface is composed of software and hardware components. If the hardware queue, also called the transmit queue (TxQ), is not congested (full), packets are not held in the software queue; they are switched directly to the hardware queue, from which they are quickly transmitted to the medium on a FIFO basis. If the hardware queue is congested, packets are held by the software queue, processed, and released to the hardware queue according to the software queuing discipline. The software queuing discipline could be FIFO, priority queuing (PQ), custom queuing (CQ), weighted round robin (WRR), or another queuing discipline.
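The bypass rule can be modeled in a few lines: a packet goes straight to the TxQ while it has room and is held in the software queue only when the TxQ is full. This Python sketch is a toy model, not router internals; the class `Interface`, its methods, and the `tx_ring_limit` attribute (named after the IOS command) are hypothetical, and a plain FIFO deque stands in for whatever software discipline is configured.

```python
from collections import deque


class Interface:
    """Toy interface with a FIFO hardware queue (TxQ) and a software queue
    that is used only while the TxQ is full."""

    def __init__(self, tx_ring_limit):
        self.tx_ring_limit = tx_ring_limit  # hardware queue capacity, in packets
        self.tx_ring = deque()              # hardware queue: always FIFO
        self.software_queue = deque()       # stands in for FIFO, PQ, CQ, WRR, ...

    def send(self, packet):
        # Bypass software queuing when the TxQ has room (and nothing is already
        # waiting in software, so arrival order is preserved).
        if len(self.tx_ring) < self.tx_ring_limit and not self.software_queue:
            self.tx_ring.append(packet)
        else:
            self.software_queue.append(packet)  # TxQ full: hold in software

    def transmit_one(self):
        """Put one packet on the wire, then refill the TxQ from the software queue."""
        sent = self.tx_ring.popleft() if self.tx_ring else None
        while self.software_queue and len(self.tx_ring) < self.tx_ring_limit:
            self.tx_ring.append(self.software_queue.popleft())
        return sent


iface = Interface(tx_ring_limit=2)
for p in ["a", "b", "c", "d"]:
    iface.send(p)               # "a", "b" go to the TxQ; "c", "d" wait in software
print(iface.transmit_one())     # a
print(list(iface.tx_ring))      # ['b', 'c']
```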

The software queuing mechanism usually has a number of queues, one for each class of traffic. Packets are assigned to one of those queues upon arrival. If the queue is full, the packet is dropped (tail drop). If the packet is not dropped, it joins its assigned queue, which is usually a FIFO queue. Figure 4-2 shows a software queue that is composed of four queues for four classes of traffic. The scheduler dequeues packets from the different queues and dispatches them to the hardware queue according to the particular software queuing discipline that is deployed. Note that even after a packet is classified and assigned to one of the software queues, it could still be dropped if a technique such as weighted random early detection (WRED) is applied to that queue.
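The sketch below shows per-class queues with tail drop and a scheduler that dispatches packets toward the hardware queue. WRR is used only because it is one of the disciplines named above; the class names, queue limits, and weights are hypothetical, and real IOS schedulers are more involved.

```python
from collections import deque


class ClassBasedSoftwareQueue:
    """Per-class FIFO queues with tail drop, served by a weighted round-robin
    scheduler. Class names, limits, and weights are hypothetical."""

    def __init__(self, classes):
        # classes: {class_name: (queue_limit_in_packets, weight)}
        self.limits = {name: limit for name, (limit, _) in classes.items()}
        self.weights = {name: weight for name, (_, weight) in classes.items()}
        self.queues = {name: deque() for name in classes}
        self.dropped = []

    def enqueue(self, packet, traffic_class):
        q = self.queues[traffic_class]
        if len(q) >= self.limits[traffic_class]:
            self.dropped.append(packet)  # tail drop affects this class's queue only
            return False
        q.append(packet)                 # each class queue is itself FIFO
        return True

    def schedule_round(self):
        """One WRR round: take up to `weight` packets from each queue in turn."""
        dispatched = []
        for name, q in self.queues.items():
            for _ in range(self.weights[name]):
                if not q:
                    break
                dispatched.append(q.popleft())
        return dispatched  # these would be handed to the hardware queue


sq = ClassBasedSoftwareQueue(
    {"voice": (4, 3), "video": (4, 2), "data": (4, 1), "scavenger": (2, 1)}
)
for i in range(5):
    sq.enqueue(f"v{i}", "voice")  # the fifth voice packet is tail-dropped
for i in range(3):
    sq.enqueue(f"d{i}", "data")
print(sq.schedule_round())        # ['v0', 'v1', 'v2', 'd0']
```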

As Figure 4-2 illustrates, when the hardware queue is not congested, the packet does not go through the software queuing process. If the hardware queue is congested, the packet must be assigned to one of the software queues (should there be more than one) based on classification of the packet. If the queue to which the packet is assigned is full (in the case of tail-drop discipline) or its size is above a certain threshold (in the case of WRED), the packet might be dropped. If the packet is not dropped, it joins the queue to which it has been assigned. The packet might still be dropped if WRED is applied to its queue and it is (randomly) selected to be dropped. If the packet is not dropped, the scheduler is eventually going to dispatch it to the hardware queue. The hardware queue is always a FIFO queue.
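The WRED step in that path is a probabilistic drop decision based on the average queue depth. This sketch assumes the commonly described linear RED profile: no drops below a minimum threshold, a drop probability that rises linearly to 1/`mpd` (the mark probability denominator) at the maximum threshold, and dropping of every arrival above it. The function names, the threshold values, and the injectable `rng` parameter are hypothetical and are not IOS syntax.

```python
import random


def wred_drop_probability(avg_depth, min_th, max_th, mpd):
    """Drop probability for an arriving packet, given the average queue depth.
    mpd is the mark probability denominator: at max_th, 1 in mpd packets is dropped."""
    if avg_depth < min_th:
        return 0.0
    if avg_depth >= max_th:
        return 1.0  # above the maximum threshold every arrival is dropped
    return (avg_depth - min_th) / (max_th - min_th) / mpd


def update_average(avg, current_depth, weight_exp=9):
    """Exponentially weighted moving average of the queue depth (weight 2**-n)."""
    w = 2 ** -weight_exp
    return avg * (1 - w) + current_depth * w


def wred_admit(avg_depth, min_th, max_th, mpd, rng=random.random):
    """True if the packet joins its queue, False if WRED randomly drops it."""
    return rng() >= wred_drop_probability(avg_depth, min_th, max_th, mpd)


# Hypothetical thresholds: min 20, max 40 packets, mark probability denominator 10.
print(wred_drop_probability(30, 20, 40, 10))  # 0.05
```

Averaging the depth means a short burst does not immediately trigger drops, while a persistently long queue does.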

Figure 4-2 Router Queuing Components: Software and Hardware Components

Having both software and hardware queues offers certain benefits. Without a software queue, all packets would have to be processed according to the FIFO discipline of the hardware queue. Offering discriminatory and differentiated service to different packet classes would be almost impossible, and real-time applications would suffer. Manually increasing the hardware queue (FIFO) size has a similar effect, because more packets then wait in the FIFO hardware queue, where the software queuing discipline can no longer reorder them. Conversely, if the hardware queue is very small, packet forwarding and scheduling are almost entirely governed by the software queuing discipline; however, this has drawbacks, too. For example, if the hardware queue is so small that it can hold only one packet, then each time a packet is transmitted to the medium, a CPU interrupt is necessary to dispatch another packet from the software queue to the hardware queue. While that packet is being transferred from the software queue (based on its possibly complex discipline) to the hardware queue, the hardware queue is not transmitting bits to the medium, which wastes link capacity. Furthermore, dispatching one packet at a time from the software queue to the hardware queue raises CPU utilization unnecessarily.
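The cost of a very short hardware queue can be made concrete with a deliberately simplified model. This sketch assumes that the TxQ is refilled only after it drains completely, that each refill costs one interrupt, and that the link is idle for a fixed refill latency each time; the function name and all timing values are hypothetical.

```python
import math


def ring_tradeoff(packets, tx_ring_limit, serialization_s, refill_latency_s):
    """Toy model: returns (refill interrupts, link utilization) when the TxQ
    is refilled only after it drains and the link idles during each refill."""
    refills = math.ceil(packets / tx_ring_limit)
    busy = packets * serialization_s   # time spent actually sending bits
    idle = refills * refill_latency_s  # time the link waits for a refill
    return refills, busy / (busy + idle)


# Hypothetical: 1000 packets, 1 ms to serialize each, 0.1 ms per refill.
for ring in (1, 10):
    refills, util = ring_tradeoff(1000, ring, 0.001, 0.0001)
    print(ring, refills, round(util, 3))
```

In this model, growing the ring from 1 to 10 packets cuts refill interrupts tenfold and recovers most of the idle time; the price, FIFO delay behind a longer hardware queue, is not modeled here.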

Many factors influence the size of the hardware queue, such as the hardware platform, the software version, the Layer 2 media, and the particular software queuing applied to the interface. Generally speaking, faster interfaces have longer hardware queues than slower interfaces. Also, on some platforms, certain QoS mechanisms adjust the hardware queue size automatically. Cisco IOS Software effectively determines the hardware queue size based on the bandwidth configured on the interface, and this determination is usually adequate. However, if needed, you can set the size of the hardware queue by using the tx-ring-limit command in interface configuration mode.

Remember that a hardware queue that is too long imposes FIFO-style delay, and a hardware queue that is too short is inefficient and causes too many unnecessary CPU interrupts. To determine the size of the hardware (transmit) queue on serial interfaces, enter the show controllers serial command. The size of the transmit queue is reported by one of the tx_limited, tx_ring_limit, or tx_ring parameters in the output of the show controllers serial command. Subinterfaces and software interfaces such as tunnel and dialer interfaces do not have their own hardware (transmit) queue; the main interface's hardware queue serves them. Note that the terms tx_ring and TxQ are used interchangeably to describe the hardware queue.
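The FIFO delay that a long hardware queue imposes is easy to estimate: a packet that the scheduler just dispatched can wait behind a full TxQ for as long as it takes to serialize every packet ahead of it. This sketch assumes 1500-byte packets and ignores Layer 2 overhead; the ring sizes of 64 and 2 packets and the function name are hypothetical.

```python
def hw_queue_delay_ms(tx_ring_limit, packet_bytes, bandwidth_bps):
    """Worst-case wait (ms) for a packet placed behind a full FIFO hardware queue."""
    return tx_ring_limit * packet_bytes * 8 / bandwidth_bps * 1000


print(round(hw_queue_delay_ms(64, 1500, 1_544_000), 1))    # 497.4 (long TxQ on a T1)
print(round(hw_queue_delay_ms(64, 1500, 100_000_000), 2))  # 7.68  (same TxQ at 100 Mbps)
print(round(hw_queue_delay_ms(2, 1500, 1_544_000), 1))     # 15.5  (short TxQ on a T1)
```

The same ring size that is harmless on a fast link adds half a second of delay on a slow one, where no software priority scheme can help a voice packet already stuck behind it. This is why slower interfaces get shorter hardware queues.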