Image calculations, sometimes termed image math, refer to arithmetic or logic operations on a pixel-by-pixel basis. Image values can either be manipulated with the same constant value for all pixels or with the values of corresponding pixels in a second image. Any mathematical expression is possible, and some operations are very useful in practical applications. One example of addition and subtraction are CT images. CT image values can typically range from -1000 HU (e.g., air) to +3000 HU (e.g., contrast agents, metal objects). To improve compatibility, negative numbers are often avoided by adding an offset of 1024 (210) to the image values. Therefore, the image values of the stored image now range from +24 to +4095 (212 – 1), and the image values can be stored in an unsigned 12-bit integer field. Compatibility with image processing software that can only handle 8-bit values can be achieved by dropping the least significant 4 bits of the 12-bit integer value. This operation corresponds to a division by 24 = 16 and the resulting value range is now 0 to 255. Other image analysis software that can handle higher bit depths would read the CT image and subtract the offset of 1024 to restore the original values in Hounsfield units. This type of pixel manipulation can be described in its most general form by

where f describes the operation (i.e., addition, subtraction, multiplication, etc.) and C is a constant value. The function f is performed independently on all pixels of the image. Without explicitly defining the operation as image math, Equation (2.5) (histogram stretching) is a linear pixel-by-pixel operation that can serve as an example for the model in Equation (2.39). The function f may also be nonlinear. For example, gamma correction can be applied to enhance contrast in dark image regions. Frequently, output devices (e.g., video screens; even more so, printers) tend to reduce contrast in dark regions. Light output (or, in the case of printed paper, light reflectance) L can be modeled as the normalized image value raised to the power of a constant 7 such that L(x,y) = I(x,y)7, where I(x,y) is the image value normalized to the range 0 to 1. 7 typically lies between 1 and 2. The suitable correction is to raise the value of each pixel to the power of 1/7 before displaying or printing the image. The effect of this operation is shown in Figure 2.14. An even stronger contrast adjustment is to compute the logarithm of each pixel value and to rescale the results to fit the display range (usually, 0 to 255). A logarithmic display function is often chosen to visualize extreme image value ranges such as those obtained through the Fourier transform. In this case it must be ensured that image values of zero or negative values do not occur. To display the magnitude of the Fourier transform (no negative values), an offset of 1 can be added to the image and the function f in Equation (2.39) becomes log(I + 1). In cases where negative values exist, a suitable offset can be added, or negative values are clamped to 1 through thresholding.

The general equation to perform an operation on two images, I1 and I2, is

FIGURE 2.14 Gamma correction. A linear gradient with image values from 0 to 255 in steps of 4 (A) after contrast correction with 7 = 1.5 (B) and 7 = 2.0 (C).

Most commonly, the function f represents addition, subtraction, multiplication, or division. It is also assumed that I1 and I2 have the same size, although zero padding or tiling is possible in special cases. In addition, the same operation can be performed on an image stack for all stacked slices z:

Each of the four operations has typical applications in biomedical imaging. Image addition could be used for noise reduction. For example, N identical images can be acquired with a modality that has a strong noise component (such as magnetic resonance imaging or optical coherence tomography). By adding those N images and dividing the resulting value by N, the pixel-by-pixel average is computed, and additive Gaussian noise is reduced by a factor of VN". An example is given in Figure 2.15. Contrast is approximately 28 (in arbitrary OCT units), and the standard deviation is 5.6 (Figure 2.15A) and 1.46 (Figure 2.15B). The ratio of these two values can be used as a simplified metric of the signal/noise ratio (SNR) and results in SNR values of 5 and 20, respectively. This is an improvement in the SNR by a factor of 4, consistent with 20 averaged slices and V20 = 4.5. Note that further noise reduction is possible by removing shot noise (median filter) before averaging the slices. The combined operation (median filtering of each individual slice followed by averaging) yields an SNR of 31.

Image subtraction and division are often used to remove an inhomogeneous background. Several examples are provided in Section 5.3. Subtraction needs to be used when the background is caused by an inhomogeneous bias (e.g., a bias field in magnetic resonance images), and division is a suitable operation for multiplicative inhomogeneities, such as inhomogeneous illumination. Consider, for example, visible-light illumination L(x,y) that is brighter in the center than toward the edges of the image. A photograph is taken from a reflective object with a reflectance R(x,y). The object is described by the reflectance, but the photograph contains the multiplicative image I(x,y) = R(x,y)L(x,y). If L(x,y) is known (e.g., by photographing a homogeneously gray surface under the same illumination), R(x,y) can be recovered from I(x,y) by dividing I(x,y) by L(x,y). Care must be taken when the image is divided by a second image with a large range of image values. First, a division-by-zero value needs to be avoided. This can be achieved by adding an offset to the denominator image.

FIGURE 2.15 Example of image addition. Image A is an optical coherence tomography scan of an apple to a depth of approximately 0.5 mm. The white scale bar indicates 200 ^m. The thin white layer is the apple’s skin. Underneath is the actual plant tissue with a dark intermediate layer representing the transition of the skin into the tissue. The OCT slice exhibits considerable noise. Both Gaussian noise and shot noise (black pixels) exist. Image B was created by acquiring 20 successive slices of the same region and adding them. After addition, the values were rescaled to fit the range 0 to 255. Noise is markedly reduced and details become better visible. Further noise reduction could be achieved by applying a median filter operation on each individual slice before addition.

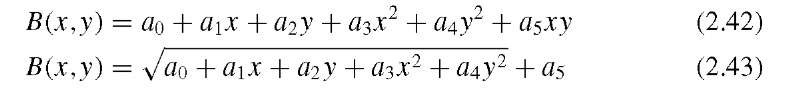

Similar considerations apply when the image in the denominator contains small values. One example for the division of two images is deconvolution in the frequency domain (see Section 3.2.3). Image subtraction is a popular method used to remove an inhomogeneous background when the illumination (or background) function is unknown. A semianalytical method for background removal is to model the background image B(x,y) as a biparabolic plane or the section of a sphere, respectively:

The unknown parameters a0 through a5 can be found by fitting the background function to the image. For this purpose, the image is divided into equal square tiles. When the features protrude as brighter objects from a dark background and the tile size is larger than the feature size, an assumption can be made that the darkest pixel in each tile is a background pixel. In each tile i, the darkest pixel B{ (x;,y, ) can be found, and the unknown parameters a0 through a5 are determined by nonlinear least-squares fitting. Finally, the background image B(x,y) is subtracted from the image pixel by pixel. Alternatively, the background function can be found by extremely strong blurring of the image (e.g., by convolution with a large Gaussian kernel or by application of a Fourier lowpass filter). This background function can then be subtracted directly from the image. This operation was introduced in Section 2.3 as unsharp masking.

Subtraction and division are used when the normalized difference between two images I1 and I2 needs to be computed. The normalized difference image with pixel values in percent is given by

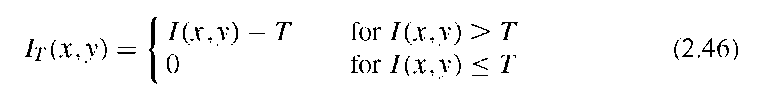

Multiplication of images is a useful tool in masking. The thresholding operation as defined, for example, in Equation (2.29) provides a binary mask where feature pixels are represented by the value 1 and background pixels by the value 0. When the original image is multiplied with the mask pixel by pixel, all background pixels are assigned the value 0, while the feature pixels retain their original value. This combined operation, known as hard thresholding, can be described as

In both hard and soft thresholded images, further analysis can take place while zero-valued pixels are ignored. If image measurements (e.g., pixel count, image value average, histogram) are limited to nonzero values, the measurements reflect the masked features only. Another example where image multiplication plays a key role is image filtering in the frequency domain (Section 3.2). A filter function is the equivalent of an image in frequency space, described by its transfer function H(u,v). The coordinates u and v are spatial frequencies and are treated in the same manner as the spatial coordinates x and y. The main difference is the widely used convention that the origin of the (x,y) coordinate system coincides with the top left corner of an image, while the origin of the (u,v) coordinate system coincides with the center of the image. The Fourier transform of an image has low-frequency components (intensity gradients that continue over the entire image) near the origin, and high-frequency components (e.g., abrupt changes, texture, noise) toward higher values of lul and Ivl closer to the edge of the frequency-domain image. A highpass filter is a filter that attenuates values near the center of the (u, v) coordinate system, with values of H(u,v) < 1 for small lul and Ivl and values of H(u,v) « 1 for large lul and Ivl. Conversely, a lowpass filter has values of H(u,v) « 1 for small lul and lvl and values of H(u,v) < 1 for large lul and lvl. The filter consists of three steps: computation of the Fourier transform, pixel-by-pixel multiplication of the transformed image F(u,v) with the filter H(u,v), and the inverse Fourier transform of the result.

For all image math operations, the image value range needs to be observed. Image math operations may lead to negative values, to values that are larger than the range of the original image (e.g., multiplication or addition of many images), or to very small values [e.g., division, most prominently in Equation (2.44)]. In image processing software that holds a limited value range (e.g., 8-bit images), the range may be exceeded or rounding errors may cause loss of detail. In many cases the image values are either rescaled after the operation to fit the full range or are clamped (e.g., pixels that exceed 255 in an 8-bit image are kept at 255). If this behavior is not acceptable, the result of the operation needs to be stored in a different format, for example, as a floating-point value for each pixel.

The additional subtraction of the threshold value T (to be precise, the pixel-by-pixel subtraction of the mask multiplied by T) leads to an equation for soft thresholding: