Abstract

Routing loops have recently re-emerged as an important issue in new carrier class Ethernet technologies such as IEEE 802.1aq. While they can waste resources through full link utilization, routing loops were originally mitigated in IP networks by TTL expiration, resulting in wasted resources before packets were dropped after a few seconds. In this paper a new mitigation approach based upon Early Packet Dropping (EPD) is developed, in which a router can drop looping packets earlier than expected if full link utilization will occur before TTL expiration. While the EPD algorithm deals only with loops between two nodes, a proposed solution known as TTL Relaxation is also combined with this loop mitigation mechanism in order to minimize the use of EPD and minimize the performance degradation introduced by loops between more than two nodes.

Keywords: routing loops, network performance.

Introduction

Routing loops are a potential issue in data networks which employ dynamic routing protocols. Recently, this has re-emerged as an important consideration in new carrier Ethernet technologies such as IEEE 802.1aq where a link-state routing protocol determines the shortest path between Ethernet bridges. Routing loops which arise because of unsynchronized routing databases in different nodes are known as transient loops, because they exist for only a specific period before the network converges again. Such routing loops have always existed within Interior Gateway Protocol (IGP) networks, while loops occurring specifically between two nodes comprised 90% of the total in one study [1]. The Time-to-Live (TTL) field within an IP packet implements the basic loop mitigation process. It ensures that packets won’t loop forever, as the TTL will eventually expire, causing the packet to be dropped. Packets may still circulate for a long time before expiring, during which the link is fully utilized and router resources are wasted, delaying other traffic passing through the affected links.

This paper introduces a new loop mitigation mechanism based upon Early Packet Dropping (EPD) where packets are dropped only when a loop traversing two nodes is expected to utilize the link fully before the network converges and before the circulating packets get dropped as a result of TTL expiration. The overall proposed solution is divided into two parts. The first part of the mitigation mechanism is known as TTL Relaxation which is a slight modification to the "exact hop count" approach proposed in [2]. The basic idea is that once an ingress edge router receives an IP packet from the clients (i.e. from the Ethernet port), the TTL of the packet is relaxed to a new TTL which is two hops greater than the diameter of the network (the longest shortest path in terms of hop counts) from the perspective of the ingress edge router. Two more hops are added so that traffic can tolerate rerouting. In the event of a loop, packets with the new TTL will be dropped sooner, relieving the problem and reducing bandwidth consumption. Conversely, when an egress edge router forwards an IP packet to a client, it sets the TTL to a reasonable value (64 in this paper).

The remainder of this paper is organized as follows. Section 2 discuses the related studies. Section 3 introduces the methodology while section 4 provides a full discussion of the EPD algorithm. Section 5 and section 6 provide the results and the conclusions respectively.

Related Studies

While minimizing the convergence time by altering the default routing protocol timers has been proposed to minimize the duration of these routing loops [3], [4], other approaches aim to avoid such loops completely [5], [6], [7] by developing routing algorithms to ensure loop-free convergence. Another loop prevention approach for IGP networks performed Forwarding Information Base (FIB) updates in a specific order so that a node does not update its FIB until all the neighbours that use this node to reach their destinations through the failed path have updated their FIBs [8], [9], [10]. IP fast reroute (IPFRR) solutions which include the installation of backup routes considered loop-free convergence [11]. Another technique was proposed where loops are mitigated, but not avoided, by maintaining an interface-specific forwarding table [12], [13]; packets are forwarded according to both their destination and the incoming interface, so that looped packets can be identified and dropped at the FIB because they arrive from an unexpected interface. Other studies aimed to avoid routing loops only in cases where network changes are planned [14], [15]. A more general overview of the routing loop problem and existing techniques to mitigate or avoid such loops is discussed in [16].

Methodology

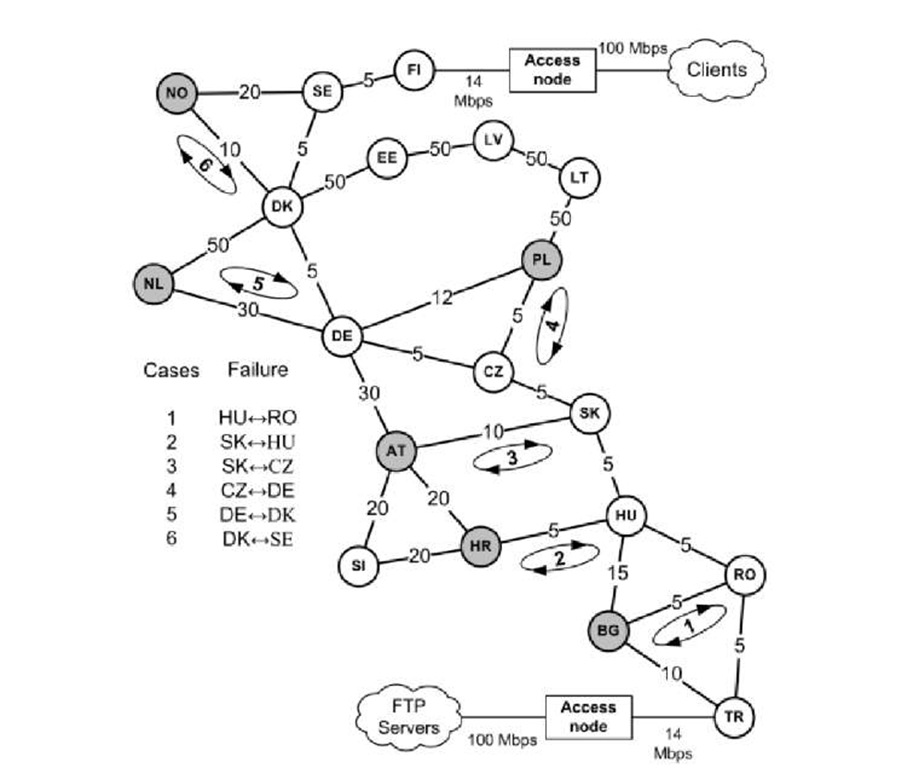

Part of the GEANT2 network (Fig. 1) is simulated with the OPNET simulator as a single-area Open Shortest Path First (OSPF [17]) network. In Fig. 1, the numbers on the links represent the routing metrics. Full segment File Transfer Protocol (FTP) traffic is sent from the servers to the clients (150 flows), using Transmission Control Protocol (TCP). The servers and clients are connected to the backbone through access nodes. Traffic from the servers follows the shortest path through nodes TR, RO, HU, SK, CZ, DE, DK, SE, and FI. Backbone optical links are configured as OC1 (50 Mbps) with utilization maintained at below 30%. The transmission buffer capacity (in packets) in all routers is equal to Backbone Link Capacity x TCP Round Trip Time (RTT) with an estimated RTT of 250 ms, hence router buffers have a capacity of 1020 packets. IP packets of 1533 bytes are assumed throughout the paper (including headers for IP, TCP, Ethernet and PPP).

Fig. 1. Simulated network – part of GEANT2

The looped arrows in Fig. 1 identify the six links containing loops. Such loops involve TCP traffic and arise because of link failure followed by delayed IGP convergence. Every simulation run involves only one link failure, creating of one of the loops shown in Fig 1. The shaded nodes delay shortest path calculation after the corresponding link failure, thus creating the loop. Every case was simulated with loop durations varying from 200 ms to 1 second in steps of 200 ms. Voice over IP (VoIP) traffic passed though each loop; this was carried by User Datagram Protocol (UDP) and consumed 10% of the link capacity. However the UDP traffic was not caught in the loop because it was not going through the failed link originally – only TCP traffic is caught in the loop. Since in every case the loop takes place at a different "hop count distance" from the source, TCP traffic enters it with a different Relaxed TTL (RTTL) as shown in Table 1. This is because node TR is rewriting the TTL of the source traffic with RTTL of 11 which gets subtracted by one every time it passes a backbone node. During the loop, the utilization of the corresponding link and the performance of the VoIP traffic passing through it was monitored and analyzed with and without the EPD mechanism.

Table 1. Rttl of the traffic entering the loop for each case in Fig. 1

|

Case |

1 |

2 |

3 |

4 |

5 |

6 |

|

Rttl entering the loop |

10 |

9 |

8 |

7 |

6 |

5 |

Early Packet Dropping Loop Mitigation

The EPD algorithm has been developed to mitigate loops arising between two nodes due to link failure, node failure and increase in link metric. However due to lack of space, this paper introduces the part of the algorithm which mitigates loops that arise between the node that detects the link failure and performs the mitigation process (Master), and the node to which the traffic was rerouted (Neighbour). As the node detecting the failure is the first to converge, it’s most likely that a routing loop will form with the Neighbour node which includes the failed link in its Shortest Path Tree and to which traffic is rerouted, because the Neighbour node takes longer to converge. Before describing the details of the algorithm, the following timers and parameters are considered:

• Link Failure Detection Delay (Dlfd): The time between detecting the link failure at hardware level (known in advance) and notifying the routing protocol stack (configurable timer).

• SPF Delay (DspF): The default delay between receiving the Link-State Advertisement (LSA) and starting the Shortest Path First (SPF) calculation (configurable timer).

• Neighbour Dummy SPT Calculation Delay![]()

Delay due to Shortest Path Tree (SPT) calculation and routing table update performed at the Neighbour node, assuming the link to the Master node fails.

• Master Dummy SPT Calculation Delay![]()

Delay due to SPT calculation and routing table update performed at the Master node assuming the link to the Neighbour node fails. • FIB Delay (DFIB): The time required for any node to upload the original routing table to the FIB.

• Neighbour Total Convergence Delay

• TTLAvg: Average value of the TTL of packets transmitted by each interface on the Master node.

• S: The throughput in packets per second (pps) of every interface on the Master node.

All delays are measured in seconds, while the dummy SPT calculations and routing updates do not take part in forwarding decisions – their only purpose in the model is to measure the time consumed.

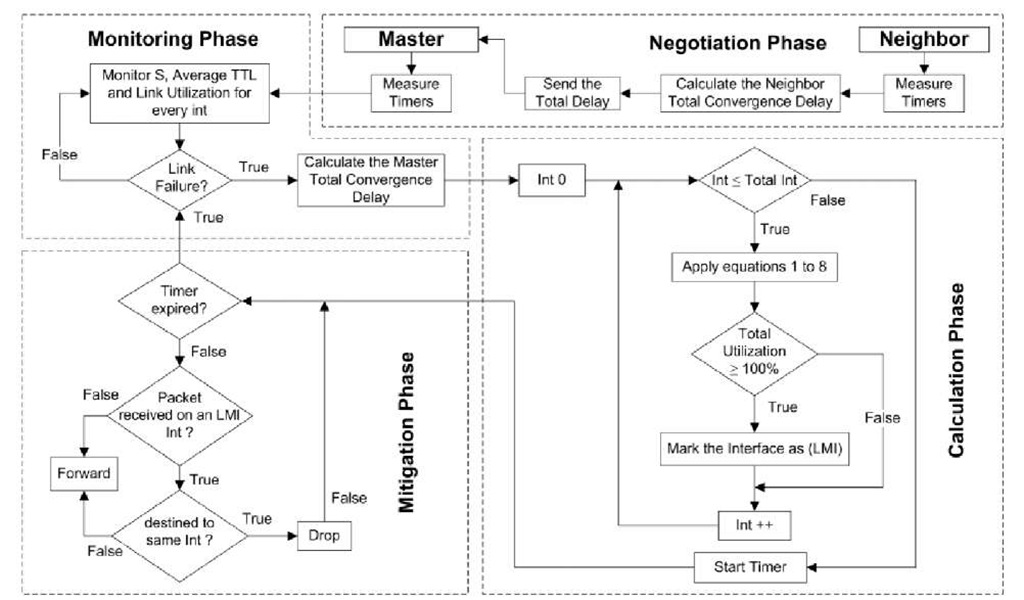

As shown in the algorithm in Fig 2, in the negotiation phase each Neighbour node sends K^ff6^ to a corresponding Master node; at this time, each node will take on the role of both Master and Neighbour at the same time. This will provide the Master node with the approximate maximum time required by any of its Neighbours to converge when each receives an update (LSA) from the Master node informing it of link failure. During the monitoring phase, the Master node registers the Link Capacity in pps (C), while monitoring S, TTLAvg and Link Utilization (ULink) for every interface (Neighbour).

Fig. 2. The Early Packet Dropping Loop Mitigation Algorithm. Int denotes Interface

If a failure takes place forming a loop that traps TCP traffic, the TCP source sends packets up to its Congestion Window (CWND) and waits until the Retransmission Timeout (RTO) has expired because no Acknowledgments (ACKs) are received from the destination. The utilization of the link increases while these packets bounce between both nodes, until either the routing protocol converges or the TTL expires. The sum of the CWND of all TCP sources measured in packets (WTotaL) will be equal to the capacity of the bottleneck buffer in the access node, which is 1020 packets. If such traffic bounces over an OC1 link more than 3 times, the link will be fully utilized. If the Master node knows WTotal in advance, it can estimate the amount of link capacity consumed by the rerouted and looped traffic. As the nodes cannot know the exact WTotal in advance, no more than S packets per second (throughput) will enter the loop if the interface on which S was registered has failed and S packets per second were rerouted. This is why S needs to be registered for every interface. We will denote TTLuA™eachable and sUnreachable as TTLAvg and S respectively of the Master node’s interface at which the link was failed. Once the Master node detects a link failure, it registers

Convergence Delay

belongs to the failed interface).

In the calculation phase, the Master node performs a set of calculations (equations 1-8) for every active interface (Neighbour). All other parameters except

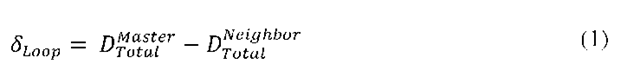

and the Neighbour belong to the interface on which the calculation is performed. The difference in the convergence time between the Master ![]() and the Neighbour

and the Neighbour![]() indicates the maximum loop duration in seconds

indicates the maximum loop duration in seconds

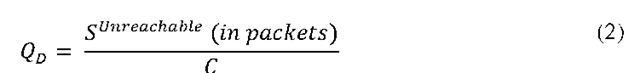

Assuming that all the sUnreachable packets will be rerouted (alternative path exists) to a single interface, the maximum Queuing Delay in seconds (QD) at that interface is equal to:

As justified later, the first second of the loop is being considered, which is why ^unreachable is effectively in units of packets, not packets per second. We define LTT as the Loop Trip Time, TD as the transmission delay and PD as the propagation delay (all in seconds). The Master node can estimate the duration of a loop trip with any of its Neighbours (Master^ Neighbour^Master) by calculating:

In the backbone network where sUnreachable is large and the transmission speed is high, Td and PD can be neglected so that LTT « QD. LT is defined as the Loop Traversals which indicates the maximum total number of times the packet travels round the loop before being dropped.

is the number of times packets travel round the loop while considering the loop duration that the Master calculated

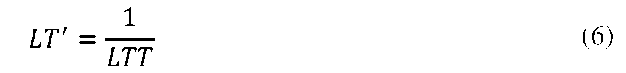

LT’ is the number of Loop Traversals during the first second of the loop. The rationale for considering the first second is discussed later.

Hence, the Master node is able to calculate the utilization that will be introduced by a loop (ULoop) with any Neighbour and the total utilization during the loop (UTotal):

Any interface with UTotal > 1 will be marked as a Loop Mitigation Interface (LMI). A timer equal to the largest SLoop of any LMI interface is invoked, and packets received by and destined to the same LMI interface are dropped (mitigation phase). In equation (7), min (LT, LT’, LTFail) is considered for the following reason. If LT is the minimum value then all the packets will be dropped during the loop (TTL expiration). If LTgLgov is the minimum then the loop will end before the TTL expires. If LT’ is the minimum then Uioop will reach 100% (assuming that sUnreachable is large) except when LTT is large. While QD is known in advance and TD is normally very small, the propagation delay PD should be large enough to make the packets take longer to traverse between the nodes, hence maximizing LTT and minimizing ULoop. While PD between any two nodes is not expected to be large in current optical backbone networks, traffic bouncing inside the loop can easily utilize the link fully in the one-second period.

The Algorithm’s Complexity and Applicability

Although in the EPD algorithm, every node runs the SPT algorithm once for every link connected to it, introducing a complexity of 0(N(E + VlogV)) where N is the average node degree, such computational complexity only arises after the network is fully converged and hence does not affect the convergence time, and the nodes can perform the SPT calculations while traffic is being forwarded normally. The complexity of the calculations performed between a link failure or an LSA being received, and convergence taking place, is only 0(N). Because these calculations do not involve any complex computational operations like calculating a tree or building a complex data structure, we expect that they will consume negligible processing time. Measuring through simulation the time taken for these calculations on a router with 10 interfaces revealed that it takes less than 1 millisecond.

The requirements for implementing the EPD algorithm in today’s routers are divided into two categories, namely protocol modification and router modification. The protocol modifications do not require changing the way shortest paths are calculated but it requires the link-state protocol to register and keep track of different timers and be able to send these timers to the router’s neighbours as an LSA/LSP. Router modification must permit monitoring of TTLAvg at every interface, and performance of the calculations presented earlier.

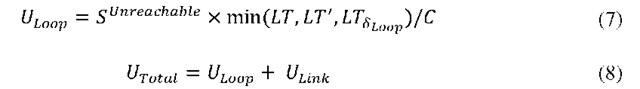

Once the router marks an interface as an LMI, there should be a way for the router to determine whether the packets have the same incoming and outgoing interfaces (packets arrive from a next hop node) before dropping them. This requires the router to maintain interface-specific forwarding tables instead of interface-independent forwarding tables (the same FIB for all interfaces). These modifications were proposed in [12]. The results from [12] showed no extra convergence delay against the convergence delay of a traditional link-state protocol. Table 2 shows the interface-independent forwarding table while Table 3 shows the interface-specific forwarding table for node RO in the network of Fig 3. These forwarding tables are produced after failure of link RO^HU. Table 3 is produced after node RO carried all the calculations in equations 1 to 8, in order to determine whether the loop utilization (ULoop) on any interface is equal to or greater than 1 (100% utilization). The entries in Table 2 are all marked with the next hop while the entries in Table 3 are marked with the next hop, ‘-’, or next hop referenced with X. Entries with ‘-’ will never be used, for example, node RO will never receive traffic from node BG destined to node BG itself. Entries marked with the next hop and referenced with X indicate a possibility of a loop, for example, if node RO received traffic from node BG with destination HU and sent it back again to node BG, a loop will be triggered as node BG is not converged yet and still believes that node RO is the next hop to destination HU.

Fig. 3. Example network illustrating the interface-specific forwarding table to be implemented with the EPD mechanism

Table 2. Interface-independent forwarding table at node RO. Int denotes Interface.

|

|

|

Destination |

|

|

|

|

|

HU |

BG |

TR |

|

BG ^ RO |

BG |

BG |

TR |

|

TR ^ RO |

BG |

BG |

TR |

Table 3. Interface-specific forwarding table at node RO when implementing the EPD algorithm. Int denotes Interface.

|

|

|

Destination |

|

|

|

|

|

HU |

BG |

TR |

|

tn |

BG ^ RO |

BG (X) |

- |

TR |

|

nn |

TR ^ RO |

BG |

BG |

- |

If any of the interfaces that are referenced with an ‘X’ for any of the destinations in the interface-specific forwarding table had ULoop equal to or greater than 1, that interface will be referenced with an LMI (instead of ‘X’) for those destinations. Packets that are now received by such an interface, and destined to the same interface, will be dropped. The difference between our approach and the approach introduced in [12] is that the forwarding table may contain loops but these loops are not harmful. Once the loop is predicted as being harmful, the interfaces which were referenced as X will be referenced as LMI. The establishment of this interface-specific FIB will only require the original SPT which was computed with Dijkastra’s algorithm.