Abstract

Moving object detection techniques have been studied extensively for purposes such as video content analysis and remote surveillance. Video surveillance systems rely on the ability to detect moving objects in the video stream, which is a key information-extraction step in a wide range of computer vision applications. There are many ways to track a moving object. Most of them use frame differences to analyze the moving object and obtain its boundary. Such approaches can be resource hungry: they process the entire frame, which requires considerable memory and processing time. This paper proposes a new method for moving object detection from video sequences that performs frame-boundary tracking and active-window processing, reducing both computation time and memory requirements. A stationary camera with a static background is assumed.

Keywords: Moving Object Detection, Frame Differencing, Boundary Tracking, Bounding Box, Active Window.

Introduction

Moving object detection from video sequences is an important field of research, as security has become a prime concern for organizations and individuals alike. Surveillance systems have long been used to monitor security-sensitive areas such as banks, department stores, highways, public places and borders. In the commercial sector, surveillance systems are also used to ensure the safety and security of employees, visitors, premises and assets. Most such systems rely on some technique for moving object detection.

Detecting and tracking moving objects are widely used as low-level tasks in computer vision applications such as video surveillance, robotics, authentication systems, gesture-based user interfaces and image compression. An efficient software implementation of these low-level tasks is especially important because it influences the performance of every higher-level stage built on top of them.

Motion detection is a well-studied problem in computer vision. There are two types of approaches: the region-based approach and the boundary-based approach. In the case of motion detection without using any models, the most popular region-based approaches are background subtraction and optical flow.

Background subtraction detects moving objects by subtracting an estimated background model from each image. This method is sensitive to illumination changes and to small movements in the background, e.g. tree leaves. Many techniques have been proposed to overcome this problem; the mixture of Gaussians is a popular and promising technique for modeling illumination changes and small background movements. Many algorithms have been proposed for object detection in video surveillance applications. They rely on different assumptions, e.g. statistical models of the background, minimization of Gaussian differences, minimum and maximum values, adaptability, or a combination of frame differences and statistical background models [1]. A local-neighborhood-similarity-based approach [2] and a contour-based technique [3] for moving object detection have also been reported in the literature.
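As an illustration of basic background subtraction (not the method proposed in this paper), the minimal sketch below marks a pixel as foreground when its absolute difference from the background exceeds a fixed threshold. It assumes grayscale frames stored as Python lists of rows of integers in 0-255; the function name `background_subtract` and the default threshold of 30 are hypothetical choices.

```python
# Minimal sketch of basic background subtraction (illustrative only).
# Assumption: frames are grayscale images stored as lists of rows of ints (0-255).
# The default threshold of 30 is a hypothetical value, not taken from the paper.


def background_subtract(frame, background, threshold=30):
    """Return a binary mask: 1 where |frame - background| > threshold, else 0."""
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


if __name__ == "__main__":
    background = [[10] * 6 for _ in range(4)]
    frame = [row[:] for row in background]
    frame[1][2] = frame[1][3] = 200  # a small bright "object"
    for row in background_subtract(frame, background):
        print(row)
```

The fixed global threshold is what makes this simple scheme sensitive to illumination changes: a uniform brightening of the scene by more than the threshold marks every pixel as foreground.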

However, a common problem of background subtraction is that estimating the background models takes a long time. Optical flow is also affected by illumination changes, since its approximate constraint equation ignores temporal illumination changes. This paper presents a novel approach for detecting moving objects in video sequences. Compared with the conventional background subtraction approach, it is computationally more efficient and requires less memory.
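The illumination problem of optical flow can be seen from the brightness-constancy assumption behind the classical optical flow constraint equation (shown here in its standard textbook form, not taken from this paper). With $I(x, y, t)$ the image intensity and $(u, v)$ the displacement of a pixel between consecutive frames, linearizing with a first-order Taylor expansion gives:

$$
I(x + u,\, y + v,\, t + 1) = I(x, y, t)
\quad\Longrightarrow\quad
I_x u + I_y v + I_t = 0
$$

Here $I_x$, $I_y$ and $I_t$ are the partial derivatives of $I$. A temporal illumination change produces a nonzero $I_t$ even where nothing moves, and the constraint can only account for it as spurious motion $(u, v)$.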

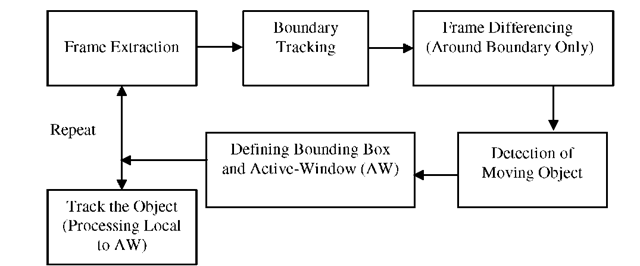

Fig. 1. Major Functional Steps

Methodology

The proposed method is a low-complexity solution to moving object detection. It improves on and extends the basic background subtraction method [1, 4]. The authors assume a stationary video camera. Since the camera does not move, the background it captures is fixed with respect to the camera frame.

The proposed method is a two-part solution (boundary tracking followed by active-window processing) for detecting moving objects in the assumed scenario. It detects moving objects that enter the frame by crossing the frame boundary from any direction. This is the case in many real-life situations for most surveillance cameras. However, a moving object may also pop up in the middle of a frame, without crossing the frame boundary, when it first appears in the video. The proposed method has to be customized to handle this case.

The major steps are: Frame Extraction, Boundary Tracking, Frame Differencing (around the boundary only), Detection of Moving Object, Defining Bounding Box and Active-Window (AW), and Tracking the Object (processing local to the AW). All the steps are then repeated around the frame boundary, and also inside the active-window whenever a moving object has been detected inside the frame. A block diagram of the major functional steps is given in Fig. 1. Each step is detailed in the following subsections.

Frame Extraction

This is the first step, in which a frame is extracted from the video sequence. It is assumed that the camera is stationary, that the background does not change, and that objects enter the frame by crossing the frame boundary. The conventional background subtraction method needs information about the entire frame to apply frame differencing and locate the moving object. In the proposed method, the complete frame is needed only for the ideal background; subsequent processing is done around the frame boundary or inside the active-window, as explained in the following sections.

Boundary Tracking

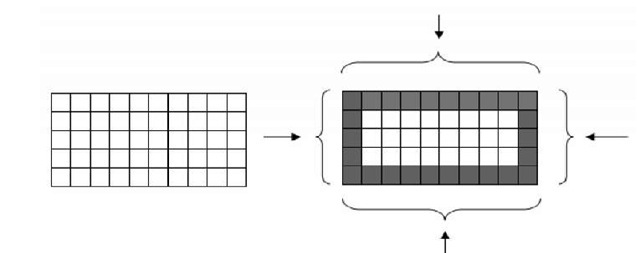

The frame is divided into small grid cells, as depicted in Fig. 2. The figure also highlights a band around the frame boundary on all four sides. This is the portion of the frame that is tracked to detect the initial movements of an object entering the camera frame across its boundary. The whole frame need not be considered for frame differencing, which significantly reduces computation time. Moreover, since frame differencing is performed on a sub-image of the actual frame, less memory is needed to store the sub-images.

Fig. 2. Boundary Tracking
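To make the saving from boundary tracking concrete, the sketch below counts the pixels in a boundary band of a given width and enumerates their coordinates. It is a minimal illustration under the assumption that the band has the same width on all four sides; the band width is not fixed in the text, so the value 15 and the function names `boundary_band_pixels` and `boundary_band_coords` are hypothetical.

```python
# Hypothetical sketch: size of the boundary band tracked in Step 1.
# Assumption: the band is `band` pixels wide on all four sides of an
# H x W frame; the paper does not fix this width, so 15 below is illustrative.


def boundary_band_pixels(height, width, band):
    """Number of pixels lying within `band` pixels of any frame edge."""
    if 2 * band >= height or 2 * band >= width:
        return height * width  # the band covers the whole frame
    inner = (height - 2 * band) * (width - 2 * band)
    return height * width - inner


def boundary_band_coords(height, width, band):
    """Yield the (row, col) coordinates of every pixel in the boundary band."""
    for r in range(height):
        for c in range(width):
            if r < band or r >= height - band or c < band or c >= width - band:
                yield r, c


if __name__ == "__main__":
    h, w, band = 480, 640, 15
    n = boundary_band_pixels(h, w, band)
    print(f"{n} of {h * w} pixels ({100 * n / (h * w):.2f}%)")
```

For a 480×640 frame and an assumed 15-pixel band, the band holds 32,700 of the 307,200 pixels (about 10.6%), which is the only portion differenced while no object is in view.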

Frame Differencing

Frame differencing is applied to detect the existence and position of a moving object. An ideal background image (the ground image) is used as the reference. The shaded portion in Fig. 2 shows the boundary region of the frame. Each extracted sub-image (the boundary portion of an extracted frame) is subtracted from the corresponding portion of the ground image to determine whether an object is present near the boundary of the camera frame.
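The boundary-only differencing described above can be sketched as follows: the four boundary strips of the current frame are compared with the same strips of the ground image, and the sides showing enough changed pixels are reported. This is a minimal sketch assuming grayscale frames as lists of rows of integers; `band`, `threshold` and the noise guard `min_pixels` are illustrative parameters, and the function names are hypothetical.

```python
# Hypothetical sketch of boundary-only differencing against the ground image.
# Assumptions: grayscale frames as lists of rows of ints; `band`, `threshold`
# and `min_pixels` (a simple noise guard) are illustrative parameters.

SIDES = ("top", "bottom", "left", "right")


def boundary_strips(img, band):
    """Return the four boundary sub-images of `img`, keyed by side."""
    return {
        "top": img[:band],
        "bottom": img[len(img) - band:],
        "left": [row[:band] for row in img],
        "right": [row[len(row) - band:] for row in img],
    }


def changed_sides(frame, ground, band=2, threshold=30, min_pixels=1):
    """List the sides whose strip differs from the ground image in enough pixels."""
    f_strips = boundary_strips(frame, band)
    g_strips = boundary_strips(ground, band)
    result = []
    for side in SIDES:
        changed = sum(
            1
            for f_row, g_row in zip(f_strips[side], g_strips[side])
            for p, q in zip(f_row, g_row)
            if abs(p - q) > threshold
        )
        if changed >= min_pixels:
            result.append(side)
    return result


if __name__ == "__main__":
    ground = [[0] * 8 for _ in range(6)]
    frame = [row[:] for row in ground]
    frame[3][0] = 255  # object entering from the left edge
    print(changed_sides(frame, ground))
```

Corner pixels belong to two strips, so an object entering at a corner is reported on both adjacent sides.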

Detection of Moving Object

The initial position of any moving object can be detected as explained in Section 2.3 (Frame Differencing). Once an object is found to be entering the camera frame across the frame boundary, it can be tracked to obtain its subsequent positions inside the frame. For this purpose, the sub-image of the previous frame is subtracted from that of the current frame. The difference indicates changes in pixel intensities where there is motion. This processing is performed on sub-images obtained by defining an active-window around the object, as explained in the next section.
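Subtracting the previous frame from the current one inside a sub-image can be sketched as below. It is a minimal illustration assuming grayscale frames as lists of rows of integers and a window given as `(top, left, bottom, right)` with bottom and right exclusive; the function name `window_difference` and the threshold of 30 are hypothetical.

```python
# Hypothetical sketch: difference the previous and current frames only inside
# a rectangular window. Assumptions: grayscale frames as lists of rows of ints;
# windows are (top, left, bottom, right) with bottom/right exclusive; the
# threshold of 30 is illustrative.


def window_difference(prev, curr, window, threshold=30):
    """Binary motion mask (window-sized) of pixels that changed between frames."""
    top, left, bottom, right = window
    return [
        [1 if abs(curr[r][c] - prev[r][c]) > threshold else 0 for c in range(left, right)]
        for r in range(top, bottom)
    ]


if __name__ == "__main__":
    prev = [[0] * 5 for _ in range(5)]
    curr = [row[:] for row in prev]
    curr[2][3] = 100  # motion inside the window
    curr[0][0] = 255  # change outside the window: ignored
    for row in window_difference(prev, curr, (1, 1, 4, 4)):
        print(row)
```

Only `(bottom - top) * (right - left)` pixels are touched per frame, which is where the time and memory saving comes from.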

Defining Bounding Box and Active Window

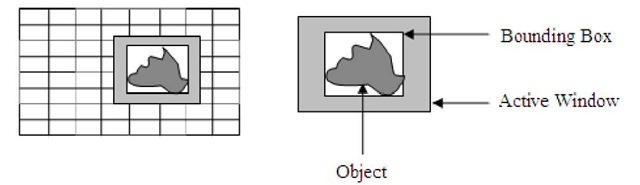

When a moving object has fully entered the camera frame, a bounding box is defined around it. The bounding box is the smallest rectangle that encloses the object. The concept is depicted in Fig. 3.

The active-window is a second rectangle around the bounding box. It is taken slightly larger than the bounding box so that all subsequent processing can be concentrated within it: the object's movement between consecutive frames is expected to fall within the lightly shaded margin between the bounding box and the active-window. Therefore, if frame differencing is performed only inside the active-window, the motion of the object can be tracked in all subsequent video frames. This margin may be 10 to 15 pixels wide.

Fig. 3. The object, its bounding box and its active window inside the frame
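Finding the bounding box from a binary difference mask amounts to locating the first and last rows and columns that contain changed pixels. The sketch below is a minimal version assuming a non-ragged mask stored as lists of rows of 0/1 values and boxes written as `(top, left, bottom, right)` with bottom and right exclusive; the function name `bounding_box` is hypothetical.

```python
# Hypothetical sketch: tightest axis-aligned box around the nonzero pixels of a
# binary mask. Boxes are (top, left, bottom, right) with bottom/right exclusive.


def bounding_box(mask):
    """Return the bounding box of the 1-pixels in `mask`, or None if there are none."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return rows[0], cols[0], rows[-1] + 1, cols[-1] + 1


if __name__ == "__main__":
    mask = [[0] * 6 for _ in range(5)]
    mask[1][2] = mask[3][4] = 1
    print(bounding_box(mask))
```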

To track the object as it moves around the frame, frame differencing is applied over successive video frames, with all processing confined to the active window.
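A simple way to derive the active window is to grow the bounding box by a fixed margin on every side and clip it to the frame. The sketch below assumes that reading of the 10-15 pixel shaded band, uses 15 as an example value, and introduces the hypothetical helpers `active_window` and `window_area`.

```python
# Hypothetical sketch: active window = bounding box grown by a fixed margin on
# every side, clipped to the frame. The margin corresponds to the 10-15 pixel
# shaded band described above; 15 is used here as an example value.


def active_window(bbox, margin, height, width):
    """Expand (top, left, bottom, right) by `margin`, staying inside the frame."""
    top, left, bottom, right = bbox
    return (
        max(0, top - margin),
        max(0, left - margin),
        min(height, bottom + margin),
        min(width, right + margin),
    )


def window_area(window):
    """Number of pixels inside a (top, left, bottom, right) window."""
    top, left, bottom, right = window
    return (bottom - top) * (right - left)


if __name__ == "__main__":
    aw = active_window((100, 200, 300, 320), margin=15, height=480, width=640)
    print(aw, window_area(aw))
```

Clipping matters near the frame edges, where an unclipped window would index pixels outside the image.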

The Proposed Methodology for Moving Object Detection

The proposed algorithm for moving object detection involves several independent but related sub-tasks. The following subsections explain its components; the complete algorithm is then given in Section 3.4.

Modeling the Background

A common approach to moving object detection is background subtraction, which identifies moving objects as the portions of a video frame that differ significantly from a background model. Modeling the background is therefore an important task. The model must be robust against changes in illumination. It should avoid detecting non-stationary changes in the background, such as moving leaves, rain, snow, shadows cast by moving objects, and noise introduced by the camera or the environment. It should also react quickly to changes in the background, such as vehicles starting and stopping [5, 6].

Color is an important feature of objects. Although background subtraction can be performed on binary frames for better performance, the processing can also be performed directly on color frames without binarizing them. In a color image, each pixel can be represented by a 3×1 vector holding the intensities of the three basic color components (Red, Green and Blue).
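When processing color frames directly, the per-pixel test has to compare two 3×1 vectors rather than two scalars. The sketch below uses the Euclidean distance between RGB vectors as one possible choice; the distance measure, the threshold of 40 and the function names `color_changed` and `color_foreground_mask` are assumptions, since the text does not specify them.

```python
import math

# Hypothetical sketch: per-pixel change test on color frames, treating each
# pixel as a 3x1 (R, G, B) vector. Euclidean distance and the threshold of 40
# are assumptions; the paper does not specify a color distance.


def color_changed(pixel, bg_pixel, threshold=40.0):
    """True if the RGB vectors differ by more than `threshold` (Euclidean)."""
    return math.dist(pixel, bg_pixel) > threshold


def color_foreground_mask(frame, background, threshold=40.0):
    """Binary mask over a color frame stored as rows of (R, G, B) tuples."""
    return [
        [1 if color_changed(p, b, threshold) else 0 for p, b in zip(f_row, b_row)]
        for f_row, b_row in zip(frame, background)
    ]


if __name__ == "__main__":
    background = [[(0, 130, 0), (0, 0, 0)]]
    frame = [[(255, 0, 0), (5, 5, 5)]]
    print(color_foreground_mask(frame, background))
```

In the usage example the red pixel replacing a green background pixel of similar brightness is flagged, a change that a grayscale comparison could miss. `math.dist` requires Python 3.8 or later.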

The most important aspect of the proposed method is that processing need not be done on the whole image frame. It is performed only in the boundary region (the shaded portion of the frame in Fig. 2). Once an object has been detected in the boundary region and its bounding-box and active-window have been defined, processing can be confined to the active-window.

Handling Objects Inside the Frame without Crossing the Frame Boundary

The proposed method performs boundary tracking of the frame. Moving objects that appear inside the frame without crossing the frame boundary (e.g. an object that emerges from behind a wall or comes out of a building) must therefore be taken into consideration, since the proposed method does not directly deal with objects appearing in the middle of the frame.

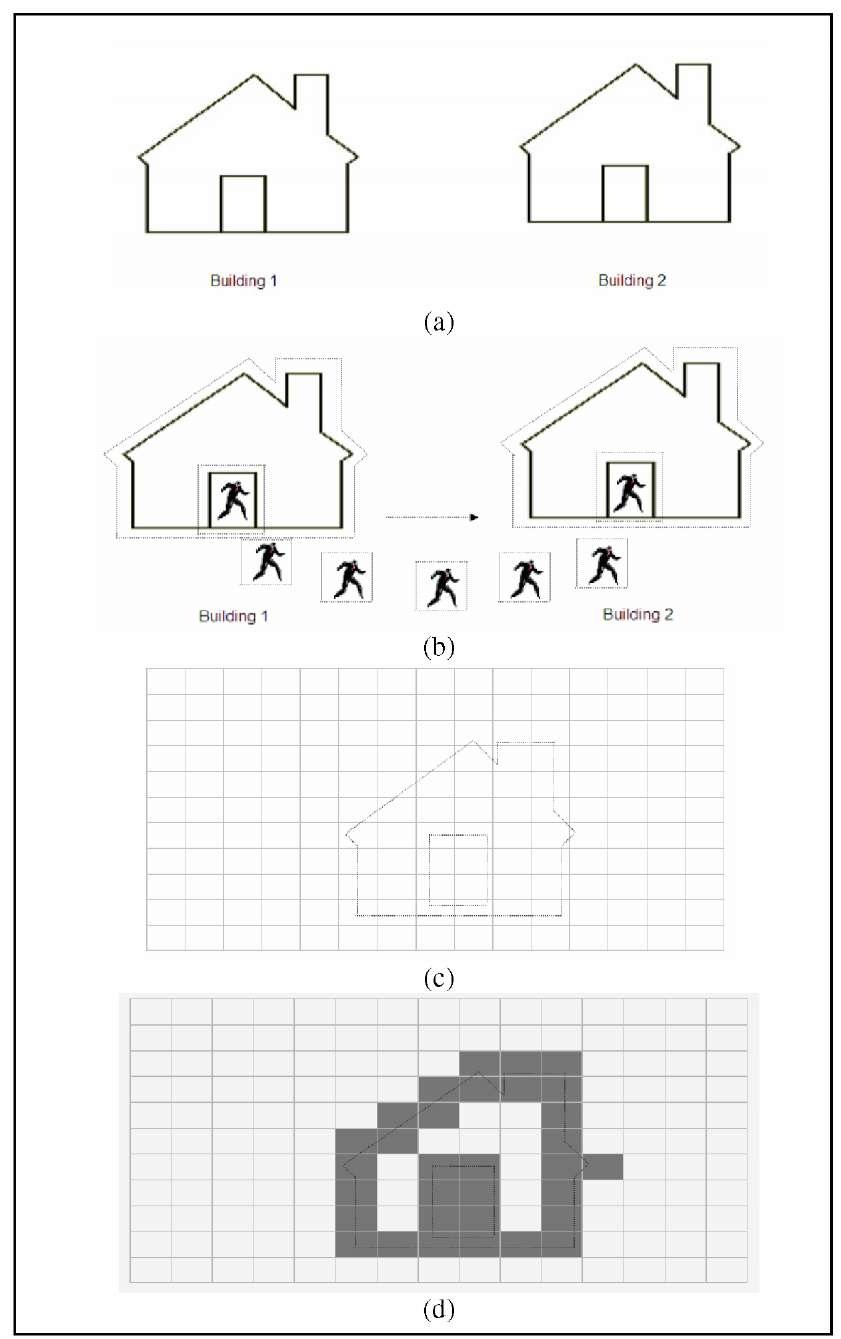

However, such objects can be handled in a different way. Consider, for example, a camera fixed for surveillance of a campus; as before, a fixed camera and a static background are assumed. Fig. 4(a) shows the campus of an organization with two buildings. The camera frame contains the two buildings and the premises around them. The possible sources of moving objects, and the portions of the frame that need to be tracked to detect them, are as follows:

i) Objects can pop up from inside the two buildings: The entrance/gate/doors of the buildings are to be tracked for detecting these objects.

ii) Objects can appear inside the frame from behind the buildings: The entire building contours are to be tracked for detecting these objects.

The surveillance system installed on the campus can be customized to deal with these possibilities. In addition to tracking the frame boundary as in the proposed method, the building contours and building entrances can be tracked to detect moving objects that appear in the frame without crossing the frame boundary. The region around the entrance of each building is shown with dotted lines. This is cheaper than differencing the whole image frame pixel by pixel.

Fig. 4. Boundary Tracking of a campus of an organization

The dotted rectangle around the person is the active-window that is tracked to detect the motion and position of the person. Fig. 4(b) shows a situation where a person comes out of Building 1 and walks to Building 2. The surveillance system can be customized to monitor this kind of movement. As the person crosses the boundary of the dotted rectangle across the entrance of Building 1, the system starts tracking the person and computes the active-window around him, shown by the dotted rectangle. As the person approaches Building 2, the system tracks him continuously until he disappears inside the building.

When stationary objects such as buildings are present inside the frame, it is only necessary to identify the potential areas where a moving object can appear. Fig. 4(c) shows the camera frame subdivided into small, equal-sized grid cells. Fig. 4(d) shows the grid cells that have to be processed to track the building boundaries and the entrances.
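Selecting the grid cells to process, as in Fig. 4(d), can be sketched by marking every cell that overlaps a region of interest such as an entrance or a building contour. This is a minimal illustration assuming square cells and rectangular regions written as `(top, left, bottom, right)` with bottom and right exclusive; the cell size of 40 pixels, the example entrance rectangle and the function name `cells_to_process` are hypothetical, not measured from Fig. 4.

```python
# Hypothetical sketch: pick the grid cells to process for a given set of
# regions of interest (e.g. building entrances or contours, as in Fig. 4(d)).
# Assumptions: square cells of `cell` pixels; regions are (top, left, bottom,
# right) rectangles with bottom/right exclusive; coordinates are illustrative.


def cells_to_process(height, width, cell, regions):
    """Return sorted (grid_row, grid_col) indices of cells overlapping any region."""
    selected = set()
    for top, left, bottom, right in regions:
        for gr in range(top // cell, (bottom - 1) // cell + 1):
            for gc in range(left // cell, (right - 1) // cell + 1):
                if gr * cell < height and gc * cell < width:
                    selected.add((gr, gc))
    return sorted(selected)


if __name__ == "__main__":
    entrance = (300, 100, 380, 180)  # hypothetical entrance rectangle
    cells = cells_to_process(480, 640, 40, [entrance])
    print(len(cells), "cells,", len(cells) * 40 * 40, "pixels")
```

A contour can be approximated by a list of thin rectangles along it; overlapping regions share cells, so each cell is processed only once.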

Defining the Bounding Box and the Active Window

It is important to define the bounding-box and the active-window properly. When an object enters the camera frame, frame subtraction is continued on the whole image frame for a few frames until the moving object has entirely entered the camera frame. Once the moving object is fully inside the frame, the bounding box that most tightly encloses the object is defined, and the active-window is computed from it. From then on, all frame differencing is applied only inside the active-window.

The position of the active-window changes over the camera frame as the object moves in different directions, so it must be updated in subsequent frames. This is done by finding the centroid of each bounding-box and measuring the displacement between the centroids in two consecutive frames. If the displacement is less than a predefined threshold, the position of the active-window is left unchanged in the next frame. Alternatively, the boundary of the active-window can be shifted by the horizontal and vertical separation of the two centroids. Another alternative is to fix the size of the active-window for all frames once it has been computed. This suits objects that move slowly and predictably in the frame, as shown in Fig. 5(b). If the speed of the object changes suddenly, however, an active-window defined very close to the bounding-box will fail to track the moving object correctly.
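The centroid-based update described above can be sketched as follows, combining the threshold test with the shift-by-centroid-separation alternative. This is a minimal sketch under stated assumptions: boxes and windows are `(top, left, bottom, right)` with bottom and right exclusive, the window keeps its size, the shift is rounded to whole pixels and clamped to the frame, and the names `centroid` and `update_window` are hypothetical.

```python
import math

# Hypothetical sketch of the centroid-based active-window update.
# Assumptions: boxes/windows are (top, left, bottom, right), bottom/right
# exclusive; the window keeps its size and is shifted by the centroid
# displacement, rounded to whole pixels, only when that displacement reaches
# `threshold`; it is then clamped to stay inside the frame.


def centroid(box):
    """Centre (row, col) of a box."""
    top, left, bottom, right = box
    return (top + bottom) / 2, (left + right) / 2


def update_window(window, prev_box, curr_box, threshold, height, width):
    """Return the active window to use in the next frame."""
    (r0, c0), (r1, c1) = centroid(prev_box), centroid(curr_box)
    dr, dc = r1 - r0, c1 - c0
    if math.hypot(dr, dc) < threshold:
        return window  # small displacement: keep the window where it is
    top, left, bottom, right = window
    h, w = bottom - top, right - left
    new_top = min(max(0, top + round(dr)), height - h)
    new_left = min(max(0, left + round(dc)), width - w)
    return new_top, new_left, new_top + h, new_left + w
```

Setting `threshold` to 0 shifts the window by the centroid separation on every frame, while never calling `update_window` corresponds to the fixed-window alternative.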

Algorithm for Moving Object Detection

The proposed algorithm for moving object detection is outlined as follows:

Begin

Step 1: Boundary Tracking

1.1. Apply frame differencing on the boundary region: subtract the corresponding portion of the background frame from each incoming frame.

1.2. If there is no change, repeat Step 1.1.

Step 2: Apply frame differencing (subtract the (i-1)-th frame from the i-th frame) until the object has entirely entered the camera frame.

Step 3: Define the initial bounding-box around the moving object and estimate the size of the initial active-window.

Step 4: Active-window processing

4.1. Apply frame differencing on active-window area only in successive frames.

4.2. Find out the bounding-box around the moving object.

4.3. Calculate and update the boundary of the active window.

4.4. Repeat Steps 4.1 through 4.3 until the object moves out of the frame.

End.
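The steps above can be sketched end to end as a small state machine (boundary watching, entering, tracking). This is a hypothetical illustration, not the authors' implementation, and it makes simplifications that are stated in the code: every difference is taken against the ground image rather than the (i-1)-th frame, "entirely inside" means the box no longer touches a frame edge, and the active window is recentred on each new bounding box. The function names and the values of `band`, `margin` and `threshold` are assumptions.

```python
# Hypothetical end-to-end sketch of Steps 1-4 (not the authors' implementation).
# Assumptions: grayscale frames as lists of rows of ints; boxes/windows are
# (top, left, bottom, right) with bottom/right exclusive; `band`, `margin` and
# `threshold` are illustrative values. Simplifications, stated plainly:
#  - differences are taken against the ground (background) image, not the
#    (i-1)-th frame, so the box covers the object's current extent;
#  - "entirely inside the frame" means the box does not touch any frame edge;
#  - the active window is recentred each frame (bounding box grown by margin).


def diff_mask(frame, ground, threshold, window):
    top, left, bottom, right = window
    return [[1 if abs(frame[r][c] - ground[r][c]) > threshold else 0
             for c in range(left, right)] for r in range(top, bottom)]


def bbox(mask, off_r=0, off_c=0):
    rows = [r for r, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    return (rows[0] + off_r, cols[0] + off_c,
            rows[-1] + 1 + off_r, cols[-1] + 1 + off_c)


def boundary_changed(frame, ground, band, threshold):
    h, w = len(frame), len(frame[0])
    return any(abs(frame[r][c] - ground[r][c]) > threshold
               for r in range(h) for c in range(w)
               if r < band or r >= h - band or c < band or c >= w - band)


def detect(frames, ground, band=1, margin=2, threshold=30):
    """Return (frame_index, bounding_box) pairs for frames where the object is tracked."""
    h, w = len(ground), len(ground[0])
    state, window, track = "boundary", None, []
    for i, frame in enumerate(frames):
        if state == "boundary":  # Step 1: difference the boundary band only
            if not boundary_changed(frame, ground, band, threshold):
                continue
            state = "entering"
        if state == "entering":  # Step 2: whole-frame differencing
            box = bbox(diff_mask(frame, ground, threshold, (0, 0, h, w)))
            if box is None:
                state = "boundary"
                continue
            if box[0] == 0 or box[1] == 0 or box[2] == h or box[3] == w:
                continue  # object still crossing the frame edge
            state = "tracking"  # Step 3: initial bounding box
        else:  # Step 4: differencing inside the active window only
            box = bbox(diff_mask(frame, ground, threshold, window), window[0], window[1])
            if box is None:  # object has left the window: back to Step 1
                state, window = "boundary", None
                continue
        window = (max(0, box[0] - margin), max(0, box[1] - margin),
                  min(h, box[2] + margin), min(w, box[3] + margin))
        track.append((i, box))
    return track
```

With a 2×2 object sliding in from the left edge one pixel per frame, nothing is reported while the object still touches the edge; from the first frame in which it is fully inside, its box is tracked using only the active-window pixels.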

Experimental Results

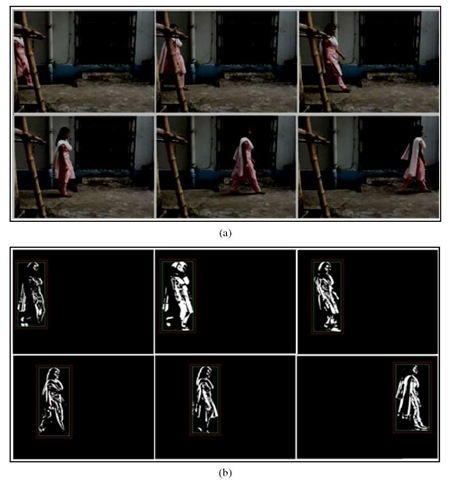

The experiments used sample color videos recorded at 30 fps with 480×640 resolution. For processing, each video was converted to grayscale, and binary frames were then used for background subtraction. Fig. 5(a) shows some frames of one of the test videos.

Fig. 5(b) shows some frames of the same video after applying frame differencing. The bounding-box is outlined by a green rectangle around the moving object, and the active-window is drawn as a red rectangle around the bounding-box. The centroid of each active-window is marked by a red dot inside the green rectangle.

Fig. 5. (a) A few frames from Original Video (b) Applying Frame Differencing using Bounding-box and Active-window
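The preprocessing used in the experiments (color to grayscale, then binary) can be sketched as below. The text does not state the conversion weights or the binarization threshold, so this minimal version assumes the common ITU-R BT.601 luma weights (0.299, 0.587, 0.114) and a threshold of 128; the function names `to_gray` and `binarize` are hypothetical.

```python
# Hypothetical preprocessing sketch: RGB -> grayscale -> binary.
# Assumptions: frames are rows of (R, G, B) tuples with 0-255 channels; the
# ITU-R BT.601 luma weights and the binarization threshold of 128 are common
# choices, not values reported in the paper.


def to_gray(rgb_frame):
    """Convert a color frame to 8-bit grayscale using BT.601 luma weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in rgb_frame]


def binarize(gray_frame, threshold=128):
    """Map each gray level to 1 if it is >= threshold, else 0."""
    return [[1 if v >= threshold else 0 for v in row] for row in gray_frame]


if __name__ == "__main__":
    frame = [[(255, 255, 255), (0, 0, 0)], [(255, 0, 0), (0, 255, 0)]]
    gray = to_gray(frame)
    print(gray, binarize(gray))
```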

Table 1. Some results obtained with the proposed method (frame size 480×640 = 307,200 pixels)

| Frame No. | AW Height (H) | AW Width (W) | Pixels used for background subtraction with AW (H×W) | Saving in pixels, (480×640) − (H×W) | Percentage saving |
|---|---|---|---|---|---|
| 13 | 316 | 145 | 45820 | 261380 | 85.08% |
| 14 | 339 | 160 | 54240 | 252960 | 82.34% |
| 15 | 341 | 144 | 49104 | 258096 | 84.02% |
| 16 | 341 | 175 | 59675 | 247525 | 80.57% |
| 17 | 339 | 187 | 63393 | 243807 | 79.36% |
| 18 | 339 | 170 | 57630 | 249570 | 81.24% |
| 19 | 336 | 160 | 53760 | 253440 | 82.50% |
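The derived columns of Table 1 follow directly from the AW dimensions. The short check below recomputes them from the H and W values listed in the table; like the table, it counts only the active-window pixels and leaves out the boundary band processed in Step 1. The names `TABLE_1` and `saving` are introduced here for illustration.

```python
# Recomputing the pixel-count columns of Table 1 from the AW dimensions.
# Assumption: as in the table, only the active window (H x W) is counted;
# the boundary band processed in Step 1 is not included.

FRAME_PIXELS = 480 * 640  # 307,200 pixels per frame

# (frame number, AW height H, AW width W), copied from Table 1
TABLE_1 = [(13, 316, 145), (14, 339, 160), (15, 341, 144), (16, 341, 175),
           (17, 339, 187), (18, 339, 170), (19, 336, 160)]


def saving(h, w, frame_pixels=FRAME_PIXELS):
    """Return (pixels used, pixels saved, percentage saved) for an H x W window."""
    used = h * w
    saved = frame_pixels - used
    return used, saved, 100 * saved / frame_pixels


if __name__ == "__main__":
    for frame_no, h, w in TABLE_1:
        used, saved, pct = saving(h, w)
        print(f"frame {frame_no}: used {used}, saved {saved}, {pct:.2f}%")
```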

For the test video shown in Fig. 5, an overall saving of 82.59% in the number of pixels processed was achieved. The method was also tested with other sample videos, and in most cases a saving of more than 80% was achieved. However, the improvement depends heavily on the type of video used for testing. In all the samples, the moving objects occupy a very small proportion of the overall frame. This is typical of surveillance video, where moving objects are small compared with the whole camera frame. There could be situations where a moving object passes very close to the camera and occupies a significant portion of the frame. Even in such scenarios, the proposed approach is expected to perform better than the conventional frame differencing approach, which processes the entire frame.

The active-window not only reduces the processing time and memory requirements of the conventional frame differencing approach to moving object detection, it also makes detection less susceptible to non-stationary changes in the background. When the active-window is used, all necessary processing in successive frames is confined to this window, so any non-stationary change outside the window is ignored by frame differencing. This greatly reduces the chance of mistaking non-stationary changes in the background for object motion.

Conclusion

The proposed methodology detects moving objects while maintaining low computational complexity and low memory requirements, which makes it suitable for a range of applications. It is particularly suited to online detection of moving objects on embedded mobile devices with limited computational power and memory. The approach could also be adopted for transmitting data about moving objects over a low-bandwidth link in a surveillance system. In future, the proposed methodology may be extended towards efficient modeling of the background. Illumination changes and non-stationary transitions in the background also remain open issues, and identifying shadows is another important task in this scenario. Addressing these issues while still providing a low-complexity solution is a real challenge.