[Figure labels: failure points 1 to 6; Node 1 to Node 6; SSKY_1 to SSKY_6; AV7 to AV18; GRID_DATA, PRD_DATA, PRD_FRA]

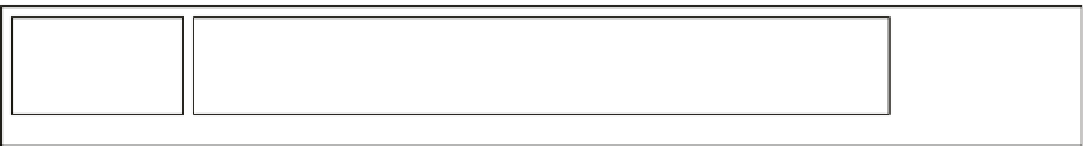

Figure 3-1. Points of failure in a RAC hardware configuration

We briefly examine each of these failure scenarios and discuss the methods available to protect against them.

Interconnect Failure

If the interconnect between nodes fails, whether because of a physical failure or a software failure in the communication or interprocess communication (IPC) layer, it appears to the Oracle Clusterware (OCW) at each end of the interconnect that the node at the other end has failed. The OCW should use an alternative method, such as checking for a quorum disk, to evaluate the status of the system. In the case of a complete communication link failure, a voting disk protocol is initiated. Whichever node claims the largest number of voting disks becomes the master. Because the communication link is down, the master writes a kill block to the disk. The instance on the losing node then terminates itself.

Eventually, the OCW may shut down both of the nodes involved in the operation or just one of the nodes at the ends of the failed connection. It evicts the node by means of fencing to prevent any continued writes that could corrupt the database.
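The voting disk arbitration described above can be sketched in a few lines of Python. This is only an illustrative model, not Oracle Clusterware code: the names `elect_master` and `kill_blocks`, the disk and node names, and the rule that a tie produces no master are all assumptions made for the example.

```python
from collections import Counter


def elect_master(disk_claims):
    """Return the node holding the most voting disks, or None on a tie.

    disk_claims maps each voting disk name to the node that claimed it.
    (Hypothetical data model; the tie rule is an assumption of this sketch.)
    """
    ranked = Counter(disk_claims.values()).most_common(2)
    if not ranked:
        return None  # no disk was claimed
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None  # tie: this sketch refuses to pick a winner
    return ranked[0][0]


def kill_blocks(master, nodes):
    """Model the kill blocks the master writes for every other node."""
    if master is None:
        return {}
    return {node: "KILL" for node in nodes if node != master}


# Two nodes race for three voting disks after the interconnect fails.
claims = {"vote1": "node1", "vote2": "node1", "vote3": "node2"}
master = elect_master(claims)
blocks = kill_blocks(master, ["node1", "node2"])
```

In this model an odd number of voting disks guarantees that a two-node race always has a winner, which is one reason to avoid an even count.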

Traditionally in a RAC environment, when a node or instance fails, a surviving instance is elected to perform instance recovery. The Global Enqueue Service and the Global Cache Service are reconfigured after the failure; the redo logs are merged and rolled forward, and transactions that have not been committed are rolled back.

This operation is performed by one of the surviving instances, which reads through the redo log files of the failed instance. Such recovery gives users immediate access to consistent data. However, while the clusterware is deciding which node(s) to shut down, access is denied and the recovery operation is delayed, so the data is unavailable. This is because recovery operations are not performed until the interconnect failure causes one of the instances or nodes to fail.
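The merge, roll-forward, and rollback steps of instance recovery described above can be illustrated with a small Python model. It is a deliberately simplified sketch, not Oracle's implementation: the `Redo` record layout, the `recover` function, and the choice to store the old value inside each redo record (standing in for undo data) are assumptions made for the example.

```python
import heapq
from typing import NamedTuple, Optional


class Redo(NamedTuple):
    """Hypothetical redo record; real redo records are far richer."""
    scn: int                    # ordering number of the change
    txn: str                    # transaction identifier
    op: str                     # "write" or "commit"
    key: Optional[str] = None   # block or row being changed
    old: Optional[int] = None   # value before the change (stands in for undo)
    new: Optional[int] = None   # value after the change


def recover(blocks, redo_threads):
    """Merge redo threads by SCN, roll forward, then roll back."""
    data = dict(blocks)
    # Merge: each thread is already ordered by SCN, so a k-way merge suffices.
    merged = list(heapq.merge(*redo_threads, key=lambda r: r.scn))
    committed = {r.txn for r in merged if r.op == "commit"}
    # Roll forward: reapply every logged change, committed or not.
    for r in merged:
        if r.op == "write":
            data[r.key] = r.new
    # Roll back: undo uncommitted changes, newest first.
    for r in reversed(merged):
        if r.op == "write" and r.txn not in committed:
            data[r.key] = r.old
    return data


thread1 = [Redo(10, "t1", "write", "a", 1, 5), Redo(30, "t1", "commit")]
thread2 = [Redo(20, "t2", "write", "b", 2, 9)]  # t2 never committed
recovered = recover({"a": 1, "b": 2}, [thread1, thread2])
```

Here the committed change to `a` survives recovery, while the uncommitted change to `b` is applied during roll forward and then reversed during rollback.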
