
improvements with high speed, high throughput interconnects (10GigE). For a full table scan, disk access achieves higher throughput than the global cache, because the global cache does not handle large I/Os well and is CPU intensive.

The trade-off is multidimensional: interconnect latency is accepted in place of disk access when the disks are slow (and therefore inexpensive). This is especially true in OLTP systems, which predominantly perform single random block accesses.
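The trade-off can be made concrete with a toy cost model. The Python sketch below assumes, purely for illustration, that the global cache ships one block per transfer while a disk read can fetch many contiguous blocks in a single multiblock I/O. All constants and function names are hypothetical placeholders, not Oracle parameters or measurements; substitute latencies observed on the actual system.

```python
import math

# Hypothetical costs in milliseconds; placeholders, not measured values.
GC_BLOCK_TRANSFER_MS = 0.5   # one block shipped over the interconnect
DISK_IO_MS = 10.0            # one physical disk read
BLOCKS_PER_DISK_IO = 128     # hypothetical blocks fetched per multiblock read


def global_cache_cost(n_blocks: int) -> float:
    # The global cache ships blocks one at a time, so cost grows linearly.
    return n_blocks * GC_BLOCK_TRANSFER_MS


def disk_cost(n_blocks: int) -> float:
    # A multiblock read fetches up to BLOCKS_PER_DISK_IO blocks per I/O.
    return math.ceil(n_blocks / BLOCKS_PER_DISK_IO) * DISK_IO_MS


def cheaper_path(n_blocks: int) -> str:
    # Pick whichever access path has the lower modeled cost.
    if global_cache_cost(n_blocks) < disk_cost(n_blocks):
        return "global cache"
    return "disk"


print(cheaper_path(1))       # single random block (OLTP): global cache
print(cheaper_path(10_000))  # full table scan: disk
```

With these placeholder numbers, a single random block is cheaper to fetch over the interconnect, while a 10,000-block scan is cheaper from disk; faster disks (a lower `DISK_IO_MS`) move the crossover point toward disk.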

We look at two scenarios to better understand RAC behavior.

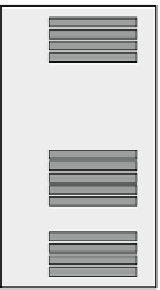

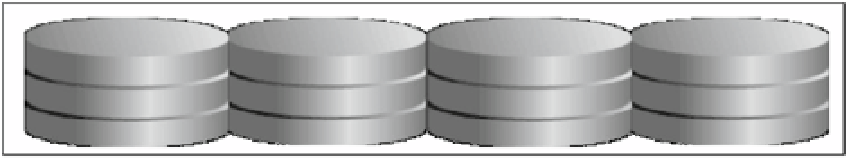

Scenario 1: Block Request Involving Two Instances

Figure 13-3 shows a four-node RAC cluster. All instances maintain masters for various objects, represented by R1 through R8. In our discussion, we assume that the data blocks for the object discussed are mastered on instance 4 (SSKY4).

1. The user session requests a block of data, and the GCS process checks the local cache for the data block; when the block is not found, GCS sends a request to the object master. In Figure 13-3, instance SSKY3 requires a row from the block at data block address file# 100, block# 500 (100/500) and sends a request to the GCS of the resource master.

Figure 13-3. Cache Fusion 2-way (instances SSKY1 through SSKY4 of database SSKYDB; resource masters R1 through R8; block 500 at SCN 9996)

2. GCS on SSKY4, after checking the GRD, determines that the block is currently in its local cache. Because the block is found on instance SSKY4, the GCS sends the block to the requesting instance SSKY3.
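The two steps above can be traced with a minimal Python model. It is a sketch under simplifying assumptions, not Oracle's implementation: the `Instance`, `Cluster`, and `read_block` names are hypothetical, the GRD is reduced to a dictionary from data block address to master instance, and the master is assumed to hold the block in its buffer cache, as in this scenario. The block address 100/500 and SCN 9996 are taken from Figure 13-3.

```python
from dataclasses import dataclass, field

# A data block address (DBA) as (file#, block#), e.g. (100, 500).
Dba = tuple[int, int]


@dataclass
class Instance:
    name: str
    # Simplified buffer cache: DBA -> SCN of the cached block image.
    buffer_cache: dict[Dba, int] = field(default_factory=dict)


@dataclass
class Cluster:
    instances: dict[str, Instance]
    # Hypothetical, simplified GRD: DBA -> name of the master instance.
    grd: dict[Dba, str]

    def read_block(self, requester: str, dba: Dba) -> tuple[int, list[str]]:
        """Return (SCN, message trace) for one block read."""
        trace: list[str] = []
        local = self.instances[requester]
        # Check the local cache first; no messages are needed on a hit.
        if dba in local.buffer_cache:
            trace.append(f"{requester}: local cache hit")
            return local.buffer_cache[dba], trace
        # Step 1: not found locally, so ask the master of the resource.
        master_name = self.grd[dba]
        trace.append(f"{requester} -> {master_name}: request {dba[0]}/{dba[1]}")
        master = self.instances[master_name]
        # Step 2: the master holds the block, so it ships it to the requester.
        if dba not in master.buffer_cache:
            raise LookupError("block not cached on the master; not a 2-way transfer")
        scn = master.buffer_cache[dba]
        local.buffer_cache[dba] = scn
        trace.append(f"{master_name} -> {requester}: block {dba[0]}/{dba[1]} (SCN {scn})")
        return scn, trace


instances = {name: Instance(name) for name in ("SSKY1", "SSKY2", "SSKY3", "SSKY4")}
instances["SSKY4"].buffer_cache[(100, 500)] = 9996
cluster = Cluster(instances, grd={(100, 500): "SSKY4"})
scn, trace = cluster.read_block("SSKY3", (100, 500))
for message in trace:
    print(message)
# SSKY3 -> SSKY4: request 100/500
# SSKY4 -> SSKY3: block 100/500 (SCN 9996)
```

The model counts exactly two messages (request and block transfer), which is what makes this a 2-way transfer; a second read of the same block from SSKY3 is then satisfied locally.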

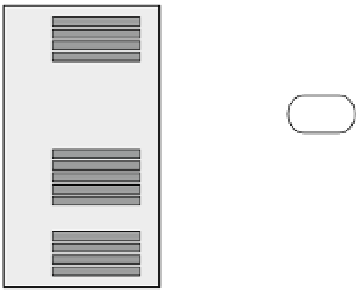

The instrumentation required to determine the time taken for each of these steps is available within the Oracle Database in the GV$SYSSTAT and GV$SESSTAT views. RAC-specific statistics are in classes 8 (cache), 32 (Real Application Clusters), and 40 (both, because the class value is a bit mask and 40 = 8 + 32) (see Figure 13-4), and are grouped under "Other Instance Activity Stats" in an AWR report.
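Because the CLASS value (exposed in V$STATNAME and V$SYSSTAT) is a bit mask whose documented bits are 1 User, 2 Redo, 4 Enqueue, 8 Cache, 16 OS, 32 Real Application Clusters, 64 SQL, and 128 Debug, a class number can be decoded into its component classes. The Python sketch below is illustrative only; `STAT_CLASSES` and `decode_class` are hypothetical names, not part of any Oracle API.

```python
# Bit values of the statistic CLASS column, as listed in the Oracle
# Database Reference for V$STATNAME.
STAT_CLASSES = {
    1: "User",
    2: "Redo",
    4: "Enqueue",
    8: "Cache",
    16: "OS",
    32: "Real Application Clusters",
    64: "SQL",
    128: "Debug",
}


def decode_class(value: int) -> list[str]:
    # Return every class whose bit is set, in ascending bit order.
    return [name for bit, name in STAT_CLASSES.items() if value & bit]


for cls in (8, 32, 40):
    print(cls, decode_class(cls))
# 8 ['Cache']
# 32 ['Real Application Clusters']
# 40 ['Cache', 'Real Application Clusters']
```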
