Database Reference


There is a subtle difference between Oracle Database 11g Release 2 and Oracle Database 12c Release 1. In the earlier version, the repository used to store the diagnostic data was a Berkeley DB database. In Oracle Database 12c Release 1, the repository is an Oracle database, called the management instance, that is installed as part of the Grid Infrastructure (GI) installation.

CHM provides real-time monitoring, continuously tracking O/S resource consumption at the node, process, and device levels to help diagnose degradation and failures of the various components in a RAC environment. In versions 10g and 11g, the real-time data was available through a GUI, and historical data could be replayed from the repository stored on individual nodes in the cluster. This made it possible to analyze issues such as high interconnect latency, high CPU usage, runaway processes, long run queues, and, of course, the causes that lead to node evictions.

In Oracle Database 12c Release 1, only a command-line interface is available to analyze the data.
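As a rough illustration of that command-line workflow, the sketch below assembles an argument list for `oclumon dumpnodeview`, the oclumon subcommand that prints collected node metrics. The helper name `dumpnodeview_args` and the node names are hypothetical. The sketch only builds and prints the command rather than running it, because oclumon is present only on servers where Grid Infrastructure is installed. It assumes the documented `-allnodes`, `-n`, and `-last "HH:MM:SS"` options.

```python
import shlex
from typing import List, Optional, Sequence


def dumpnodeview_args(nodes: Optional[Sequence[str]] = None,
                      last: Optional[str] = None) -> List[str]:
    """Build an argument list for `oclumon dumpnodeview` (hypothetical helper).

    nodes: node names to query; None or empty means every node (-allnodes).
    last:  optional look-back window as "HH:MM:SS" (the -last option).
    """
    args = ["oclumon", "dumpnodeview"]
    if nodes:
        args += ["-n", *nodes]
    else:
        args.append("-allnodes")
    if last is not None:
        args += ["-last", last]
    return args


if __name__ == "__main__":
    # Print the commands instead of executing them.
    print(shlex.join(dumpnodeview_args()))
    # "node1" and "node2" are placeholder node names.
    print(shlex.join(dumpnodeview_args(["node1", "node2"], last="00:05:00")))
```

On a cluster node, the resulting list could be passed to `subprocess.run` by a user who has oclumon on the PATH. Building the list separately keeps the option handling easy to check without access to a cluster.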

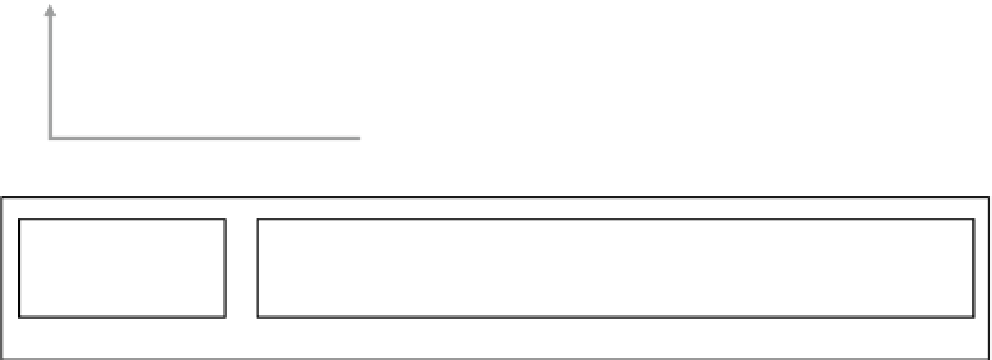

Architecture

Similar to the Oracle Clusterware, the CHM architecture is robust (as illustrated in Figure 6-21). CHM has several daemon processes that run on all servers to perform various tasks.

[Figure: a six-node cluster (Node 1 through Node 6) running instances SSKY1 through SSKY6. osysmond runs on every node and ologgerd on two of them. Shared storage is shown as disks AV7 through AV16, with the labels GRID_DATA and PRD_DATA.]

Figure 6-21. CHM Oracle Database 12c architecture

CHM consists of a database and three daemons:

• oproxyd is the proxy daemon that handles connections (prior to Oracle 12c). All connections are made via the public interface. If CHM is configured to use a dedicated private interface, then oproxyd listens on the public interface for external clients such as oclumon and crfgui (discussed later in this section). It is configured to run on all nodes in the cluster.

• osysmond is the primary daemon responsible for monitoring and O/S metric collection. It collects metrics on all nodes in the cluster and sends the data to the ologgerd daemon process. osysmond is also responsible for starting ologgerd, and if ologgerd dies for any reason, osysmond restarts it.
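The relationship in which osysmond starts ologgerd and restarts it after a failure is a supervisor (watchdog) pattern. The sketch below illustrates only that general pattern and is not how the Oracle daemons are implemented. `FakeProcess` and `Supervisor` are hypothetical names, and the child is a simple in-memory stand-in rather than a real operating system process.

```python
from typing import Callable


class FakeProcess:
    """Stand-in for a child daemon; a real one would be an OS process."""

    def __init__(self) -> None:
        self.alive = True

    def kill(self) -> None:
        self.alive = False


class Supervisor:
    """Starts a child and restarts it whenever a check finds it dead."""

    def __init__(self, spawn: Callable[[], FakeProcess]) -> None:
        self._spawn = spawn
        self.child = spawn()  # initial start of the child
        self.restarts = 0

    def check(self) -> None:
        """One monitoring pass: respawn the child if it has died."""
        if not self.child.alive:
            self.child = self._spawn()
            self.restarts += 1


if __name__ == "__main__":
    sup = Supervisor(FakeProcess)
    sup.child.kill()  # simulate the logger dying
    sup.check()       # the supervisor notices and restarts it
    print(sup.child.alive, sup.restarts)
```

In a real supervisor, `check` would typically run on a timer or react to a child-exit notification. It is called explicitly here to keep the example deterministic.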
