Database Reference

In-Depth Information

Level 0

All

Level 1

A

B

C

D

Level 2

AB

AC

AD

BC

BD

CD

Level 3

ABC

ABD

ACD

BCD

Level 4

ABCD

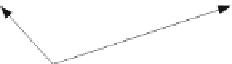

Fig. 7.6

A data cube lattice

The simplest optimizations for computing the cube lattice are:

Smallest-parent: Computes each view from the smallest previously com-

puted one. In the lattice of Fig.

7.6

,

AB

can be computed from

ABC

,

ABD

,

or

ABCD

. This method chooses the smallest of them.

Cache-results: Caches in memory an aggregation from which other ones

can be computed.

Amortize-scans: Computes in memory as many aggregations as possible,

reducing the amount of table scans.

Share-sorts: Applies only to methods based on sorting and aims at sharing

costs between several aggregations.

Share-partitions: These are specific to algorithms based on hashing. When

the hash table is too large to fit in main memory, data are partitioned

and aggregation is performed for each partition that fits in memory. The

partitioning cost can be shared across multiple aggregations.

Note that these methods can be contradictory. For instance, share-sorts would

induce to prefer

AB

to be derived from

ABC

, while

ABD

could be its smallest

parent. Sophisticated cube computation methods try to combine together

some of these simple optimization techniques to produce an ecient query

evaluation plan. We explain below a method based on sorting. Methods based

on hashing follow a similar rationale. Note that most of these algorithms

require the estimation of the sizes of each aggregate view in the lattice.

7.4.1 PipeSort Algorithm

The

PipeSort algorithm

gives a global strategy for computing the data

cube, which includes the first four optimization methods specified above.

Search WWH ::

Custom Search