
DATA

DATA

DATA

DATA

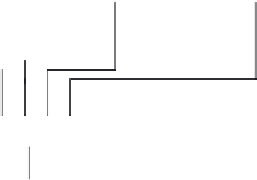

Step 1

Step 2

Compare

MUX

Step 3

DATA

DATA

DATA

DATA

Step 1

Step 2

Compare

MUX

Step 3

FIGURE 4.24:

Phased Cache: Tags first, data later.

Depending on the prediction accuracy, this scheme's performance and power consumption approach those of a small, fast direct-mapped cache. With mispredictions, however, the sequential search can be much more expensive in power than a phased cache, and almost certainly slower. Moreover, this scheme suffers considerably on misses, since it consumes the maximum energy per access just to discover that the data must be fetched from a lower level of the hierarchy.
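The trade-off above can be made concrete with a toy per-access model. This is a minimal sketch under stated assumptions: the energy costs `E_TAG` and `E_DATA` are hypothetical unit values, not measurements; each probe of the sequential scheme is assumed to read one tag way and one data way in one cycle; and a phased cache is assumed to detect a miss after its tag phase alone. All function names are illustrative.

```python
from typing import Optional, Tuple

# Hypothetical per-way energy costs in arbitrary units (assumptions, not measurements).
E_TAG = 1.0   # read one tag way
E_DATA = 4.0  # read one data way (data ways are wider than tag ways)


def direct_mapped() -> Tuple[float, int]:
    """Baseline: one tag and one data way, read in parallel in one cycle."""
    return E_TAG + E_DATA, 1


def phased(ways: int, hit: bool) -> Tuple[float, int]:
    """Phased cache: all tags in the first cycle, then at most one data way."""
    if hit:
        return ways * E_TAG + E_DATA, 2
    return ways * E_TAG, 1  # assumed: the tag phase alone reveals the miss


def sequential(ways: int, hit_position: Optional[int]) -> Tuple[float, int]:
    """Sequential search starting at the predicted way, one way per cycle.

    hit_position is the 1-based probe on which the block is found
    (1 = correct prediction); None means a miss, so every way is probed.
    """
    probes = ways if hit_position is None else hit_position
    return probes * (E_TAG + E_DATA), probes


def expected_sequential_energy(ways: int, hit_rate: float, accuracy: float) -> float:
    """Average energy per access, assuming a mispredicted block is equally
    likely to sit in any of the other (ways - 1) ways."""
    probe = E_TAG + E_DATA
    mispredict_probes = 1 + ways / 2  # wasted first probe + mean of 1..ways-1
    hit_energy = accuracy * probe + (1 - accuracy) * mispredict_probes * probe
    return hit_rate * hit_energy + (1 - hit_rate) * ways * probe


if __name__ == "__main__":
    for label, (energy, cycles) in [
        ("direct-mapped", direct_mapped()),
        ("phased hit", phased(4, True)),
        ("phased miss", phased(4, False)),
        ("sequential, correct prediction", sequential(4, 1)),
        ("sequential, found on 3rd probe", sequential(4, 3)),
        ("sequential miss", sequential(4, None)),
    ]:
        print(f"{label:32s} energy={energy:5.1f} cycles={cycles}")
```

With these illustrative costs and a 4-way cache, a correctly predicted access costs 5.0 units in one cycle, the same as the direct-mapped baseline, while a phased hit costs 8.0 units over two cycles. A sequential miss, however, costs 20.0 units and four cycles, against 4.0 units for a phased miss.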

☞ Earlier work on pseudo-associativity: The idea of a sequentially accessed set-associative cache was followed by work on pseudo-associativity, which eventually led to way prediction.
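A sequentially accessed set-associative cache can be sketched as one set whose ways are probed one at a time. This minimal sketch assumes most-recently-used-first probe order and LRU replacement; other probe orders are possible. The class name `SequentialSet` is hypothetical. The first probe acts as a guess of the hitting way, which is the seed of way prediction.

```python
from typing import List


class SequentialSet:
    """One set of an N-way cache, searched one way at a time, MRU way first.

    Assumptions for illustration: LRU replacement, and the recency
    list doubles as the probe order.
    """

    def __init__(self, ways: int) -> None:
        self.ways = ways
        self.mru: List[int] = []  # resident tags, most recently used first

    def access(self, tag: int) -> int:
        """Return how many ways were probed for this access."""
        if tag in self.mru:
            probes = self.mru.index(tag) + 1  # 1 means the MRU "guess" was right
            self.mru.remove(tag)
        else:
            probes = self.ways  # every way is checked before declaring a miss
            if len(self.mru) == self.ways:
                self.mru.pop()  # evict the least recently used tag
        self.mru.insert(0, tag)  # accessed tag becomes the MRU way
        return probes


if __name__ == "__main__":
    s = SequentialSet(ways=4)
    print([s.access(t) for t in [1, 2, 1, 1, 3, 2]])
```

For the trace shown, the probe counts are 4, 4, 2, 1, 4, 3. Only the repeated access to tag 1 hits on the first probe, at direct-mapped cost. Every other access pays for extra sequential probes.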

Early work focused on improving the miss ratio of direct-mapped caches by endowing them with the illusion of associativity [43, 122, 3, 4]. This work was driven by performance considerations, not power, which was a secondary concern at the time.
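The "illusion of associativity" can be illustrated with a toy version of the hash-rehash idea as it is commonly described. On a miss in the primary direct-mapped slot, a second slot is probed, here obtained by flipping the most significant index bit. A hit there swaps the two blocks. A miss in both places the new block in the primary slot and moves the old occupant to the rehash slot. This is a sketch, not a faithful model of any published design. It assumes one-word blocks, block addresses as input, and full block addresses stored in place of tags. `HashRehashCache` and `direct_mapped_misses` are hypothetical names.

```python
from typing import Iterable, List, Optional


def direct_mapped_misses(trace: Iterable[int], num_sets: int) -> int:
    """Count misses of a plain direct-mapped cache (baseline)."""
    slots: List[Optional[int]] = [None] * num_sets
    misses = 0
    for block in trace:
        index = block % num_sets
        if slots[index] != block:
            slots[index] = block
            misses += 1
    return misses


class HashRehashCache:
    """Toy direct-mapped cache with a second (rehash) probe location."""

    def __init__(self, num_sets: int) -> None:
        assert num_sets >= 2 and num_sets & (num_sets - 1) == 0, "power of two"
        self.num_sets = num_sets
        self.slots: List[Optional[int]] = [None] * num_sets  # full block addresses
        self.fast_hits = self.slow_hits = self.misses = 0

    def _rehash(self, index: int) -> int:
        # Assumed rehash function: flip the most significant index bit.
        return index ^ (self.num_sets >> 1)

    def access(self, block: int) -> str:
        first = block % self.num_sets
        second = self._rehash(first)
        if self.slots[first] == block:
            self.fast_hits += 1
            return "fast hit"
        if self.slots[second] == block:
            # Swap so the next reference to this block hits on the first probe.
            self.slots[first], self.slots[second] = self.slots[second], self.slots[first]
            self.slow_hits += 1
            return "slow hit"
        # Miss in both: new block takes the primary slot; old occupant moves over.
        self.slots[second] = self.slots[first]
        self.slots[first] = block
        self.misses += 1
        return "miss"


if __name__ == "__main__":
    trace = [0, 8] * 4  # two blocks that conflict in an 8-set direct-mapped cache
    cache = HashRehashCache(num_sets=8)
    print([cache.access(b) for b in trace])
    print("direct-mapped misses:", direct_mapped_misses(trace, 8))
```

On this conflicting trace, the plain direct-mapped cache misses on all eight accesses. The hash-rehash sketch misses only twice, but each later hit needs a second probe, so some of the saved misses reappear as extra latency and energy.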

Direct-mapped caches are faster than set-associative caches and can be easier to implement and pipeline [99]. Any enhancement that brings their miss ratio closer to that of set-associative caches without compromising their latency can potentially make them top performers. In this direction, work such as the Hash-Rehash cache (Agarwal et al. [4]), the Column-associative cache (Agarwal et al. [3]), and the PSA
