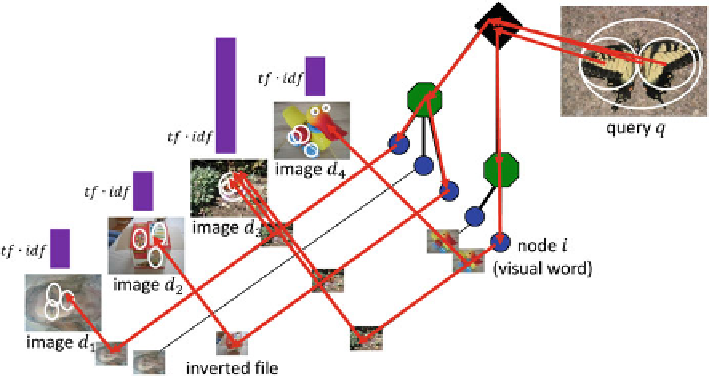

Fig. 4.4 Image search scheme with visual vocabulary tree. Note that the white circle in the image corresponds to a local descriptor (not an O-query)

for the BoW model to map each local feature and, subsequently, to construct a BoW representation. By building the codebook with hierarchical K-means clustering, this algorithm shortens the codebook generation process. It is therefore scalable and efficient for processing large-scale data. Specifically, the CVT algorithm is able to reduce the following ambiguities:

- Sometimes, issuing only the O-query in image-based search engines may lead to too many similar results. The surrounding pixels provide a useful context to differentiate those results.

- Sometimes, the O-query may not have (near) duplicates or may not exist in the image database, so issuing only the O-query may not return any search results. The surrounding pixels can then provide a context for finding images with similar backgrounds.

- Building the codebook with hierarchical K-means clustering makes retrieval efficient: each queried local feature passes through only one branch at the highest level and that branch's sub-branches, rather than through the entire codebook.
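The single-branch descent described above can be illustrated with a minimal sketch. It assumes a plain Lloyd's K-means written with NumPy, random vectors standing in for SIFT descriptors, and hypothetical names (`kmeans`, `Node`, `build_tree`, `quantize`, `count_leaves`) with arbitrary branching factor and depth; it is not the authors' implementation.

```python
import numpy as np

def kmeans(points, k, iters=10, seed=0):
    """Plain Lloyd's k-means; returns (centers, labels)."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)].copy()
    labels = np.zeros(len(points), dtype=int)
    for _ in range(iters):
        # Assign every point to its nearest center.
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its members (skip empty clusters).
        for j in range(k):
            members = points[labels == j]
            if len(members) > 0:
                centers[j] = members.mean(axis=0)
    return centers, labels

class Node:
    def __init__(self):
        self.centers = None   # (k, d) child centers; None for a leaf
        self.children = []    # child Node objects
        self.word_id = None   # visual-word index; set only on leaves

def build_tree(points, branching, depth, counter=None):
    """Recursively cluster descriptors; each leaf becomes one visual word."""
    if counter is None:
        counter = [0]
    node = Node()
    if depth == 0 or len(points) < branching:
        node.word_id = counter[0]
        counter[0] += 1
        return node
    node.centers, labels = kmeans(points, branching)
    for j in range(branching):
        subset = points[labels == j]
        node.children.append(build_tree(subset, branching, depth - 1, counter))
    return node

def quantize(tree, descriptor):
    """Follow one branch per level; returns (word_id, distance comparisons made)."""
    node, comparisons = tree, 0
    while node.word_id is None:
        dists = np.linalg.norm(node.centers - descriptor, axis=1)
        comparisons += len(dists)
        node = node.children[int(dists.argmin())]
    return node.word_id, comparisons

def count_leaves(node):
    """Number of visual words (leaves) in the tree."""
    if node.word_id is not None:
        return 1
    return sum(count_leaves(child) for child in node.children)

# Random 128-dimensional vectors stand in for SIFT descriptors (assumption).
rng = np.random.default_rng(42)
descriptors = rng.random((500, 128))
tree = build_tree(descriptors, branching=4, depth=3)
word, comparisons = quantize(tree, descriptors[0])
print(word, comparisons, count_leaves(tree))
```

In this sketch a query descriptor is compared against at most `branching * depth` centers, whereas a flat codebook of the same size would require one comparison per visual word.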

The CVT-based visual search method assigns different weights to term frequencies inside and outside the O-query. For off-line image indexing, SIFT local descriptors are extracted as a first step. Since our target database is large-scale, an efficient hierarchical K-means is used to cluster the local descriptors and build the CVT. The large-scale image collection is then indexed using the built CVT and the inverted file mechanism, which is introduced in the following.
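As a rough illustration of how an inverted file can be combined with different term-frequency weights inside and outside the O-query, consider the sketch below. The weight values (`w_in`, `w_out`), the dot-product scoring, and all function names are assumptions made for illustration; this section does not specify the actual weighting scheme, and visual word IDs are assumed to come from a quantizer such as the CVT.

```python
from collections import Counter, defaultdict

def build_inverted_file(image_words):
    """Map each visual word to the images containing it, with term frequencies."""
    inverted = defaultdict(dict)
    for image_id, words in image_words.items():
        for word, tf in Counter(words).items():
            inverted[word][image_id] = tf
    return inverted

def weighted_query_histogram(words, inside_flags, w_in=1.0, w_out=0.5):
    """Accumulate query term frequencies, weighting words inside the O-query
    by w_in and context words outside it by w_out (hypothetical values)."""
    hist = defaultdict(float)
    for word, inside in zip(words, inside_flags):
        hist[word] += w_in if inside else w_out
    return dict(hist)

def search(inverted, query_hist):
    """Score only images that share a visual word with the query."""
    scores = defaultdict(float)
    for word, q_weight in query_hist.items():
        for image_id, tf in inverted.get(word, {}).items():
            scores[image_id] += q_weight * tf
    # Highest score first; ties broken by image id.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))

# Toy database: image id -> visual word ids of its local descriptors.
database = {"a": [1, 1, 2], "b": [2, 3], "c": [4]}
inverted = build_inverted_file(database)

# Query with one descriptor inside the O-query and two in the surrounding context.
query = weighted_query_histogram([1, 2, 3], [True, False, False])
print(search(inverted, query))
```

Because the lookup touches only the posting lists of the query's visual words, images with no word in common with the query (image `"c"` here) are never scored.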
