Image Processing Reference

The third stage is orientation assignment. It starts by calculating the Haar wavelet responses (wavelet side length $4s$, where $s$ is the scale at which the interest point was detected) in the $x$ and $y$ directions within a circular neighborhood of radius $6s$ around the interest point. The responses are weighted with a Gaussian ($\sigma = 2s$) centered at the interest point. To estimate the dominant orientation, the horizontal and vertical responses are then summed within a sliding orientation window of size $\pi/3$. The two sums form a local orientation vector for each window, and the longest such vector over all windows defines the orientation of the interest point.
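The sliding-window search can be sketched in a few lines of Python. This is a minimal illustration, not the reference SURF implementation: the function name `dominant_orientation`, the number of window positions (`steps=72`), and the assumption that the responses arrive already Gaussian-weighted are all choices made here for the example.

```python
import math

def dominant_orientation(responses, window=math.pi / 3, steps=72):
    """Estimate the dominant orientation from Haar wavelet responses.

    responses: list of (dx, dy) pairs, assumed to be Gaussian-weighted already.
    steps: number of window start angles to try (an assumption of this sketch).
    Returns the angle in radians, in [0, 2*pi), of the longest summed vector.
    """
    two_pi = 2 * math.pi
    # Angle of each individual response, mapped to [0, 2*pi).
    angles = [math.atan2(dy, dx) % two_pi for dx, dy in responses]
    best_len_sq, best_angle = -1.0, 0.0
    for k in range(steps):
        start = two_pi * k / steps
        sum_x = sum_y = 0.0
        for (dx, dy), angle in zip(responses, angles):
            # Keep responses whose angle lies inside [start, start + window).
            if (angle - start) % two_pi < window:
                sum_x += dx
                sum_y += dy
        len_sq = sum_x * sum_x + sum_y * sum_y
        if len_sq > best_len_sq:
            # The longest local orientation vector so far defines the result.
            best_len_sq = len_sq
            best_angle = math.atan2(sum_y, sum_x) % two_pi
    return best_angle
```

For example, three responses pointing roughly upward and one weaker response pointing right yield an orientation of about $\pi/2$, because the window that covers the three upward responses produces the longest summed vector.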

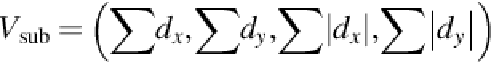

The last stage in SURF is the descriptor, which is based on sums of Haar wavelet responses. To extract the descriptor, the first step is to construct a square region of size $20s$, centered on the interest point and oriented along the selected orientation. The region is split regularly into 4 × 4 smaller square subregions. For each subregion, Haar wavelet responses are computed at 5 × 5 regularly spaced sample points. The Haar wavelet response in the horizontal direction is denoted by $d_x$ and the response in the vertical direction by $d_y$. The responses $d_x$ and $d_y$ are first weighted with a Gaussian ($\sigma = 3.3s$) centered at the interest point. In addition, the absolute values $|d_x|$ and $|d_y|$ are summed to bring in information about the polarity of the intensity changes. Hence, each subregion has a four-dimensional descriptor vector $V_{sub}$:

$$V_{sub} = \left( \sum d_x,\ \sum d_y,\ \sum |d_x|,\ \sum |d_y| \right) \tag{3}$$
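As a small illustration, the four sums for one subregion can be computed as follows. The function name `subregion_vector` is hypothetical, and the inputs are assumed to be the already weighted responses at the subregion's sample points.

```python
def subregion_vector(dx, dy):
    """Return (sum dx, sum dy, sum |dx|, sum |dy|) for one subregion.

    dx, dy: sequences of horizontal and vertical Haar wavelet responses,
    assumed to be Gaussian-weighted already (one value per sample point).
    """
    return (
        sum(dx),
        sum(dy),
        sum(abs(v) for v in dx),
        sum(abs(v) for v in dy),
    )
```

The signed sums can cancel when the intensity changes direction inside the subregion, whereas the sums of absolute values keep the total amount of change, which is why both kinds of sum are stored.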

Concatenating the vectors of all 4 × 4 subregions results in a descriptor vector of length 4 × (4 × 4) = 64 [17, 18]. To judge whether two feature points from different images match, the distance between their descriptor vectors is calculated. Finally, it is worth noting that SURF ignores the geometric relationship between the features, which is a very important characteristic of many objects in an image. For that reason, this chapter presents a proposed algorithm to detect and match objects based on its own constructed signatures.
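The descriptor length and the distance-based matching described above can be sketched as follows. The text does not say which distance is used, so the Euclidean distance here is an assumption, as are the function names and the `threshold` value, which is purely illustrative.

```python
import math

def build_descriptor(subregion_vectors):
    """Concatenate 16 four-dimensional subregion vectors into 64 values."""
    if len(subregion_vectors) != 16:
        raise ValueError("expected 4 x 4 = 16 subregion vectors")
    return [value for vec in subregion_vectors for value in vec]

def descriptor_distance(a, b):
    """Euclidean distance between two descriptors (assumed metric)."""
    if len(a) != len(b):
        raise ValueError("descriptors must have the same length")
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def is_match(a, b, threshold=0.5):
    """Treat two feature points as matched if their distance is small.

    The threshold is a hypothetical value chosen for this sketch only.
    """
    return descriptor_distance(a, b) < threshold
```

In practice a fixed threshold is only one possible decision rule; comparing the nearest and second-nearest distances is a common alternative, but it is not described in the text.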

3 Overview on Image Segmentation

The idea of segmentation is to split an image into regions, each containing pixels with similar characteristics such as texture information, motion, or color, whereas the detec[...] In addition, these regions should be strongly related to the detected objects or features of interest to be meaningful and useful for image analysis and interpretation. The transformation of a grayscale or color image from a low-level image into one or more high-level images, which depends on features, objects, and scenes, represents the first step in significant segmentation. In general, accurate partitioning of an image is the main challenge in image analysis, and its success depends on the consistency of segmentation
