Image Processing Reference

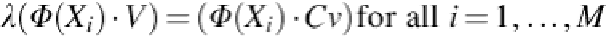

To do this, the eigenvalue equation $\lambda v = Cv$ should be solved for eigenvalues $\lambda \geq 0$ and eigenvectors $v \in F$.

As $Cv = \frac{1}{M} \sum_{i=1}^{M} (\Phi(X_i) \cdot v)\, \Phi(X_i)$, all solutions $v$ with $\lambda \neq 0$ lie within the span of $\Phi(X_1), \ldots, \Phi(X_M)$. Coefficients $\alpha_i$ ($i = 1, \ldots, M$) therefore exist such that

$$v = \sum_{i=1}^{M} \alpha_i \, \Phi(X_i) \qquad (8)$$

The equations can be considered as follows

(9)

Defining an $M \times M$ matrix $K$ by $K_{ij} = k(X_i, X_j) = \Phi(X_i) \cdot \Phi(X_j)$ leads to an eigenvalue problem.

The solution to this is

(10)
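The steps from the span expansion to the matrix eigenvalue problem can be sketched as follows. This is the usual textbook kernel PCA derivation, written with the symbols defined above; it is an assumption that the numbered equations in this section take these forms.

```latex
% Assumed standard kernel PCA steps; not taken verbatim from the source.
\begin{align*}
v &= \sum_{i=1}^{M} \alpha_i \, \Phi(X_i)
  && \text{(expansion in the span)} \\
\lambda \, (\Phi(X_k) \cdot v) &= \Phi(X_k) \cdot Cv, \quad k = 1, \ldots, M
  && \text{(projection onto each } \Phi(X_k)\text{)} \\
\lambda \sum_{i=1}^{M} \alpha_i K_{ki}
  &= \frac{1}{M} \sum_{j=1}^{M} K_{kj} \sum_{i=1}^{M} \alpha_i K_{ji}
  && \text{(substituting } v \text{ and } Cv\text{)} \\
M \lambda K \alpha &= K^{2} \alpha
  && \text{(matrix form, } \alpha = (\alpha_1, \ldots, \alpha_M)^{\top}\text{)} \\
M \lambda \alpha &= K \alpha
  && \text{(eigenvalue problem for } \alpha\text{)}
\end{align*}
```

Every solution of the last line also satisfies the line before it, so in practice only the $M \times M$ eigenvalue problem for $K$ needs to be solved, and $\Phi$ never has to be evaluated explicitly.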

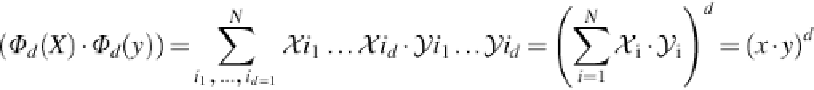

By selecting the kernel properly, various mappings can be achieved. One such mapping takes the $d$-order correlations, known as ARG, between the entries $X_i$ of the input vector $X$. The required computation is prohibitive when $d > 2$.

(11)
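To illustrate why a kernel avoids this cost, the following minimal sketch compares an explicit map onto all ordered $d$-order products with a polynomial kernel of the form $(X \cdot Y)^d$. That this is the kernel intended here is an assumption, and the names `explicit_products` and `poly_kernel` are hypothetical helpers introduced only for this example.

```python
import itertools
import numpy as np


def explicit_products(x, d):
    """Return all ordered d-order products of the entries of x (N**d values)."""
    return np.array([np.prod(c) for c in itertools.product(x, repeat=d)])


def poly_kernel(x, y, d):
    """Assumed polynomial kernel: one dot product raised to the power d."""
    return np.dot(x, y) ** d


x = np.array([1.0, 2.0, 3.0])
y = np.array([0.5, -1.0, 2.0])
d = 3

# Explicit route: build N**d features for each vector, then take the dot product.
explicit = np.dot(explicit_products(x, d), explicit_products(y, d))

# Kernel route: same value without ever forming the N**d features.
implicit = poly_kernel(x, y, d)

print(explicit_products(x, d).size, explicit, implicit)
```

The explicit feature vector has $N^d$ entries, so its size grows quickly with $d$, while the kernel route always costs a single $N$-dimensional dot product.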

Four kernels are commonly used to map the input data into the feature space $F$: linear (polynomial of degree 1), polynomial, Gaussian, and sigmoid. All four are examined in this work, in addition to PCA.
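A minimal NumPy sketch of the four kernels and of kernel PCA built on the matrix $K$ is given below. The parameter names and default values (`degree`, `coef0`, `sigma`, `kappa`, `theta`) and the explicit centering of $K$ are assumptions made for illustration; they are not taken from this work.

```python
import numpy as np


def linear_kernel(X, Y):
    return X @ Y.T


def polynomial_kernel(X, Y, degree=3, coef0=1.0):
    return (X @ Y.T + coef0) ** degree


def gaussian_kernel(X, Y, sigma=1.0):
    # Squared Euclidean distances between all rows of X and all rows of Y.
    sq = (X**2).sum(axis=1)[:, None] + (Y**2).sum(axis=1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-np.maximum(sq, 0.0) / (2.0 * sigma**2))


def sigmoid_kernel(X, Y, kappa=0.01, theta=0.0):
    return np.tanh(kappa * (X @ Y.T) + theta)


def kernel_pca(K, n_components):
    """Project the training samples onto the leading kernel principal components.

    Assumes the n_components largest eigenvalues of the centered K are positive.
    """
    M = K.shape[0]
    one = np.full((M, M), 1.0 / M)
    # Center the data in feature space (assumption: C is built from centered data).
    Kc = K - one @ K - K @ one + one @ K @ one
    w, A = np.linalg.eigh(Kc)                 # eigenvalues in ascending order
    order = np.argsort(w)[::-1][:n_components]
    w, A = w[order], A[:, order]
    A = A / np.sqrt(w)                        # unit-norm eigenvectors v in F
    return Kc @ A, w / M                      # projections and eigenvalues lambda


rng = np.random.default_rng(0)
X = rng.normal(size=(20, 4))                  # synthetic data, for illustration only
kernels = {
    "linear": linear_kernel,
    "polynomial": polynomial_kernel,
    "gaussian": gaussian_kernel,
    "sigmoid": sigmoid_kernel,
}
projections = {name: kernel_pca(k(X, X), 2)[0] for name, k in kernels.items()}
```

With the linear kernel, the projections coincide (up to sign) with ordinary PCA scores, which gives a convenient sanity check before trying the nonlinear kernels.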
