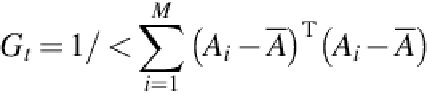

(5)

where $\bar{A}$ is the mean matrix of the input images, and finally we have

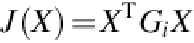

(6)

First, the $n \times n$ matrix $G_t$ is computed from all of the training images. Then the unit vectors $X$ are obtained as the eigenvectors of $G_t$. This stage also determines how many eigenvectors are used to project the data. To do this, the eigenvectors are sorted by their corresponding eigenvalues in descending order, and the subset with the largest eigenvalues is selected. Once $d$ eigenvectors (the optimal projection axes $X_1, X_2, \ldots, X_d$) have been selected, feature extraction and classification proceed as explained in the next section.
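The 2DPCA steps described above can be sketched in NumPy as follows. Because Eqs. (5) and (6) are not reproduced in the text, the code assumes the standard 2DPCA image scatter matrix $G_t = \frac{1}{N}\sum_{i=1}^{N}(A_i-\bar{A})^T(A_i-\bar{A})$ and the projection $Y_i = A_i X$. The function name `two_d_pca` and its return values are illustrative only, not taken from the source.

```python
import numpy as np


def two_d_pca(images, d):
    """Hypothetical 2DPCA sketch.

    images: array of shape (N, m, n) holding the N training images A_i.
    d: number of projection axes to keep.
    Returns (X, eigvals_desc, features):
      X            n x d matrix whose columns are the axes X_1..X_d,
      eigvals_desc all eigenvalues of G_t sorted in descending order,
      features     array (N, m, d) with Y_i = A_i X for each image.
    """
    A = np.asarray(images, dtype=float)
    N = A.shape[0]
    A_bar = A.mean(axis=0)                     # mean matrix of the input images
    centered = A - A_bar
    # Assumed scatter matrix: G_t = (1/N) * sum_i (A_i - A_bar)^T (A_i - A_bar), size n x n
    G_t = np.einsum("kij,kil->jl", centered, centered) / N
    # G_t is symmetric, so eigh gives real eigenvalues (ascending) and orthonormal eigenvectors
    eigvals, eigvecs = np.linalg.eigh(G_t)
    order = np.argsort(eigvals)[::-1]          # rearrange into descending order
    X = eigvecs[:, order[:d]]                  # keep the d eigenvectors with the largest eigenvalues
    features = A @ X                           # Y_i = A_i X, shape (N, m, d)
    return X, eigvals[order], features
```

Since $G_t$ is symmetric, `np.linalg.eigh` is used rather than the general `np.linalg.eig`. It returns eigenvalues in ascending order, which is why the code sorts them explicitly before selecting the top $d$.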

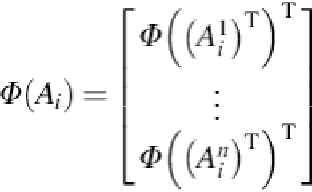

4 Kernel Mapping Along Row and Column Directions

4.1 Two-Dimensional KPCA

The main idea of using a kernel function in PCA is that the data are first mapped into another space by a nonlinear mapping function, and PCA is then performed on the mapped data. 2DPCA is better than 1D PCA in terms of both speed and accuracy, and applying a kernel function to 2DPCA aims to improve the accuracy of the system further. Given $N$ input images, let $A_i$ be the $i$th image, where $i = 1, 2, \ldots, N$, and let $A_i^j$ be the $j$th row of the matrix $A_i$, where $j = 1, 2, \ldots, n$. The nonlinear mapping is defined as follows:

(7)
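The kernel idea can be illustrated with a NumPy sketch, under assumptions, since the mapping of Eq. (7) is not reproduced here. Assume the row-direction mapping sends every row $A_i^j$ to $\phi(A_i^j)$ and that 2DPCA is then carried out on the mapped rows. The feature-space scatter matrix is then a sum of outer products of centered mapped rows, so its leading eigenvectors can be obtained from the Gram matrix of the rows alone (the kernel trick), without ever computing $\phi$ explicitly. The Gaussian kernel, the `gamma` value, the per-row-position centering, and the function names `rbf_kernel` and `row_kernel_2dpca` are all hypothetical choices for illustration.

```python
import numpy as np


def rbf_kernel(P, Q, gamma):
    """Gaussian kernel k(p, q) = exp(-gamma * ||p - q||^2) for every pair of rows of P and Q."""
    sq_dist = (np.sum(P ** 2, axis=1)[:, None]
               + np.sum(Q ** 2, axis=1)[None, :]
               - 2.0 * P @ Q.T)
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))  # clip tiny negative round-off


def row_kernel_2dpca(images, d, gamma=0.1):
    """Hypothetical row-direction kernel 2DPCA sketch.

    images: array (N, m, n); each image A_i contributes its m rows A_i^j.
    Returns (features, alphas, lam): features has shape (N, m, d), alphas
    holds the kernel expansion coefficients, lam the d largest eigenvalues.
    """
    A = np.asarray(images, dtype=float)
    N, m, n = A.shape
    rows = A.reshape(N * m, n)                  # row (i, j) is stored at index i * m + j
    K = rbf_kernel(rows, rows, gamma)           # K[(i,j),(k,p)] = <phi(A_i^j), phi(A_k^p)>
    # Assumed centering: subtract, for each row position j, the mean of phi(A_i^j)
    # over the N images, mirroring the mean-matrix subtraction used in 2DPCA.
    C = np.eye(N * m) - np.kron(np.full((N, N), 1.0 / N), np.eye(m))
    Kc = C @ K @ C
    eigvals, eigvecs = np.linalg.eigh(Kc)
    order = np.argsort(eigvals)[::-1][:d]       # indices of the d largest eigenvalues
    lam = eigvals[order]                        # assumed strictly positive (d below the rank of Kc)
    alphas = eigvecs[:, order] / np.sqrt(lam)   # scale so each feature-space axis has unit norm
    features = Kc @ alphas                      # projections of the centered mapped rows
    return features.reshape(N, m, d), alphas, lam
```

Projecting a new image would additionally require its kernel values against the training rows, centered in the same way; that step is left out to keep the sketch short.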
