Image Processing Reference

In-Depth Information

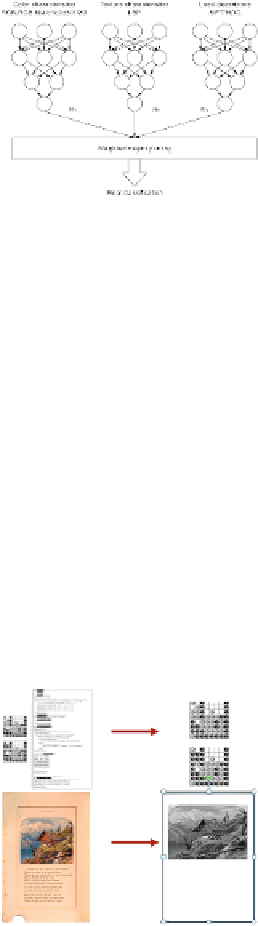

FIGURE 3

The final classification.

Since the aim is to classify document scans as well as regular images, we have also included

an additional document analysis module. Its purpose is to process the scans and extract the

images included in the document, in order to pass them subsequently to the module in charge

of extraction of descriptors and to classify them accordingly. We are not interested in text seg-

mentation, therefore this module will only binarize the document and go through a botom-up

• the document is split in tiles, which are analyzed according to their average intensity and

variance. The decision criteria is that in a particular tile, an image tends to be more uniform

than the text;

• the remaining tiles are clustered through a K-Means algorithm, which uses as a decision

metric the Euclidean distance;

• the clusters are filtered according to their connectivity and scarcity scores in order to elim-

inate tiles containing text areas with different fonts, affected by noise/poor illumination or

by page curvature;

• the final clusters are exposed to a reconstruction stage and merged into a single image,

which is provided as an input to the modules in charge of descriptors extraction/classiica-

tion (

Figure 4

).

FIGURE 4

Document image segmentation results.

All the neural networks have the same structure. The transfer function is sigmoid and the

images in the training set have been split in three groups:

• 60% for training;

• 20% for cross-validation;

• 20% for testing.

In order to validate the neural network progress, we have used gradient checking on the

cost function specified below:

Search WWH ::

Custom Search