Image Processing Reference

(CBPF) is used. CBPF attenuates the details that the human visual system (HVS) is not able to perceive, enhances those that are perceptually relevant, and produces an approximation of the image that the brain's visual cortex perceives. CBPF takes an input image $I$ and decomposes it into a set of wavelet planes $\omega_{s,o}$ of different spatial scales $s$ (i.e., spatial frequency $\nu$) and spatial orientations $o$. It is described as:

$$I = \sum_{s=1}^{n} \sum_{o=v,h,d} \omega_{s,o} + c_n \qquad (2)$$

where $n$ is the number of wavelet planes, $c_n$ is the residual plane, and $o$ is the spatial orientation, either vertical ($v$), horizontal ($h$), or diagonal ($d$). The perceptual image $I_\rho$ is recovered by weighting these $\omega_{s,o}$ wavelet coefficients using the extended Contrast Sensitivity Function (e-CSF), which considers spatial surround information (denoted by $r$), visual frequency ($\nu$, related to spatial frequency by observation distance), and observation distance ($d$). The perceptual image $I_\rho$ can be obtained by

$$I_\rho = \sum_{s=1}^{n} \sum_{o=v,h,d} \alpha(\nu, r)\,\omega_{s,o} + c_n \qquad (3)$$

where $\alpha(\nu, r)$ is the e-CSF weighting function that tries to reproduce some perceptual properties of the HVS. The term $\alpha(\nu, r)\,\omega_{s,o} \equiv \omega_{s,o;\rho,d}$ can be considered the perceptual wavelet coefficients of image $I$ when observed at distance $d$. For details on the CBPF and the $\alpha(\nu, r)$ function, see

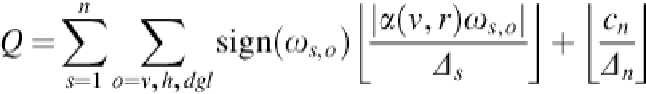
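
The decomposition in Eq. (2) and the weighted recovery in Eq. (3) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the undecimated 3-tap filter bank, the way planes are labeled `h`, `v`, and `d`, the function names `decompose` and `perceptual_image`, and above all the callable `alpha(s, o)`, which is only a hypothetical stand-in returning one scalar weight per plane. The actual e-CSF $\alpha(\nu, r)$ depends on visual frequency and spatial surround and is not reproduced here.

```python
import numpy as np


def lowpass_1d(img, axis, step):
    """Circular [1/4, 1/2, 1/4] smoothing along one axis, taps `step` pixels apart."""
    return (0.25 * np.roll(img, step, axis=axis)
            + 0.5 * img
            + 0.25 * np.roll(img, -step, axis=axis))


def decompose(image, n):
    """Split `image` into n scales of oriented planes plus a residual c_n.

    Illustrative filter bank (an assumption, not the CBPF filters): at each
    scale, c_{s-1} = w_h + w_v + w_d + c_s holds by construction.
    """
    c = np.asarray(image, dtype=float)
    planes = []
    for s in range(1, n + 1):
        step = 2 ** (s - 1)                      # dilate the filter at each scale
        smooth_rows = lowpass_1d(c, axis=1, step=step)
        smooth_cols = lowpass_1d(c, axis=0, step=step)
        c_next = lowpass_1d(smooth_rows, axis=0, step=step)
        w_h = smooth_rows - c_next               # orientation labels are a convention of this sketch
        w_v = smooth_cols - c_next
        w_d = c - (c_next + w_h + w_v)           # whatever detail remains
        planes.append({"h": w_h, "v": w_v, "d": w_d})
        c = c_next
    return planes, c


def perceptual_image(planes, residual, alpha):
    """Weighted sum of the planes plus the residual, in the shape of Eq. (3).

    `alpha(s, o)` is a hypothetical placeholder for the e-CSF weight.
    """
    out = residual.copy()
    for s, plane in enumerate(planes, start=1):
        for o in ("v", "h", "d"):
            out += alpha(s, o) * plane[o]
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    I = rng.random((32, 32))
    planes, c_n = decompose(I, n=3)
    # Unit weights give back the input, as in Eq. (2).
    print(np.allclose(perceptual_image(planes, c_n, lambda s, o: 1.0), I))
```

With unit weights the sum returns the input image up to floating-point rounding, which is the identity in Eq. (2); weights below one attenuate a plane and weights above one enhance it, which is the role the text assigns to the e-CSF.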

We employ the perceptual quantizer (ρSQ), both forward (F-ρSQ) and inverse (I-ρSQ), with a specific interval on the real line. Then, the perceptually quantized coefficients $Q$ (F-ρSQ), from a known viewing distance $d$, are calculated as follows:

(4)
