Biology Reference

In-Depth Information

[Fig. 4.1 labels: Information; Algorithmic Information; Uncertainty-Based Information; Kolmogorov-Chaitin Complexity; Classical Set Theory; Probability Theory; Fuzzy Set Theory; Possibility Theory; Evidence Theory; Kolmogorov-Chaitin Information; Hartley Information; Shannon Information]

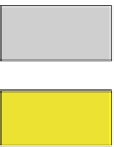

Fig. 4.1 A classification of information based on the quantitative aspect of information (Klir 1993)

The meaning of H is that it reflects the average uncertainty associated with selecting a message from the message source for transmission to the user. When the individual messages all have an equal probability of being selected, H assumes its maximum value, given by Eq. 4.3, which is identical to the Hartley information (see Fig. 4.1):

$$
H = \log_2 n \tag{4.3}
$$
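The claim that H peaks at the Hartley value when all messages are equally likely can be checked numerically. The sketch below assumes the standard Shannon definition H = -Σ p_i log2 p_i, which is not reproduced in this section. The function name `shannon_entropy` and the skewed distribution are hypothetical, chosen only for illustration.

```python
import math


def shannon_entropy(probs):
    """Return H = -sum(p * log2(p)) in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


n = 8
uniform = [1 / n] * n  # all n messages equally likely
skewed = [0.5, 0.2, 0.1, 0.1, 0.05, 0.03, 0.01, 0.01]  # hypothetical unequal source

print(shannon_entropy(uniform))  # 3.0, the same as log2(8)
print(math.log2(n))              # 3.0, the Hartley information of Eq. 4.3
print(shannon_entropy(skewed) < math.log2(n))  # True: unequal probabilities lower H
```

Any departure from equal probabilities lowers H below log2 n. This is why Eq. 4.3 gives the maximum rather than the general value.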

According to Klir (1993), there are two types of information: uncertainty-based information and algorithmic information (see Fig. 4.1). The amount of uncertainty-based information, $I_X$, carried by a messenger, X, can be calculated using Eq. 4.3, leading to the following formula:

$$
I_X = H_{\text{before}} - H_{\text{after}} = \log_2 n - \log_2 n_0 = \log_2 \frac{n}{n_0} \ \text{bits} \tag{4.4}
$$

where $H_{\text{before}}$ and $H_{\text{after}}$ are the Shannon entropies before and after the selection process, respectively, and $n_0$ is the number of messages selected out of the initial $n$.

Evidently, $I_X$ assumes its maximal value, $\log_2 n$, when $n_0 = 1$ or, equivalently, when $H_{\text{after}} = 0$. That is, the maximum is reached when the selected message, X, reduces the uncertainty to zero.
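As a minimal sketch, Eq. 4.4 can be evaluated for a source of n equally likely messages narrowed down to n0 candidates. Equal probabilities are assumed both before and after selection, which is the case the log2 form of Eq. 4.4 describes. The function names `hartley_entropy` and `uncertainty_based_information` are hypothetical.

```python
import math


def hartley_entropy(k):
    """Uncertainty in bits of k equally likely candidate messages (Eq. 4.3)."""
    return math.log2(k)


def uncertainty_based_information(n, n0):
    """I_X = H_before - H_after = log2(n) - log2(n0) = log2(n / n0), as in Eq. 4.4."""
    if not 1 <= n0 <= n:
        raise ValueError("need 1 <= n0 <= n")
    return hartley_entropy(n) - hartley_entropy(n0)


n = 16
for n0 in (16, 8, 4, 2, 1):
    # Fewer remaining candidates means less uncertainty left after selection.
    print(f"n0={n0}: I_X = {uncertainty_based_information(n, n0)} bits")
```

For n = 16 the loop reports 0.0, 1.0, 2.0, 3.0 and 4.0 bits. The maximum of log2 16 = 4 bits is reached only when n0 = 1, that is, when the uncertainty remaining after selection is zero.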

It is possible to view Shannon entropy, H, as characterizing a property of the sender (i.e., the message source), while Shannon information, I, characterizes the amount of information received by the user (Seife 2006). If no information is lost during transmission through the communication channel, H and I are quantitatively identical. If, on the other hand, the channel is noisy so that some information is lost in its passage through the channel, I is less than H. Also, according to Eq. 4.4, the information $I_X$ and the Shannon entropy of the message source, $H_{\text{before}}$, become numerically identical when $H_{\text{after}}$ is zero (i.e., when the number of messages selected, $n_0$, is 1).

It is for this reason that the terms “information” and “Shannon entropy” are almost