

the class variable. PCA and KPCA using both the polynomial kernel and the Gaussian kernel are carried out on all of the input measurements. In Figs. .-., the circles and dots denote positive and negative samples, respectively. Figure . shows the data scatter projected onto the subspace spanned by the first three principal components produced by the classical PCA. Similarly, Fig. . shows plots of data scatter projected onto the subspace spanned by the first three principal components obtained by KPCA using a polynomial kernel of degree and scale parameter . Figures .a-c are pictures of projections onto the principal component space produced by Gaussian kernels with σ = , and , respectively. If we compare Fig. . (obtained using PCA) with the other plots, it is clear that KPCA provides some extra information about the data that cannot be obtained through classical PCA.
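The comparison described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the procedure used to produce the figures: the toy data, the value of `sigma`, the polynomial `degree` and `scale`, and the kernel parameterizations `exp(-||x - y||^2 / (2 sigma^2))` and `(scale * <x, y> + 1)^degree` are all assumptions, since the parameter values and kernel formulas are not given in this passage.

```python
import numpy as np

def gaussian_kernel(X, sigma):
    # Assumed form: k(x, y) = exp(-||x - y||^2 / (2 * sigma^2)).
    sq = np.sum(X**2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    return np.exp(-d2 / (2.0 * sigma**2))

def polynomial_kernel(X, degree, scale):
    # Assumed form: k(x, y) = (scale * <x, y> + 1)^degree.
    return (scale * (X @ X.T) + 1.0) ** degree

def kernel_pca_scores(K, n_components=3):
    # Center the kernel matrix in feature space: Kc = H K H.
    n = K.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n
    Kc = H @ K @ H
    # eigh returns eigenvalues in ascending order; keep the largest ones.
    eigvals, eigvecs = np.linalg.eigh(Kc)
    idx = np.argsort(eigvals)[::-1][:n_components]
    lam = np.maximum(eigvals[idx], 0.0)
    # Projection of training point i onto component k is sqrt(lam_k) * v_k[i].
    return eigvecs[:, idx] * np.sqrt(lam)

def pca_scores(X, n_components=3):
    # Classical PCA scores via the SVD of the column-centered data.
    Xc = X - X.mean(axis=0)
    U, s, _ = np.linalg.svd(Xc, full_matrices=False)
    return U[:, :n_components] * s[:n_components]

# Hypothetical two-class toy data: an inner ball (+1) and an outer shell (-1).
rng = np.random.default_rng(0)
directions = rng.normal(size=(200, 3))
directions /= np.linalg.norm(directions, axis=1, keepdims=True)
radii = np.concatenate([rng.uniform(0.0, 1.0, 100), rng.uniform(2.0, 3.0, 100)])
X = directions * radii[:, None]
labels = np.repeat([1, -1], 100)

# First three principal components under each method (parameters are illustrative).
pca_3d = pca_scores(X, 3)
kpca_poly = kernel_pca_scores(polynomial_kernel(X, degree=2, scale=1.0), 3)
kpca_gauss = kernel_pca_scores(gaussian_kernel(X, sigma=1.0), 3)
```

Plotting the three columns of each score matrix as a 3D scatter, with marker style chosen by `labels`, gives plots of the kind described in the text. With a linear kernel `K = X @ X.T`, `kernel_pca_scores` reproduces the classical PCA scores up to the sign of each component, which is a convenient sanity check.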

Example 7

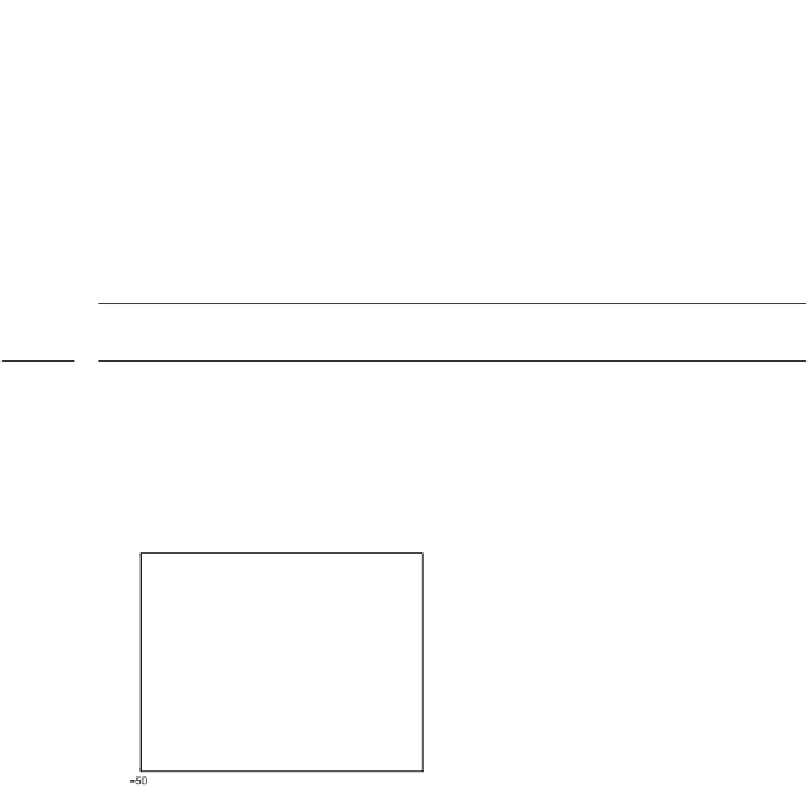

In the third series (Fig. .), we apply PCA and KPCA to the “image segmentation” data set (also from the UCI Machine Learning data archives), which consists of data points (each with attributes) that are classified into seven classes. KPCA with the RBF gives better class separation than PCA, as can be seen in (a ) & (b ).

When all seven classes are plotted in one graph, it is hard to determine the effect of


Figure . . Results from applying PCA and KPCA to the “image segmentation” data set. A limited number of outliers have been omitted in the figures.