
But for applications where uptime is critical, you'll want a replica set consisting of three complete replicas. What does the extra replica buy you? Think of the scenario where a single node fails completely. You still have two first-class nodes available while you restore the third. As long as the third node is online and recovering (which may take hours), the replica set can still fail over automatically to an up-to-date node.
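To make this concrete, here is a small JavaScript sketch that models the moment the primary fails while another member is still recovering. It compares three full replicas against two data-bearing nodes plus an arbiter, a lower-cost layout used here only as an assumed point of comparison. The function name, the member fields (`kind`, `state`), and the one-vote-per-member rule are simplifying assumptions for illustration, not MongoDB APIs.

```javascript
// Hypothetical model for illustration only (not a MongoDB API).
// kind:  "data" members hold a full copy of the data; "arbiter" members only vote.
// state: "up" = online and caught up, "recovering" = online but still syncing,
//        "down" = unreachable. Every member is assumed to have one vote.
function canFailOverToUpToDateNode(members) {
  const majority = Math.floor(members.length / 2) + 1;
  // Online members, including recovering ones, count toward the majority.
  const online = members.filter((m) => m.state !== "down");
  // A good new primary must hold data and be caught up.
  const candidates = online.filter(
    (m) => m.kind === "data" && m.state === "up"
  );
  return online.length >= majority && candidates.length > 0;
}

// Scenario: one node is recovering after a failure, and then the primary fails.
const threeReplicas = [
  { name: "n1", kind: "data", state: "down" }, // failed primary
  { name: "n2", kind: "data", state: "up" },
  { name: "n3", kind: "data", state: "recovering" },
];
const twoReplicasPlusArbiter = [
  { name: "n1", kind: "data", state: "down" }, // failed primary
  { name: "n2", kind: "data", state: "recovering" },
  { name: "arb", kind: "arbiter", state: "up" },
];

console.log(canFailOverToUpToDateNode(threeReplicas)); // true
console.log(canFailOverToUpToDateNode(twoReplicasPlusArbiter)); // false
```

The model captures only the two conditions the paragraph relies on: a majority of members must still be reachable, and at least one caught-up, data-bearing member must be among them. Real elections also take member priorities and replication progress into account.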

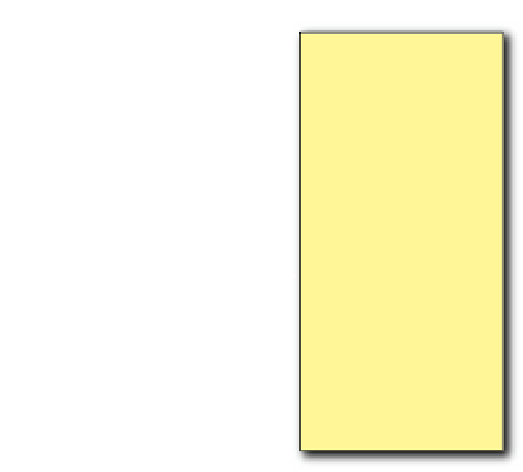

Some applications will require the redundancy afforded by two data centers, and the three-member replica set can also work in this case. The trick is to use one of the data centers for disaster recovery only. Figure 8.2 shows an example of this. Here, the primary data center houses a replica set primary and secondary, and a backup data center keeps the remaining secondary as a passive node (with priority 0).
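The layout in figure 8.2 can be written as a replica set configuration document. The sketch below does that and adds a small check of which losses still allow automatic failover, mirroring the claims in the next paragraph. The set name `myapp`, the host names, and the helper `canFailOverAutomatically` are hypothetical; the `_id`, `members`, `host`, `priority`, and `votes` fields follow MongoDB's replica set configuration format, where omitted priorities and votes default to 1.

```javascript
// Hypothetical replica set name and host names, for illustration only.
const config = {
  _id: "myapp",
  members: [
    // Data center A: either member can be elected primary.
    { _id: 0, host: "a1.example.net:27017" },
    { _id: 1, host: "a2.example.net:27017" },
    // Data center B: passive secondary kept for disaster recovery.
    // Priority 0 means it replicates and votes but is never elected primary.
    { _id: 2, host: "b1.example.net:27017", priority: 0 },
  ],
};

// Members default to priority 1 and one vote when the fields are omitted.
const priorityOf = (m) => (m.priority === undefined ? 1 : m.priority);
const votesOf = (m) => (m.votes === undefined ? 1 : m.votes);

// Hypothetical helper: can the survivors elect a primary after `downHosts` are lost?
function canFailOverAutomatically(cfg, downHosts) {
  const totalVotes = cfg.members.reduce((sum, m) => sum + votesOf(m), 0);
  const survivors = cfg.members.filter((m) => !downHosts.includes(m.host));
  const survivingVotes = survivors.reduce((sum, m) => sum + votesOf(m), 0);
  // A strict majority of votes is required, plus at least one electable member.
  const hasElectable = survivors.some((m) => priorityOf(m) > 0);
  return survivingVotes > totalVotes / 2 && hasElectable;
}

console.log(canFailOverAutomatically(config, ["a1.example.net:27017"])); // true
console.log(canFailOverAutomatically(config, ["b1.example.net:27017"])); // true
console.log(
  canFailOverAutomatically(config, ["a1.example.net:27017", "a2.example.net:27017"])
); // false
```

In the mongo shell, a document like `config` would be passed to `rs.initiate(config)` on one member started with `--replSet myapp`.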

In this configuration, the replica set primary will always be one of the two nodes living in data center A. You can lose any one node or any one data center and still keep the application online. Failover will usually be automatic, except in the cases where both of A's nodes are lost. Because it's rare to lose two nodes at once, this would likely represent the complete failure or partitioning of data center A. To recover quickly, you could shut down the member in data center B and restart it without the --replSet flag. Alternatively, you could start two new nodes in data center B and then force a replica set reconfiguration. You're not supposed to reconfigure a replica set when a majority of nodes is unreachable, but you can do so in emergencies using the force option. For example, if you've defined a new configuration document, config, then you can force reconfiguration like so:

> rs.reconfig(config, {force: true})
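One way to build that new configuration document is sketched below in plain JavaScript. It keeps the surviving member in data center B, appends two newly started nodes, and raises the survivor's priority from 0, since a priority 0 member can never be elected primary. The helper `forcedConfig`, the set name, and all host names are hypothetical assumptions, not part of the mongo shell.

```javascript
// Hypothetical starting configuration (same layout as figure 8.2).
const config = {
  _id: "myapp",
  members: [
    { _id: 0, host: "a1.example.net:27017" },
    { _id: 1, host: "a2.example.net:27017" },
    { _id: 2, host: "b1.example.net:27017", priority: 0 },
  ],
};

// Hypothetical helper, not part of the mongo shell: build a configuration that
// keeps only the surviving members and appends newly started ones.
function forcedConfig(cfg, survivingHosts, newHosts = []) {
  const survivors = cfg.members
    .filter((m) => survivingHosts.includes(m.host))
    // A priority 0 member can never be elected, so make it electable.
    .map((m) => (m.priority === 0 ? Object.assign({}, m, { priority: 1 }) : m));
  // New members need _id values that no existing member uses.
  let nextId = Math.max(...cfg.members.map((m) => m._id)) + 1;
  const added = newHosts.map((host) => ({ _id: nextId++, host }));
  // Copy the document so the original configuration is left untouched.
  return Object.assign({}, cfg, { members: survivors.concat(added) });
}

const newConfig = forcedConfig(
  config,
  ["b1.example.net:27017"],
  ["b2.example.net:27017", "b3.example.net:27017"]
);
console.log(JSON.stringify(newConfig.members));
```

On the surviving member in data center B, with the new nodes already started using the same --replSet name, you might then run something like the following, where rs.conf() returns the current configuration document:

> rs.reconfig(forcedConfig(rs.conf(), ["b1.example.net:27017"], ["b2.example.net:27017", "b3.example.net:27017"]), {force: true})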

As with any production system, testing is key. Make sure that you test for all the typical

failover and recovery scenarios in a staging environment comparable to what you'll be

Figure 8.2 A three-node replica set with members in two data centers (primary data center: primary and secondary; secondary data center: secondary with priority = 0)