Information Technology Reference


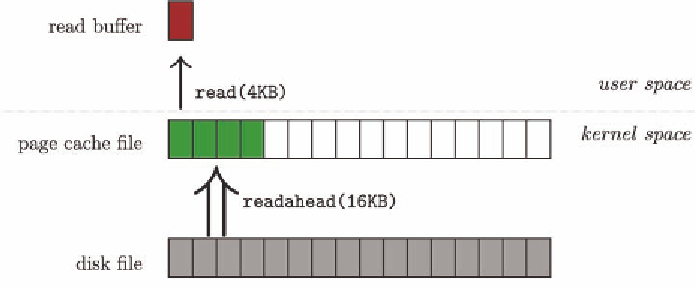

Figure 3. Page cache oriented read and readahead: when an empty page cache file is asked for the first 4KB data, a 16KB readahead I/O will be triggered

application provided I/O hints. Linux provides a per-device tunable max_readahead parameter that can be queried and modified with the blockdev command. It currently defaults to 128KB for hard disks and may be increased in the future. To better parallelize I/O for disk arrays, it defaults to 2*stripe_width for software RAID.
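The following is a minimal sketch of how these values relate, not a definitive tool. It assumes that blockdev reports readahead in 512-byte sectors (as its --getra option does) and that the same setting is exposed in kilobytes through the sysfs file /sys/block/<device>/queue/read_ahead_kb. The helper names are hypothetical.

```python
SECTOR_BYTES = 512  # blockdev --getra / --setra count in 512-byte sectors


def sectors_to_kb(sectors):
    """Convert a readahead size in 512-byte sectors to kilobytes."""
    return sectors * SECTOR_BYTES // 1024


def kb_to_sectors(kb):
    """Convert a readahead size in kilobytes to 512-byte sectors."""
    return kb * 1024 // SECTOR_BYTES


def read_ahead_kb(device):
    """Return the readahead size of a block device (e.g. 'sda') in KB.

    Reads the sysfs attribute; returns None when the device or the
    attribute is not available on this system.
    """
    path = f"/sys/block/{device}/queue/read_ahead_kb"
    try:
        with open(path) as f:
            return int(f.read().strip())
    except (OSError, ValueError):
        return None


def software_raid_default_kb(stripe_width_kb):
    """Default described in the text for software RAID: 2 * stripe_width."""
    return 2 * stripe_width_kb
```

Under these assumptions, the 128KB hard disk default corresponds to a blockdev value of 256 sectors.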

Linux also provides the madvise() and posix_fadvise() system calls and the non-portable readahead() system call. The first two allow applications to declare their future access pattern as normal, random or sequential, which sets the read-around and read-ahead policies to default, disabled or aggressive, respectively. The APIs also make application-controlled prefetching possible by allowing the application to specify exactly when and where to do readahead. MySQL is a good example of an application that uses this facility when carrying out its random queries.
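As an illustration of the hint interface, the sketch below uses Python's os.posix_fadvise() wrapper, which is available on Linux. The function names read_with_hint, prefetch_range and demo are hypothetical, and the code only issues the hints; what the kernel does with them is up to the readahead implementation.

```python
import os
import tempfile


def read_with_hint(path, advice, chunk_size=4096):
    """Declare an access pattern for the whole file, then read it.

    Returns the number of bytes read.
    """
    total = 0
    fd = os.open(path, os.O_RDONLY)
    try:
        # offset=0 with length=0 applies the advice up to the end of the file.
        os.posix_fadvise(fd, 0, 0, advice)
        while True:
            data = os.read(fd, chunk_size)
            if not data:
                break
            total += len(data)
    finally:
        os.close(fd)
    return total


def prefetch_range(fd, offset, length):
    """Ask the kernel to start reading a byte range into the page cache."""
    os.posix_fadvise(fd, offset, length, os.POSIX_FADV_WILLNEED)


def demo():
    """Create a 64KB scratch file and read it under two different hints."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"x" * 65536)
        path = tmp.name
    try:
        sequential = read_with_hint(path, os.POSIX_FADV_SEQUENTIAL)
        random_access = read_with_hint(path, os.POSIX_FADV_RANDOM)
        fd = os.open(path, os.O_RDONLY)
        try:
            prefetch_range(fd, 0, 16384)  # application-controlled prefetch
        finally:
            os.close(fd)
    finally:
        os.unlink(path)
    return sequential, random_access
```

The hints change only how the kernel prefetches; the data returned by the reads is the same in every case.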

This layer of indirection enables the kernel to reshape “silly” I/O requests from applications: a huge sendfile(1GB) request will be served in smaller, max_readahead-sized I/O chunks, while a sequence of tiny 1KB reads will be aggregated into readahead I/Os of up to max_readahead in size.
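The arithmetic behind this reshaping can be sketched as follows. This is a simplified model rather than the kernel's algorithm: it assumes a max_readahead of 128KB and assumes that small sequential reads are always batched into full-sized readahead I/Os, ignoring how the window grows at the start of a stream. Both function names are hypothetical.

```python
MAX_READAHEAD = 128 * 1024  # assumed: the 128KB hard disk default


def split_large_request(offset, length, max_ra=MAX_READAHEAD):
    """Split one large request into (offset, size) chunks of at most max_ra."""
    chunks = []
    end = offset + length
    while offset < end:
        size = min(max_ra, end - offset)
        chunks.append((offset, size))
        offset += size
    return chunks


def aggregated_io_count(read_size, count, max_ra=MAX_READAHEAD):
    """Number of device I/Os for `count` sequential reads of `read_size`
    bytes, assuming every readahead I/O is a full max_ra in size."""
    total = read_size * count
    return -(-total // max_ra)  # ceiling division
```

In this model a 1GB request becomes 8192 chunks of 128KB, and 1024 sequential 1KB reads are served by 8 device I/Os instead of 1024.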

The readahead algorithm does not manage a standalone readahead buffer. Prefetched pages are put into the page cache together with cached pages. In general, it also does not manage the lifetime of prefetched pages in the LRU queues. Every prefetched page is inserted not only into the per-file, radix tree based page cache for ease of reference, but also into one of the system-wide LRU queues managed by the page replacement algorithm.
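The double bookkeeping described above can be sketched with a toy model. It is an illustration under loud assumptions: a plain dictionary stands in for the per-file radix tree, a single OrderedDict stands in for the system-wide LRU queues, and the class name ToyPageCache is hypothetical. It is not how the kernel implements either structure.

```python
from collections import OrderedDict


class ToyPageCache:
    """Pages are indexed per file and also queued in one shared LRU."""

    def __init__(self, capacity_pages):
        if capacity_pages < 1:
            raise ValueError("capacity_pages must be at least 1")
        self.capacity = capacity_pages
        self.per_file = {}        # file id -> {page index: data}
        self.lru = OrderedDict()  # (file id, page index), oldest first

    def insert(self, file_id, index, data):
        """Insert a page, prefetched or not, into both structures."""
        key = (file_id, index)
        if key in self.lru:
            self.lru.move_to_end(key)
        else:
            while len(self.lru) >= self.capacity:
                self._evict_oldest()
            self.lru[key] = None
        self.per_file.setdefault(file_id, {})[index] = data

    def _evict_oldest(self):
        """Evict the least recently used page from both structures."""
        (file_id, index), _ = self.lru.popitem(last=False)
        del self.per_file[file_id][index]
        if not self.per_file[file_id]:
            del self.per_file[file_id]

    def lookup(self, file_id, index):
        """Return the cached page or None; a hit refreshes its LRU position."""
        pages = self.per_file.get(file_id, {})
        if index not in pages:
            return None
        self.lru.move_to_end((file_id, index))
        return pages[index]
```

Because prefetched pages share the queue with every other cached page, a page that was read ahead but not yet referenced can be pushed out by later insertions, for example when four prefetched pages of one file are followed by two insertions for another file in a four-page cache.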

This design is simple and elegant in general; however, when memory pressure is high, memory will thrash (Wiseman, 2009; Jiang, 2009) and the interactions between the prefetching and caching algorithms become visible. On the one hand, readahead blurs the correlation between a page's position in the LRU queue and its first reference time. The page replacement algorithm relies on this correlation to do proper page aging and eviction. On the other hand, in a memory hungry system, the page replacement algorithm may evict readahead pages before they are accessed by the application, leading to reada-

The Page Cache

Figure 3 shows how Linux transforms a regular read() system call into an internal readahead request. Here the page cache plays a central role: user space data consumers issue read() calls, which transfer data from the page cache, while the in-kernel readahead routine populates the page cache with data from the storage device. The read requests are thus decoupled from real disk I/Os.
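The scenario of Figure 3 can be replayed with a small model. This is a sketch under simplifying assumptions: pages are 4KB, every cache miss triggers a fixed 16KB (four page) readahead, and the window never grows, which is not how the real algorithm sizes its requests. The class ToyFile and its attributes are hypothetical.

```python
PAGE_SIZE = 4096
READAHEAD_PAGES = 4  # 16KB, the readahead size shown in Figure 3


class ToyFile:
    """read() copies from the page cache; misses trigger readahead I/O."""

    def __init__(self, data):
        self.data = data     # stands in for the storage device
        self.cached = {}     # page index -> page contents
        self.disk_ios = []   # (first page, page count) per device I/O

    def _readahead(self, first_page, npages):
        """Populate the page cache with up to npages pages from 'disk'."""
        total_pages = (len(self.data) + PAGE_SIZE - 1) // PAGE_SIZE
        npages = min(npages, total_pages - first_page)
        if npages <= 0:
            return
        self.disk_ios.append((first_page, npages))
        for page in range(first_page, first_page + npages):
            start = page * PAGE_SIZE
            self.cached[page] = self.data[start:start + PAGE_SIZE]

    def read(self, offset, length):
        """Serve a read from the page cache, filling it on a miss."""
        out = bytearray()
        end = min(offset + length, len(self.data))
        pos = offset
        while pos < end:
            page = pos // PAGE_SIZE
            if page not in self.cached:
                self._readahead(page, READAHEAD_PAGES)
            start = pos % PAGE_SIZE
            take = min(PAGE_SIZE - start, end - pos)
            out += self.cached[page][start:start + take]
            pos += take
        return bytes(out)
```

In this model the first 4KB read of an uncached file issues one 16KB device I/O, and the next three 4KB reads are served from the page cache without touching the device.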
