that queries memory must endure two wire-propagation delays: one for the address to travel to the memory, and a second for the addressed data to be returned. The time required for these two propagations, plus the time required for the memory circuit itself to be queried, is referred to as memory latency.[11] Together, latency and bandwidth are the two most important memory-related constraints that system implementors must contend with.
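The definition of memory latency above can be written as a simple sum. The following is a minimal sketch; the function name, parameter names, and the nanosecond figures are illustrative assumptions and are not taken from the text.

```python
def memory_latency_ns(address_delay_ns: float,
                      access_time_ns: float,
                      data_delay_ns: float) -> float:
    """Return total read latency as the sum of its three components.

    address_delay_ns: wire-propagation time for the address to reach the memory
    access_time_ns:   time for the memory circuit itself to be queried
    data_delay_ns:    wire-propagation time for the data to travel back
    """
    return address_delay_ns + access_time_ns + data_delay_ns


# Hypothetical values, chosen only to illustrate the arithmetic.
example_latency = memory_latency_ns(address_delay_ns=1.5,
                                    access_time_ns=12.0,
                                    data_delay_ns=1.5)
print(example_latency)
```

Shortening the wires reduces only the two propagation terms; the access time of the memory circuit is unaffected.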

With this somewhat theoretical background in hand, let's consider the important practical example of dynamic random-access memory (DRAM). DRAM is important because its combination of tremendous storage capacity (in 2009 individual DRAM chips stored four billion bits) and high read-write performance makes it the best choice for most large-scale computer memory systems. For example, both the DDR3-based CPU memory and the GDDR3-based GPU memory in the PC block diagram of Figure 38.1 are implemented with DRAM technology.

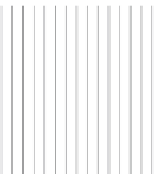

Because modern integrated circuit technology is a planar technology, DRAM is organized as a 2D array of 1-bit memory cells (see Figure 38.8). Perhaps surprisingly, DRAM cells cannot be read or written individually. Instead, individual bit operations specified at the interface of the DRAM are implemented internally as operations on blocks of memory cells. (Each row in the memory array in Figure 38.8 is a single block.) When a bit is read, for example, all the bits in the block that contains the specified bit are transferred to a block buffer at the edge of the 2D array. Then the specified bit is taken from the block buffer and delivered to the requesting circuit. Writing a bit is a three-step process: All the bits in the specified block are transferred from the array to the block buffer; the specified bit in the block buffer is changed to its new value; then the contents of the (modified) block buffer are written back to the memory array.
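The block-level read and write steps described above can be sketched as a toy model. This is an illustration under stated assumptions, not a description of any real DRAM interface: the class name `SimpleDram`, the row-major mapping from a bit address to (row, column), and the transfer counters are all hypothetical. The default 16 x 16 size follows the simplified array of Figure 38.8.

```python
class SimpleDram:
    """Toy DRAM: a 2D array of 1-bit cells accessed one block (row) at a time."""

    def __init__(self, rows: int = 16, bits_per_row: int = 16) -> None:
        self.bits_per_row = bits_per_row
        self.array = [[0] * bits_per_row for _ in range(rows)]
        self.block_buffer = [0] * bits_per_row
        # Counters added for illustration only.
        self.array_to_buffer_transfers = 0
        self.buffer_to_array_transfers = 0

    def _load_block(self, row: int) -> None:
        # Transfer every bit of the row into the block buffer.
        self.block_buffer = list(self.array[row])
        self.array_to_buffer_transfers += 1

    def _store_block(self, row: int) -> None:
        # Write the whole block buffer back into the array.
        self.array[row] = list(self.block_buffer)
        self.buffer_to_array_transfers += 1

    def read_bit(self, address: int) -> int:
        # Assumed row-major addressing: address = row * bits_per_row + column.
        row, column = divmod(address, self.bits_per_row)
        self._load_block(row)
        return self.block_buffer[column]

    def write_bit(self, address: int, value: int) -> None:
        row, column = divmod(address, self.bits_per_row)
        self._load_block(row)               # step 1: array -> block buffer
        self.block_buffer[column] = value   # step 2: modify one bit in the buffer
        self._store_block(row)              # step 3: block buffer -> array


dram = SimpleDram()
dram.write_bit(37, 1)
print(dram.read_bit(37))
```

In this model, writing a single bit moves a full 16-bit block in each direction, which is why the number of block transfers, rather than the number of bits requested, is the quantity worth tracking.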

Figure 38.8: Block diagram of a simplified GDDR3 memory circuit. For increased clarity, the true storage capacity (one billion bits) is reduced to 256 bits, implemented as an array of sixteen 16-bit blocks (a.k.a. rows). The red arrows arriving at the left edges of blocks indicate control paths, while the blue ones meeting the tops and bottoms of blocks are data paths. (Diagram labels: Interface, Bit selector, Block buffer (1 of 4), Memory array.)

While early DRAMs hid this complexity behind a simple interface protocol, modern DRAMs expose their internal resources and operations.[12] The GDDR3 DRAM used by the GeForce 9800 GTX, for example, implements four separate block buffers, each of which can be loaded from the memory array, modified, and written back to the array as individually specified, and in some cases concurrent, operations. Thus, the circuit connected to the GDDR3 DRAM does more than just read or write bits; it manages the memory as a complex, optimizable subsystem. Indeed, the memory control circuits of modern GPUs are large, carefully engineered subsystems that contribute greatly to overall system performance.

But what performance is optimized? In practice, the DRAM memory controller cannot simultaneously maximize bandwidth and minimize latency. For example, sorting requests so that operations affecting the same block can be aggregated minimizes transfers between the block buffers and the array, thereby optimizing bandwidth at some expense to latency. Because the performance of modern GPUs is frequently limited by the available memory bandwidth, their optimization is skewed toward bandwidth. The result is that total memory latency (through the memory controller, to the DRAM, within the DRAM, back to the GPU, and again through the memory controller) can and does reach hundreds of computation cycles. This observation is our second hardware principle.
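The bandwidth-versus-latency trade-off of request sorting can be illustrated with a short sketch. It assumes a single block buffer that is reloaded only when the requested block changes, 16-bit blocks as in the simplified Figure 38.8, and a stable sort by block index as the aggregation policy. The function names and the request stream are hypothetical, and a real memory controller is far more elaborate.

```python
from typing import List, Sequence, Tuple

BITS_PER_BLOCK = 16  # matches the simplified array of Figure 38.8


def count_block_loads(addresses: Sequence[int]) -> int:
    """Count array-to-buffer transfers when the single block buffer
    is reloaded only if the requested block differs from the loaded one."""
    loads = 0
    loaded_block = None
    for address in addresses:
        block = address // BITS_PER_BLOCK
        if block != loaded_block:
            loads += 1
            loaded_block = block
    return loads


def aggregate_by_block(addresses: Sequence[int]) -> List[Tuple[int, int]]:
    """Return (arrival_slot, address) pairs grouped by block.
    Python's sort is stable, so arrival order is kept within each block."""
    indexed = list(enumerate(addresses))
    indexed.sort(key=lambda pair: pair[1] // BITS_PER_BLOCK)
    return indexed


def max_slots_delayed(schedule: Sequence[Tuple[int, int]]) -> int:
    """Largest number of service slots by which any request was pushed back."""
    return max(service_slot - arrival_slot
               for service_slot, (arrival_slot, _) in enumerate(schedule))


# Hypothetical request stream that alternates between block 0 and block 1.
requests = [0, 17, 1, 18, 2, 19]
schedule = aggregate_by_block(requests)
reordered = [address for _, address in schedule]

print(count_block_loads(requests))    # block loads in arrival order
print(count_block_loads(reordered))   # block loads after aggregation
print(max_slots_delayed(schedule))    # worst-case delay added by reordering
```

For this stream, aggregation cuts the block loads from six to two, while the request for address 17 is served two slots later than it arrived: fewer transfers, at some cost in latency for individual requests.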

11. Note that latency affects only memory reads. It can be hidden during writes with pipeline parallelism, as discussed in Section 38.4.

12. Using the terminology of this chapter, DRAM architecture has evolved to more closely match DRAM implementation.