Block diagram labels: Application; GPU chip; PCIe interface; Vertex generation; Primitive generation; Fragment generation; Work queuing and distribution; 16 cores (one shown expanded: instruction unit, eight DP/ALU pairs, two SFUs, local memory); eight TU/L1$ pairs; Interconnection network; four Pixel ops/L2$ pairs; four GDDR3 memory blocks.

Legend: DP: data path. ALU: fp add and mult. SFU: special functions. TU: texture unit. L1$: 1st level cache. L2$: 2nd level cache.

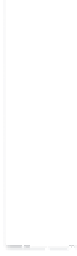

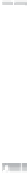

Figure 38.4: NVIDIA GeForce 9800 GTX block diagram.

Now we turn our attention to an implementation of the graphics pipeline architecture: NVIDIA's GeForce 9800 GTX. Figure 38.4 is a block-diagram depiction of this implementation. Block names have been chosen to best illustrate the correspondence to the blocks on the pipeline block diagram shown in Figure 38.3. There is a one-to-one correspondence between the vertex, primitive, and fragment generation stages in the pipeline and the identically named blocks in the implementation diagram. Conversely, all three programmable stages in the pipeline correspond to the aggregation of 16 cores, eight texture units (TUs), and the block titled “Work queuing and distribution.” This highly parallel, application-programmable complex of computing cores and fixed-function hardware is central to the GTX implementation, and we'll have much more to say about it. Completing the correspondence, the pixel operation stage of the pipeline corresponds to the four pixel ops blocks in the implementation diagram, and the large memory block adjacent to the pipeline corresponds to the aggregation of the eight L1$, four L2$, and four GDDR3 memory blocks in the implementation. The PCIe interface and interconnection network implementation blocks represent a significant mechanism that has no counterpart in the architectural diagram. A few other significant blocks, such as display refresh (regularly transferring pixels from the output image to the display monitor) and memory controller logic (a complex and highly optimized circuit), have been omitted from the implementation diagram.
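The correspondence just described can be written down as a small lookup table. The following Python sketch is only an illustration: the dictionary and function names are made up for this example, the three programmable stages are grouped under one key because the text does not name them here, and the per-core counts (eight DP/ALU pairs and two SFUs) are an assumption read off the expanded core in Figure 38.4.

```python
# Illustrative mapping from pipeline stages (Figure 38.3) to implementation
# blocks (Figure 38.4). All identifiers are hypothetical, chosen for this sketch.

# Hardware shared by all three programmable pipeline stages.
PROGRAMMABLE_COMPLEX = {
    "Core": 16,
    "TU": 8,
    "Work queuing and distribution": 1,
}

# Pipeline stage -> {implementation block name: number of blocks}.
STAGE_TO_BLOCKS = {
    "vertex generation": {"Vertex generation": 1},
    "primitive generation": {"Primitive generation": 1},
    "fragment generation": {"Fragment generation": 1},
    "three programmable stages": PROGRAMMABLE_COMPLEX,
    "pixel operations": {"Pixel ops": 4},
    "memory": {"L1$": 8, "L2$": 4, "GDDR3 memory": 4},
}

# Implementation blocks with no counterpart in the pipeline diagram.
IMPLEMENTATION_ONLY = ["PCIe interface", "Interconnection network"]

# Assumption: counts taken from the single expanded core in the figure.
ALUS_PER_CORE = 8
SFUS_PER_CORE = 2


def block_count(stage):
    """Total number of implementation blocks that realize one pipeline stage."""
    return sum(STAGE_TO_BLOCKS[stage].values())


def total_alus():
    """ALUs across all cores, under the per-core assumption above."""
    return PROGRAMMABLE_COMPLEX["Core"] * ALUS_PER_CORE


def total_sfus():
    """Special function units across all cores, under the same assumption."""
    return PROGRAMMABLE_COMPLEX["Core"] * SFUS_PER_CORE


if __name__ == "__main__":
    for stage, blocks in STAGE_TO_BLOCKS.items():
        parts = ", ".join(f"{count} x {name}" for name, count in blocks.items())
        print(f"{stage}: {parts}")
    print("no pipeline counterpart:", ", ".join(IMPLEMENTATION_ONLY))
    print("ALUs in total:", total_alus())
    print("SFUs in total:", total_sfus())
```

Under these assumptions the table makes the asymmetry easy to see: each fixed-function generation stage maps to a single block, while the programmable stages share one pool of 16 cores (128 ALUs in total) and the single memory block of the pipeline diagram is spread over 16 cache and memory blocks.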

As we have seen, several decades of exponential increase in transistor count and performance have given computer system designers ample resources to design and build high-performance systems. Fundamentally, computing involves performing operations on data. Parallelism, the organization and orchestration of simultaneous operation, is the key to achieving high-performance operation. It is the