Graphics Reference


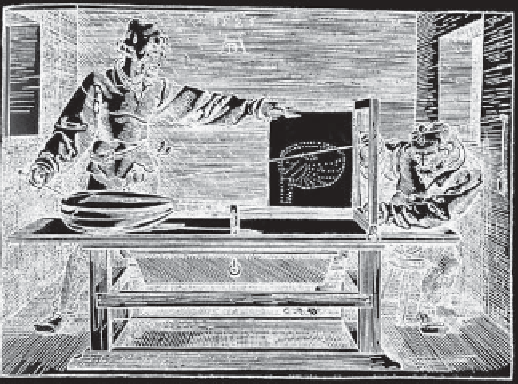

Figure 36.11: Two people using an early “rendering engine” to make a picture of a lute.

produced by marking intersections of that string with the image plane. But, as we

noted in Chapter 3, this apparatus can in fact measure more than just the image.

Consider what would happen if the artist were to annotate each point he marked

on the image plane with the length of string between the plumb bob and the pulley

corresponding to that point. He would then record a depth buffer for the scene,

encoding samples of all visible three-dimensional geometry.

Dürer's artist had little need of a depth buffer for a single image. The physical

object in front of him ensured correct visibility. However, given two images with

depth buffers, he could have composited them into a single scene with correct

visibility at each point. At each sample, only the surface with the nearer depth value (which in this

case means a longer string below the pulley) could be visible in the combined

scene. Our rendering algorithms work with virtual objects and lack the benefit

of automatic physical occlusion. For simple convex or planar primitives such as

points and triangles we know that each primitive does not occlude itself. This

means we can render a single image plus depth buffer for each primitive without

any visibility determination. The depth buffer allows us to combine rendering of

multiple primitives and ensure correct visibility at each sample point.
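
The compositing step described above can be sketched in a few lines. This is a minimal illustration and not code from any particular renderer: the function name `composite`, the flat list layout, and the convention that a smaller number means a nearer surface are all assumptions made for the example. Empty samples are given infinite depth so that any real surface wins against them.

```python
import math


def composite(color_a, depth_a, color_b, depth_b):
    """Merge two images sample by sample, keeping the nearer surface.

    Hypothetical helper: each image is a flat list of colors with a
    matching flat list of depths, and smaller depth means nearer.
    """
    if not (len(color_a) == len(depth_a) == len(color_b) == len(depth_b)):
        raise ValueError("all buffers must have the same number of samples")

    out_color = []
    out_depth = []
    for ca, da, cb, db in zip(color_a, depth_a, color_b, depth_b):
        if db < da:
            # The sample from image B is nearer, so it hides image A here.
            out_color.append(cb)
            out_depth.append(db)
        else:
            # Image A is nearer (or tied), so its sample is kept.
            out_color.append(ca)
            out_depth.append(da)
    return out_color, out_depth


FAR = math.inf  # depth assigned to samples that no primitive covers

# Two three-sample "images", each rendered from a single primitive.
red_color, red_depth = ["red", "red", None], [2.0, 5.0, FAR]
blue_color, blue_depth = [None, "blue", "blue"], [FAR, 3.0, 4.0]

merged_color, merged_depth = composite(red_color, red_depth,
                                       blue_color, blue_depth)
print(merged_color)  # ['red', 'blue', 'blue']
print(merged_depth)  # [2.0, 3.0, 4.0]
```

Because the result is itself an image plus a depth buffer, it can be composited with the rendering of a further primitive in the same way.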

The depth buffer is often visualized with white values in the distance and black

values close to the camera, as if black shapes were emerging from white fog (see

Figure 36.12). There are many methods for encoding the distance. The end of

this section describes some that you may encounter. Depth buffers are commonly

employed to ensure correct visibility under rasterization. However, they are also

useful for computing shadowing and depth-based post-processing in other rendering

frameworks, such as ray tracers.
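
The white-fog visualization mentioned above amounts to mapping each depth sample to a gray level. The sketch below assumes a simple linear mapping between two hypothetical clipping distances, `near` and `far`. It is only one of many possible encodings, and the function name `depth_to_gray` is invented for this illustration.

```python
import math


def depth_to_gray(depth, near, far):
    """Map a depth to an 8-bit gray level: black at `near`, white at `far`.

    Assumes a linear encoding; depths outside [near, far] are clamped.
    """
    if far <= near:
        raise ValueError("far must be greater than near")
    t = (depth - near) / (far - near)   # 0 at the near distance, 1 at the far
    t = min(1.0, max(0.0, t))           # clamp samples outside the range
    return int(t * 255 + 0.5)           # round to the nearest gray level


print(depth_to_gray(1.0, near=1.0, far=11.0))       # 0, black: at the camera's near distance
print(depth_to_gray(11.0, near=1.0, far=11.0))      # 255, white: at the far distance
print(depth_to_gray(math.inf, near=1.0, far=11.0))  # 255, empty samples fade into the fog
```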

There are three common applications of a depth buffer in visibility determination.

First, while rendering a scene, the depth buffer provides implicit visible

surface determination. A new surface may cover a sample only if its camera-space

depth is less than the value in the depth buffer. If it is, then that new surface overwrites

the color in the color buffer and its depth value overwrites the depth in

the depth buffer. This is implicit visibility because until rendering completes it

is unknown what the closest visible surface is at a sample, or whether a given