
▪

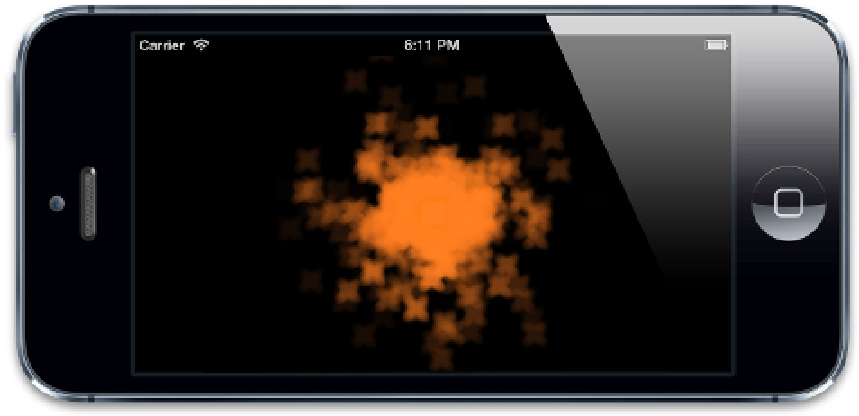

renderMode

, which controls how the particle images are blended visually. You

may have noted in our example that we set this to

kCAEmitterLayerAdditive

,

which has the effect of combining the brightness of overlapping particles so that they

appear to glow. If we were to leave this as the default value of

kCAEmitterLayerUnordered

, the result would be a lot less pleasing (see

Figure 6.14).

Figure 6.14 The fire particles with additive blending disabled

When it comes to high-performance graphics on iOS, the last word is OpenGL. It should probably also be the last resort, at least for nongaming applications, because it's phenomenally complicated to use compared to the Core Animation and UIKit frameworks.

OpenGL provides the underpinning for Core Animation. It is a low-level C API that communicates directly with the graphics hardware on the iPhone and iPad, with minimal abstraction. OpenGL has no notion of a hierarchy of objects or layers; it simply deals with triangles. In OpenGL, everything is made of triangles that are positioned in 3D space and have colors and textures associated with them. This approach is extremely flexible and powerful, but it's a lot of work to replicate something like the iOS user interface from scratch using OpenGL.

To get good performance with Core Animation, you need to determine what sort of content you are drawing (vector shapes, bitmaps, particles, text, and so on) and then select an appropriate layer type to represent that content. Only some types of content have been