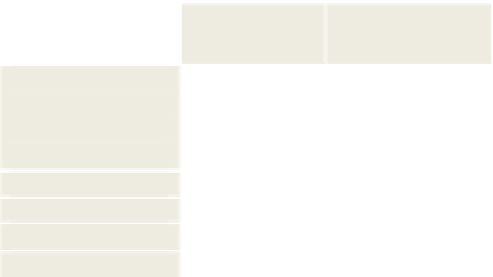

Table 2. Sentiment diffusion prediction results

| | All Features | Best Features |
| --- | --- | --- |
| High-Quality | 62.90% | 65.04% |
| Low-Quality_1 | 61.73% | 65.36% |
| Low-Quality_2 | 63.47% | 66.26% |
| All Instance | 62.13% | 64.25% |
| | 61.65% | 62.33% |
| SCL | 61.84% | 65.27% |
| TrAdaBoost | 59.58% | 62.59% |
| Label-Powerset | | |
| NLTL | 64.21% | 68.30% |

• User Sentiment Information. Similar to link sentiment information, user sentiment information models the tendency of each user to reply to positive or negative posts. For each user, we generate a user sentiment score under three aspects: the sender aspect, the receiver aspect, and the sender-receiver aspect. The sender aspect counts only the positive and negative posts sent by the user and ignores those the user received. The receiver aspect, conversely, counts only the positive and negative posts received by the user. The sender-receiver aspect considers both.

• Topic Information. We follow the approach described in [7] to extract latent topic signature (TG) features. Besides TG, we also extract topic similarity (TS) features weighted by link sentiment information and user sentiment information. Four features are generated from topic similarity: topic similarity for link sentiment, topic similarity for user sentiment with the sender aspect, topic similarity for user sentiment with the receiver aspect, and topic similarity for user sentiment with the sender-receiver aspect.

• Global Information. We extract global social features such as in-degree (ID), out-degree (OD), and total-degree (TD) from the social network. Note that these three features remain the same under different labeling methods; thus, we use them as pivot features in the SCL and NLTL algorithms.
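The user sentiment and global features described above can be sketched as follows. This is only an illustrative sketch, not the authors' implementation: the text states which posts each aspect counts but not how the counts become a score, so the positive fraction pos / (pos + neg) used here is an assumption. The `(sender, receiver, sentiment)` tuple format, the function names, and treating total-degree as in-degree plus out-degree over distinct links are likewise hypothetical.

```python
from collections import Counter


def user_sentiment_scores(posts):
    """posts: list of (sender, receiver, sentiment), sentiment in {"pos", "neg"}.

    Returns {user: {"sender": s, "receiver": r, "both": b}}. Each score is the
    ASSUMED positive fraction pos / (pos + neg), or None if the user has no
    posts under that aspect.
    """
    sent = Counter()      # (user, sentiment) -> number of posts sent
    received = Counter()  # (user, sentiment) -> number of posts received
    users = set()
    for sender, receiver, sentiment in posts:
        sent[(sender, sentiment)] += 1
        received[(receiver, sentiment)] += 1
        users.update((sender, receiver))

    def fraction(pos, neg):
        total = pos + neg
        return pos / total if total else None

    scores = {}
    for u in users:
        sp, sn = sent[(u, "pos")], sent[(u, "neg")]
        rp, rn = received[(u, "pos")], received[(u, "neg")]
        scores[u] = {
            "sender": fraction(sp, sn),          # posts sent only
            "receiver": fraction(rp, rn),        # posts received only
            "both": fraction(sp + rp, sn + rn),  # sent and received together
        }
    return scores


def degree_features(posts):
    """In-degree (ID), out-degree (OD), total-degree (TD) over distinct links.

    TD = ID + OD is an assumption; the text does not define it.
    """
    links = {(s, r) for s, r, _ in posts}
    out_deg = Counter(s for s, _ in links)
    in_deg = Counter(r for _, r in links)
    users = {u for link in links for u in link}
    return {
        u: {"ID": in_deg[u], "OD": out_deg[u], "TD": in_deg[u] + out_deg[u]}
        for u in users
    }
```

Because the degree features depend only on who replies to whom and not on the sentiment labels, they come out identical under any labeling method, which is the property that makes them usable as pivot features.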

5.4 Results

The experimental setting of the sentiment diffusion prediction task is the same as that described in Section 4. We compare NLTL, which utilizes three sources, with the competitors described in Section 4.1. We run the experiment on two sets of feature combinations: all features, and the best feature combination chosen by the wrapper-based forward selection method [9]. The results in Table 2 show that NLTL integrates the information of features and labels to outperform every competitor: it reaches 64.21% with all features and 68.30% with the best features, against 63.47% and 66.26% for the next-best entry in each column.
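As a rough illustration of wrapper-based forward selection in general, the greedy loop below adds one feature at a time for as long as an evaluation score improves. It is a generic sketch rather than the exact procedure of [9]: the `evaluate` callback (for example, cross-validated accuracy of the classifier on a feature subset) and the rule of stopping when no candidate improves the score are assumptions.

```python
def forward_selection(features, evaluate):
    """Greedy wrapper-based forward selection.

    features: list of candidate feature names.
    evaluate: hypothetical callable that takes a list of feature names and
              returns a score (higher is better), e.g. cross-validated accuracy.
    Returns (selected_features, best_score).
    """
    selected = []
    best_score = float("-inf")
    remaining = list(features)
    while remaining:
        # Score every candidate when added to the current subset.
        scored = [(evaluate(selected + [f]), f) for f in remaining]
        score, feature = max(scored, key=lambda pair: pair[0])
        if score <= best_score:
            break  # no remaining candidate improves the subset
        selected.append(feature)
        remaining.remove(feature)
        best_score = score
    return selected, best_score
```

Each call to `evaluate` retrains and scores the model, so the search costs on the order of the square of the number of features in model fits; that is the usual price of a wrapper method compared with a filter method.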
