Digital Signal Processing Reference

[Diagram: two encoder-decoder block diagrams titled "Wyner-Ziv coding" and "Dirty-paper coding". Labels in the diagram: X, Y, m, S, and Z ~ N(0, σ²).]

Figure 12.4

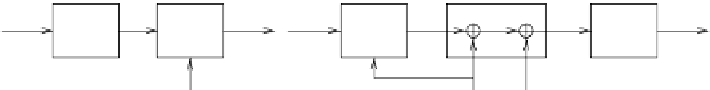

Coding with side information. WZC refers to lossy source coding of X with decoder side information S, whereas DPC considers channel encoding of message m with encoder side information S over an AWGN channel.

minimum rate for compressing X was derived. In general, WZC incurs a rate loss compared to the case in which S is also available at the encoder. However, if the correlation between X and S is modeled as X = S + Z, with Z being an i.i.d. (memoryless) Gaussian random variable independent of S, then there is no rate loss with WZC under the mean-squared error (MSE) distortion measure.
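
As a worked instance of the no-rate-loss statement, assume Z has variance \sigma_Z^2 (a symbol introduced here for illustration; it does not appear in the text above). Under that assumption, the standard quadratic-Gaussian result can be written as:

```latex
R_{\mathrm{WZ}}(D) \;=\; R_{X|S}(D) \;=\; \max\!\left\{ 0,\ \tfrac{1}{2}\log_2 \frac{\sigma_Z^2}{D} \right\}
```

Here R_{X|S}(D) denotes the rate needed when S is also available at the encoder, so the equality expresses zero rate loss. For example, a target distortion D = \sigma_Z^2 / 4 requires 1 bit per sample in both cases.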

The information-theoretical dual [17] of WZC is channel coding with side information at the encoder, or Gelfand-Pinsker coding [18], where the encoder has perfect (noncausal) knowledge of the side information or CSI. The limits on the rate at which messages can be transmitted to a receiver are given in [18]. In general, there is a rate loss compared to the case in which the receiver also knows the CSI (i.e., the encoder side information) noncausally. However, when the channel is an additive white Gaussian noise (AWGN) channel, Gelfand-Pinsker coding does not suffer any rate loss. In this case we have the celebrated dirty-paper coding (DPC) problem [14], shown in Figure 12.4 (right), where the effect of the interference caused by the side information can be completely canceled.
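
The interference-cancellation idea can be illustrated with a toy scalar scheme. The sketch below is not the capacity-achieving construction of [14]; it uses a simple one-dimensional modulo precoder (in the spirit of Tomlinson-Harashima precoding) as a stand-in. The names `centered_mod`, `dpc_encode`, and `dpc_decode`, the 4-level constellation, and all numeric parameters are assumptions made for illustration.

```python
import numpy as np

def centered_mod(v, delta):
    """Fold v into an interval of width delta centered at zero."""
    return (v + delta / 2) % delta - delta / 2

def dpc_encode(points, interference, delta):
    # Pre-subtract the interference known at the encoder, then fold the
    # result back so the transmitted signal stays power-limited.
    return centered_mod(points - interference, delta)

def dpc_decode(received, delta):
    # The decoder does not know the interference; one modulo operation
    # removes it up to the channel noise.
    return centered_mod(received, delta)

rng = np.random.default_rng(0)
delta = 8.0                                  # modulo interval width (assumed)
levels = np.array([-3.0, -1.0, 1.0, 3.0])    # 4-level constellation (assumed)
symbols = rng.integers(0, 4, size=1000)      # message indices m
points = levels[symbols]

interference = rng.normal(0.0, 50.0, size=1000)  # strong side information S
noise = rng.normal(0.0, 0.1, size=1000)          # AWGN Z

x = dpc_encode(points, interference, delta)      # transmitted signal
y = x + interference + noise                     # channel output
decoded = dpc_decode(y, delta)

# Nearest-level detection of the message index.
detected = np.argmin(np.abs(decoded[:, None] - levels[None, :]), axis=1)
```

The transmitted signal stays within the modulo interval regardless of how strong the interference is, and the decoder recovers the message indices without ever observing S. The price of this scalar simplification is a shaping loss that practical designs reduce with higher-dimensional quantizers.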

Practical WZC and DPC both involve source-channel coding. WZC can be implemented by first quantizing the source X, followed by Slepian-Wolf coding of the quantized X with side information S at the decoder [19]. Using syndrome-based channel coding for compression, Slepian-Wolf coding here plays the role of conditional entropy coding. For DPC, source coding is needed to quantize the side information to satisfy the power constraint. At the same time, the quantizer induces a constrained channel, for which practical channel codes can be designed to approach its capacity. Indeed, limit-approaching code designs [20-22] have recently appeared for both WZC and DPC.
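
The syndrome-based Slepian-Wolf step mentioned above can be sketched with a very small code. This is a minimal illustration, not one of the designs in [19-22]: it assumes a (7,4) Hamming code, binary 7-bit source blocks, and side information that differs from the source in at most one bit per block. The function names `sw_encode` and `sw_decode` are hypothetical.

```python
import numpy as np

# Parity-check matrix of the (7,4) Hamming code.
# Column j (0-based) holds the binary expansion of j + 1, least significant bit first.
H = np.array([[(j >> i) & 1 for j in range(1, 8)] for i in range(3)])

def sw_encode(x):
    """Compress a 7-bit block x to its 3-bit syndrome."""
    return H @ x % 2

def sw_decode(syndrome, y):
    """Recover x from its syndrome and side information y.

    Assumes y differs from x in at most one bit position.
    """
    # H @ x + H @ y (mod 2) equals H @ (x XOR y), the syndrome of the difference.
    diff = (syndrome + H @ y) % 2
    pos = int(diff[0] + 2 * diff[1] + 4 * diff[2])  # 0 means x == y
    x_hat = y.copy()
    if pos:
        x_hat[pos - 1] ^= 1  # flip the single differing bit
    return x_hat

# Example: the encoder sends 3 bits instead of 7.
x = np.array([1, 0, 1, 1, 0, 0, 1])
y = x.copy()
y[4] ^= 1                      # side information with one bit flipped
syndrome = sw_encode(x)
x_hat = sw_decode(syndrome, y)
```

Because the decoder resolves the remaining uncertainty using the side information, the encoder only needs to send the 3-bit syndrome for each 7-bit block under the stated correlation model.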

12.3.2 The Relay Channel

Since cooperative diversity is largely based on relaying messages, its information-theoretical foundation is built upon the landmark 1979 paper of Cover and El Gamal [23] on capacity bounds for relay channels. We thus start with the relay channel, give the theoretical bounds on its capacity, and describe proposed coding strategies in the Gaussian and Rayleigh flat-fading environments. Then we proceed with extensions to two-transmitter two-receiver cooperative channels.

The relay channel, introduced by van der Meulen in [5], is a three-node channel where the source communicates to the destination with the help of an intermediate relay node. It is shown in Figure 12.5. The source broadcasts encoded messages to the relay and destination. The relay processes the received information and forwards the resulting signal to the destination. The destination collects signals from both the source and relay
