
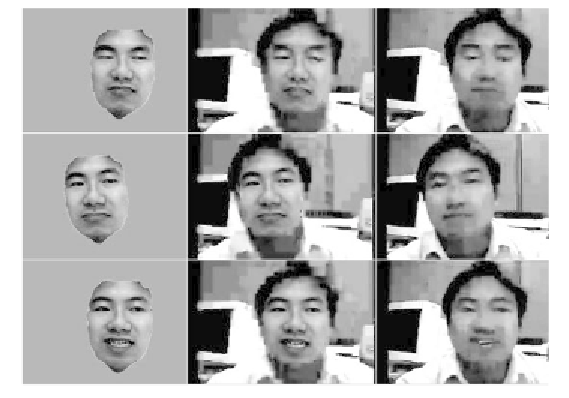

visualize the results. Compared with the neutral face (first column), the mouth opening (second column) and the subtle mouth rounding and mouth protrusion (third and fourth columns) are all captured in the tracking results, as visualized by the animated face model. Because the motion units are learned from real facial motion data, the facial animation synthesized from the tracking results looks more natural than animation produced with the handcrafted action units of Tao (1998).
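The paragraph above describes animating a face model from tracking results expressed in learned motion units. The sketch below assumes a common linear formulation. In it, a frame's deformation is a mean displacement plus a weighted sum of motion-unit displacement fields, and the weights are the per-frame tracker output, i.e. v = v_neutral + m_0 + Σ_k c_k m_k. The function name `synthesize_face` and the array layout are hypothetical. The chapter's own model may differ in detail, such as how the mean is handled or how displacements map onto mesh vertices.

```python
import numpy as np


def synthesize_face(neutral, mu_mean, mu_basis, coeffs):
    """Deform a neutral face mesh with tracked motion-unit (MU) coefficients.

    Assumed (hypothetical) layout:
      neutral:  (V, 3) vertex positions of the neutral face model
      mu_mean:  (V, 3) mean deformation of the learned MU model
      mu_basis: (K, V, 3) K motion units, each a per-vertex displacement field
      coeffs:   (K,) per-frame MU coefficients produced by the tracker
    """
    neutral = np.asarray(neutral, dtype=float)
    mu_mean = np.asarray(mu_mean, dtype=float)
    mu_basis = np.asarray(mu_basis, dtype=float)
    coeffs = np.asarray(coeffs, dtype=float)
    # Linear combination sum_k coeffs[k] * mu_basis[k], giving a (V, 3) field.
    displacement = mu_mean + np.tensordot(coeffs, mu_basis, axes=1)
    return neutral + displacement


if __name__ == "__main__":
    neutral = np.zeros((2, 3))       # toy two-vertex "mesh"
    mu_mean = np.zeros((2, 3))
    mu_basis = np.zeros((2, 2, 3))
    mu_basis[0, 0, 1] = -1.0         # MU 0: lower vertex 0 (toy "mouth opening")
    mu_basis[1, 1, 2] = 1.0          # MU 1: push vertex 1 forward (toy "protrusion")
    print(synthesize_face(neutral, mu_mean, mu_basis, [0.5, 2.0]))
```

In this formulation, each frame's animation reduces to one tensor contraction over the K motion units. That is inexpensive, and the tracker only needs to transmit K coefficients per frame rather than full vertex positions.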

The tracking algorithm can be used in model-based face video coding (Tu et al., 2003). We track and encode the face area using model-based coding. To encode the residual in the face area, and the background for which a priori knowledge is not generally available, we use the traditional waveform-based coding method H.26L. This hybrid approach improves the robustness of the model-based method at the expense of an increased bit rate. Eisert, Wiegand & Girod (2000) proposed a similar hybrid coding technique using a different model-based 3D facial motion tracking approach. We capture and code 352 × 240 video at 30 Hz. At the same low bit rate (18 kbit/s), we compare this hybrid coding with the H.26L JM 4.2 reference software. Figure 9 shows three snapshots of a video with 147 frames. The PSNR around the facial area for hybrid coding is 2 dB higher than for H.26L. Moreover, the hybrid coding results have much higher visual quality. Because our tracking system works in real time, it could be used in a real-time, low-bit-rate video-phone application. Furthermore, the tracking results

Figure 9. (a) The synthesized face motion; (b) the reconstructed video frame with synthesized face motion; (c) the reconstructed video frame using the H.26L codec.
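The face-region PSNR comparison for Figure 9 can be made concrete with a short sketch. It assumes 8-bit luma frames and a boolean mask marking "the facial area". How the authors delimited that area is not stated here, so the mask, the function name `region_psnr`, and the rectangular face box in the demo are all hypothetical.

```python
import numpy as np


def region_psnr(original, decoded, mask, peak=255.0):
    """PSNR in dB computed only over pixels where mask is True.

    original, decoded: (H, W) luma frames (8-bit range assumed; chroma ignored)
    mask: (H, W) boolean face-region mask (hypothetical; e.g. projected
          from the tracked face model)
    """
    original = np.asarray(original, dtype=np.float64)
    decoded = np.asarray(decoded, dtype=np.float64)
    mask = np.asarray(mask, dtype=bool)
    diff = original[mask] - decoded[mask]    # errors outside the face are ignored
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")                  # identical regions
    return 10.0 * np.log10(peak ** 2 / mse)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    h, w = 240, 352                          # frame size used in the experiment
    frame = rng.integers(0, 256, size=(h, w)).astype(np.float64)
    face = np.zeros((h, w), dtype=bool)
    face[60:180, 120:232] = True             # hypothetical face bounding box
    decoded = np.clip(frame + rng.normal(0.0, 4.0, size=(h, w)), 0, 255)
    print(f"face-region PSNR: {region_psnr(frame, decoded, face):.2f} dB")
```

Because ΔPSNR = 10 log10(MSE_H.26L / MSE_hybrid), a 2 dB gain means the hybrid coder's mean squared error in the face region is lower by a factor of 10^0.2 ≈ 1.58. For scale, 18 kbit/s at 30 Hz averages 600 bits per frame.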
