
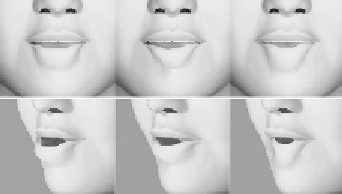

Figure 6. Three lower-lip shapes, each deformed by one of three lower-lip parts-based MUs. The top row shows the frontal view and the bottom row the side view.

MU Adaptation

The learned MUs are based on motion capture data from particular subjects. To use them for other people, they must be fitted to the new face geometry. Moreover, the MUs sample facial surface motion only at the marker positions, so the motion elsewhere on the face must be interpolated. In our framework, we call this process “MU adaptation.”
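The interpolation that MU adaptation requires can be illustrated with a minimal sketch. The text does not say which interpolation scheme is used, so the example assumes Shepard inverse-distance weighting (IDW) and treats one MU as a 3-D displacement per marker. The names `resample_mu` and `_idw_point` and the `power` and `eps` parameters are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _idw_point(v: Vec3, markers: Sequence[Vec3], mu: Sequence[Vec3],
               power: float, eps: float) -> Vec3:
    """Inverse-distance-weighted displacement at a single vertex v."""
    weights = []
    for m, d in zip(markers, mu):
        dist = math.dist(v, m)
        if dist < eps:
            # v sits on a marker: reuse that marker's displacement exactly.
            return (d[0], d[1], d[2])
        weights.append(dist ** -power)
    total = sum(weights)
    # Weighted average of all marker displacements, one coordinate at a time.
    return tuple(
        sum(w * d[k] for w, d in zip(weights, mu)) / total for k in range(3)
    )


def resample_mu(markers: Sequence[Vec3], mu: Sequence[Vec3],
                vertices: Sequence[Vec3], power: float = 2.0,
                eps: float = 1e-9) -> List[Vec3]:
    """Hypothetical MU re-sampling: spread the sparse marker displacements
    of one MU onto arbitrary mesh vertices with Shepard IDW. IDW is an
    assumption here, not necessarily the scheme of the original framework."""
    if len(markers) != len(mu):
        raise ValueError("need exactly one displacement per marker")
    return [_idw_point(v, markers, mu, power, eps) for v in vertices]
```

For example, `resample_mu([(0, 0, 0), (2, 0, 0)], [(0, 1, 0), (0, 3, 0)], [(1, 0, 0)])` returns `[(0.0, 2.0, 0.0)]`: the vertex lies halfway between the two markers, so it receives the average of their displacements. IDW is simple, but with `power > 1` it produces flat spots at the markers. Radial-basis-function schemes give smoother fields at the cost of solving a linear system.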

Interpolation-based techniques for retargeting animation to new models, such as Noh & Neumann (2001), could be used for MU adaptation. Following more principled guidelines, we design MU adaptation as a two-step process: (1) geometry-based MU fitting and (2) MU re-sampling. Both steps can be improved systematically once enough MU sets have been collected. For example, if MU statistics over a large set of different face geometries are available, the geometry-to-MU mapping can be derived systematically with machine-learning techniques. Likewise, if multiple MU sets are available that sample different positions on the same face, they can be combined to increase the spatial resolution of the MUs, because MU markers are usually sparser than the vertices of a face geometry mesh.

The first step, “MU fitting,” fits the MUs to a face model with different geometry. We assume that corresponding positions on the two faces have the same motion characteristics, so MU fitting amounts to moving the markers of the learned MUs to their corresponding positions on the new face. We build the correspondence of the facial feature points shown in Figure 2(c) interactively through a GUI, and then use warping to interpolate the remaining correspondences.
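The warping step can be sketched as scattered-data interpolation of the feature-point correspondence. The text does not name the warp, so this sketch assumes a radial-basis-function (RBF) warp with kernel φ(r) = r plus an affine term. `fit_rbf_warp` and `_solve` are hypothetical names. The returned function maps each feature point of the captured face exactly onto its counterpart on the new face, and it can then be applied to every MU marker position.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _solve(a: List[List[float]], b: List[List[float]]) -> List[List[float]]:
    """Solve a @ x = b (several right-hand sides) by Gauss-Jordan
    elimination with partial pivoting."""
    n, m = len(a), len(b[0])
    aug = [list(a[i]) + list(b[i]) for i in range(n)]  # copy of [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: feature points duplicated or coplanar")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                for c in range(col, n + m):
                    aug[r][c] -= factor * aug[col][c]
    return [[aug[i][n + j] / aug[i][i] for j in range(m)] for i in range(n)]


def fit_rbf_warp(src: Sequence[Vec3], dst: Sequence[Vec3]) -> Callable[[Vec3], Vec3]:
    """Hypothetical warp f with f(src[i]) == dst[i]:
    f(x) = sum_i w_i * |x - src_i| + affine(x)."""
    src = [tuple(p) for p in src]  # private copy used by the closure
    n, size = len(src), len(src) + 4
    a = [[0.0] * size for _ in range(size)]
    b = [[0.0] * 3 for _ in range(size)]
    for i in range(n):
        for j in range(n):
            a[i][j] = math.dist(src[i], src[j])  # kernel block K
        row = (1.0, *src[i])  # affine basis [1, x, y, z]
        for k in range(4):
            a[i][n + k] = row[k]  # block P
            a[n + k][i] = row[k]  # block P^T (side condition P^T w = 0)
        b[i] = [float(c) for c in dst[i]]
    coef = _solve(a, b)
    weights, affine = coef[:n], coef[n:]

    def warp(x: Vec3) -> Vec3:
        basis = (1.0, *x)
        out = []
        for d in range(3):
            value = sum(affine[k][d] * basis[k] for k in range(4))
            value += sum(weights[i][d] * math.dist(x, src[i]) for i in range(n))
            out.append(value)
        return (out[0], out[1], out[2])

    return warp
```

Because of the affine term, a new face that differs from the captured one only by a global scale, rotation, and translation is reproduced exactly. At least four non-coplanar feature points are needed for the linear system to be solvable. Under the stated assumption that corresponding positions share the same motion, only the marker positions are warped. The MU displacement vectors themselves are carried over unchanged.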
