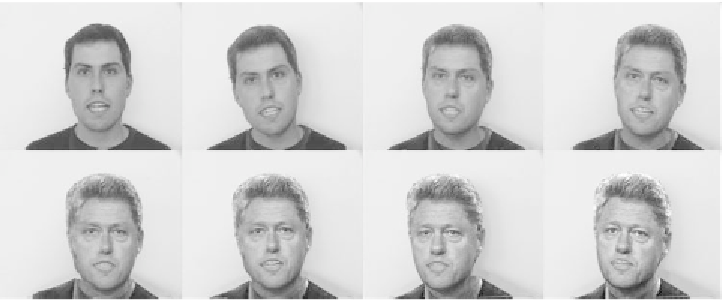

Figure 9: Motion-compensated 3-D morph between two people.

Conclusions

Methods for facial expression analysis and synthesis have received increasing interest in recent years. The computational power of current computers and handheld devices such as PDAs already allows real-time rendering of 3-D facial models, which is the basis for many new applications in the near future. Especially for handheld devices that are connected to the Internet via a wireless channel, bit-rates for streaming video are limited. Transmitting only facial expression parameters drastically reduces the bandwidth requirements to a few kbit/s. In the same way, face animations or new human-computer interfaces can be realized with low demands on storage capacity. At the high-quality end, film productions may gain new impetus for animation, realistic facial expression, and motion capture without the use of numerous sensors that interfere with the actor. Last, but not least, information about motion and symmetry of facial features can be exploited in medical diagnosis and therapy.
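To make the bandwidth argument above concrete, the following minimal sketch computes the uncompressed bit-rate of a per-frame parameter stream. The function name and all numeric values (68 parameters per frame, matching the size of the MPEG-4 FAP set, 8 bits per parameter, 25 frames per second, and a 20-parameter subset) are illustrative assumptions, not figures taken from this chapter; a real codec would also apply prediction and entropy coding, which this sketch ignores.

```python
def parameter_stream_kbit_per_s(num_params, bits_per_param, frames_per_s):
    """Uncompressed bit-rate of a per-frame parameter stream, in kbit/s."""
    if min(num_params, bits_per_param, frames_per_s) < 0:
        raise ValueError("all arguments must be non-negative")
    return num_params * bits_per_param * frames_per_s / 1000.0


# Assumed, illustrative values (not taken from the chapter):
# 68 parameters per frame, 8 bits each, 25 frames per second.
full_set = parameter_stream_kbit_per_s(68, 8, 25)

# Assumption: only a subset of 20 parameters is sent per frame.
subset = parameter_stream_kbit_per_s(20, 8, 25)

print(f"all parameters: {full_set:.1f} kbit/s, subset: {subset:.1f} kbit/s")
```

Even without any compression, such a stream stays in the range of kbit/s, because its size depends on the number of parameters and not on the image resolution.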

All these applications have in common that accurate information about 3-D motion deformation and facial expressions is required. In this chapter, the state of the art in facial expression analysis and synthesis has been reviewed and a new method for determining FAPs from monocular image sequences has been presented. In a hierarchical framework, the parameters are robustly found using optical flow information together with explicit knowledge about shape and motion constraints of the objects. The robustness can be further increased by incorporating photometric properties in the estimation. For this purpose, a computationally efficient algorithm for the determination of lighting effects was given. Finally,
