Game Development Reference

In-Depth Information

contour features passively shape the facial structure without any active control

based on observations.

Based on a similar animation system as that of Waters', that is, developed on

anatomical-based muscle actions that animate a 3D face wireframe, Essa and

Petland define a suitable set of control parameters using vision-based observa-

tions. They call their solution FACS+ because it is an extension of the traditional

FAC system. They use optical flow analysis along the time of sequences of

frontal view faces to get the velocity vectors on 2D and then they are mapped

to the parameters. They point out in Essa, Basun, Darrel and Pentland (1996) that

driving the physical system with the inputs from noisy motion estimates can result

in divergence or a chaotic physical response. This is why they use a continuous

time Kalman filter (CTKF) to better estimate uncorrupted state vectors. In their

work they develop the concept of motion templates, which are the “corrected”

or “noise-free” 2D motion field that is associated with each facial expression.

These templates are used to improve the optical flow analysis.

Morishima has been developing a system that succeeds in animating a generic

parametric muscle model after having been customized to take the shape and

texture of the person the model represents. By means of optical flow image

analysis, complemented with speech processing, motion data is generated.

These data are translated into motion parameters after passing through a

previously trained neural network. In Morishima (2001), he explains the basis of

this system, as well as how to generate very realistic animation from electrical

captors on the face. Data obtained from this hardware-based study permits a

perfect training for coupling the audio processing.

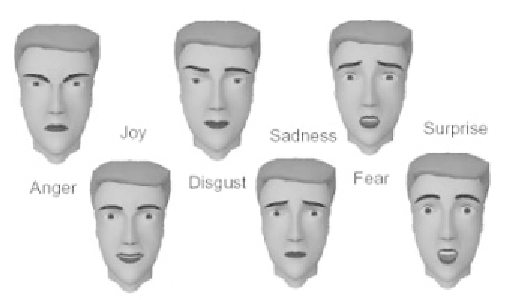

Figure 7. Primary face expressions synthesized on a face avatar. Images

courtesy of Joern Ostermann, AT&T Labs - Research.

Search WWH ::

Custom Search