
be reconstructed directly at the local site from the two views captured by cameras 1 and 2 of site 2 (the remote site), according to the current viewpoint of participant A. This process is discussed in detail below. Because the system is symmetric, the reconstruction for the other participants is analogous.

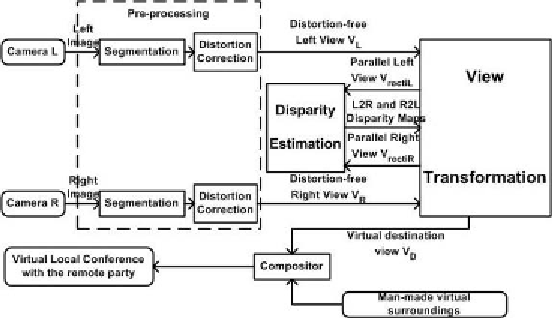

Every 40 ms (that is, at 25 frames per second), the fixed stereo set-up at the remote site acquires two images. After segmentation, the pair of stereo views, now containing only the remote participant without the background, is transmitted to the local site. There, the two views are combined with information about the stereo set-up, the local display, and the pose (position and orientation) of the local participant to reconstruct a novel view (“telepresence”) of the remote participant that is adapted to the current local viewpoint. The reconstructed view is then merged with a man-made uniform virtual environment, giving the local participant the impression of being in a face-to-face meeting with the remote participant. The whole processing chain is shown in Figure 6.
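
The reconstruction method itself is described later in the text. Purely to illustrate the idea of synthesizing an in-between view from two segmented images, the sketch below uses a much simpler, swapped-in technique: disparity-based view interpolation on a rectified stereo pair, which can only place the virtual camera on the baseline between the two real cameras. It assumes a per-pixel disparity map is already available; the function `interpolate_row` and its parameters are hypothetical.

```python
def interpolate_row(left_row, disparity_row, alpha, background=None):
    """Forward-warp one rectified scanline of the left view to a virtual
    camera placed a fraction `alpha` along the baseline towards the right
    camera (alpha = 0 reproduces the left view, alpha = 1 the right view).

    With rectified views, a left pixel at column x with disparity d appears
    at column x - d in the right view, hence at x - alpha * d in between.
    When several pixels land on one column, the largest disparity (the
    point nearest the cameras) wins: a simple z-buffer.
    """
    width = len(left_row)
    out = [background] * width
    nearest = [float("-inf")] * width
    for x, (value, d) in enumerate(zip(left_row, disparity_row)):
        if d is None:  # pixel removed by segmentation (background)
            continue
        xv = round(x - alpha * d)
        if 0 <= xv < width and d > nearest[xv]:
            out[xv] = value
            nearest[xv] = d
    return out


row = ["a", "b", "c", "d", "e", "f"]
disparity = [None, 2, 2, 4, None, None]  # "a", "e", "f" are background
print(interpolate_row(row, disparity, 0.5))
# → ['b', 'd', None, None, None, None]
```

Column 1 shows occlusion handling: both "c" and "d" map there, and the nearer "d" (larger disparity) wins. Columns that no pixel reaches keep the `background` value; a real system would have to fill such disocclusion holes, for example from the right view.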

All parameters of each of the three four-camera set-ups must be computed beforehand. Calibration combines a linear estimation technique with the Levenberg-Marquardt nonlinear optimization method.
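
The text names the two calibration stages but not the camera model, so the following is a minimal sketch under simplifying, hypothetical assumptions: the camera pose is known, the 3-D calibration points are already expressed in camera coordinates, and only the focal length f, the principal point (cx, cy) and one radial-distortion coefficient k1 are estimated. Stage 1 solves a linear least-squares problem that ignores distortion; stage 2 uses that result to initialize a Levenberg-Marquardt refinement of all four parameters. All function names are illustrative, and plain Python is used so the sketch needs no third-party packages.

```python
# Two-stage calibration sketch on a HYPOTHETICAL simplified camera: pose known
# (points already in camera coordinates); unknowns are focal length f,
# principal point (cx, cy) and one radial-distortion coefficient k1.

def project(params, point):
    """Project a 3-D point (camera coordinates) to pixel coordinates."""
    f, cx, cy, k1 = params
    X, Y, Z = point
    xn, yn = X / Z, Y / Z          # normalised image coordinates
    r2 = xn * xn + yn * yn
    d = 1.0 + k1 * r2              # radial distortion factor
    return f * xn * d + cx, f * yn * d + cy

def solve(A, b):
    """Solve a small dense system A x = b (Gaussian elimination, partial pivoting)."""
    n = len(b)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= factor * M[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][k] * x[k] for k in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def normal_equations(rows, rhs):
    """Return (A^T A, A^T y) for the least-squares problem A p ~ y."""
    n = len(rows[0])
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(n)] for i in range(n)]
    Aty = [sum(r[i] * y for r, y in zip(rows, rhs)) for i in range(n)]
    return AtA, Aty

def linear_estimate(points, pixels):
    """Stage 1: linear least squares for (f, cx, cy), ignoring distortion."""
    rows, rhs = [], []
    for (X, Y, Z), (u, v) in zip(points, pixels):
        rows += [[X / Z, 1.0, 0.0], [Y / Z, 0.0, 1.0]]
        rhs += [u, v]
    f, cx, cy = solve(*normal_equations(rows, rhs))
    return [f, cx, cy, 0.0]

def residuals_and_jacobian(params, points, pixels):
    """Reprojection residuals and their analytic Jacobian w.r.t. (f, cx, cy, k1)."""
    f, cx, cy, k1 = params
    res, jac = [], []
    for (X, Y, Z), (u, v) in zip(points, pixels):
        xn, yn = X / Z, Y / Z
        r2 = xn * xn + yn * yn
        d = 1.0 + k1 * r2
        pu, pv = project(params, (X, Y, Z))
        res += [pu - u, pv - v]
        jac += [[xn * d, 1.0, 0.0, f * xn * r2], [yn * d, 0.0, 1.0, f * yn * r2]]
    return res, jac

def levenberg_marquardt(params, points, pixels, iters=50, lam=1e-3):
    """Stage 2: refine all four parameters by minimising squared reprojection error."""
    res, J = residuals_and_jacobian(params, points, pixels)
    cost = sum(r * r for r in res)
    for _ in range(iters):
        JtJ, Jtr = normal_equations(J, res)
        # Marquardt damping: scale the diagonal of J^T J by (1 + lam).
        A = [[v * (1.0 + lam) if i == j else v for j, v in enumerate(row)]
             for i, row in enumerate(JtJ)]
        step = solve(A, [-g for g in Jtr])
        trial = [p + s for p, s in zip(params, step)]
        t_res, t_J = residuals_and_jacobian(trial, points, pixels)
        t_cost = sum(r * r for r in t_res)
        if t_cost < cost:   # accept: behave more like Gauss-Newton
            params, res, J, cost, lam = trial, t_res, t_J, t_cost, lam / 10
        else:               # reject: behave more like gradient descent
            lam *= 10
    return params

# Synthetic, noise-free check with made-up "true" parameters.
true_params = [800.0, 320.0, 240.0, -0.1]
points = [(0.1 * x, 0.1 * y, 2.0 + 0.05 * (x + y))
          for x in range(-5, 6) for y in range(-4, 5)]
pixels = [project(true_params, p) for p in points]
initial = linear_estimate(points, pixels)
refined = levenberg_marquardt(initial, points, pixels)
print("linear:", initial)
print("refined:", refined)
```

The linear stage cannot represent the distortion, so its focal length is slightly biased, but it lands close enough for the nonlinear stage to converge; Levenberg-Marquardt then recovers all four parameters. In practice a library solver such as SciPy's `least_squares` with `method="lm"` would replace the hand-written normal equations.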

With the camera parameters explicitly recovered, the view can be transformed in a very flexible and intuitive way, as discussed briefly in the next section.
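
One reason explicit parameters make the view easy to transform is that a calibrated camera reduces to a pinhole projection x ~ K R (X - C), so a virtual camera for the local participant's current pose can be formed by substituting a new rotation R and optical centre C while keeping the intrinsics K. The sketch below is a hypothetical illustration of that projection only, with the pose reduced to a position plus a rotation about the vertical axis; it is not the transformation the authors describe in the next section, and the names `rotation_y` and `project` are illustrative.

```python
import math


def rotation_y(yaw):
    """Rotation about the vertical (y) axis by `yaw` radians, used here as a
    hypothetical world-to-camera rotation for the viewer's head orientation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def project(K, R, C, X):
    """Pinhole projection x ~ K R (X - C): intrinsics K, world-to-camera
    rotation R, optical centre C, world point X. Returns pixel (u, v)."""
    d = [X[i] - C[i] for i in range(3)]
    Xc = [sum(R[r][k] * d[k] for k in range(3)) for r in range(3)]
    h = [sum(K[r][k] * Xc[k] for k in range(3)) for r in range(3)]
    return h[0] / h[2], h[1] / h[2]


K = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
I3 = rotation_y(0.0)
point = (0.0, 0.0, 2.0)
print(project(K, I3, (0.0, 0.0, 0.0), point))  # (320.0, 240.0)
print(project(K, I3, (0.1, 0.0, 0.0), point))  # (280.0, 240.0)
```

Moving the viewpoint 0.1 units to the right shifts the projected point 40 pixels to the left (800 * 0.1 / 2), which is the kind of viewpoint-dependent change the reconstructed view has to reproduce.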

Figure 6. The processing chain for adapting the synthesized view of one participant in line with the viewpoint change of another participant. Based on a pair of stereo sequences, the “virtually” perceived view should be reconstructed and integrated seamlessly with the man-made uniform environment in real time.
