reinforces the efficiency of decision trees. However, once a bad split is taken, it

is propagated through the rest of the tree. To address this problem, an ensemble

technique (such as random forest) may randomize the splitting, or even randomize

the data, and build multiple trees. These trees then vote on the class of each

record, and the class with the most votes is chosen as the predicted class.
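
The voting idea can be sketched in a few lines of Python. This is a minimal illustration, not a full random forest: it assumes a single numeric feature, uses one-split trees (stumps), and randomizes only the data through bootstrap sampling. The function names, the toy data, and the parameters n_trees and seed are all made up for the example.

```python
import random
from collections import Counter

def train_stump(rows):
    """Fit a one-split tree on (feature_value, label) rows.

    Every observed value is tried as a threshold; the one with the
    fewest training misclassifications is kept.
    """
    best = None
    for threshold, _ in rows:
        left = [label for x, label in rows if x <= threshold]
        right = [label for x, label in rows if x > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        # If no record falls to the right, reuse the left label.
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(
            (left_label if x <= threshold else right_label) != label
            for x, label in rows
        )
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    _, threshold, left_label, right_label = best
    return lambda x: left_label if x <= threshold else right_label

def train_forest(rows, n_trees=25, seed=0):
    """Train each tree on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in rows]
        forest.append(train_stump(sample))
    return forest

def predict(forest, x):
    """Each tree casts one vote; the class with the most votes wins."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): small values are "no", large values are "yes".
rows = [(1, "no"), (2, "no"), (3, "no"), (4, "no"),
        (6, "yes"), (7, "yes"), (8, "yes"), (9, "yes")]
forest = train_forest(rows)
print(predict(forest, 1), predict(forest, 9))
```

Because each tree sees a different sample, a poor split in one tree affects only that tree's vote rather than the final prediction.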

There are a few ways to evaluate a decision tree. First, evaluate whether the splits

of the tree make sense. Conduct sanity checks by validating the decision rules with

domain experts, and determine if the decision rules are sound.

Next, look at the depth and nodes of the tree. Having too many layers and

obtaining nodes with few members might be signs of overfitting. In overfitting, the

model fits the training set well, but it performs poorly on the new samples in the

testing set.
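
The depth and node-size checks described above can be automated. The sketch below assumes a hypothetical tree representation: nested dictionaries in which a leaf carries "label" and "count" (the number of training records it holds) and an internal node carries "left" and "right". The limits max_depth=5 and min_leaf_size=5 are arbitrary illustrations, not recommended values.

```python
def tree_depth(node):
    """Number of splits on the longest path from this node to a leaf."""
    if "label" in node:
        return 0
    return 1 + max(tree_depth(node["left"]), tree_depth(node["right"]))

def leaf_sizes(node):
    """Training-record counts of all leaves under this node."""
    if "label" in node:
        return [node["count"]]
    return leaf_sizes(node["left"]) + leaf_sizes(node["right"])

def overfitting_warnings(tree, max_depth=5, min_leaf_size=5):
    """Flag possible signs of overfitting: many layers or tiny leaves."""
    warnings = []
    depth = tree_depth(tree)
    if depth > max_depth:
        warnings.append(f"depth {depth} exceeds {max_depth}")
    tiny = [n for n in leaf_sizes(tree) if n < min_leaf_size]
    if tiny:
        warnings.append(
            f"{len(tiny)} leaf node(s) hold fewer than {min_leaf_size} records"
        )
    return warnings

# Hypothetical tree: two splits, one leaf with only 2 training records.
tree = {
    "left": {"label": "no", "count": 40},
    "right": {
        "left": {"label": "yes", "count": 2},
        "right": {"label": "no", "count": 58},
    },
}
print(overfitting_warnings(tree))
```

Such warnings are only hints; a held-out testing set is still needed to confirm whether the model actually overfits.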

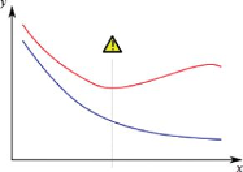

Figure 7.7 illustrates the performance of an overfit model. The x-axis represents

the amount of data, and the y-axis represents the errors. The blue curve is the

training set, and the red curve is the testing set. The left side of the gray

vertical line shows that the model predicts well on the testing set. But on the right

side of the gray line, the model performs worse and worse on the testing set as

more and more unseen data is introduced.

Figure 7.7 An overfit model describes the training data well but predicts poorly on unseen data

For decision tree learning, overfitting can be caused by either a lack of training

data or biased data in the training set. Two approaches [10] can help avoid

overfitting in decision tree learning.

• Stop growing the tree early, before it reaches the point where all the

training data is perfectly classified.

• Grow the full tree, and then post-prune the tree with methods such as

reduced-error pruning and rule-based post-pruning.
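
As an illustration of the second approach, the following is a simplified sketch of reduced-error pruning. It assumes a hypothetical nested-dictionary tree in which each internal node stores its split ("feature", "threshold") and the majority training label ("majority"), and it assumes a separate validation set is available. Working bottom-up, a subtree is replaced by a leaf whenever the leaf makes no more validation errors than the subtree does. The feature names and data are invented for the example.

```python
def predict(node, x):
    """Follow the splits until a leaf is reached."""
    while "label" not in node:
        go_left = x[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
    return node["label"]

def errors(node, rows):
    """Count misclassified (record, label) pairs."""
    return sum(predict(node, x) != y for x, y in rows)

def prune(node, rows):
    """Return a pruned copy of node, judged on the validation rows reaching it.

    Note: a subtree that no validation row reaches is always collapsed,
    because both error counts are zero.
    """
    if "label" in node:
        return node
    f, t = node["feature"], node["threshold"]
    left_rows = [(x, y) for x, y in rows if x[f] <= t]
    right_rows = [(x, y) for x, y in rows if x[f] > t]
    pruned = dict(
        node,
        left=prune(node["left"], left_rows),
        right=prune(node["right"], right_rows),
    )
    leaf = {"label": node["majority"]}
    # Keep the simpler leaf if it is at least as accurate on validation data.
    if errors(leaf, rows) <= errors(pruned, rows):
        return leaf
    return pruned

# Hypothetical full tree; the split on "age" is assumed to be spurious.
tree = {
    "feature": "income", "threshold": 50, "majority": "no",
    "left": {"label": "no"},
    "right": {
        "feature": "age", "threshold": 30, "majority": "yes",
        "left": {"label": "no"},
        "right": {"label": "yes"},
    },
}
validation = [
    ({"income": 40, "age": 25}, "no"),
    ({"income": 45, "age": 50}, "no"),
    ({"income": 60, "age": 25}, "yes"),
    ({"income": 70, "age": 28}, "yes"),
    ({"income": 80, "age": 45}, "yes"),
]
pruned_tree = prune(tree, validation)
print(errors(tree, validation), errors(pruned_tree, validation))
```

In this toy case the split on "age" is collapsed into a single leaf, while the split on "income" is kept because removing it would increase the validation errors.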

Last, many standard diagnostic tools that apply to classifiers can help evaluate

overfitting. These tools are further discussed in Section 7.3.